TODAY’S DAILY DOSE OF DATA SCIENCE

4 Ways to Run LLMs Locally

Running LLMs locally has several upsides:

- Privacy, since your data never leaves your machine.

- The ability to test things locally before moving to the cloud, and more.

Here are four ways to run LLMs locally.

#1) Ollama

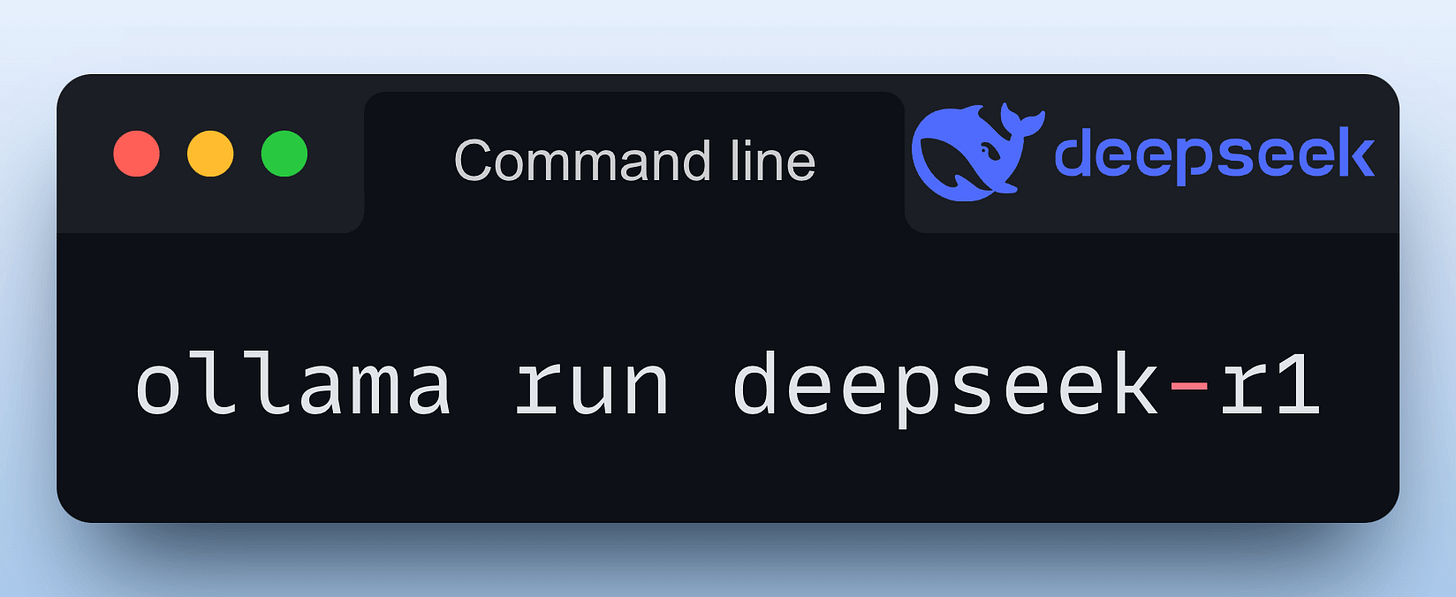

Running a model through Ollama is as simple as executing this command:

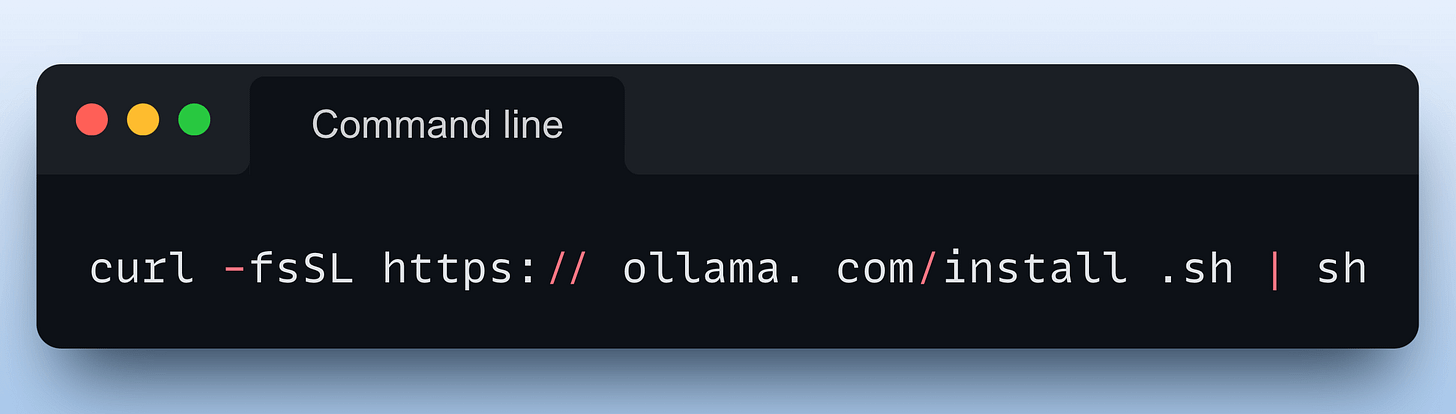

To get started, install Ollama with a single command:

Done!

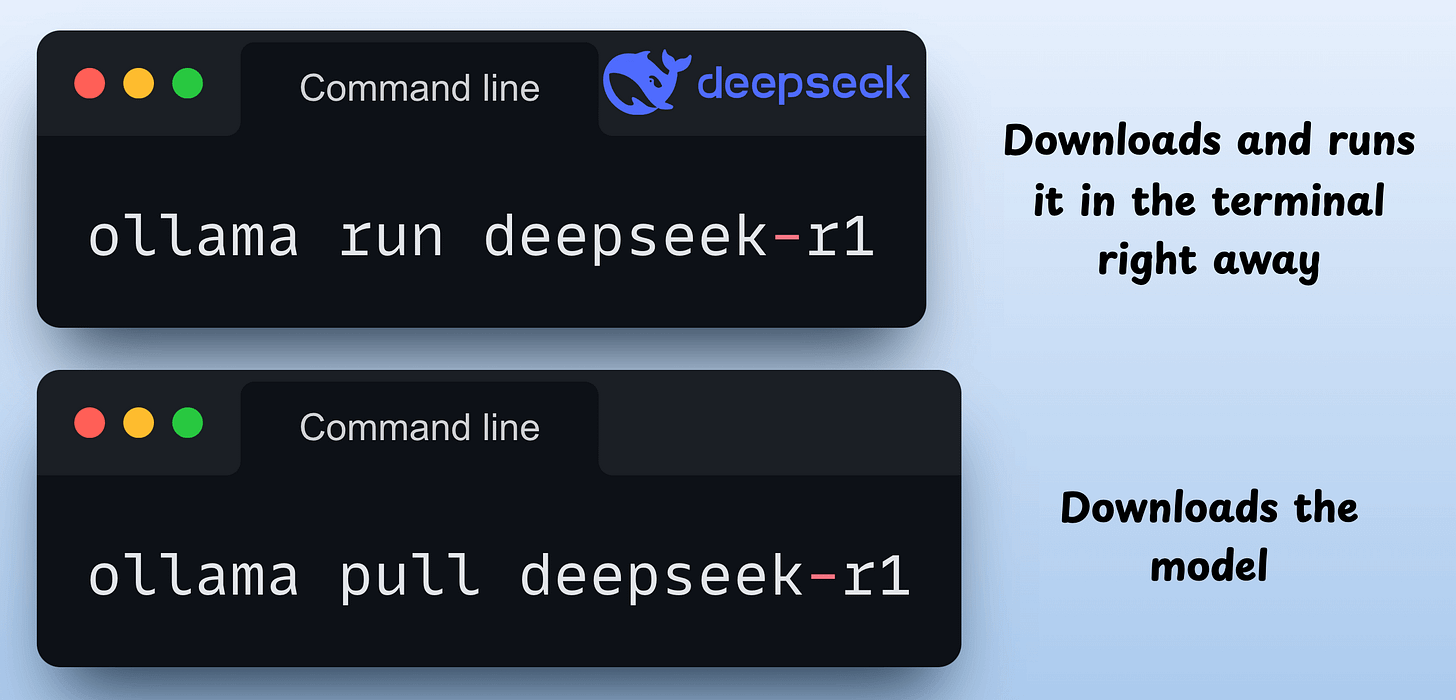

Now, you can download any of the supported models using these commands:

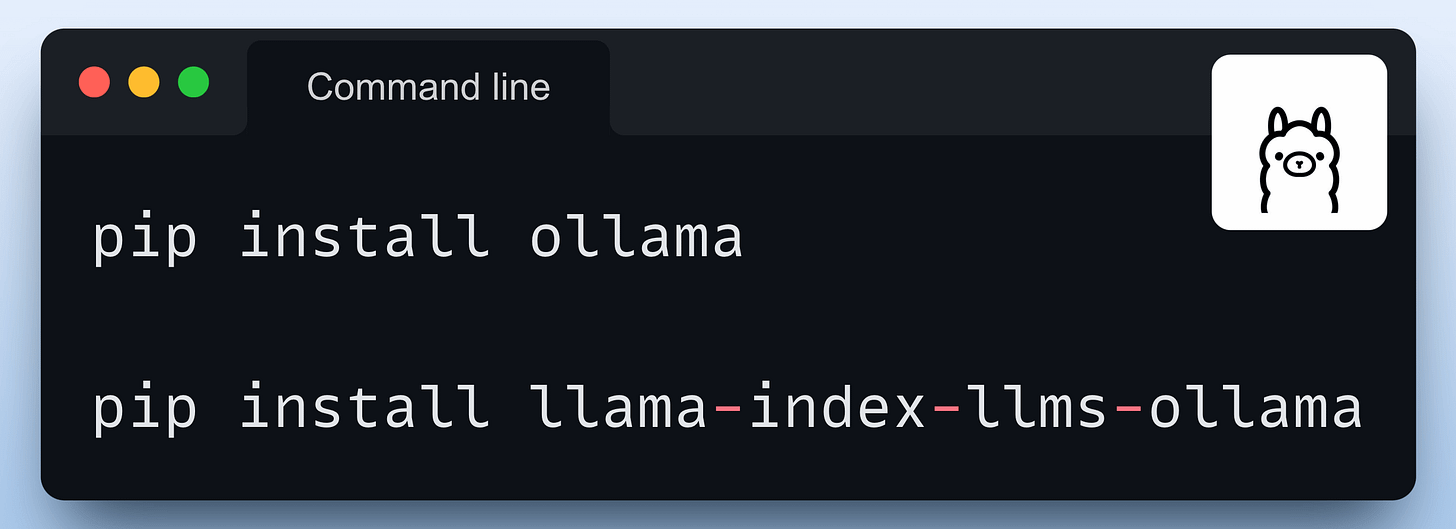

For programmatic usage, you can also install Ollama's Python package or its integrations with orchestration frameworks like LlamaIndex or CrewAI.
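As a dependency-free illustration of programmatic usage, the sketch below talks to Ollama's local REST API using only the Python standard library rather than the Python package. It assumes an Ollama server is running on its default port (11434) and that the model has already been downloaded. The helper names `build_chat_payload` and `chat` are hypothetical, chosen for this example.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Build a non-streaming chat request body for a local Ollama server."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON object instead of a chunk stream
    }


def chat(model: str, prompt: str, url: str = OLLAMA_CHAT_URL) -> str:
    """Send the prompt to the server and return the assistant's reply text."""
    body = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["message"]["content"]


# Usage (requires a running Ollama server with the model available):
#   print(chat("deepseek-r1", "Explain RAG in one sentence."))
```

Keeping the payload builder separate from the network call makes the request shape easy to inspect without a server running.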

We heavily used Ollama in our RAG crash course if you want to dive deeper.

The video below shows the `ollama run deepseek-r1` command in use:

#2) LM Studio

LM Studio can be installed as an app on your computer.

The app does not collect data or monitor your actions. Your data stays local on your machine. It’s free for personal use.

It offers a ChatGPT-like interface, allowing you to load and eject models as you chat. This video shows its usage:

Like Ollama, LM Studio supports several LLMs.

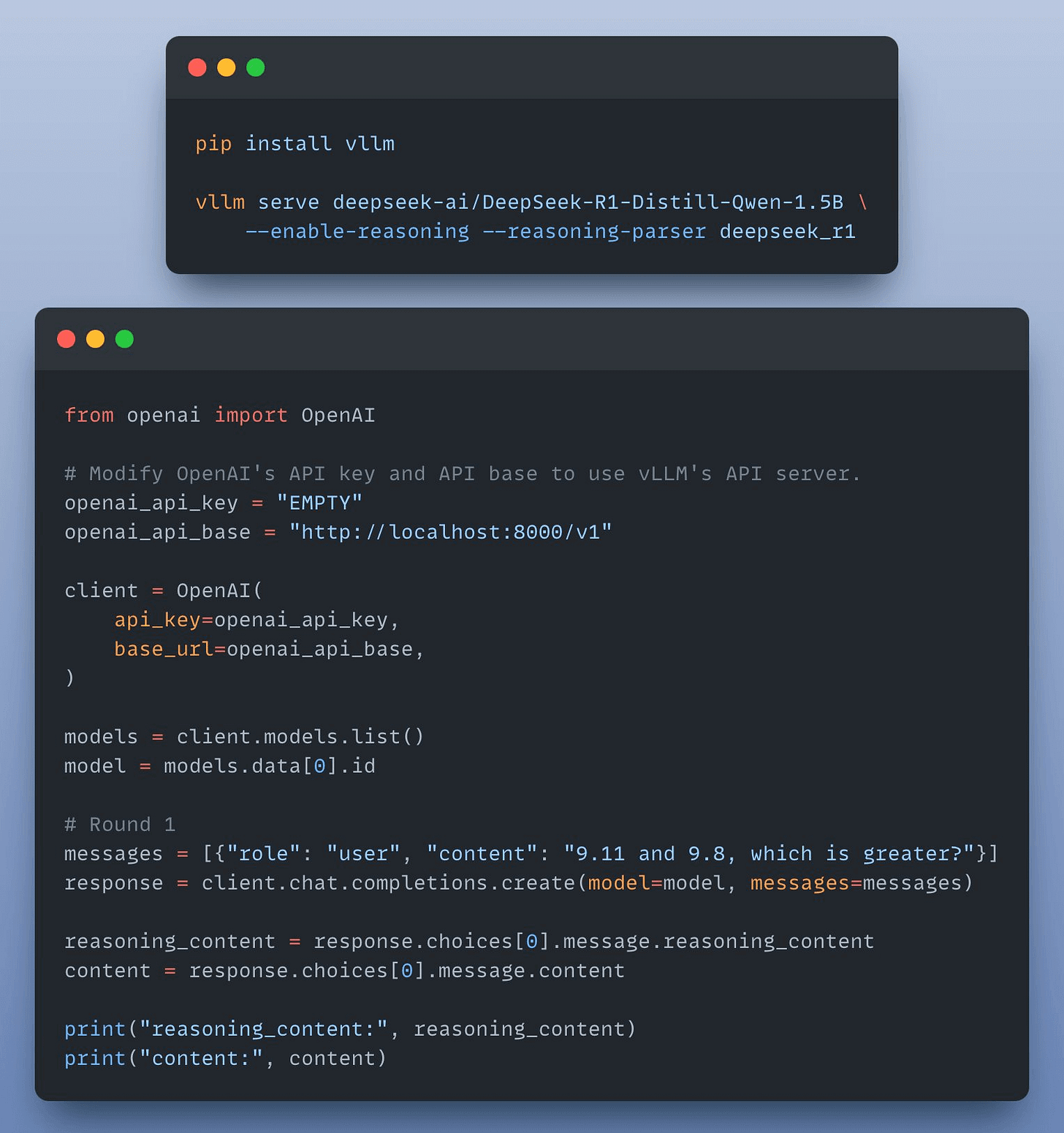

#3) vLLM

vLLM is a fast and easy-to-use library for LLM inference and serving.

With just a few lines of code, you can locally run LLMs (like DeepSeek) in an OpenAI-compatible format:

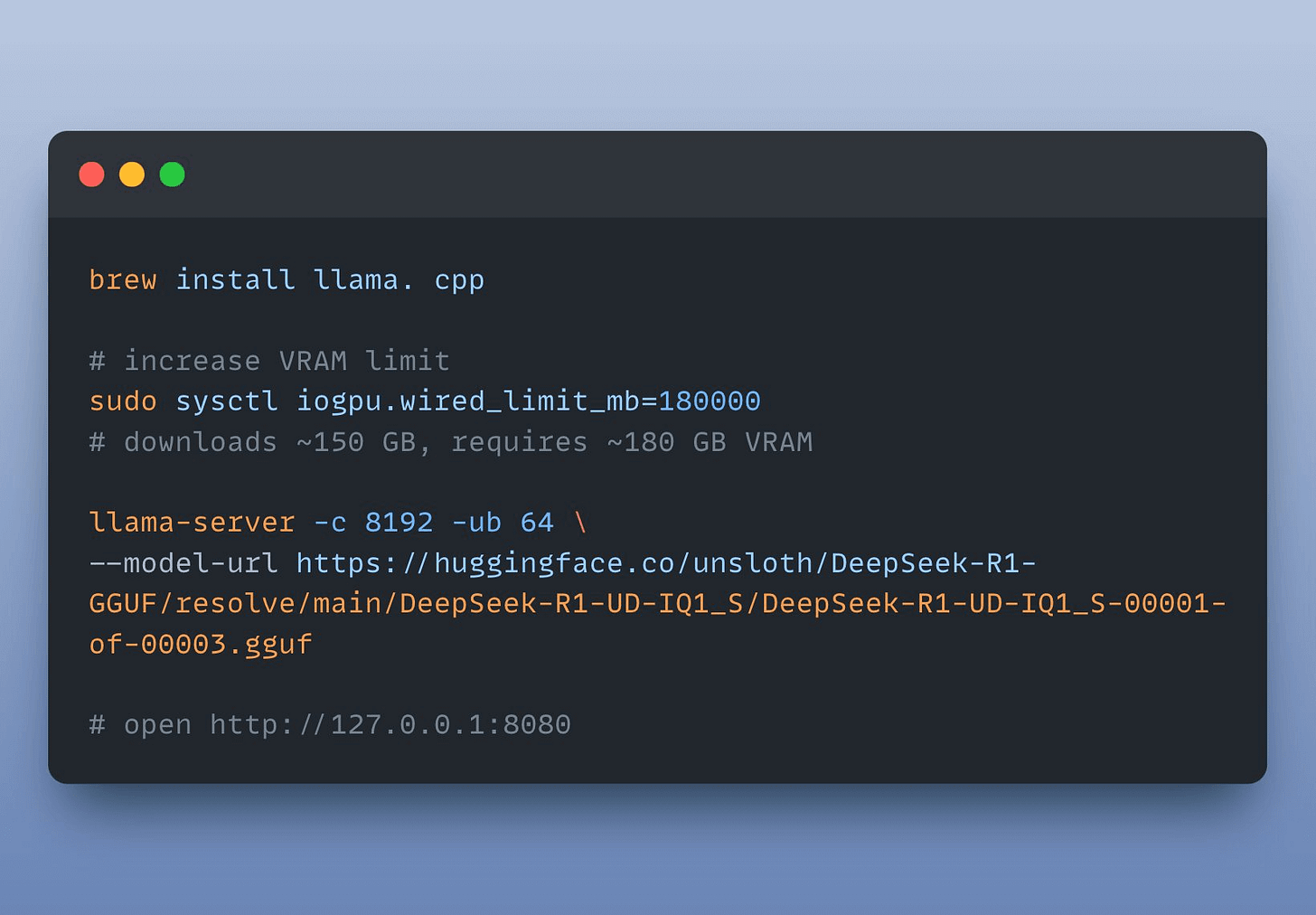
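"OpenAI-compatible" here means the local server accepts the same request shape as OpenAI's Chat Completions API, so existing client code only needs a different base URL. The sketch below shows that shape using only the standard library. It assumes a vLLM server is already running locally on port 8000 (an assumption about your setup); the helper names are hypothetical, and the model name must match whatever model your server is serving.

```python
import json
import urllib.request

# Assumption: a vLLM OpenAI-compatible server is listening locally on port 8000.
VLLM_BASE_URL = "http://localhost:8000/v1"


def build_completion_request(
    model: str, prompt: str, base_url: str = VLLM_BASE_URL
) -> urllib.request.Request:
    """Build a POST request in the OpenAI Chat Completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant's text out of a Chat Completions response."""
    return response_body["choices"][0]["message"]["content"]


def ask(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the reply text."""
    request = build_completion_request(model, prompt)
    with urllib.request.urlopen(request) as response:
        return extract_reply(json.load(response))


# Usage (requires a running server; replace the placeholder model name):
#   print(ask("<model-served-by-vllm>", "What is 2 + 2?"))
```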

#4) llama.cpp

llama.cpp enables LLM inference with minimal setup and good performance.

Here’s DeepSeek-R1 running on a Mac Studio:

And these were four ways to run LLMs locally on your computer.

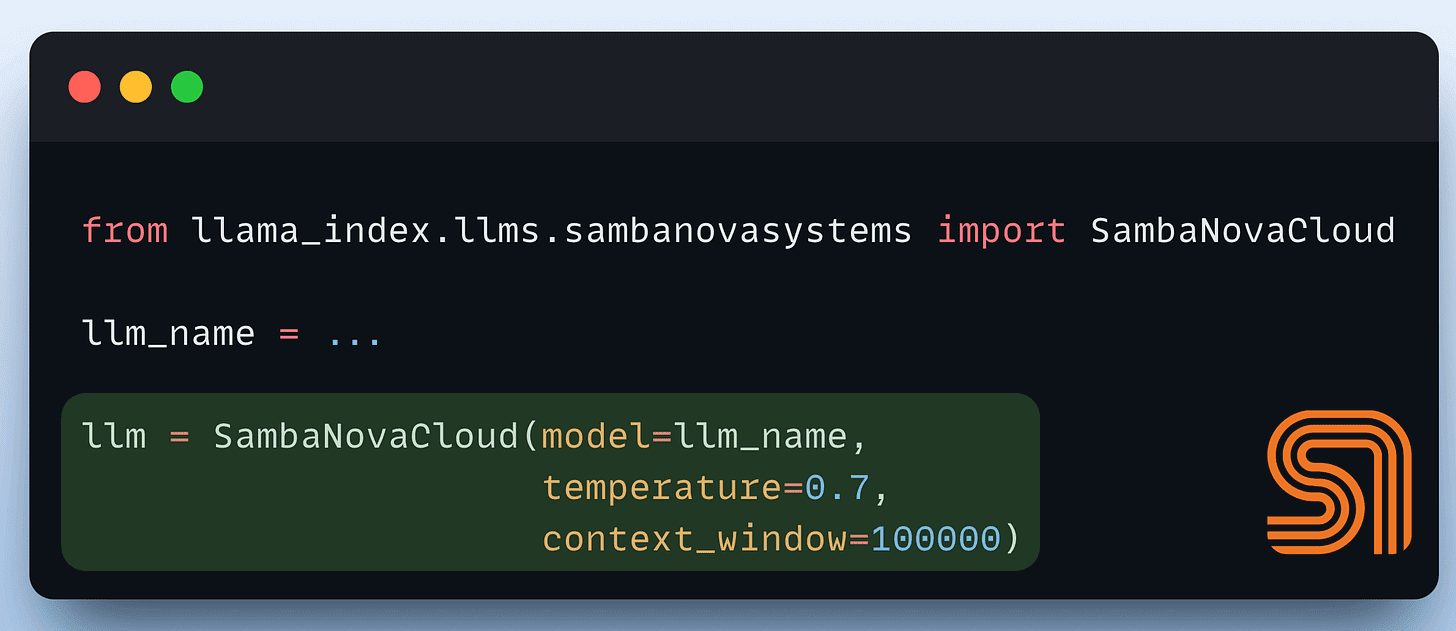

If you don’t want to get into the hassle of local setups, SambaNova’s fastest inference can be integrated into your existing LLM apps in just three lines of code:

Also, if you want to dive into building LLM apps, our full RAG crash course discusses RAG from basics to beyond:

- RAG fundamentals

- RAG evaluation

- RAG optimization

- Multimodal RAG

- Graph RAG

- Multivector retrieval using ColBERT

- RAG over complex real-world docs ft. ColPali

👉 Over to you: Which method do you find the most useful?

Thanks for reading!

IN CASE YOU MISSED IT

LoRA/QLoRA—Explained From a Business Lens

Consider the size difference between BERT-large and GPT-3:

I have fine-tuned BERT-large several times on a single GPU using traditional fine-tuning:

But this is impossible with GPT-3, which has 175B parameters. At 2 bytes per parameter in float16 precision, that's 350 GB of memory just to store the model weights.

This means that if OpenAI used traditional fine-tuning within its fine-tuning API, it would have to maintain one model copy per user:

- If 10 users fine-tuned GPT-3 → they need 3500 GB to store model weights.

- If 1000 users fine-tuned GPT-3 → they need 350k GB to store model weights.

- If 100k users fine-tuned GPT-3 → they need 35 million GB to store model weights.
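The storage figures above follow from simple arithmetic, reproduced in the short sketch below. It assumes 2 bytes per parameter (float16) and decimal gigabytes (1 GB = 10^9 bytes); the function names are hypothetical.

```python
GPT3_PARAMS = 175_000_000_000   # 175B parameters, as stated above
BYTES_PER_PARAM_FP16 = 2        # float16 stores each parameter in 2 bytes


def weights_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Size of one copy of the model weights in decimal gigabytes."""
    return num_params * bytes_per_param / 10**9


def total_storage_gb(num_users: int) -> float:
    """Storage needed if every user gets a full fine-tuned copy of the model."""
    return num_users * weights_gb(GPT3_PARAMS)


for users in (1, 10, 1_000, 100_000):
    print(f"{users:>7,} users -> {total_storage_gb(users):,.0f} GB")
```

The cost grows linearly with the number of users, which is why one full model copy per user does not scale.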

And the problems don't end there:

- OpenAI bills solely based on usage. What if someone fine-tunes the model for fun or learning purposes but never uses it?

- Since a request can come anytime, should they always keep the fine-tuned model loaded in memory? Wouldn't that waste resources since several models may never be used?

LoRA (+ QLoRA and other variants) neatly solved this critical business problem.
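As a rough illustration of why this helps: LoRA keeps the base weights frozen and trains a pair of small low-rank matrices for each adapted weight matrix, so each user only needs a small adapter stored next to one shared base model. The sketch below compares parameter counts for a single weight matrix. The dimensions and rank are hypothetical values chosen for illustration, not GPT-3's actual configuration.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning a full d_out x d_in weight matrix."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in a LoRA adapter: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, for illustration only.
d_out, d_in, rank = 4096, 4096, 8

full = full_params(d_out, d_in)
adapter = lora_params(d_out, d_in, rank)

print(f"Full matrix:  {full:,} parameters")
print(f"LoRA adapter: {adapter:,} parameters")
print(f"Reduction:    {full // adapter}x fewer parameters per user")
```

Under these assumed dimensions, the per-user adapter for this matrix is a small fraction of the full matrix, so per-user storage no longer scales with the size of the base model.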

THAT'S A WRAP

No-Fluff Industry ML resources to Succeed in DS/ML roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Drive revenue?

- Can you scale ML models?

- Predict trends before they happen?

We have covered several other topics (with implementations) in the past that align with these goals.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to generate prediction intervals or sets with strong statistical guarantees, and so increase trust in your models, using Conformal Predictions.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.
