Corrective RAG Agentic Workflow

...explained visually and implemented.

...explained visually and implemented.

TODAY'S ISSUE

Corrective RAG is a common technique to improve RAG systems. It introduces a self-assessment step of the retrieved documents, which helps in retaining the relevance of generated responses.

Here’s an overview of how it works:

The video below shows how it works:

Let’s implement this today!

Here’s our tech stack for this demo:

The code is available in this Studio: Corrective RAG with DeepSeek-R1. You can run it without any installations by reproducing our environment below:

Let’s implement Corrective RAG now!

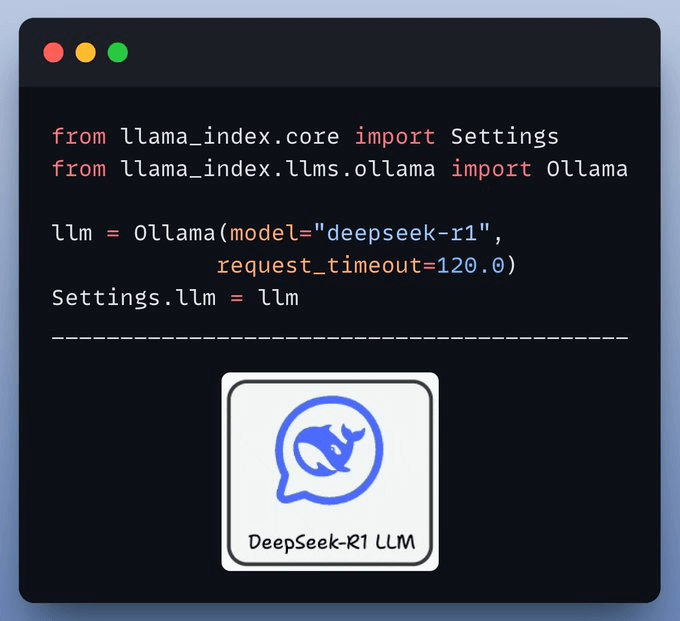

We will use DeepSeek-R1 as LLM, locally served using Ollama.

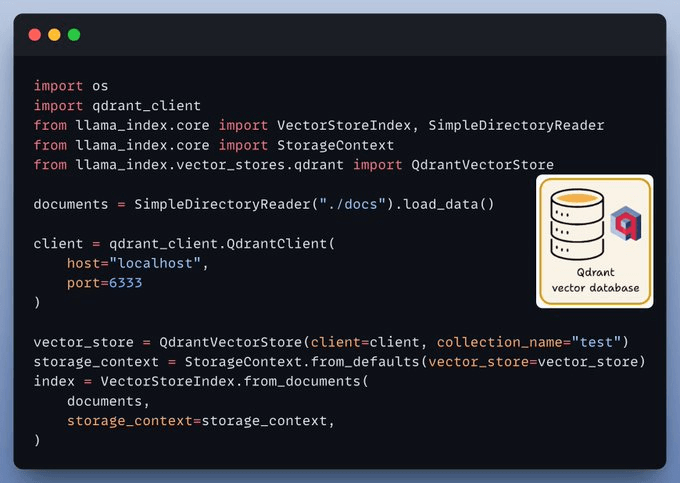

Our primary source of knowledge is the user documents that we index and store in a Qdrant vectorDB collection.

To equip our system with web search capabilities, we will use Linkup's state-of-the-art deep web search features. It also offers seamless integration with LlamaIndex.

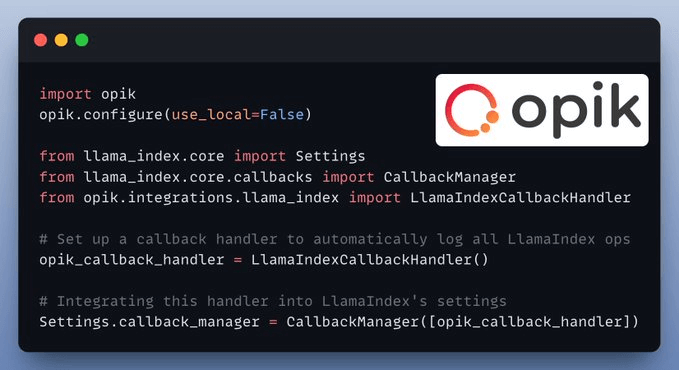

LlamaIndex also offers a seamless integration with CometML’s Opik. You can use this to trace every LLM call, monitor, and evaluate your LLM application.

Now that we have everything set up, it's time to create the event-driven agentic workflow that orchestrates our application.

We pass in the LLM, vector index, and web search tool to initialise the workflow.

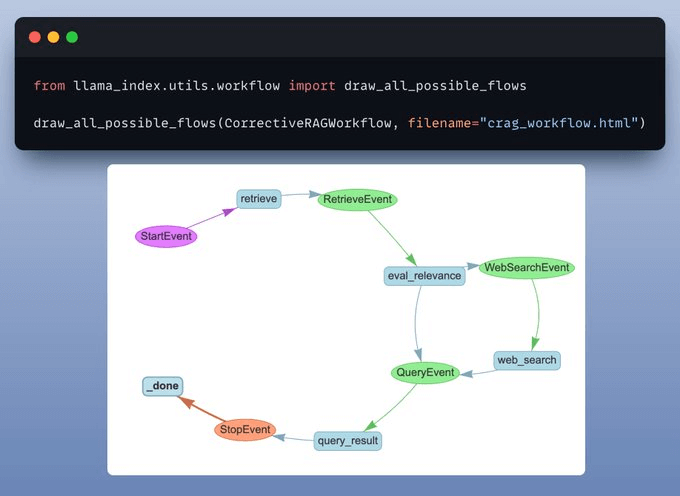

You can also plot and visualize the workflow, which is helpful for documentation and understanding how the app works.

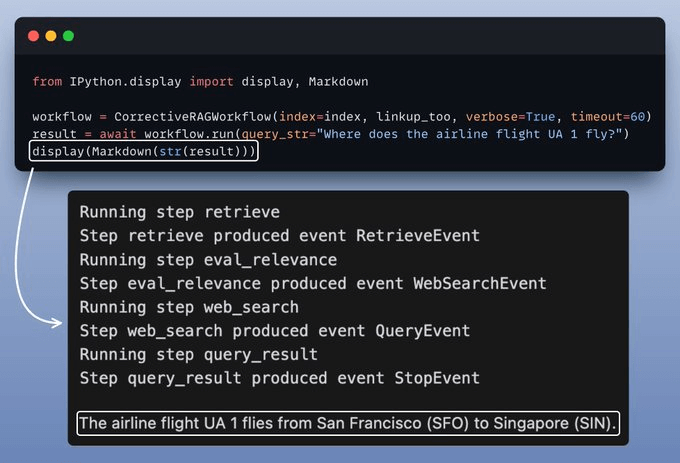

Finally, when we have everything ready, we kick off our workflow.

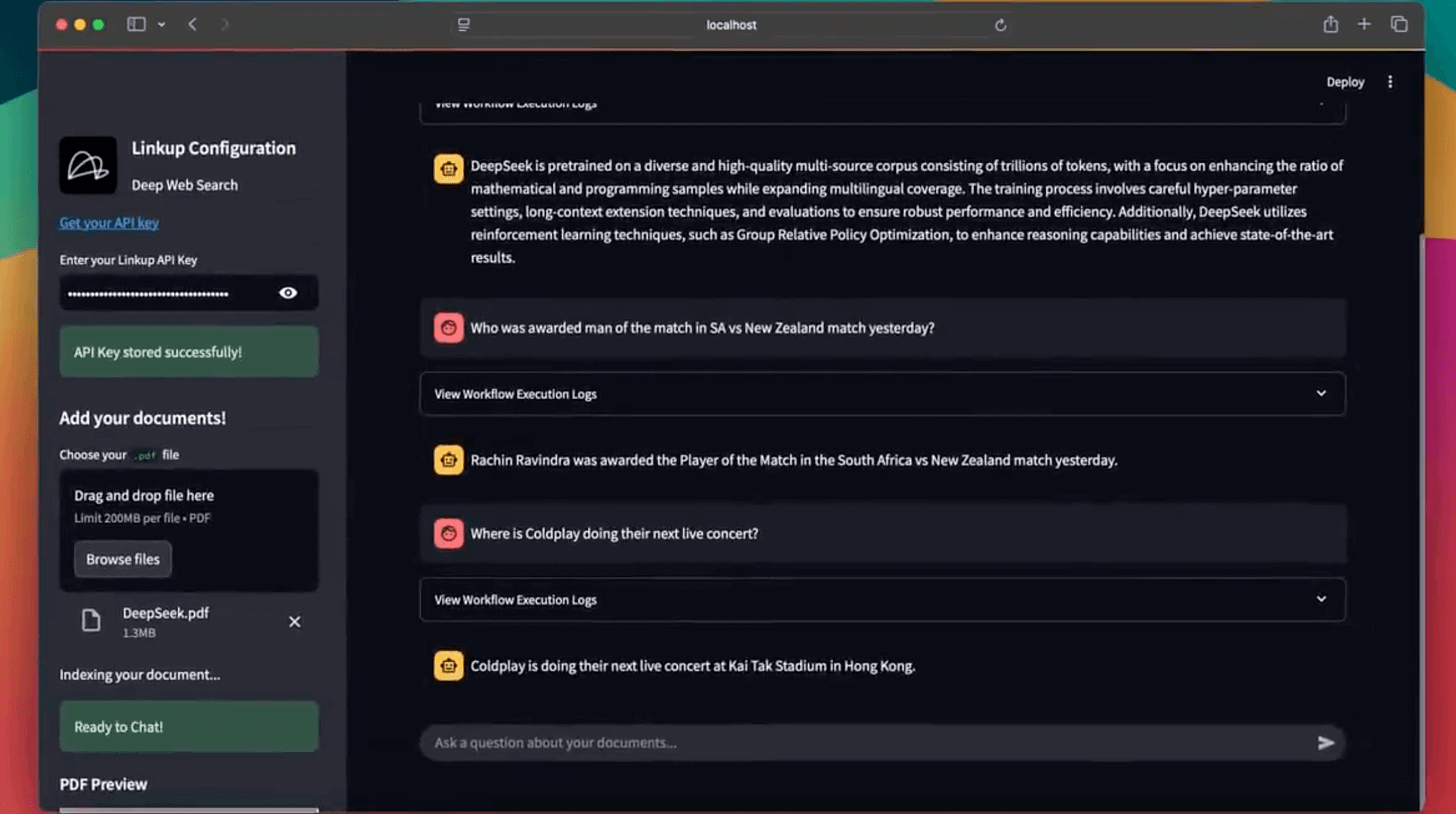

Here’s something interesting. While the vector database holds information about some research papers, it is still able to answer completely unrelated questions—thanks to the web search (which provides additional context when needed):

The code is available in this Studio: Corrective RAG with DeepSeek-R1. You can run it without any installations by reproducing our environment below:

If you want to dive into building LLM apps, our full RAG crash course discusses RAG from basics to beyond:

👉 Over to you: What other RAG demos would you like to see?

Thanks for reading!