TODAY'S ISSUE

TODAY’S DAILY DOSE OF DATA SCIENCE

[Hands-on] Building a Llama-OCR app

Last week, we shared a demo of a Llama-OCR app that someone else built.

This week, we created our own OCR app using the Llama-3.2-vision model, and today, we are sharing how we built it.

You can upload an image, and the app converts it into structured Markdown using the Llama-3.2 multimodal model, as shown in the video below.

Here’s what we'll use:

- Ollama for serving Llama 3.2 vision locally

- Streamlit for the UI

The entire code is available here: Llama OCR Demo GitHub.

Now, let’s build the app.

For simplicity, we are not going to show the Streamlit part. We will just show how the LLM is utilized in this demo.

Also, speaking of LLMs, we recently started a beginner-friendly crash course on RAG with implementations, and the first four parts are already published.

Step 1) Download Ollama

Ollama provides a platform to run LLMs locally, giving you control over your data and model usage.

Go to Ollama.com, select your operating system, and follow the instructions.

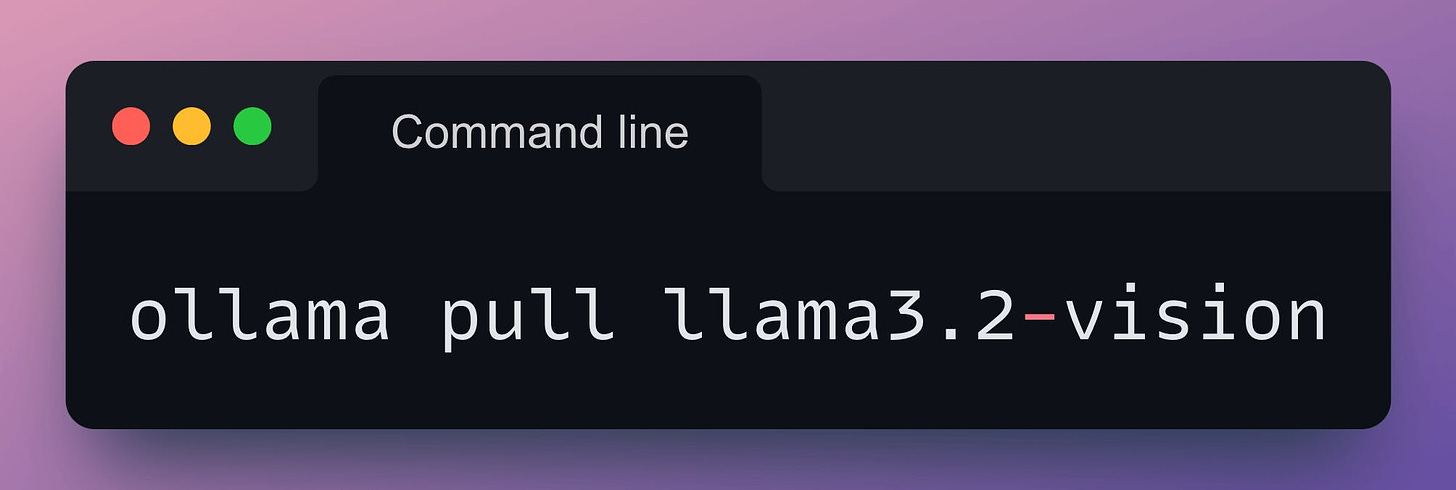

Step 2) Download Llama3.2-vision

Llama3.2-vision is a multimodal LLM for visual recognition, image reasoning, captioning, and answering general questions about an image.

Download it with the Ollama CLI, for example by running `ollama pull llama3.2-vision` in a terminal:

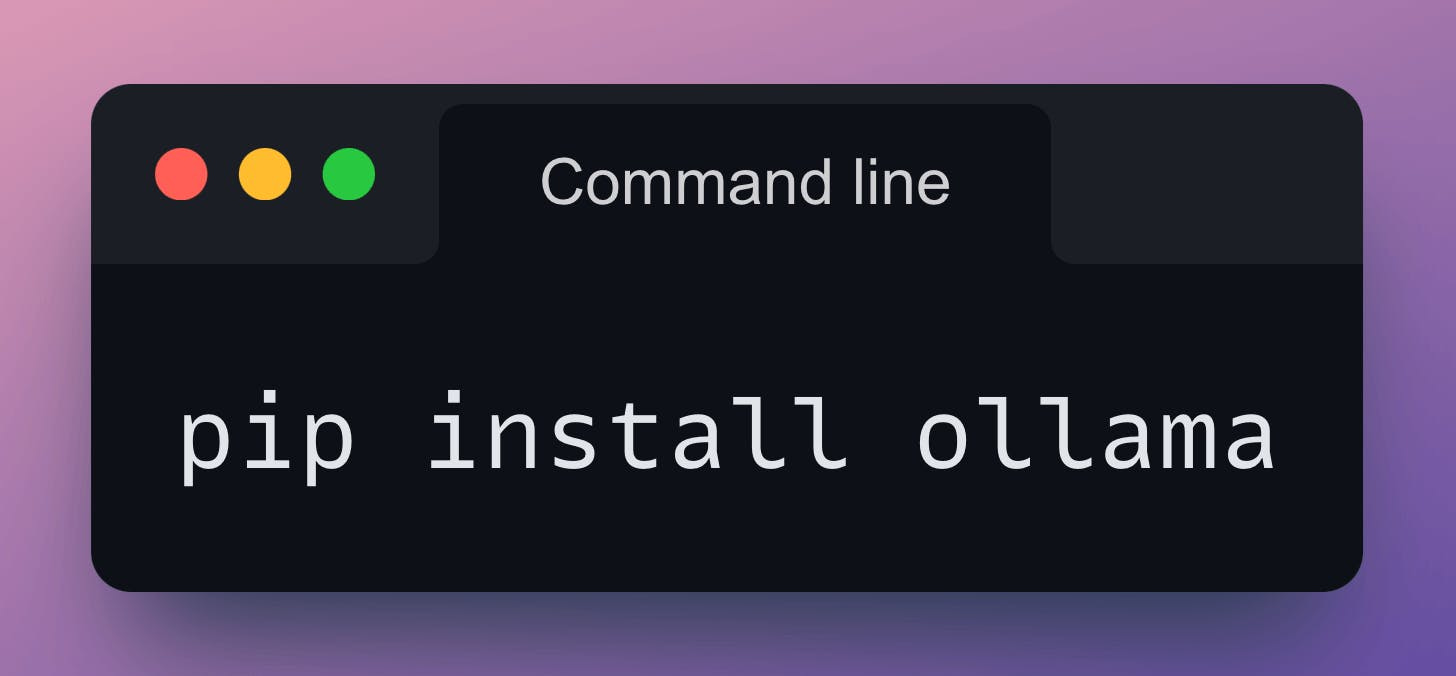

Step 3) Install the Ollama Python package

Next, install the Ollama Python package, which is published on PyPI as `ollama` (for example, with `pip install ollama`).

Step 4) Prompt Llama3.2-vision

Almost done!

Finally, prompting Llama3.2-vision with Ollama takes a single call to the package's `chat` function, with the prompt and the image passed together in one user message.
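A minimal sketch of such a call is given here, assuming the Ollama server is running locally and the model from Step 2 has been downloaded. The prompt wording and the helper names `build_messages` and `image_to_markdown` are illustrative assumptions, not necessarily what the demo repository uses.

```python
from pathlib import Path

MODEL = "llama3.2-vision"  # model tag downloaded in Step 2

# Hypothetical prompt wording; the original app's prompt may differ.
OCR_PROMPT = (
    "Extract all text from this image and return it as structured "
    "Markdown. Preserve headings, lists, and tables where possible."
)


def build_messages(image_bytes: bytes, prompt: str = OCR_PROMPT) -> list[dict]:
    """Build a single user message carrying the prompt and the raw image bytes."""
    return [{"role": "user", "content": prompt, "images": [image_bytes]}]


def image_to_markdown(image_path: str, model: str = MODEL) -> str:
    """Send the image to the locally served model and return its reply text."""
    import ollama  # imported here so build_messages works without the package

    image_bytes = Path(image_path).read_bytes()
    response = ollama.chat(model=model, messages=build_messages(image_bytes))
    return response["message"]["content"]
```

Calling `image_to_markdown("receipt.png")`, where the file name is a placeholder, would return the model's Markdown output as a string. Passing raw bytes rather than a path is a deliberate choice here, since it matches what a file-upload widget typically hands back.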

Done!

Of course, the full Streamlit app will require more code to build, but everything is still just 50 lines of code!

Also, as mentioned above, building the full Streamlit app is not the focus of today's issue.

Instead, the goal is to demonstrate how simple it is to use frameworks like Ollama to build LLM apps.

In the app we built, you can upload an image, and the app converts it into structured Markdown using the Llama-3.2 multimodal model, as shown in this demo:

The entire code (along with the code for Streamlit) is available here: Llama OCR Demo GitHub.

👉 Over to you: What other demos do you want us to cover next?

IN CASE YOU MISSED IT

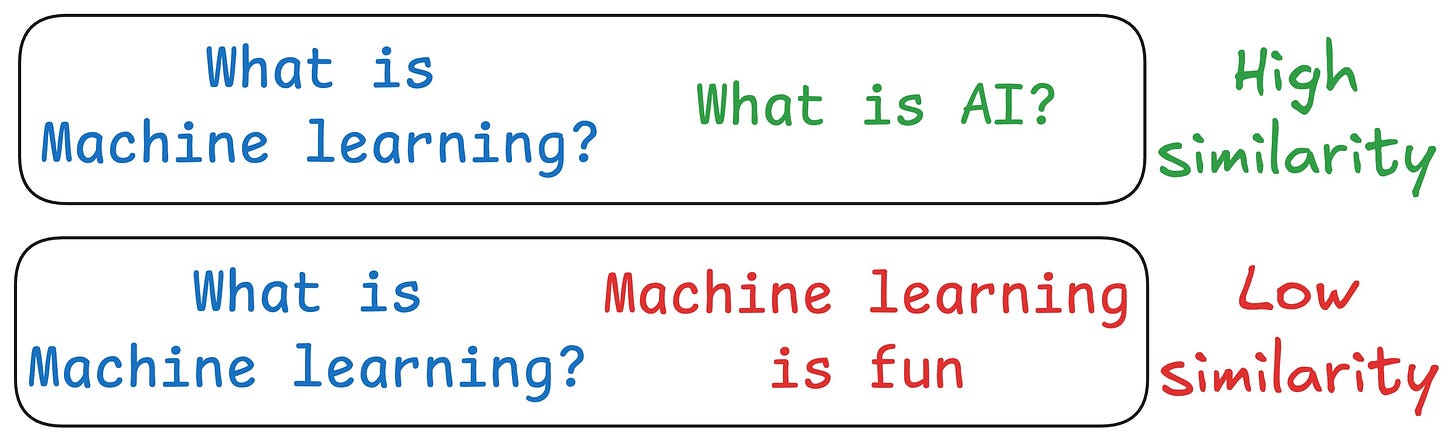

Traditional RAG vs. HyDE

One critical problem with the traditional RAG system is that questions are not semantically similar to their answers.

As a result, the retrieval step often returns irrelevant contexts, because their cosine similarity to the question is higher than that of the documents actually containing the answer.

HyDE solves this.
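The idea can be sketched as follows: instead of embedding the question, HyDE asks an LLM to draft a hypothetical answer and retrieves with the embedding of that draft. The code is only a toy illustration under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model, and `fake_llm` is a hard-coded placeholder for an actual LLM call.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_text: str, docs: list[str]) -> int:
    """Return the index of the document most similar to query_text."""
    q = embed(query_text)
    return max(range(len(docs)), key=lambda i: cosine(q, embed(docs[i])))


def fake_llm(question: str) -> str:
    """Placeholder for an LLM that drafts a hypothetical answer (hard-coded)."""
    return "Paris is the capital of France and its largest city."


docs = [
    "People often ask what the capital of France is in quizzes.",  # question-like, no answer
    "Paris is the largest city and the seat of government.",       # holds the answer
]
question = "What is the capital of France?"

traditional_hit = retrieve(question, docs)     # embed the question itself
hyde_hit = retrieve(fake_llm(question), docs)  # embed the hypothetical answer instead
print(traditional_hit, hyde_hit)
```

In this toy setup, the raw question lands on the question-like document, while the hypothetical answer lands on the document that actually holds the answer. The draft does not need to be factually right; it only needs to look like an answer so that it sits close to real answers in embedding space.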

The following visual depicts how HyDE differs from traditional RAG.

We covered this in detail in a newsletter issue published last week →

TRULY REPRODUCIBLE ML

Data Version Control

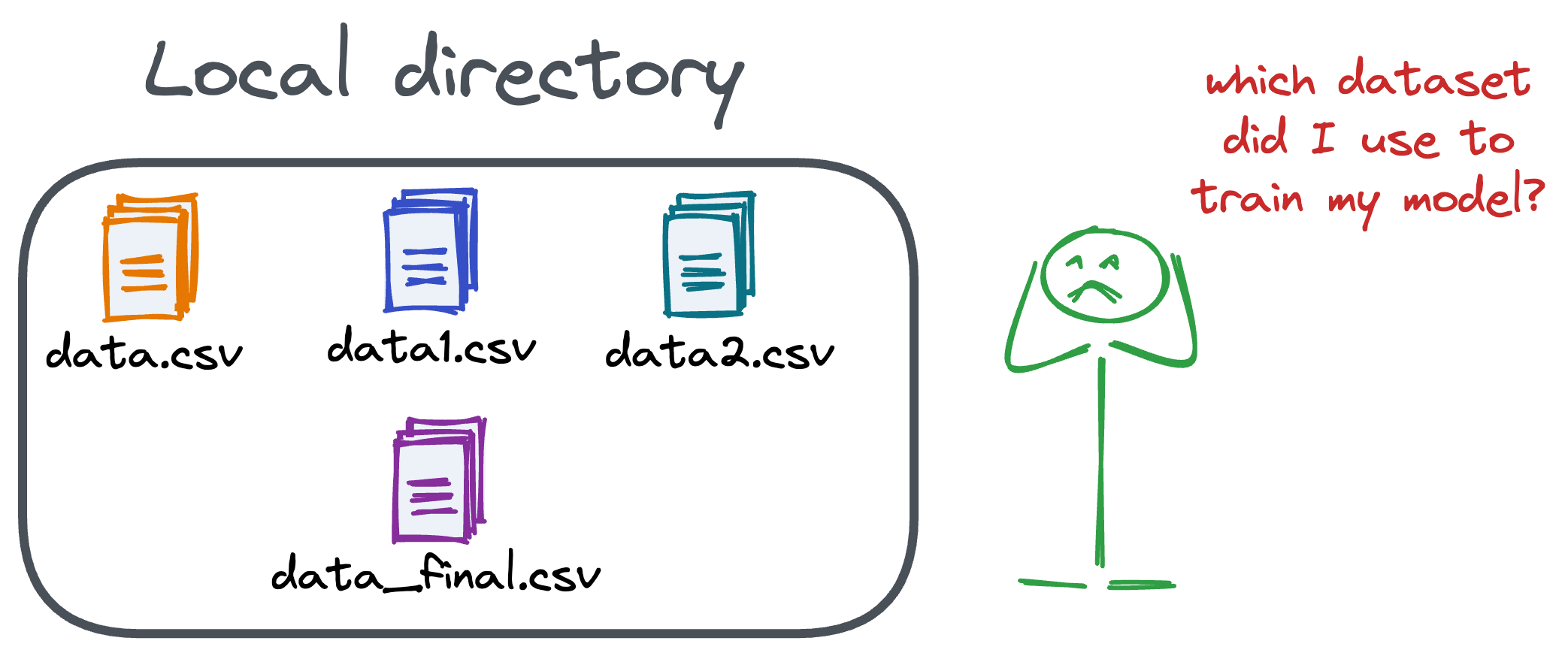

Versioning GBs of datasets is practically impossible with GitHub because it imposes an upper limit on the file size we can push to its remote repositories.

That is why Git is best suited for versioning a codebase, which is primarily composed of lightweight files.

However, ML projects are not solely driven by code.

They also involve large data files, and these datasets can vary widely across experiments.

To ensure proper reproducibility and experiment traceability, it is also necessary to version datasets.

Data version control (DVC) solves this problem.

The core idea is to pair Git with a second version control system that is dedicated to large files.
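To make that idea concrete, here is a minimal sketch of the general pattern: the large file goes into a content-addressed cache, and only a tiny pointer file is left for Git to track. This is a conceptual illustration and not DVC's actual implementation or file format; the function names and the `.pointer.json` suffix are made up for this example.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path


def track_large_file(data_path: Path, cache_dir: Path) -> Path:
    """Copy the file into a content-addressed cache and write a small pointer file.

    Only the pointer file would be committed to Git; the cache would be
    synced to separate storage.
    """
    digest = hashlib.sha256(data_path.read_bytes()).hexdigest()
    cache_dir.mkdir(parents=True, exist_ok=True)
    shutil.copy2(data_path, cache_dir / digest)
    pointer = data_path.with_name(data_path.name + ".pointer.json")
    pointer.write_text(json.dumps({"path": data_path.name, "sha256": digest}))
    return pointer


def restore_large_file(pointer: Path, cache_dir: Path) -> Path:
    """Recreate the data file that a pointer file refers to."""
    meta = json.loads(pointer.read_text())
    target = pointer.with_name(meta["path"])
    shutil.copy2(cache_dir / meta["sha256"], target)
    return target


with tempfile.TemporaryDirectory() as tmp:
    workdir = Path(tmp)
    data = workdir / "train.csv"
    data.write_text("x,y\n1,2\n")

    pointer = track_large_file(data, workdir / "cache")
    pointer_size = pointer.stat().st_size

    data.unlink()  # simulate a fresh checkout that has the pointer but not the data
    restored = restore_large_file(pointer, workdir / "cache")
    restored_text = restored.read_text()
```

Because the pointer records a hash of the data, each Git commit pins an exact dataset version while the repository itself stays lightweight.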

Here's everything you need to know (with implementation) about building 100% reproducible ML projects →

THAT'S A WRAP

No-Fluff Industry ML resources to Succeed in DS/ML roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Can you drive revenue?

- Can you scale ML models?

- Can you predict trends before they happen?

We have covered several other topics (with implementations) in the past that align with these goals.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust using Conformal Predictions.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate the key skills that businesses care about the most.

SPONSOR US

Advertise to 600k+ data professionals

Our newsletter puts your products and services directly in front of an audience that matters — thousands of leaders, senior data scientists, machine learning engineers, data analysts, etc., around the world.