5 Levels of Agentic AI Systems

...explained visually.

TODAY'S ISSUE

Agentic AI systems don't just generate text; they can make decisions, call functions, and even run autonomous workflows.

The visual explains 5 levels of AI agency, from simple responders to fully autonomous agents.

Note: If you want to learn how to build agentic systems, we have published 6 parts so far in our Agents crash course (with implementation).

Let’s dive in to learn more about the 5 levels of Agentic AI systems.

A manager agent coordinates multiple sub-agents and decides the next steps iteratively.
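To make the manager pattern concrete, here is a minimal sketch of that iterative loop. It assumes each sub-agent is a plain function over a shared state dict, and it replaces the manager LLM with a hypothetical rule-based `manager_decide` function. The names `research_agent`, `writer_agent`, `reviewer_agent`, and `run_manager` are illustrative, not part of any library. In a real system, each of these functions would wrap its own LLM call.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, str]

# Hypothetical sub-agents: each reads the shared state and adds its own output.
def research_agent(state: State) -> State:
    state["notes"] = f"notes on {state['task']}"
    return state

def writer_agent(state: State) -> State:
    state["draft"] = f"draft based on {state['notes']}"
    return state

def reviewer_agent(state: State) -> State:
    state["approved"] = "yes"
    return state

SUB_AGENTS: Dict[str, Callable[[State], State]] = {
    "research": research_agent,
    "write": writer_agent,
    "review": reviewer_agent,
}

def manager_decide(state: State) -> Optional[str]:
    """Stand-in for the manager LLM: picks the next sub-agent from the
    current state, or returns None once the task is complete."""
    if "notes" not in state:
        return "research"
    if "draft" not in state:
        return "write"
    if state.get("approved") != "yes":
        return "review"
    return None

def run_manager(task: str, max_steps: int = 10) -> Tuple[State, List[str]]:
    state: State = {"task": task}
    trace: List[str] = []
    # The manager re-evaluates the state after every sub-agent call.
    for _ in range(max_steps):
        next_agent = manager_decide(state)
        if next_agent is None:
            break
        trace.append(next_agent)
        state = SUB_AGENTS[next_agent](state)
    return state, trace

final_state, trace = run_manager("summarize agent levels")
print(trace)  # ['research', 'write', 'review']
```

The `max_steps` cap matters here. Because the manager chooses the next step at run time instead of following a fixed pipeline, a step budget keeps a confused manager from looping forever.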

The most advanced pattern is one in which the LLM writes and executes new code on its own, effectively acting as an independent AI developer.
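The generate-and-execute cycle can be sketched as follows. This is a minimal illustration with two assumptions. First, a hypothetical `fake_llm_generate_code` function stands in for the model. It deliberately returns buggy code on the first attempt and fixed code once it receives the error message. Second, the generated code runs through Python's built-in `exec` with a reduced set of builtins. This is not a real sandbox, and a production system would isolate execution much more strictly.

```python
from typing import Dict, List, Optional, Tuple

def fake_llm_generate_code(task: str, feedback: Optional[str]) -> str:
    """Hypothetical LLM stand-in: the first draft has a syntax error;
    after receiving error feedback, it returns a corrected version."""
    if feedback is None:
        return "result = sum(range(1, n + 1)"  # missing closing parenthesis
    return "result = sum(range(1, n + 1))"

def run_code_agent(
    task: str, inputs: Dict[str, int], max_attempts: int = 3
) -> Tuple[Optional[int], List[str]]:
    feedback: Optional[str] = None
    attempts: List[str] = []
    for _ in range(max_attempts):
        code = fake_llm_generate_code(task, feedback)
        attempts.append(code)
        namespace: Dict[str, int] = dict(inputs)  # fresh namespace per attempt
        try:
            # WARNING: exec runs arbitrary code. Limiting builtins is NOT a
            # security boundary; real agents should use an isolated sandbox.
            exec(code, {"__builtins__": {"sum": sum, "range": range}}, namespace)
            return namespace["result"], attempts
        except Exception as err:
            # Pass the error back to the "LLM" so it can repair its code.
            feedback = f"{type(err).__name__}: {err}"
    return None, attempts

result, attempts = run_code_agent("sum the integers from 1 to n", {"n": 10})
print(result, len(attempts))  # 55 2
```

The feedback loop is the key design choice: the agent does not just emit code once. It also observes the execution result and revises the code, which is what lets this pattern act autonomously.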

To recall:

👉 Over to you: Which one do you use the most?

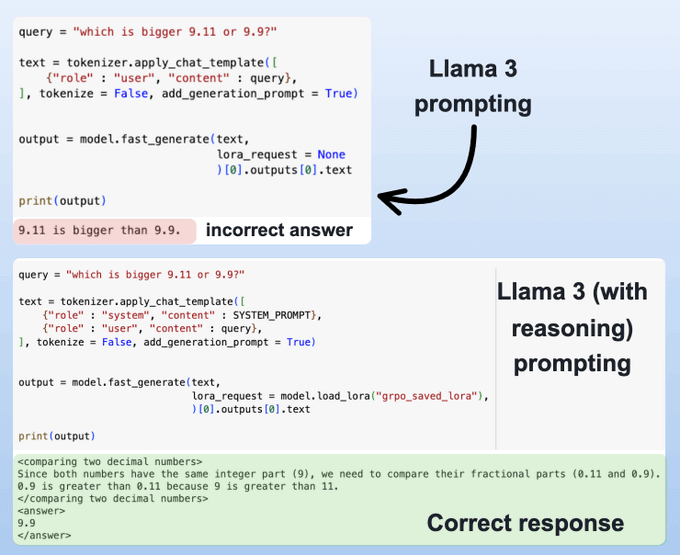

If you have used DeepSeek-R1 (or any other reasoning model), you have probably noticed that these models autonomously allocate thinking time before producing a response.

Last week, we shared how to embed reasoning capabilities into any LLM.

We trained our own reasoning model, similar to DeepSeek-R1 (with code).

To do this, we used:

Find the implementation and detailed walkthrough newsletter here →