TODAY'S ISSUE

TODAY’S DAILY DOSE OF DATA SCIENCE

KernelPCA vs. PCA

During dimensionality reduction, principal component analysis (PCA) tries to find a low-dimensional linear subspace that the given data conforms to.

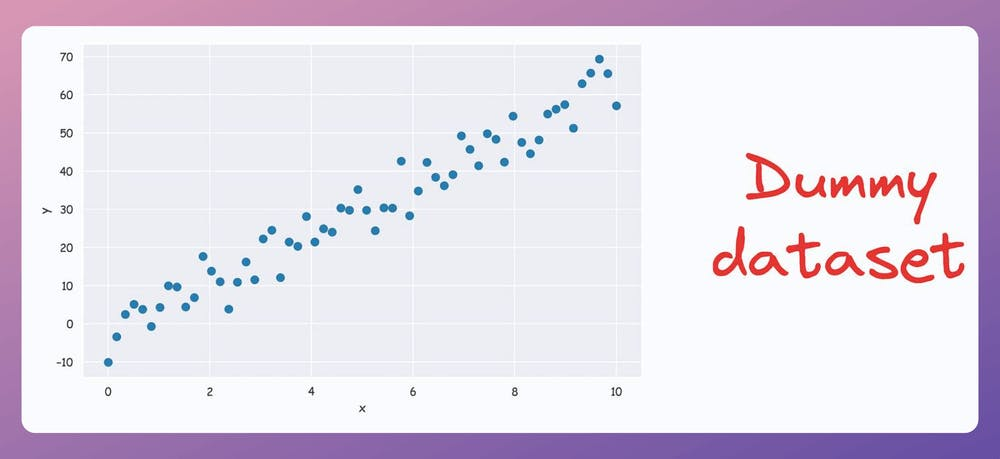

For instance, consider the following dummy dataset:

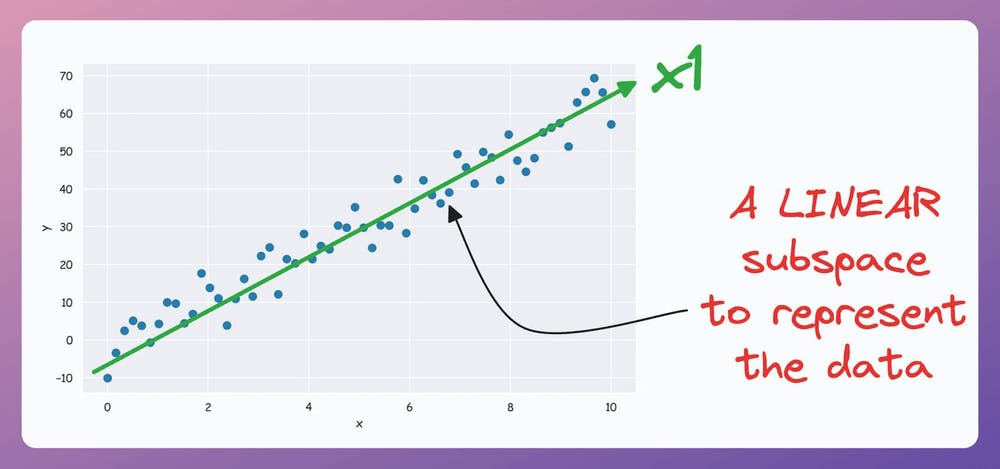

It’s pretty clear from the above visual that there is a linear subspace along which the data could be represented while retaining maximum data variance. This is shown below:

But what if our data conforms to a low-dimensional yet non-linear subspace?

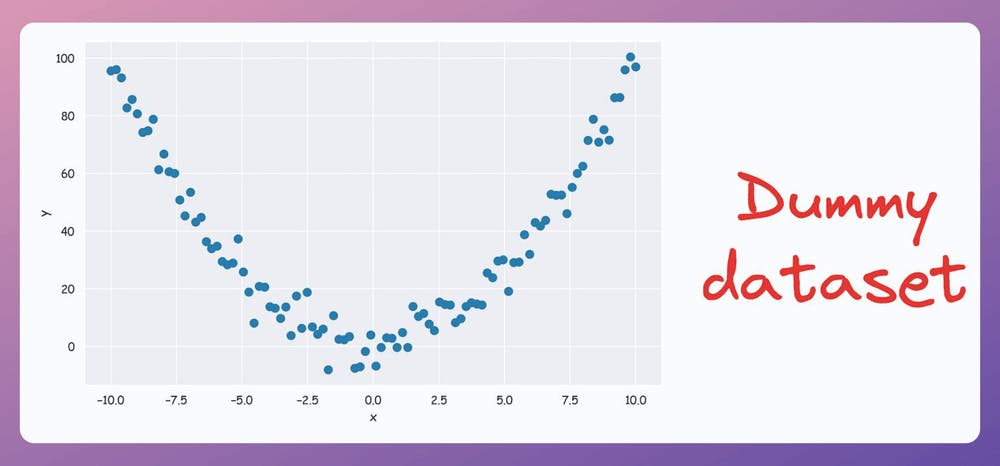

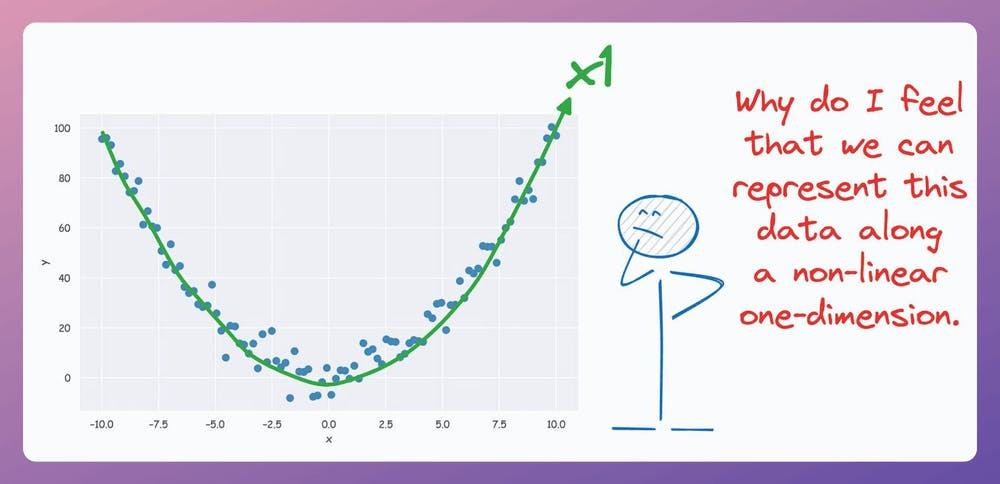

For instance, consider the following dataset:

Do you see a low-dimensional non-linear subspace along which our data could be represented?

No?

Don’t worry. Let me show you!

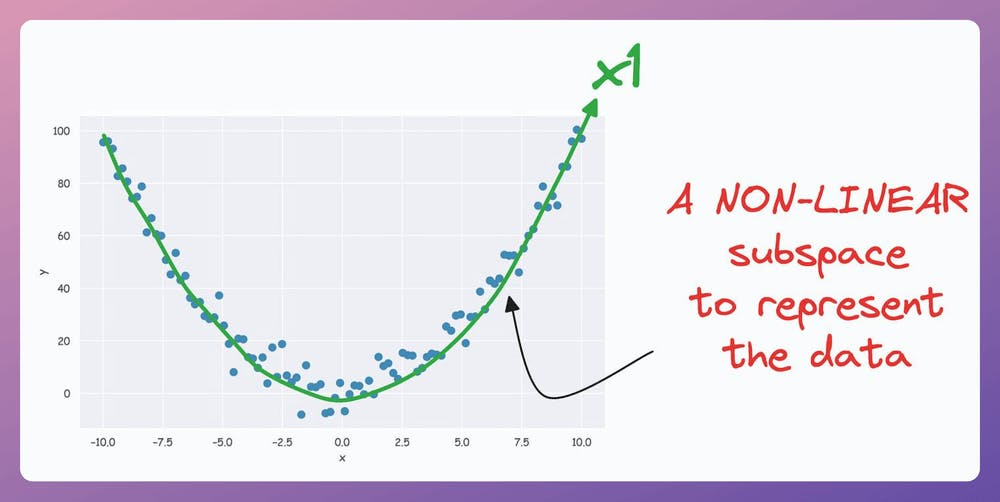

The above curve is a continuous, non-linear, low-dimensional subspace along which we could represent our data.

Okay…so why don’t we do it then?

The problem is that PCA cannot determine this subspace because the data points are not aligned along a straight line.

In other words, PCA is a linear dimensionality reduction technique.

Thus, it falls short in such situations.

Nonetheless, if we consider the above non-linear data, don’t you think there’s still some intuition telling us that this dataset can be reduced to one dimension if we can capture this non-linear curve?

KernelPCA addresses precisely this limitation of PCA.

The idea is pretty simple.

In standard PCA, we compute the eigenvectors and eigenvalues of the covariance matrix of the data (we covered the mathematics here).
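As a rough illustration of this step, here is a minimal NumPy sketch of PCA via eigendecomposition of the covariance matrix. The function name `pca_fit_transform` and the toy data are assumptions made for this example, not code from the original deep dive.

```python
import numpy as np

def pca_fit_transform(X, n_components):
    """Project X (n_samples, n_features) onto its top principal components."""
    # Center the data so the covariance matrix is computed around the mean.
    X_centered = X - X.mean(axis=0)
    # Covariance matrix of the features (n_features x n_features).
    cov = np.cov(X_centered, rowvar=False)
    # eigh is suited to symmetric matrices; eigenvalues come back ascending.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Keep the directions with the largest variance.
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]
    return X_centered @ components, eigvals[order]

# Toy data (assumed for illustration): points scattered around a straight line.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2.0 * t + 0.1 * rng.normal(size=200)])

Z, variances = pca_fit_transform(X, n_components=1)
# Fraction of the total variance retained by the single kept component.
explained_ratio = variances[0] / np.var(X, axis=0, ddof=1).sum()
print(Z.shape, explained_ratio)
```

Because the toy data lies almost on a line, one component retains nearly all of the variance, which is the situation where linear PCA works well.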

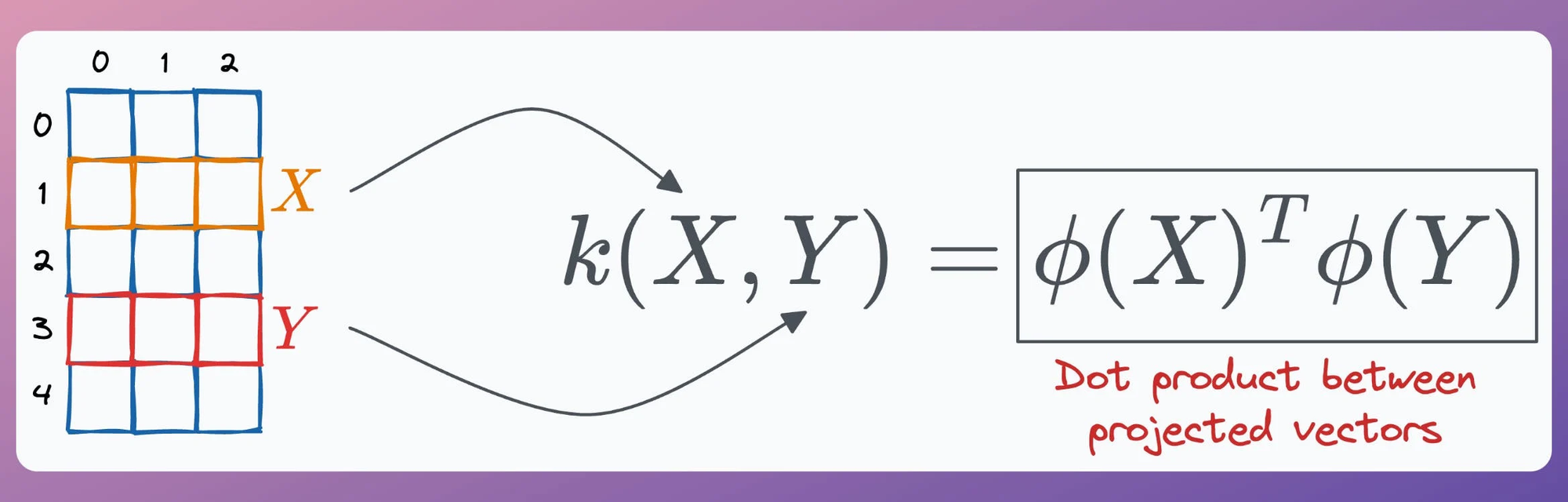

In KernelPCA, however:

- We first use a kernel function to compute the dot product of every pair of data points, X and Y, in a high-dimensional space, without explicitly projecting the vectors to that space.

- This produces a kernel matrix.

- Next, perform eigendecomposition on this kernel matrix instead and select the top “p” components.

- Done!
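The steps above can be sketched in plain NumPy as follows. This is a minimal sketch under a few assumptions: it uses an RBF kernel with a hand-picked `gamma`, and it centers the kernel matrix before the eigendecomposition (a standard step that the list above does not mention). The function names and the two-circle toy data are made up for this example.

```python
import numpy as np

def rbf_kernel_matrix(X, gamma):
    """Pairwise RBF kernel values k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_norms = np.sum(X**2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))

def kernel_pca(X, n_components, gamma):
    n = X.shape[0]
    # Step 1: kernel matrix (n x n), no explicit high-dimensional projection.
    K = rbf_kernel_matrix(X, gamma)
    # Assumed extra step: center the data implicitly in the feature space.
    one_n = np.full((n, n), 1.0 / n)
    K_centered = K - one_n @ K - K @ one_n + one_n @ K @ one_n
    # Step 2: eigendecomposition of the (symmetric) kernel matrix.
    eigvals, eigvecs = np.linalg.eigh(K_centered)
    # Step 3: keep the top components (largest eigenvalues).
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Projections of the training points onto the kept components.
    return eigvecs * np.sqrt(np.maximum(eigvals, 0.0))

# Toy data (assumed): two concentric circles, which no straight line describes.
rng = np.random.default_rng(0)
angles = rng.uniform(0, 2 * np.pi, size=100)
radii = np.repeat([1.0, 3.0], 50)
X = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])

Z = kernel_pca(X, n_components=2, gamma=0.5)
print(Z.shape)
```

The choice of kernel and of `gamma` strongly affects the result, so treat both as tunable assumptions rather than defaults.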

If there's any confusion in the above steps, I would highly recommend reading this deep dive on PCA, where we formulated the entire PCA algorithm from scratch. It will help you understand the underlying mathematics.

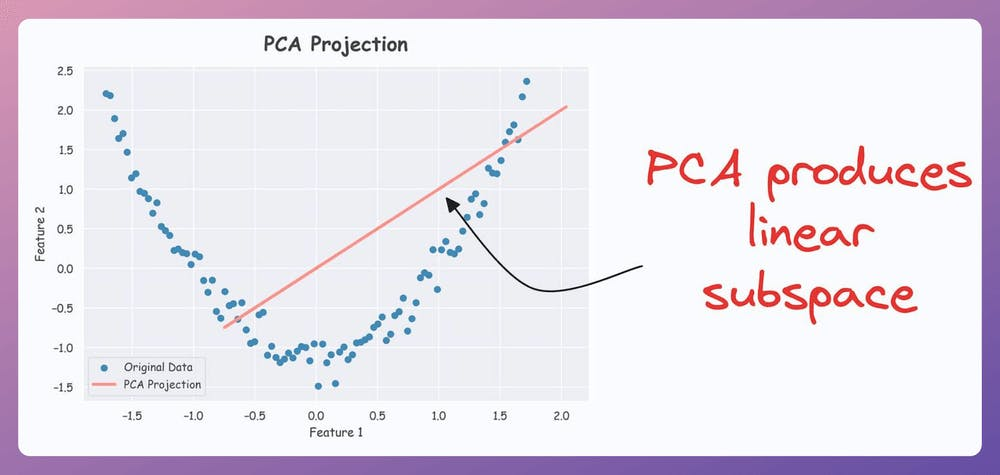

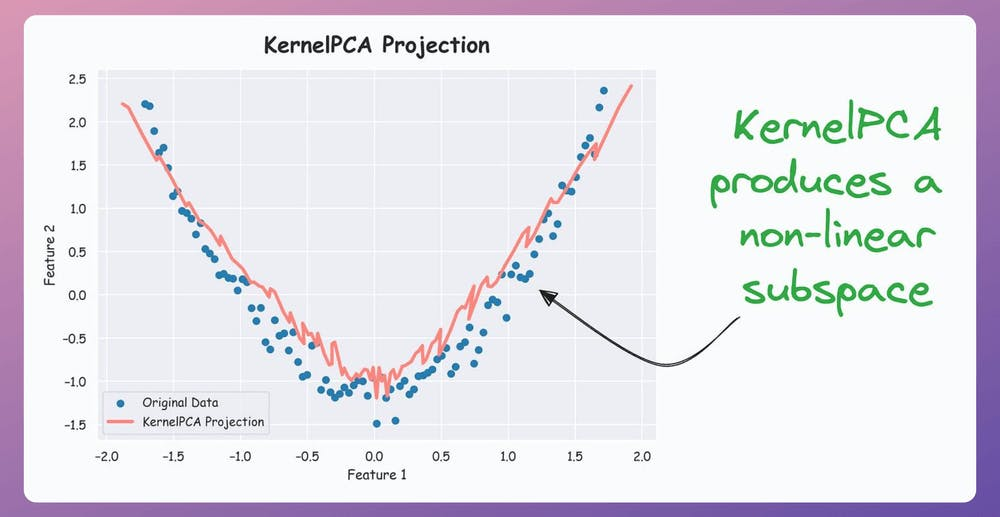

That said, the efficacy of KernelPCA over PCA is evident from the demo below.

As shown below, even though the data is non-linear, PCA still produces a linear subspace for projection:

However, KernelPCA produces a non-linear subspace:

That's handy, isn't it?
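To try a comparison like this yourself, here is a small sketch using scikit-learn's `PCA` and `KernelPCA` classes. The newsletter does not show the code behind its demo, so the dataset (`make_circles`), the RBF kernel, and `gamma=10` are all assumptions chosen for illustration.

```python
from sklearn.datasets import make_circles
from sklearn.decomposition import PCA, KernelPCA

# Assumed stand-in dataset: two noisy concentric circles (non-linear structure).
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)

# Linear PCA: can only rotate the data and drop directions.
X_pca = PCA(n_components=2).fit_transform(X)

# KernelPCA with an RBF kernel; gamma=10 is a hand-picked value, not a default.
X_kpca = KernelPCA(n_components=2, kernel="rbf", gamma=10).fit_transform(X)

print(X_pca.shape, X_kpca.shape)
```

Plotting `X_pca` and `X_kpca` side by side, colored by `y`, is a quick way to see how differently the two methods arrange the same points.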

The catch here is the run time.

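Since KernelPCA computes the dot product for every pair of data points, the kernel matrix has n × n entries for n samples. This adds O(n^2) kernel evaluations, and the eigendecomposition then runs on this n × n matrix rather than on the much smaller covariance matrix.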

Thus, it increases the overall run time. This is something to be aware of when using KernelPCA.
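A quick back-of-the-envelope sketch makes the growth concrete. The helper `matrix_sizes` and the sample counts are hypothetical; the memory figure assumes 8-byte floats and a dense kernel matrix.

```python
def matrix_sizes(n_samples, n_features):
    """Number of entries in the matrices PCA and KernelPCA decompose."""
    cov_entries = n_features * n_features    # covariance matrix (PCA)
    kernel_entries = n_samples * n_samples   # kernel matrix (KernelPCA)
    return cov_entries, kernel_entries

for n in (1_000, 10_000, 100_000):
    cov_entries, kernel_entries = matrix_sizes(n, n_features=10)
    # Assumes a dense float64 matrix: 8 bytes per entry.
    kernel_gb = kernel_entries * 8 / 1e9
    print(f"n={n}: covariance entries={cov_entries}, "
          f"kernel entries={kernel_entries}, kernel memory ~{kernel_gb:.3f} GB")
```

The covariance matrix stays the same size as the dataset grows, while the kernel matrix grows quadratically with the number of samples.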

If you want to dive into the clever mathematics of the kernel trick and why it is called a “trick,” we covered this in the newsletter here:

👉 Over to you: What are some other limitations of PCA?

EXTENDED PIECE #1

Beyond grid and random search

There are many issues with grid search and random search.

- They are computationally expensive due to exhaustive search.

- The search is restricted to the specified hyperparameter range. But what if the ideal hyperparameter exists outside that range?

- They can ONLY perform discrete searches, even if the hyperparameter is continuous.

Bayesian optimization solves this.

It’s fast, informed, and performant, as depicted below:

Learning optimized hyperparameter tuning and putting it to use will be extremely helpful if you wish to build large ML models quickly.
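To give a feel for what "informed" means, here is a minimal sketch of a Bayesian optimization loop in NumPy. It assumes a Gaussian process surrogate with a fixed RBF kernel and the expected-improvement acquisition function, which is one common setup and not necessarily the one used in the linked article. The `objective` function is a made-up stand-in for a validation loss over a single hyperparameter in [0, 1].

```python
import math
import numpy as np

def rbf(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    return np.exp(-0.5 * ((a[:, None] - b[None, :]) / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_new, jitter=1e-6):
    """Posterior mean and std of a unit-variance GP at x_new."""
    y_mean = y_obs.mean()
    K = rbf(x_obs, x_obs) + jitter * np.eye(len(x_obs))
    K_s = rbf(x_obs, x_new)
    mean = y_mean + K_s.T @ np.linalg.solve(K, y_obs - y_mean)
    var = 1.0 - np.sum(K_s * np.linalg.solve(K, K_s), axis=0)
    return mean, np.sqrt(np.maximum(var, 1e-12))

def expected_improvement(mean, std, best):
    """Expected improvement over the best (lowest) value seen so far."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf

def objective(x):
    # Hypothetical validation loss; in practice this would train a model.
    return (x - 0.3) ** 2 + 0.1 * np.sin(15 * x)

def bayes_opt(n_iter=15, seed=0):
    rng = np.random.default_rng(seed)
    # Scoring the acquisition on a dense set of candidates is cheap,
    # unlike evaluating the objective itself.
    candidates = np.linspace(0.0, 1.0, 501)
    x_obs = rng.uniform(0.0, 1.0, size=3)   # a few random starting points
    y_obs = objective(x_obs)
    for _ in range(n_iter):
        mean, std = gp_posterior(x_obs, y_obs, candidates)
        ei = expected_improvement(mean, std, y_obs.min())
        x_next = candidates[np.argmax(ei)]   # most promising point to try
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    return x_obs, y_obs

x_hist, y_hist = bayes_opt()
best_idx = int(np.argmin(y_hist))
print(x_hist[best_idx], y_hist[best_idx])
```

Each new trial is chosen using everything learned from the previous ones, which is the key difference from grid and random search.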

EXTENDED PIECE #2

Beyond linear regression

Linear regression makes some strict assumptions about the type of data it can model, as depicted below.

Can you be sure that these assumptions will never break?

Nothing stops real-world datasets from violating these assumptions.

That is why being aware of linear regression’s extensions is immensely important.

Generalized linear models (GLMs) are precisely such an extension.

They relax the assumptions of linear regression to make linear models more adaptable to real-world datasets.
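As one concrete example, count data violates the normally distributed errors that linear regression assumes. Below is a minimal sketch of a Poisson GLM with a log link, fitted by Newton's method in NumPy. The function name `fit_poisson_glm`, the synthetic data, and the true coefficients (0.5 and 1.0) are assumptions made for this illustration.

```python
import numpy as np

def fit_poisson_glm(X, y, n_iter=25):
    """Fit a Poisson GLM with log link: E[y | x] = exp(b0 + b1 * x + ...)."""
    # Prepend a column of ones for the intercept.
    X1 = np.column_stack([np.ones(len(X)), X])
    beta = np.zeros(X1.shape[1])
    beta[0] = np.log(y.mean())  # start from the intercept-only fit
    for _ in range(n_iter):
        mu = np.exp(X1 @ beta)                  # predicted mean counts
        gradient = X1.T @ (y - mu)              # gradient of the log-likelihood
        hessian = X1.T @ (X1 * mu[:, None])     # negative Hessian
        beta = beta + np.linalg.solve(hessian, gradient)  # Newton step
    return beta

# Synthetic count data (assumed): y ~ Poisson(exp(0.5 + 1.0 * x)).
rng = np.random.default_rng(0)
x = rng.uniform(0, 2, size=2000)
y = rng.poisson(np.exp(0.5 + 1.0 * x))

beta = fit_poisson_glm(x[:, None], y)
print(beta)  # estimates of the intercept and slope
```

The model is still linear in its coefficients, but the link function and the Poisson likelihood let it handle non-negative counts whose variance grows with the mean.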

THAT'S A WRAP

No-Fluff Industry ML resources to Succeed in DS/ML roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Drive revenue?

- Can you scale ML models?

- Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with these goals.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust using Conformal Predictions.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.
