TODAY'S ISSUE

TODAY’S DAILY DOSE OF DATA SCIENCE

Building a RAG app using Llama 3.3

Meta released Llama 3.3 yesterday.

So we put together a practical, hands-on demo that uses Llama 3.3 to build a RAG app.

The final outcome is shown in the video below:

The app accepts a document and lets the user interact with it via chat.

We’ll use:

- LlamaIndex for orchestration.

- Qdrant to self-host a vector database.

- Ollama for locally serving Llama 3.3.

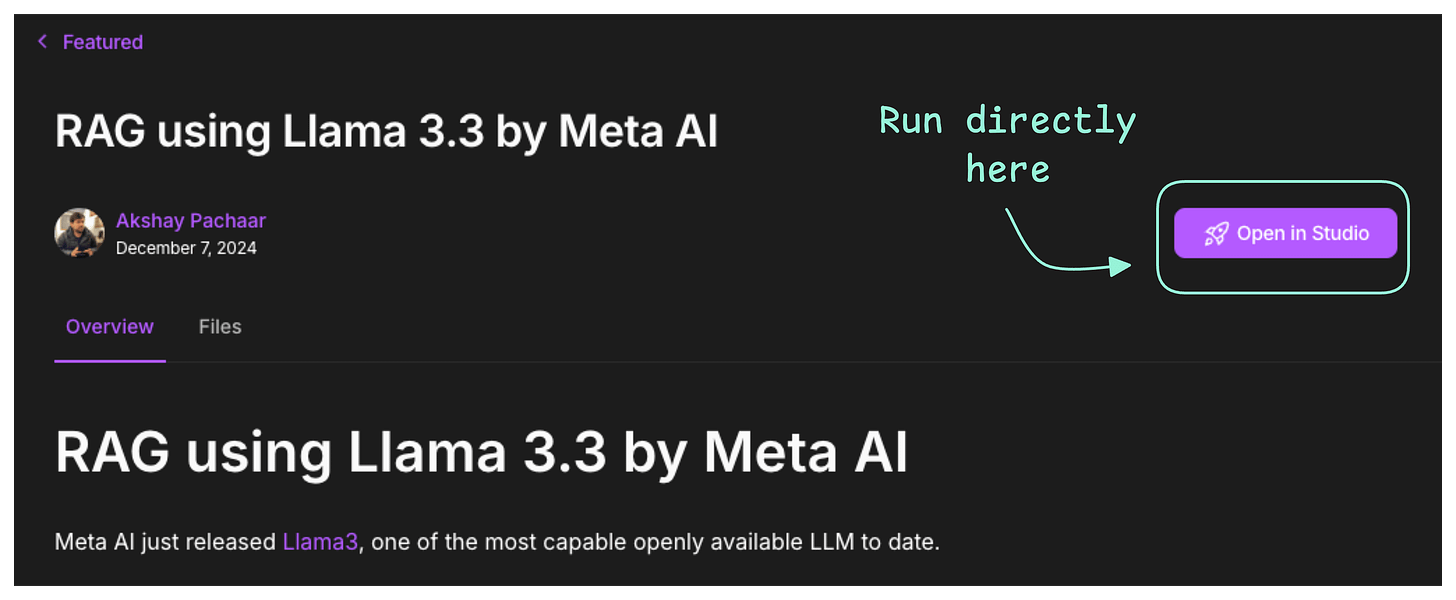

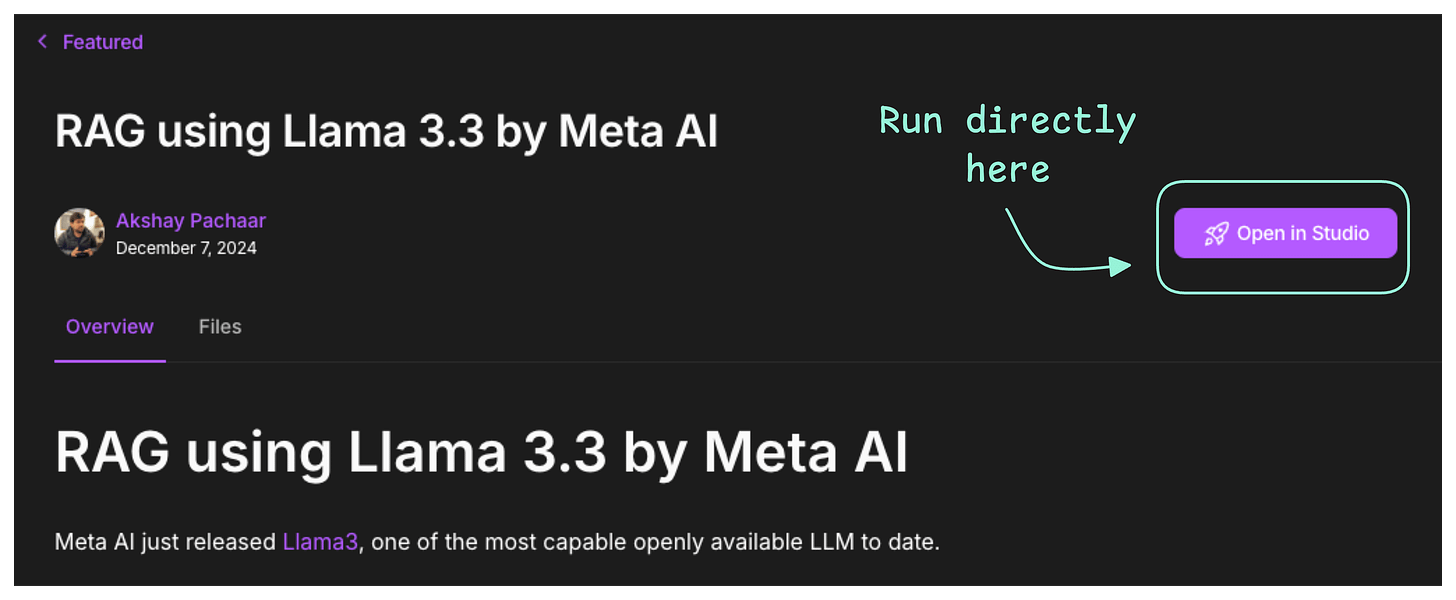

The code is available in this Studio: Llama 3.3 RAG app code. You can run it without any installations by reproducing our environment below:

Let’s build it!

Workflow

The workflow is shown in the animation below:

Implementation

Next, let’s start implementing it.

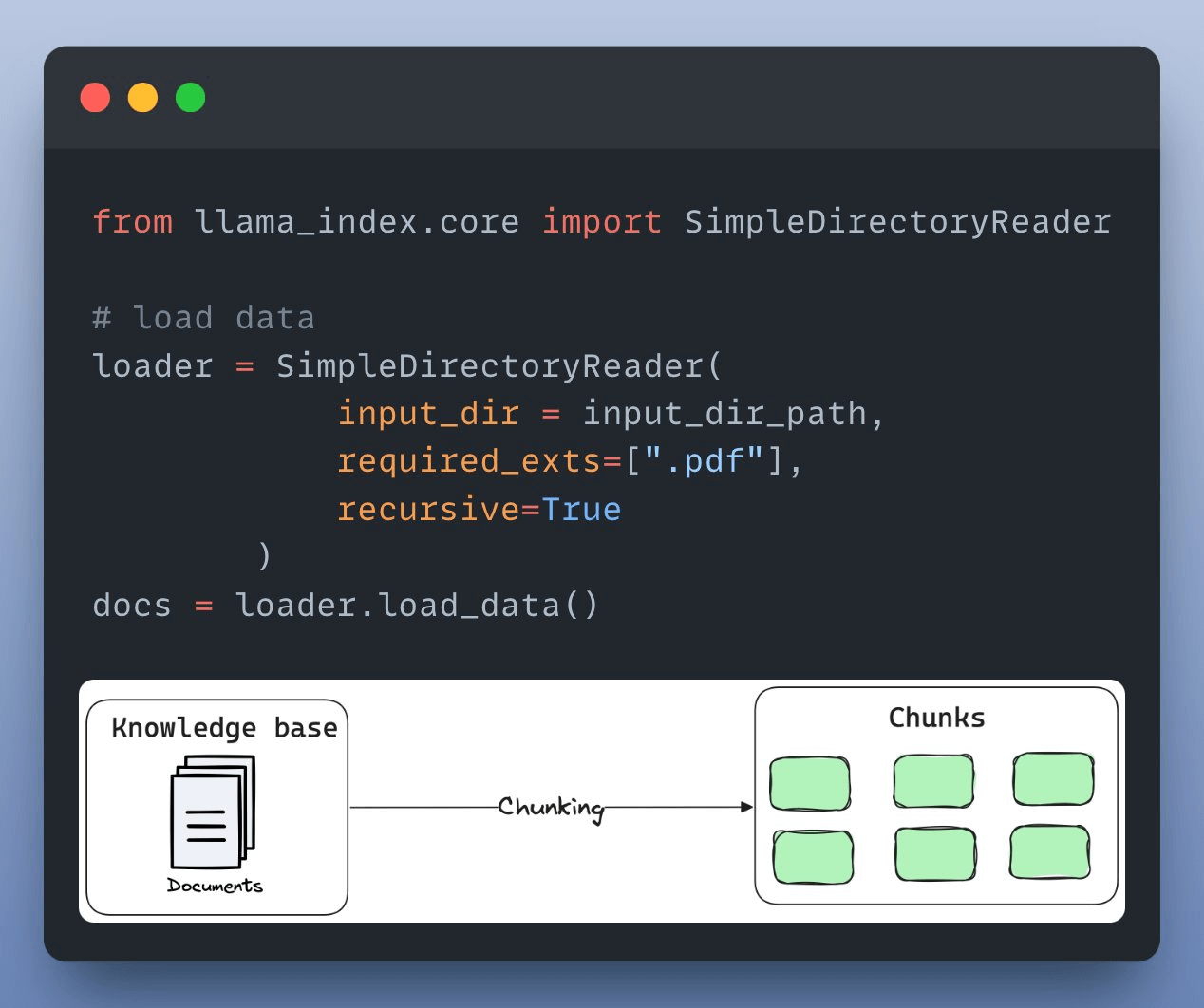

First, we load and parse the external knowledge base, which is a document stored in a directory, using LlamaIndex:
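To make this step concrete, here is a minimal, library-free sketch of what "load and parse" boils down to: read the files in a directory, then split each one into overlapping chunks. It is not the LlamaIndex API. The names `load_documents` and `split_into_chunks`, the plain-text file extensions, and the chunk sizes are all assumptions made for illustration.

```python
from pathlib import Path


def load_documents(input_dir, extensions=(".txt", ".md")):
    """Read every matching file under input_dir into a {path: text} dict."""
    documents = {}
    for path in sorted(Path(input_dir).rglob("*")):
        if path.is_file() and path.suffix.lower() in extensions:
            documents[str(path)] = path.read_text(encoding="utf-8")
    return documents


def split_into_chunks(text, chunk_size=200, overlap=50):
    """Split text into character windows that overlap by `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop once the remaining tail is already covered by the previous window.
    last_start = max(len(text) - overlap, 1)
    return [text[start:start + chunk_size] for start in range(0, last_start, step)]
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which tends to help retrieval later on.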

Next, we define an embedding model, which will create embeddings for the document chunks and user queries:
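The key property of an embedding model is its interface: text goes in, a fixed-length vector comes out, and the same function is applied to both document chunks and user queries. The sketch below uses a toy hashing scheme as a stand-in, purely to show that interface. It is an assumption for illustration and not the model used in the app; a real embedding model captures meaning, which word hashing does not.

```python
import hashlib
import math
import re


def toy_embed(text, dim=64):
    """Map text to a unit-length vector by hashing each word into one of `dim` buckets."""
    vector = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vector[int.from_bytes(digest[:4], "big") % dim] += 1.0
    norm = math.sqrt(sum(value * value for value in vector))
    # Text with no words stays an all-zero vector.
    return [value / norm for value in vector] if norm else vector


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

Because chunks and queries share one embedding function, their vectors live in the same space and can be compared directly with `cosine_similarity`.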

After creating the embeddings, the next task is to index and store them in a vector database. We’ll use a self-hosted Qdrant vector database for this as follows:
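Conceptually, a vector database does two things: it stores each embedding alongside a payload (the chunk text), and it returns the stored items closest to a query vector. Here is a minimal in-memory sketch of that idea. The class `InMemoryVectorStore` is hypothetical and is not the Qdrant client; a real database adds persistence, filtering, and approximate search so it scales beyond a brute-force scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Brute-force stand-in for a vector database (illustration only)."""

    def __init__(self):
        self._points = []  # list of (vector, payload) pairs

    def add(self, vector, payload):
        """Index one embedding together with the chunk it came from."""
        self._points.append((list(vector), payload))

    def search(self, query_vector, top_k=3):
        """Return up to top_k (score, payload) pairs, most similar first."""
        scored = [
            (cosine_similarity(query_vector, vector), payload)
            for vector, payload in self._points
        ]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:top_k]
```

For example, after adding a few chunk vectors with `store.add(vector, chunk_text)`, calling `store.search(query_vector, top_k=2)` yields the two best-matching chunks along with their similarity scores.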

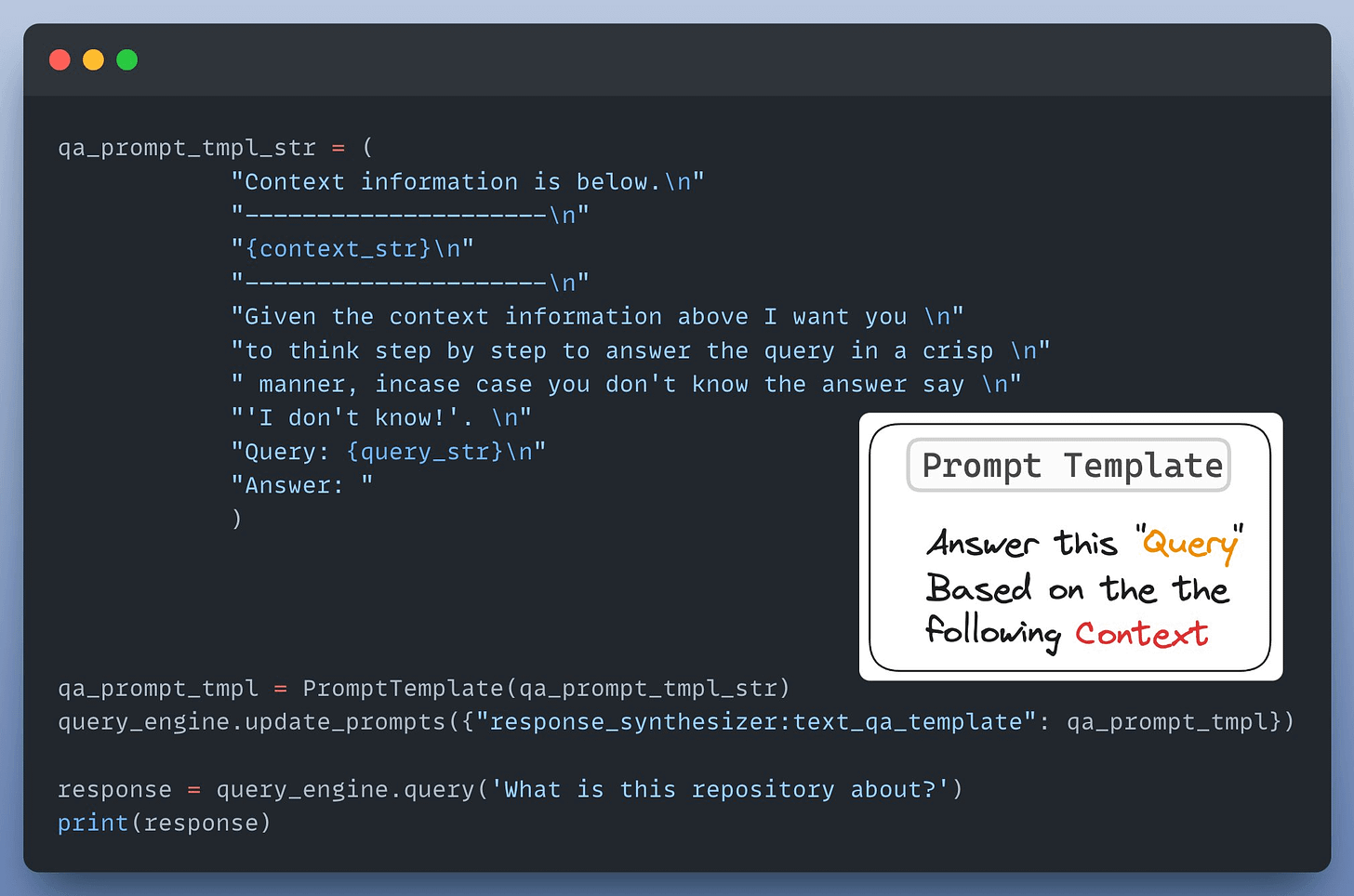

Next up, we define a custom prompt template that tells the LLM how to shape its response and includes the retrieved context:
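A prompt template of this kind is just a string with two slots: one for the retrieved context and one for the user's query. The sketch below uses plain Python string formatting. The wording of the template and the placeholder names `context_str` and `query_str` are our assumptions for illustration, not necessarily what the app's code uses.

```python
QA_PROMPT_TEMPLATE = (
    "Context information is below.\n"
    "---------------------\n"
    "{context_str}\n"
    "---------------------\n"
    "Using only the context above, answer the query concisely. "
    "If the context does not contain the answer, say \"I don't know\".\n"
    "Query: {query_str}\n"
    "Answer: "
)


def build_prompt(context_chunks, query):
    """Fill the template with the retrieved chunks and the user's query."""
    context_str = "\n\n".join(context_chunks)
    return QA_PROMPT_TEMPLATE.format(context_str=context_str, query_str=query)
```

Instructing the model to rely only on the supplied context, and to admit when the answer is missing, is a common way to reduce made-up answers in RAG apps.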

Almost done!

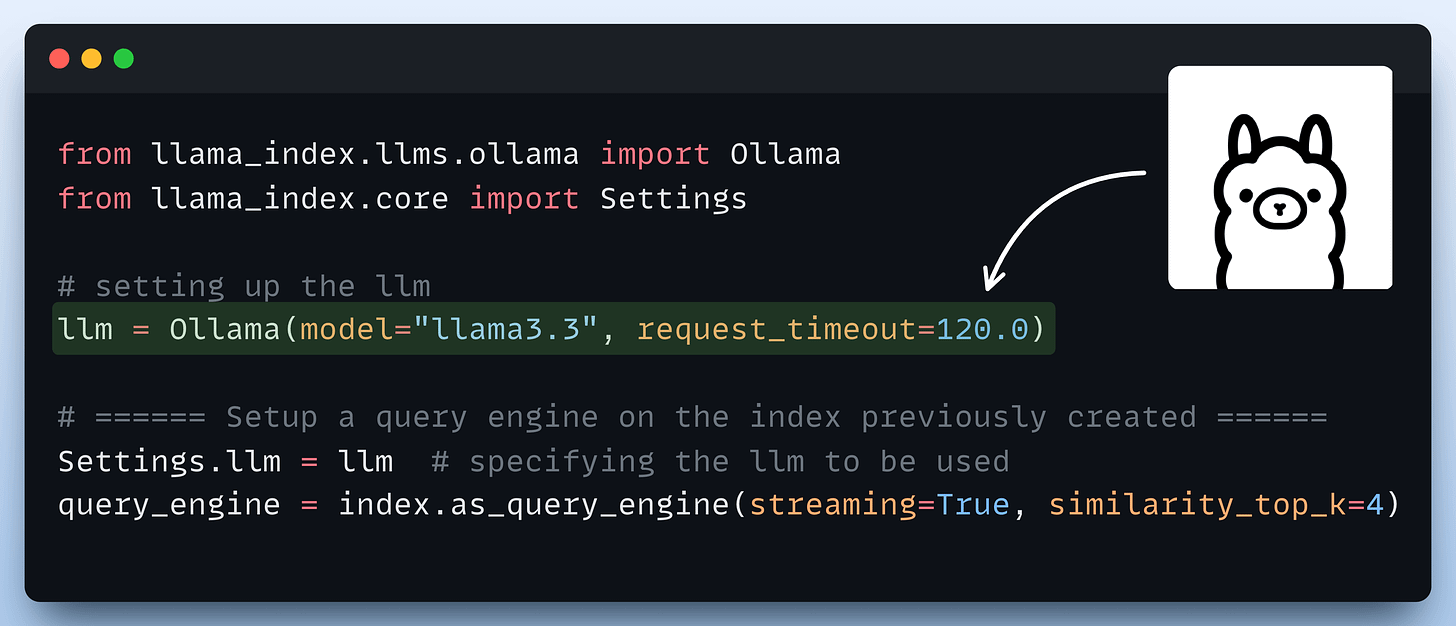

Finally, we set up a query engine that accepts a query string and uses it to fetch relevant context.

It then sends the context and the query as a prompt to the LLM to generate a final response.

This is implemented below:
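As a rough, library-free picture of what a query engine does, the sketch below retrieves the most relevant chunks, assembles a prompt, and hands it to an LLM. Everything here is an assumption for illustration: `retrieve` ranks by shared words rather than embeddings, and `llm` is any callable that takes a prompt string and returns text, standing in for the locally served model.

```python
import re


def _tokens(text):
    """Lowercased set of words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks, query, top_k=2):
    """Rank chunks by how many distinct words they share with the query."""
    query_tokens = _tokens(query)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(_tokens(chunk) & query_tokens),
        reverse=True,
    )
    return ranked[:top_k]


def answer(query, chunks, llm, top_k=2):
    """Fetch context for the query, build a prompt, and ask the LLM."""
    context = "\n".join(retrieve(chunks, query, top_k))
    prompt = f"Context:\n{context}\n\nQuery: {query}\nAnswer:"
    return llm(prompt)
```

Passing the LLM in as a plain callable keeps the retrieval and prompting logic easy to test with a stub before wiring in a real model.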

Done!

There’s some Streamlit code that we haven’t shown here, but after building it, we get this clean and neat interface:

Wasn’t that easy and straightforward?


👉 Over to you: What other demos would you like to see with Llama 3.3?

Thanks for reading, and we'll see you next week!

IN CASE YOU MISSED IT

Prompting vs. RAG vs. Fine-tuning

If you are building real-world LLM-based apps, it is unlikely that you can use the model as-is without any adjustments. To maintain high utility, you need one of the following:

- Prompt engineering

- Fine-tuning

- RAG

- Or a hybrid approach (RAG + fine-tuning)

The following visual will help you decide which one is best for you:

Read more in-depth insights into Prompting vs. RAG vs. Fine-tuning here →

ROADMAP

From local ML to production ML

Once a model has been trained, we move to productionizing and deploying it.

If ideas related to production and deployment intimidate you, here’s a quick roadmap for you to upskill (assuming you know how to train a model):

- First, you would have to compress the model and productionize it. Read these guides:

  - Reduce model size with Model Compression techniques.

  - Supercharge PyTorch Models With TorchScript.

  - If you use sklearn, learn how to optimize its models with tensor operations.

- Next, you move to deployment. Here’s a beginner-friendly hands-on guide that teaches you how to deploy a model, manage dependencies, set up a model registry, etc.

- Although you would have tested the model locally, it is still wise to test it in production. There are risk-free (or low-risk) methods to do that. Learn what they are and how to implement them here.

This roadmap should set you up pretty well, even if you have NEVER deployed a single model before, since everything is practical and implementation-driven.

THAT'S A WRAP

No-Fluff Industry ML resources to Succeed in DS/ML roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Drive revenue?

- Can you scale ML models?

- Predict trends before they happen?

We have discussed several topics (with implementations) in the past that align with these goals.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to generate prediction intervals or sets with strong statistical guarantees, and so increase trust in your models, using Conformal Prediction.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.
