TODAY'S ISSUE

TODAY’S DAILY DOSE OF DATA SCIENCE

10 Most Common Regression and Classification Loss Functions

Loss functions are a key component of ML algorithms.

They specify the objective an algorithm should aim to optimize during its training. In other words, loss functions tell the algorithm what it should minimize or maximize to improve its performance.

Therefore, knowing the most common loss functions is crucial.

The visual below depicts the most commonly used loss functions for regression and classification tasks.

Regression

Mean Bias Error

- Captures the average bias in the prediction.

- However, it is rarely used in training ML models.

- This is because negative errors may cancel positive errors, so the loss can be zero even when individual predictions are far off, which gives the model a misleading training signal.

- Mean bias error is foundational to the more advanced regression losses discussed below.

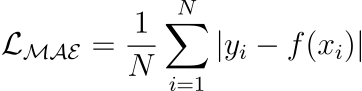

Mean Absolute Error (or L1 loss)

- Measures the average absolute difference between predicted and actual values.

- Positive errors and negative errors don’t cancel out.

- One caveat is that small errors are as important as big ones. Thus, the magnitude of the gradient is independent of error size.

Mean Squared Error (or L2 loss)

- It measures the average squared difference between predicted and actual values.

- Larger errors contribute more significantly than smaller errors.

- This can also be a caveat, since it makes the loss sensitive to outliers.

- Yet, it is among the most common loss functions for many regression models.

Root Mean Squared Error

- Mean Squared Error with a square root.

- Loss and the dependent variable (y) have the same units.
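The four losses above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation; the function names and the toy arrays are assumptions made for this example.

```python
import numpy as np

def mean_bias_error(y_true, y_pred):
    # Signed errors are averaged, so positive and negative errors can cancel.
    return float(np.mean(y_pred - y_true))

def mean_absolute_error(y_true, y_pred):
    # Absolute values prevent cancellation.
    return float(np.mean(np.abs(y_pred - y_true)))

def mean_squared_error(y_true, y_pred):
    # Squaring makes large errors count more than small ones.
    return float(np.mean((y_pred - y_true) ** 2))

def root_mean_squared_error(y_true, y_pred):
    # The square root brings the loss back to the units of y.
    return float(np.sqrt(mean_squared_error(y_true, y_pred)))

# Toy data (hypothetical): every prediction is off by 2, alternating in sign.
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([5.0, 3.0, 9.0, 7.0])

mbe = mean_bias_error(y_true, y_pred)           # 0.0, errors cancel
mae = mean_absolute_error(y_true, y_pred)       # 2.0
mse = mean_squared_error(y_true, y_pred)        # 4.0
rmse = root_mean_squared_error(y_true, y_pred)  # 2.0
```

Note how the mean bias error reports zero even though no prediction is correct, while the other three losses all register the errors.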

Huber Loss

- It is a combination of mean absolute error and mean squared error.

- For smaller errors, mean squared error is used, which is differentiable everywhere (unlike MAE, which is non-differentiable at x=0).

- For large errors, mean absolute error is used, which is less sensitive to outliers.

- One caveat is that it is parameterized — adding another hyperparameter to the list.
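A minimal sketch of Huber loss, assuming the common formulation in which a threshold (called `delta` here, a name chosen for this example) separates the quadratic region from the linear one:

```python
import numpy as np

def huber_loss(y_true, y_pred, delta=1.0):
    # delta is the hyperparameter that decides where the loss
    # switches from quadratic (MSE-like) to linear (MAE-like).
    error = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    abs_error = np.abs(error)
    quadratic = 0.5 * error ** 2
    linear = delta * (abs_error - 0.5 * delta)
    return float(np.mean(np.where(abs_error <= delta, quadratic, linear)))

small = huber_loss([0.0], [0.5])  # quadratic branch: 0.5 * 0.5**2 = 0.125
large = huber_loss([0.0], [3.0])  # linear branch: 1.0 * (3.0 - 0.5) = 2.5
```

The two branches meet at `abs_error == delta` with the same value and slope, which is what keeps the loss differentiable at the switch point.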

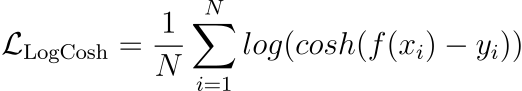

Log Cosh Loss

- For small errors, log cosh loss is approximately x²/2 (quadratic).

- For large errors, log cosh loss is approximately |x| - log(2) (linear).

- Thus, it is very similar to Huber loss.

- Also, it is non-parametric.

- The only caveat is that it is a bit computationally expensive.
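The two approximations above can be checked numerically. The sketch below is one possible implementation; it uses the identity log(cosh(x)) = |x| + log(1 + exp(-2|x|)) - log(2) to avoid overflow for large errors, which is a design choice made for this example rather than something the text prescribes.

```python
import numpy as np

def log_cosh_loss(y_true, y_pred):
    # x is the absolute error; log(cosh(x)) is symmetric in x.
    x = np.abs(np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float))
    # Stable form of log(cosh(x)): avoids computing cosh of a large number.
    return float(np.mean(x + np.log1p(np.exp(-2.0 * x)) - np.log(2.0)))

small_err, large_err = 0.01, 20.0
small_loss = log_cosh_loss([0.0], [small_err])  # close to small_err**2 / 2
large_loss = log_cosh_loss([0.0], [large_err])  # close to large_err - log(2)
```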

Classification

Binary Cross Entropy (BCE) or Log loss

- A loss function used for binary classification tasks.

- Measures the dissimilarity between predicted probabilities and true binary labels, through the logarithmic loss.

- Where does the log loss come from? We discussed it here: Why Do We Use Log-Loss to Train Logistic Regression?
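A minimal sketch of the binary log loss described above, assuming labels in {0, 1} and predicted probabilities for the positive class. The `eps` clipping is an assumption added here to avoid taking log(0).

```python
import numpy as np

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    y = np.asarray(y_true, dtype=float)
    # Clip probabilities away from 0 and 1 so the logarithms stay finite.
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

confident_right = binary_cross_entropy([1, 0], [0.9, 0.1])
confident_wrong = binary_cross_entropy([1, 0], [0.1, 0.9])
```

Confident and wrong predictions are penalized far more heavily than confident and correct ones, which is the behavior the logarithm provides.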

Hinge Loss

- Penalizes both wrong and right (but less confident) predictions.

- It is based on the concept of margin, which represents the distance between a data point and the decision boundary.

- The larger the margin, the more confident the classifier is about its prediction.

- Particularly used to train support vector machines (SVMs).
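A minimal sketch of hinge loss, assuming labels encoded as -1 and +1 and raw (unbounded) classifier scores, with the usual margin of 1:

```python
import numpy as np

def hinge_loss(y_true, scores):
    y = np.asarray(y_true, dtype=float)   # labels in {-1, +1}
    s = np.asarray(scores, dtype=float)   # raw decision scores
    # Zero loss only when the prediction is correct with a margin of at least 1.
    return float(np.mean(np.maximum(0.0, 1.0 - y * s)))

right_confident = hinge_loss([1], [2.0])    # 0.0
right_unsure = hinge_loss([1], [0.5])       # 0.5, correct but inside the margin
wrong = hinge_loss([1], [-1.0])             # 2.0
```

The middle case shows the point made above: a correct prediction is still penalized when it falls inside the margin.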

Cross-Entropy Loss

- An extension of Binary Cross Entropy loss to multi-class classification tasks.
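A minimal multi-class sketch, assuming integer class labels and raw logits that are turned into probabilities with a softmax. The helper names are chosen for this example only.

```python
import numpy as np

def softmax(logits):
    # Subtract the row-wise max for numerical stability.
    z = logits - np.max(logits, axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(labels, logits, eps=1e-12):
    probs = softmax(np.asarray(logits, dtype=float))
    n = probs.shape[0]
    # Pick the predicted probability of the true class for each sample.
    picked = probs[np.arange(n), np.asarray(labels)]
    return float(-np.mean(np.log(np.clip(picked, eps, 1.0))))

uniform = cross_entropy([0], [[0.0, 0.0, 0.0]])  # equals log(3)
good = cross_entropy([2], [[0.0, 0.0, 5.0]])
bad = cross_entropy([0], [[0.0, 0.0, 5.0]])
```

With two classes, this reduces to the binary case: only the probability assigned to the true class enters the loss.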

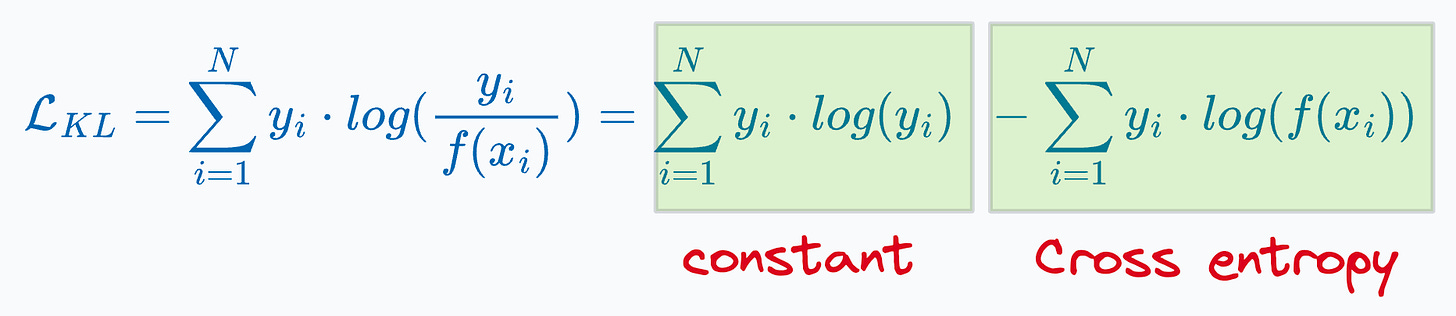

KL Divergence

- Measures the information lost when one distribution is approximated using another distribution.

- The more information is lost, the higher the KL divergence.

- For classification, however, using KL divergence is the same as minimizing cross entropy (proved below):

- Thus, it is recommended to use cross-entropy loss directly.

- That said, KL divergence is widely used in many other algorithms:

- As a loss function in the t-SNE algorithm. We discussed it here: t-SNE article.

- For model compression using knowledge distillation. We discussed it here: Model compression article.
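The equivalence between minimizing KL divergence and minimizing cross-entropy in classification, mentioned above, can be sketched as follows. The notation is an assumption for this example: P (with probabilities p_i) is the true label distribution, Q (with probabilities q_i) is the model's predicted distribution, H(P) is the entropy of P, and H(P, Q) is the cross-entropy.

```latex
\begin{aligned}
D_{KL}(P \,\|\, Q) &= \sum_{i} p_i \log \frac{p_i}{q_i} \\
                   &= \sum_{i} p_i \log p_i - \sum_{i} p_i \log q_i \\
                   &= -H(P) + H(P, Q)
\end{aligned}
```

Since H(P) depends only on the true labels, it is a constant with respect to the model's parameters, so minimizing the KL divergence and minimizing the cross-entropy lead to the same solution. For one-hot labels, H(P) = 0 and the two quantities are equal.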

TRUSTWORTHY ML

Build confidence in model's predictions with conformal predictions

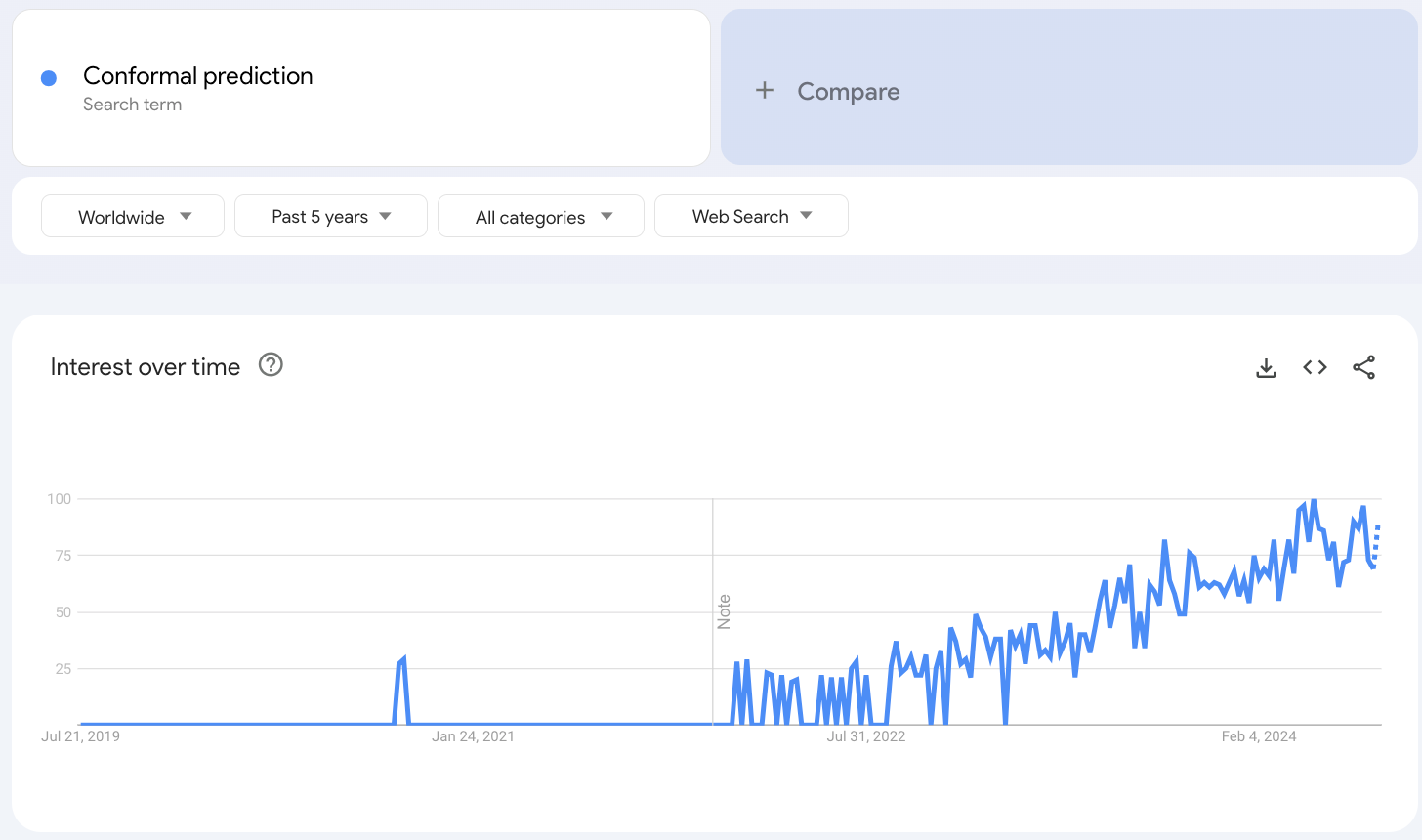

Conformal prediction has gained considerable traction in recent years, which is evident from the Google Trends results:

The reason is quite obvious.

ML models are becoming increasingly democratized. However, not everyone who relies on their predictions, such as doctors or financial professionals, can inspect them.

Thus, it is the responsibility of the ML team to provide a handy (and layman-oriented) way to communicate the risk associated with a prediction.

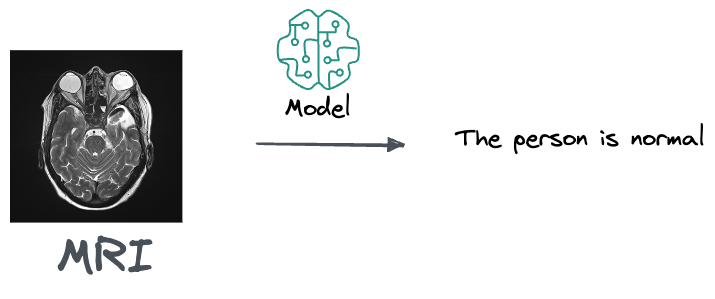

For instance, if you are a doctor and you get this MRI, an output from the model that suggests that the person is normal and doesn’t need any treatment is likely pretty useless to you.

This is because a doctor's job is to do a differential diagnosis. Thus, what they really care about is knowing whether there's a 10% chance that the person has cancer or an 80% chance, based on that MRI.

Conformal predictions solve this problem.

A somewhat tricky thing about conformal prediction is that it requires a slight shift in how decisions are made based on model outputs.

Nonetheless, this field is definitely something I would recommend keeping an eye on, no matter where you are in your ML career.

Learn how to practically leverage conformal predictions in your model →

CRASH COURSE

Generate true probabilities with model calibration

Modern neural networks being trained today are highly misleading.

They appear to be heavily overconfident in their predictions.

For instance, if a model predicts an event with a 70% probability, then ideally, out of 100 such predictions, approximately 70 should result in the event occurring.

However, many experiments have revealed that modern neural networks appear to be losing this ability, as depicted below:

- The average confidence of LeNet (an old model) closely matches its accuracy.

- The average confidence of the ResNet (a relatively modern model) is substantially higher than its accuracy.

Calibration solves this.

A model is calibrated if the predicted probabilities align with the actual outcomes.
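One common way to quantify the gap between confidence and accuracy is the expected calibration error (ECE), which is not named in the text above and is used here only as an illustrative assumption. The sketch below bins predictions by confidence and averages the gap between accuracy and mean confidence in each bin, weighted by bin size. The function name, the bin count, and the toy data are all hypothetical.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    conf = np.asarray(confidences, dtype=float)
    hit = np.asarray(correct, dtype=float)   # 1.0 if the prediction was right
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Using only the interior edges yields bin indices 0 .. n_bins - 1.
    idx = np.digitize(conf, edges[1:-1])
    ece = 0.0
    for b in range(n_bins):
        mask = idx == b
        if mask.any():
            gap = abs(hit[mask].mean() - conf[mask].mean())
            ece += mask.mean() * gap   # weight by the fraction of samples in the bin
    return float(ece)

# 100 predictions made with 70% confidence, 70 of which were correct.
calibrated = expected_calibration_error(
    np.full(100, 0.7), np.r_[np.ones(70), np.zeros(30)]
)
# 100 predictions made with 90% confidence, only 60 of which were correct.
overconfident = expected_calibration_error(
    np.full(100, 0.9), np.r_[np.ones(60), np.zeros(40)]
)
```

The first case matches the 70-out-of-100 example above and gives an error of roughly zero, while the second gives roughly 0.3, the gap between 90% confidence and 60% accuracy.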

Handling miscalibration is important because the model will be used in decision-making, and an overly confident model can be fatal.

To exemplify, say a government hospital wants to conduct an expensive medical test on patients.

To ensure that the government funding is used optimally, a reliable probability estimate can help the doctors make this decision.

If the model isn't calibrated, it will produce overly confident predictions.

There has been a rising concern in the industry about ensuring that our machine learning models communicate their confidence effectively.

Thus, being able to detect miscalibration and fix it is a valuable skill to possess.

Learn how to build well-calibrated models in this crash course →

THAT'S A WRAP

No-Fluff Industry ML resources to Succeed in DS/ML roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Drive revenue?

- Can you scale ML models?

- Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with these goals.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust using Conformal Predictions.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.
