TODAY'S ISSUE

TODAY’S DAILY DOSE OF DATA SCIENCE

Knowledge Distillation with Teacher Assistant for Model Compression

Knowledge distillation is quite commonly used to compress large ML models after training.

Today, I want to discuss a “Teacher Assistant” method used to improve this technique. I recently read about this in a research paper.

Let’s begin!

What is knowledge distillation?

As discussed earlier in this newsletter, the idea is to train a smaller/simpler model (called the “student” model) that mimics the behavior of a larger/complex model (called the “teacher” model):

This involves two steps:

- Train the teacher model as we typically would.

- Train a student model that matches the output of the teacher model.

If we compare it to an academic teacher-student scenario, the student may not be as performant as the teacher. But with consistent training, a smaller model may get (almost) as good as the larger one.

A classic example of a model developed in this way is DistillBERT. It is a student model of BERT.

- DistilBERT is approximately 40% smaller than BERT, which is a massive difference in size.

- Still, it retains approximately 97% of the BERT’s capabilities.

If you want to get into more detail about the implementation, we covered it here, with 5 more techniques for model compression: Model Compression: A Critical Step Towards Efficient Machine Learning.

An issue with knowledge distillation

Quite apparently, we always desire the student model to be as small as possible while retaining the maximum amount of knowledge, correct?

However, in practice, two things are observed:

- For a given size of the student model, there is a limit on the size of the teacher model it can learn from.

- For a given size of the teacher model, one can effectively transfer knowledge to student models only up to a certain size, not lower.

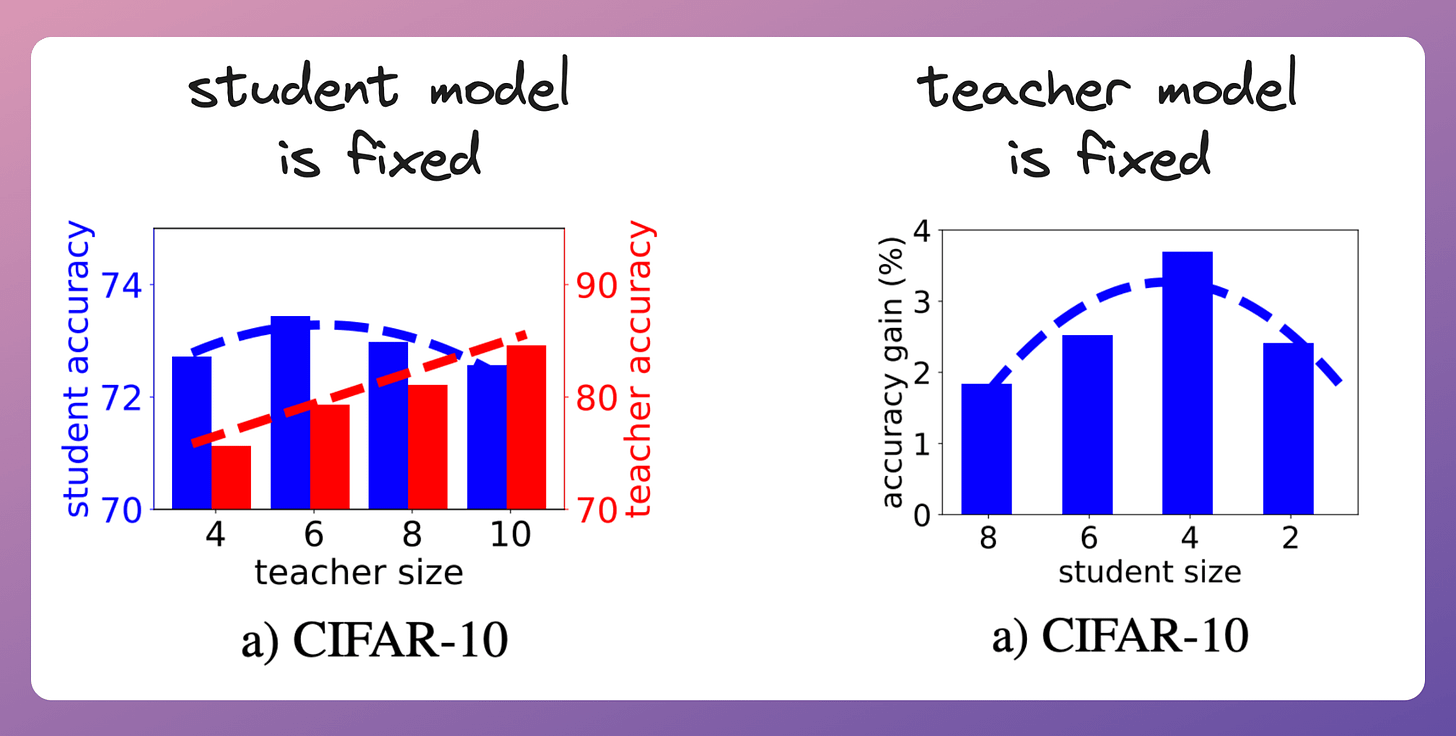

This is evident from the plots below on the CIFAR-10 dataset:

- In the left plot, the student model’s size is fixed (2 CNN layers). Here, we notice that as the size of the teacher model increases, the accuracy of the student model first increases and then decreases.

- In the right plot, the teacher model’s size is fixed (10 CNN layers). As the student model’s size decreases, the student model’s accuracy gain over another student model of the same size trained without knowledge distillation first increases and then decreases.

Both plots suggest that the knowledge distillation can only be effective within a specific range of model sizes.

Solution

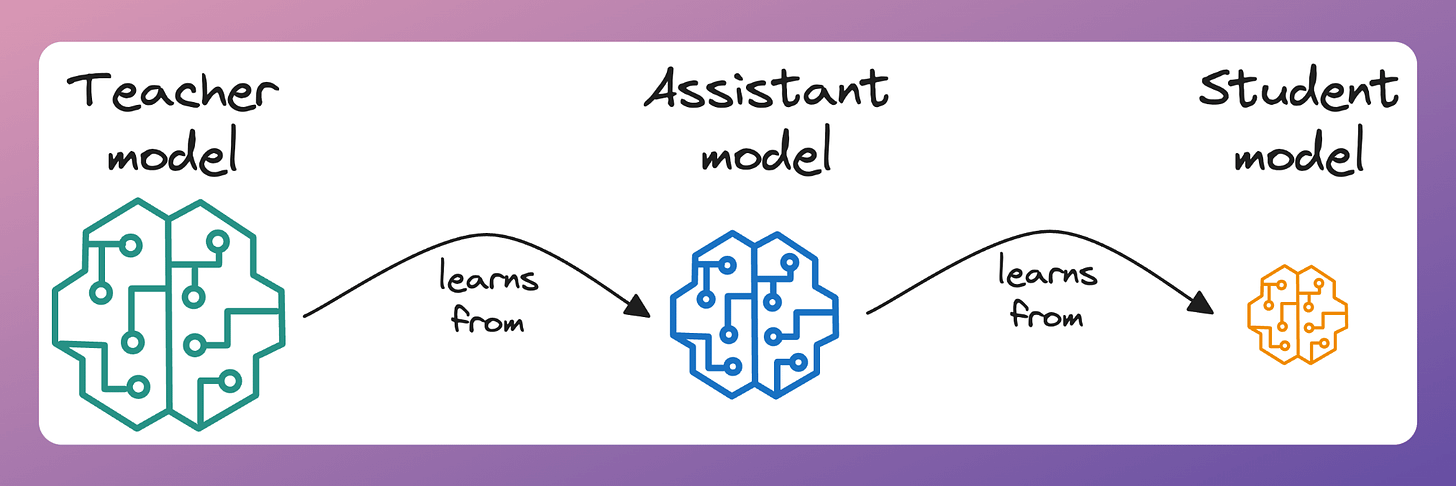

A simple technique to address this is by introducing an intermediate model called the “teacher assistant.”

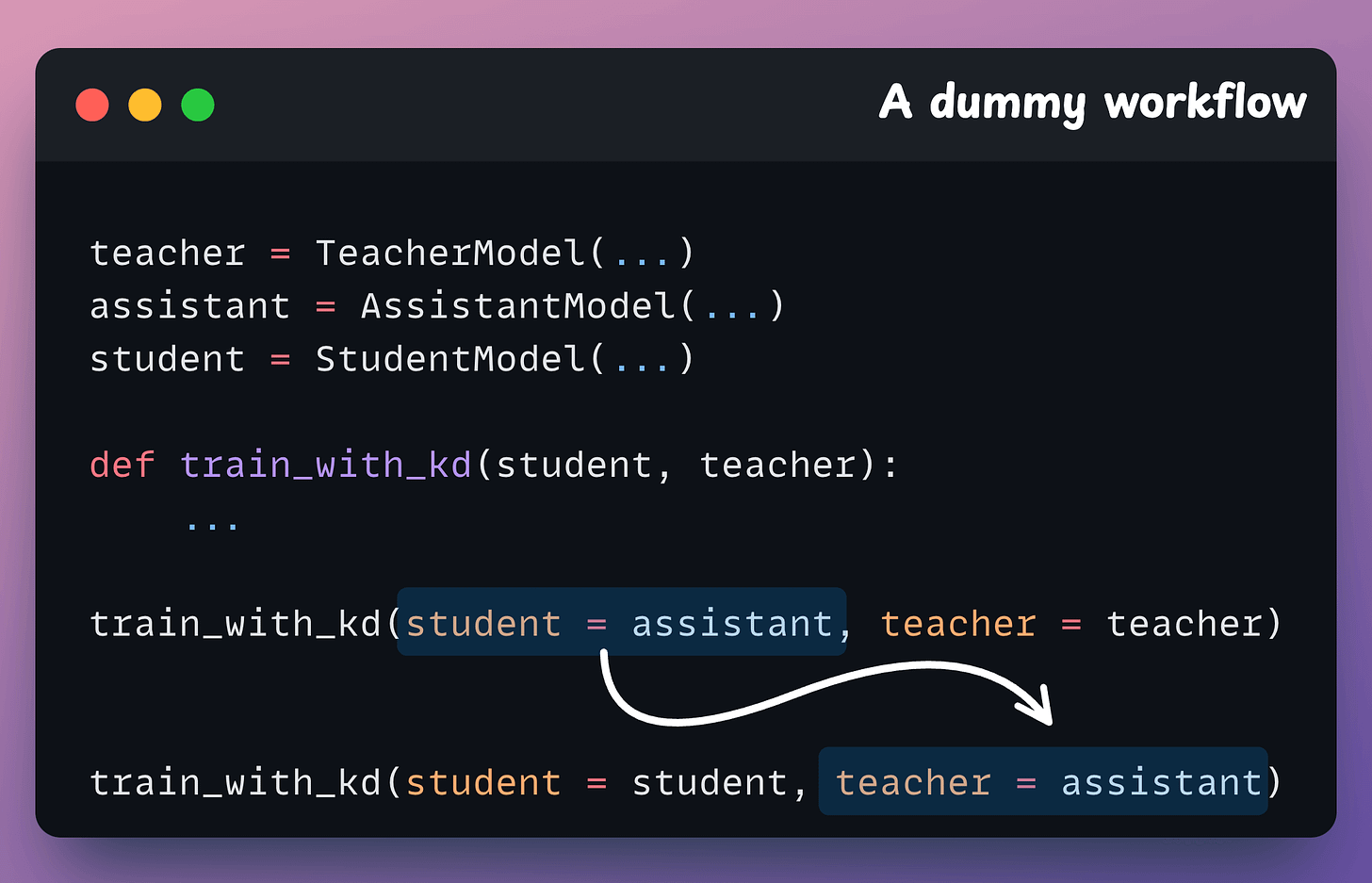

The process is simple, as depicted in the image below:

- Step 1: The assistant model learns from the teacher model using the standard knowledge distillation process.

- Step 2: The student model learns from the assistant model using the standard knowledge distillation process.

Yet again, this resonates with an actual academic setting—a teaching assistant often acts as an intermediary between a highly skilled professor and an amateur junior student.

The teaching assistant has a closer understanding of the professor’s complex material and is simultaneously more familiar with the learning pace of the junior students and their beginner mindset.

Of course, this adds a layer of additional training in the model building process.

However, given that development environments are usually not restricted in terms of the available computing resources, this technique can significantly enhance the performance and efficiency of the final student model.

Moreover, the cost of running the model in production can grow exponentially with demand, but relative to that, training costs still remain low, so the idea is indeed worth pursuing.

Results

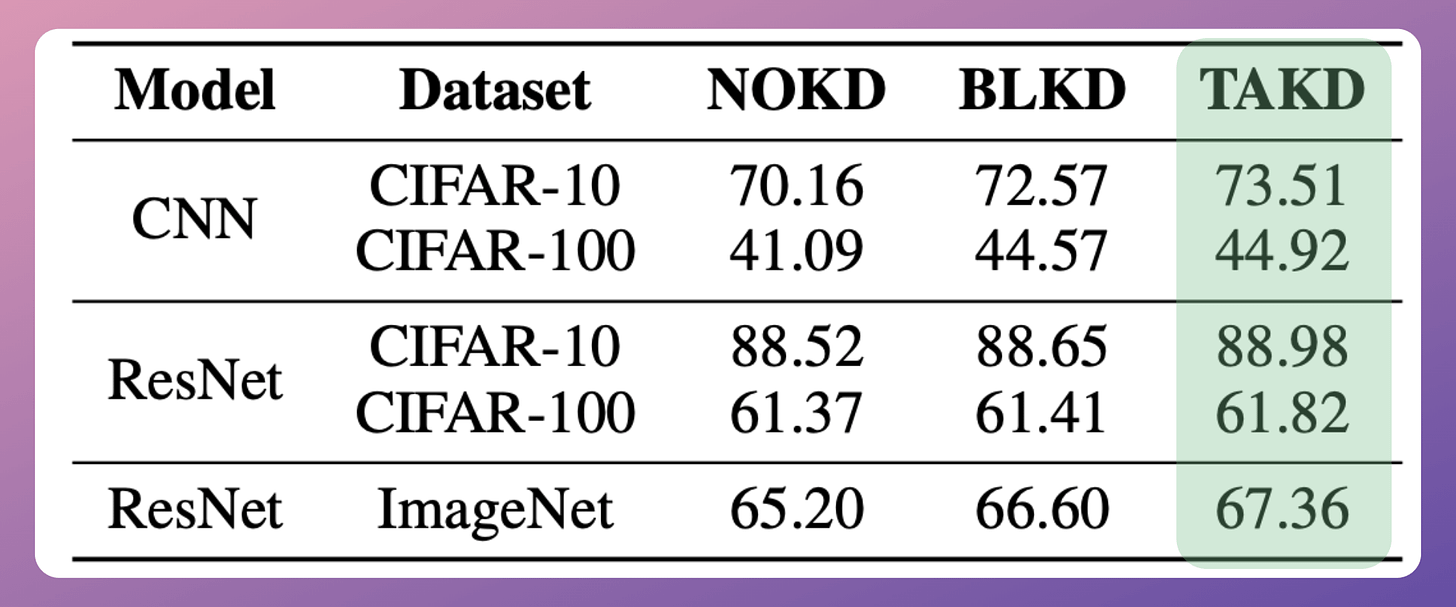

The efficacy is evident from the image below:

- NOKD → Training a model of student’s size directly.

- BLKD → Training the student model from the teacher model.

- TAKD → Training the student model using the teacher assistant.

In all cases, utilizing the teacher assistant works better than the other two approaches.

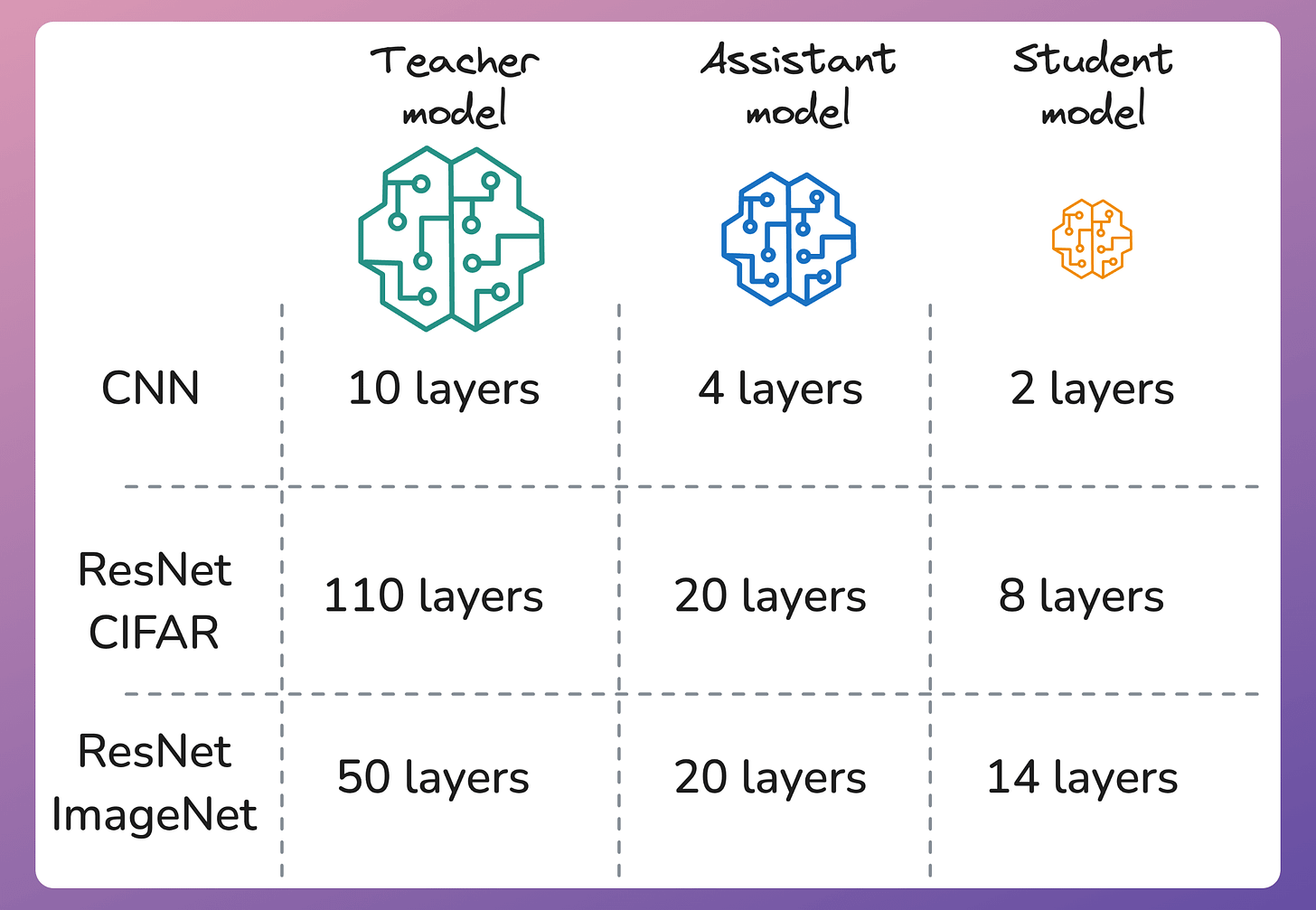

Here are the model configurations in the above case:

One interesting observation in these results is that the assistant model does not have to be significantly similar in size to the teacher model. For instance, in the above image, the assistant model is more than 50% smaller in all cases than the teacher model.

The authors even tested this.

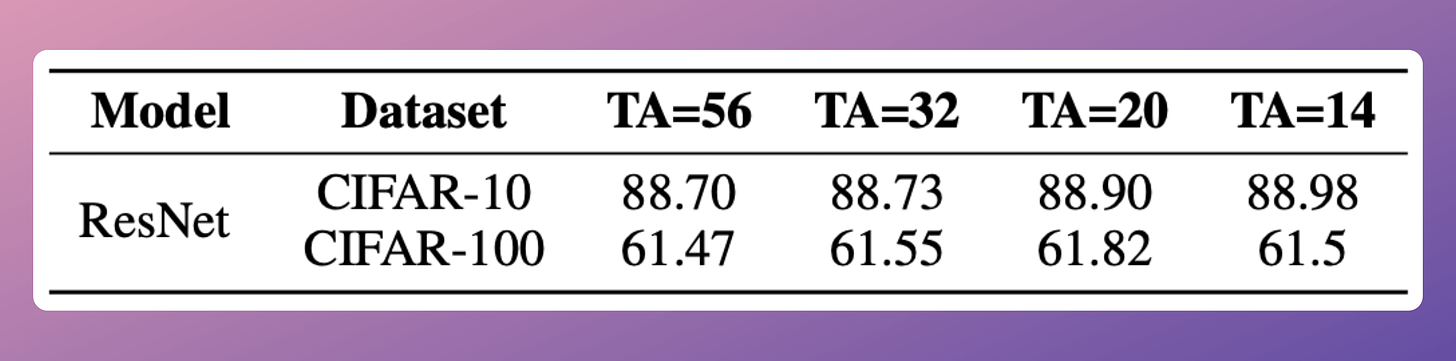

Consider the table below, wherein the size of the assistant model is varied while the size of the student and teacher models is fixed. The numbers reported are the accuracy of the student model in each case.

As depicted above, the difference in accuracy with a 56-layer assistant model is quite close (and even better once) to the accuracy with a 4 times smaller assistant model (14-layers).

Impressive, right?

Since we have already covered the implementation before (read this for more info), here’s a quick summary of what this would look like:

train_with_kd is assumed to be a user-defined function that trains a student model from a teacher model.Hope you learned something new today!

That said, if ideas related to production and deployment intimidate you, below, we have a quick roadmap to upskill you (assuming you know how to train a model).

👉 Over to you: What are some other ways to compress ML models?

ROADMAP

From local ML to production ML

Once a model has been trained, we move to productionizing and deploying it.

If ideas related to production and deployment intimidate you, here’s a quick roadmap for you to upskill (assuming you know how to train a model):

- First, you would have to compress the model and productionize it. Read these guides:

- Reduce their size with Model Compression techniques.

- Supercharge PyTorch Models With TorchScript.

- If you use sklearn, learn how to optimize them with tensor operations.

- Next, you move to deployment. Here’s a beginner-friendly hands-on guide that teaches you how to deploy a model, manage dependencies, set up model registry, etc.

- Although you would have tested the model locally, it is still wise to test it in production. There are risk-free (or low-risk) methods to do that. Learn what they are and how to implement them here.

This roadmap should set you up pretty well, even if you have NEVER deployed a single model before since everything is practical and implementation-driven.

THAT'S A WRAP

No-Fluff Industry ML resources to

Succeed in DS/ML roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Drive revenue?

- Can you scale ML models?

- Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with such topics.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust using Conformal Predictions.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.

SPONSOR US

Advertise to 600k+ data professionals

Our newsletter puts your products and services directly in front of an audience that matters — thousands of leaders, senior data scientists, machine learning engineers, data analysts, etc., around the world.