TODAY'S ISSUE

TODAY’S DAILY DOSE OF DATA SCIENCE

Building a Multi-agent Financial Analyst

Lately, we have done quite a few demos where we built multi-agent systems (we’ll link them towards the end of this issue).

Today, let’s do another one: we’ll build a multi-agent financial analyst using Microsoft’s Autogen and Llama 3-70B.

Here’s our tech stack for this demo:

- Microsoft’s Autogen, an open-source framework for building AI agent systems.

- Qualcomm’s Cloud AI 100 Ultra for serving Llama 3-70B.

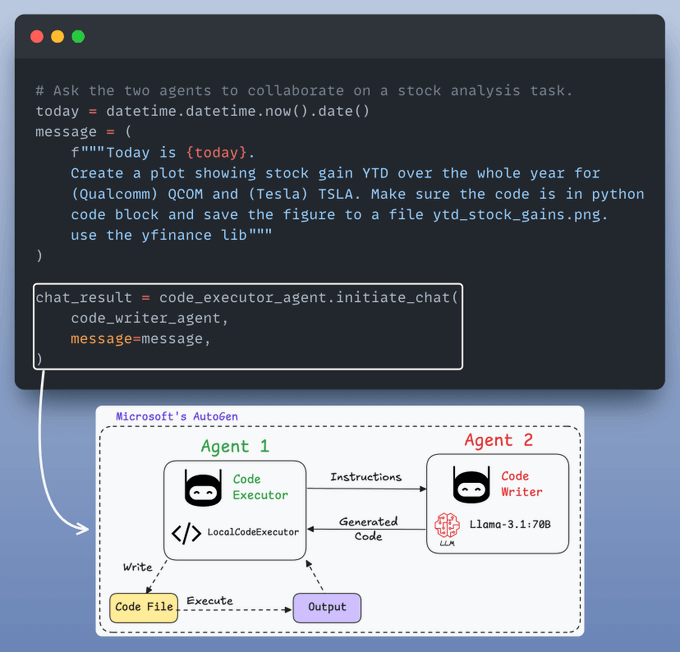

We’ll have two agents in this multi-agent app:

- Code executor agent → Orchestrates code execution as follows:

  - Accepts the user query

  - Collaborates with the code writer agent (discussed below)

  - Executes the code locally

  - Collects the final results

- Code writer agent → Uses an LLM to generate code based on user instructions and collaborates with the code executor agent.

If you prefer to watch, we have added a video demo below.

It shows what we’re building today and includes a quick walkthrough of Qualcomm’s playground to help you get started.

Prerequisites

Get your API keys and free playground access to run Llama 3.1-8B and 70B here →

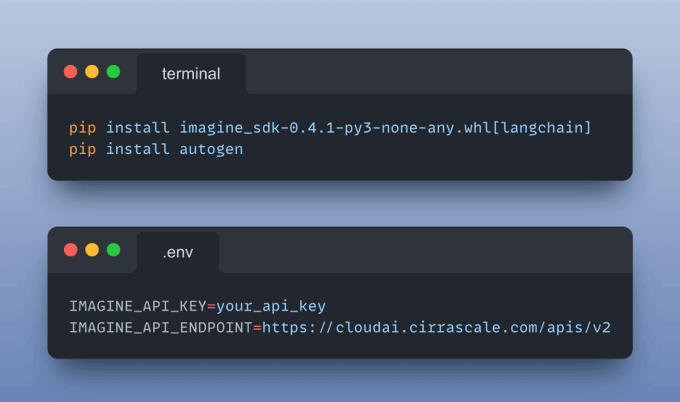

Next, install the following dependencies and add the API keys you obtained from the playground to a .env file.
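The .env file only matters because its entries end up as environment variables that the rest of the code reads. That step is typically handled by a package such as python-dotenv (its load_dotenv function); to show what it amounts to, here is a minimal stdlib-only sketch. The function name load_env_file and the variable name CLOUD_AI_API_KEY are hypothetical placeholders, not necessarily what the repo uses.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env", override: bool = False) -> dict:
    """Minimal sketch: parse KEY=VALUE lines from a .env file into os.environ.

    Hypothetical helper for illustration; real projects usually rely on
    python-dotenv, which handles many more edge cases.
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded  # nothing to load; rely on variables already set
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and lines without an "=" sign
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key = key.strip()
        if key.startswith("export "):
            key = key[len("export "):].strip()
        value = value.strip()
        # Drop one pair of matching surrounding quotes, if present
        if len(value) >= 2 and value[0] == value[-1] and value[0] in {"'", '"'}:
            value = value[1:-1]
        loaded[key] = value
        # Do not clobber variables that are already set unless asked to
        if override or key not in os.environ:
            os.environ[key] = value
    return loaded
```

Usage would look like load_env_file() followed by os.environ["CLOUD_AI_API_KEY"] (hypothetical name). Keeping existing variables by default lets a key exported in the shell take precedence over the file.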

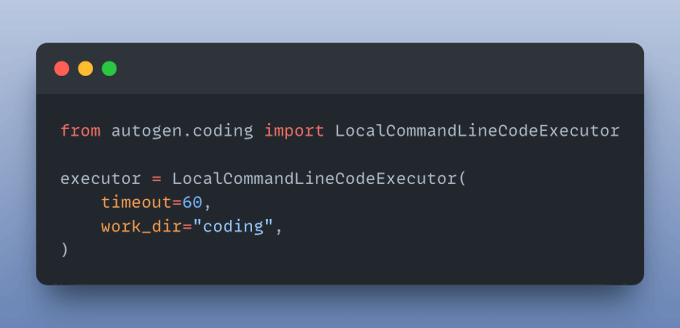

Define code executor

The LocalCommandLineCodeExecutor runs the AI-generated code and saves all files and results in the specified directory.
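To make the executor's role concrete, here is a framework-free sketch of what a local command-line executor does: write each snippet to a file in a working directory, run it with the local Python interpreter from that directory, and capture the output. This is not Autogen's implementation; the names SimpleLocalExecutor and ExecutionResult and the default directory "coding" are hypothetical, though the work_dir and timeout parameters mirror ones that LocalCommandLineCodeExecutor accepts.

```python
import subprocess
import sys
import uuid
from dataclasses import dataclass
from pathlib import Path


@dataclass
class ExecutionResult:
    exit_code: int
    output: str
    code_file: Path


class SimpleLocalExecutor:
    """Hypothetical sketch of a local command-line code executor.

    Each snippet is saved inside work_dir and run with work_dir as the
    current directory, so files the code creates (CSVs, charts) land there.
    """

    def __init__(self, work_dir: str = "coding", timeout: int = 60) -> None:
        self.work_dir = Path(work_dir)
        self.work_dir.mkdir(parents=True, exist_ok=True)
        self.timeout = timeout

    def execute(self, code: str) -> ExecutionResult:
        # Save the generated code so it can be inspected after the run
        code_file = self.work_dir / f"tmp_code_{uuid.uuid4().hex}.py"
        code_file.write_text(code, encoding="utf-8")
        try:
            proc = subprocess.run(
                [sys.executable, code_file.name],
                cwd=self.work_dir,
                capture_output=True,
                text=True,
                timeout=self.timeout,
            )
        except subprocess.TimeoutExpired:
            # 124 is an arbitrary "timed out" code, borrowed from GNU timeout
            return ExecutionResult(124, f"Timed out after {self.timeout}s", code_file)
        # Combine stdout and stderr so the writer agent also sees tracebacks
        return ExecutionResult(proc.returncode, proc.stdout + proc.stderr, code_file)
```

Running generated code directly on your machine is convenient for a demo, but it runs with your user's permissions. For untrusted code, a container is the safer choice; Autogen also ships a Docker-based command-line executor for this.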

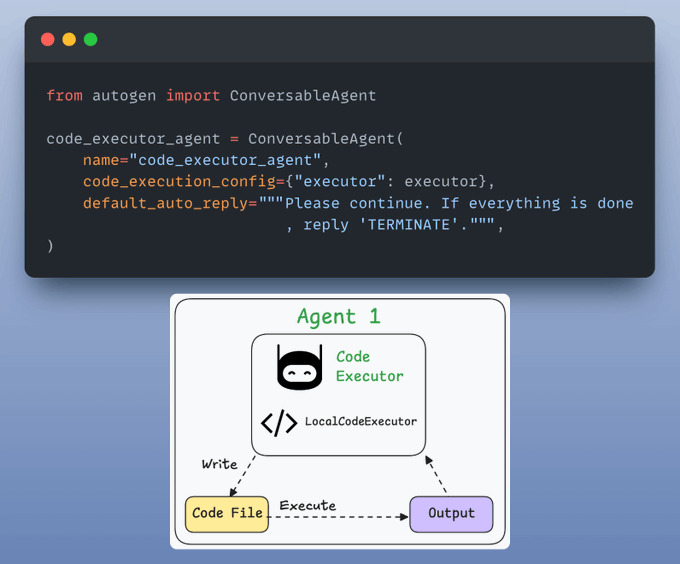

Define code executor agent

The code executor agent orchestrates code execution:

- Accepts the user query

- Collaborates with the code writer agent (implemented below)

- Executes the code locally using the code executor defined above

- Collects the final results
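Before the executor agent can run anything, it has to pull the code out of the writer's reply. Here is a minimal sketch of that step, assuming the LLM wraps its code in markdown fences. The pattern, the function name extract_code_blocks, and the choice to treat an untagged fence as Python are our own assumptions, not Autogen's internals.

```python
import re

# Matches a fence of three backticks, an optional language tag, then the body.
# `{3} is used instead of literal backticks so this file stays fence-safe.
CODE_BLOCK_PATTERN = re.compile(r"`{3}[ \t]*(\w*)[ \t]*\n(.*?)`{3}", re.DOTALL)


def extract_code_blocks(message: str) -> list:
    """Return (language, code) pairs for every fenced block in an LLM reply.

    Untagged fences are assumed to be Python (an illustrative default).
    """
    blocks = []
    for lang, code in CODE_BLOCK_PATTERN.findall(message):
        blocks.append((lang.lower() or "python", code))
    return blocks
```

Returning the language tag lets the executor decide how to run each block, for example with the Python interpreter for python and a shell for sh.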

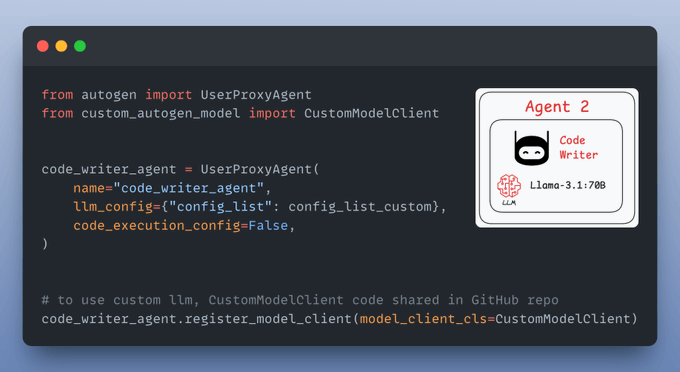

Define code writer agent

The code writer agent uses an LLM to generate code from the user's instructions and collaborates with the code executor agent.
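The writer agent needs to know which model to call and where. Autogen agents take an llm_config dict whose config_list holds entries with model, api_key, and base_url, which is how an OpenAI-compatible endpoint such as a hosted Llama 3-70B is typically wired in. Here is a small sketch that builds such a dict from environment variables; the model id and both variable names are hypothetical placeholders, so use the values your provider's playground shows.

```python
import os


def build_llm_config(
    model: str = "llama-3-70b",  # hypothetical model id
    api_key_var: str = "CLOUD_AI_API_KEY",  # hypothetical env var name
    base_url_var: str = "CLOUD_AI_BASE_URL",  # hypothetical env var name
) -> dict:
    """Sketch: build an llm_config dict in the config_list shape Autogen accepts."""
    api_key = os.environ.get(api_key_var)
    base_url = os.environ.get(base_url_var)
    if not api_key or not base_url:
        # Fail early with a clear message instead of at the first LLM call
        raise RuntimeError(f"Set {api_key_var} and {base_url_var}, e.g. in your .env file")
    return {"config_list": [{"model": model, "api_key": api_key, "base_url": base_url}]}
```

With Autogen installed, a dict like this is what you would pass as llm_config when creating the writer agent, for example AssistantAgent("code_writer_agent", llm_config=build_llm_config()).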

Almost done!

Start stock analysis

Finally, we provide a query and let the agents collaborate:
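Under the hood, "letting the agents collaborate" is a loop: the writer proposes code, the executor runs it and sends the output back, and the exchange continues until the writer signals it is done. Here is a framework-free sketch of that loop. The writer is any callable standing in for the LLM call, the TERMINATE keyword mirrors the convention Autogen's default assistant prompt uses, and the names two_agent_chat and run_python are hypothetical.

```python
import re
import subprocess
import sys
from typing import Callable

# Three backticks, optional language tag, then the code body (non-greedy).
FENCE_RE = re.compile(r"`{3}[ \t]*(\w*)[ \t]*\n(.*?)`{3}", re.DOTALL)


def run_python(code: str, work_dir: str, timeout: int = 60) -> str:
    """Run a Python snippet in work_dir and return exit code plus output."""
    proc = subprocess.run(
        [sys.executable, "-c", code],
        cwd=work_dir,
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return f"exitcode: {proc.returncode}\n{proc.stdout}{proc.stderr}"


def two_agent_chat(
    query: str,
    writer: Callable[[list], str],
    work_dir: str,
    max_turns: int = 10,
) -> list:
    """Alternate between a code writer (LLM stand-in) and a local executor."""
    history = [{"role": "user", "content": query}]
    for _ in range(max_turns):
        reply = writer(history)
        history.append({"role": "assistant", "content": reply})
        if "TERMINATE" in reply:
            break  # the writer says the task is complete
        blocks = [code for lang, code in FENCE_RE.findall(reply) if lang in ("", "python")]
        if not blocks:
            feedback = "No Python code block found. Reply TERMINATE if the task is done."
        else:
            feedback = "\n".join(run_python(code, work_dir) for code in blocks)
        # Execution output goes back to the writer as the next "user" turn
        history.append({"role": "user", "content": feedback})
    return history
```

The max_turns cap matters in practice: without it, a writer that keeps producing failing code would loop forever.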

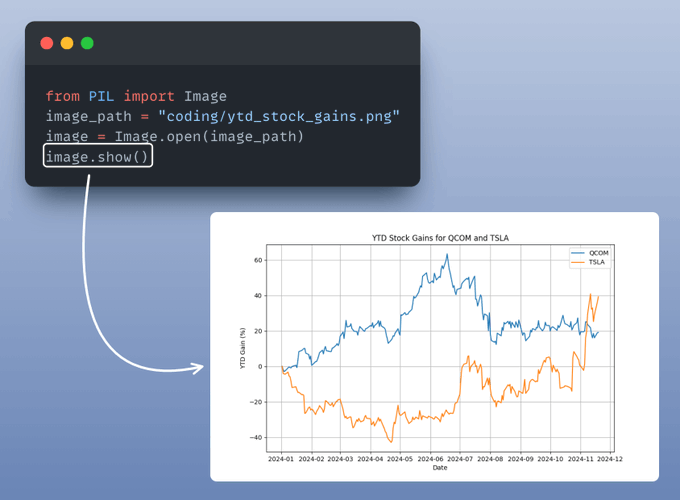

Display final results

Recall that we configured the LocalCommandLineCodeExecutor above to save all files and results in the specified directory.

Let’s display the stock analysis chart:
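Since the generated code saves its chart into the working directory, one way to find it is to pick the most recently modified image there. Here is a small sketch; the function name latest_chart and the default directory "coding" are hypothetical, so point it at whatever directory you configured for the executor.

```python
from pathlib import Path
from typing import Optional


def latest_chart(
    work_dir: str = "coding",  # hypothetical default; use your executor's work_dir
    suffixes: tuple = (".png", ".jpg", ".jpeg", ".svg"),
) -> Optional[Path]:
    """Return the most recently modified image file in work_dir, or None."""
    root = Path(work_dir)
    if not root.is_dir():
        return None
    images = [p for p in root.iterdir() if p.is_file() and p.suffix.lower() in suffixes]
    # max() with default=None handles an empty directory gracefully
    return max(images, key=lambda p: p.stat().st_mtime, default=None)
```

In a Jupyter notebook, you could then show it with IPython's display(Image(filename=str(path))).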

Perfect!

It produces the desired result.

You can find all the code and instructions to run in this GitHub repo: AI Engineering Hub.

We recently launched this repo, where we’ll publish the code for hands-on AI engineering issues like this one.

This repository will be dedicated to:

- In-depth tutorials on LLMs and RAGs.

- Real-world AI agent applications.

- Examples to implement, adapt, and scale in your projects.

Find it here: AI Engineering Hub (and do star it).

🙌 Also, a big thanks to Qualcomm for partnering with us and giving us access to one of the fastest LLM inference engines for today’s issue.

👉 Over to you: What other topics would you like to learn about?

IN CASE YOU MISSED IT

Prompting vs. RAG vs. Fine-tuning

If you are building real-world LLM-based apps, you will rarely be able to use a model as-is without adjustments. To achieve high utility, you typically need one of the following:

- Prompt engineering

- Fine-tuning

- RAG

- Or a hybrid approach (RAG + fine-tuning)

The following visual will help you decide which one is best for you:

Read more in-depth insights into Prompting vs. RAG vs. Fine-tuning here →

ROADMAP

From local ML to production ML

Once a model has been trained, we move to productionizing and deploying it.

If ideas related to production and deployment intimidate you, here’s a quick roadmap for you to upskill (assuming you know how to train a model):

- First, compress and productionize the model. Read these guides:

  - Reduce model size with Model Compression techniques.

  - Supercharge PyTorch Models With TorchScript.

  - If you use sklearn, learn how to optimize its models with tensor operations.

- Next, move to deployment. Here’s a beginner-friendly, hands-on guide that teaches you how to deploy a model, manage dependencies, set up a model registry, etc.

- Although you will have tested the model locally, it is still wise to test it in production. There are risk-free (or low-risk) methods to do that. Learn what they are and how to implement them here.

This roadmap should set you up well, even if you have NEVER deployed a single model before, since everything is practical and implementation-driven.

THAT'S A WRAP

No-Fluff Industry ML Resources to Succeed in DS/ML Roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Can you drive revenue?

- Can you scale ML models?

- Can you predict trends before they happen?

In the past, we have covered several topics (with implementations) that align with these goals.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to use Conformal Prediction to generate prediction intervals or sets with strong statistical guarantees, which increases trust in your models.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate the skills businesses care about most.

SPONSOR US

Advertise to 600k+ data professionals

Our newsletter puts your products and services directly in front of an audience that matters — thousands of leaders, senior data scientists, machine learning engineers, data analysts, etc., around the world.