4 Ways to Run LLMs Locally

Llama-3, DeepSeek, Phi, and many many more.

Llama-3, DeepSeek, Phi, and many many more.

TODAY'S ISSUE

Being able to run LLMs also has many upsides:

Here are four ways to run LLMs locally.

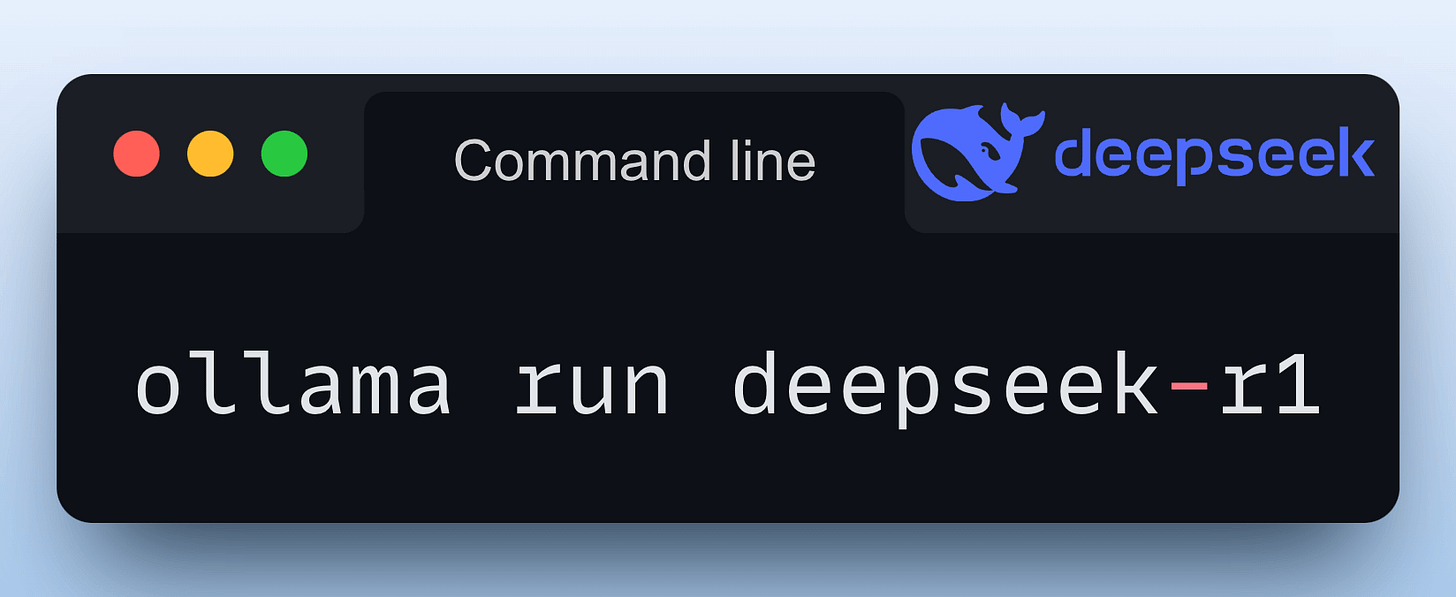

Running a model through Ollama is as simple as executing this command:

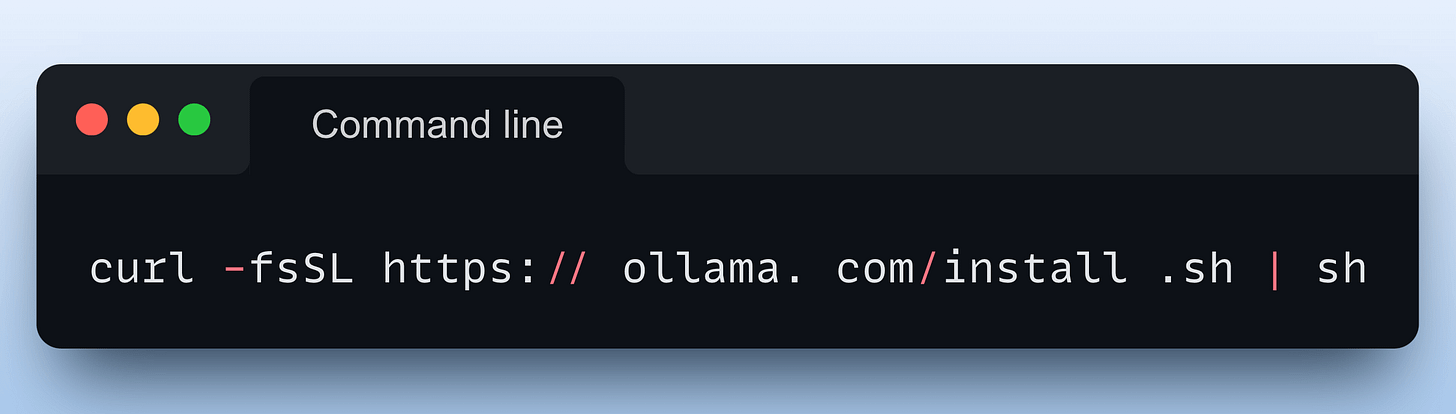

To get started, install Ollama with a single command:

Done!

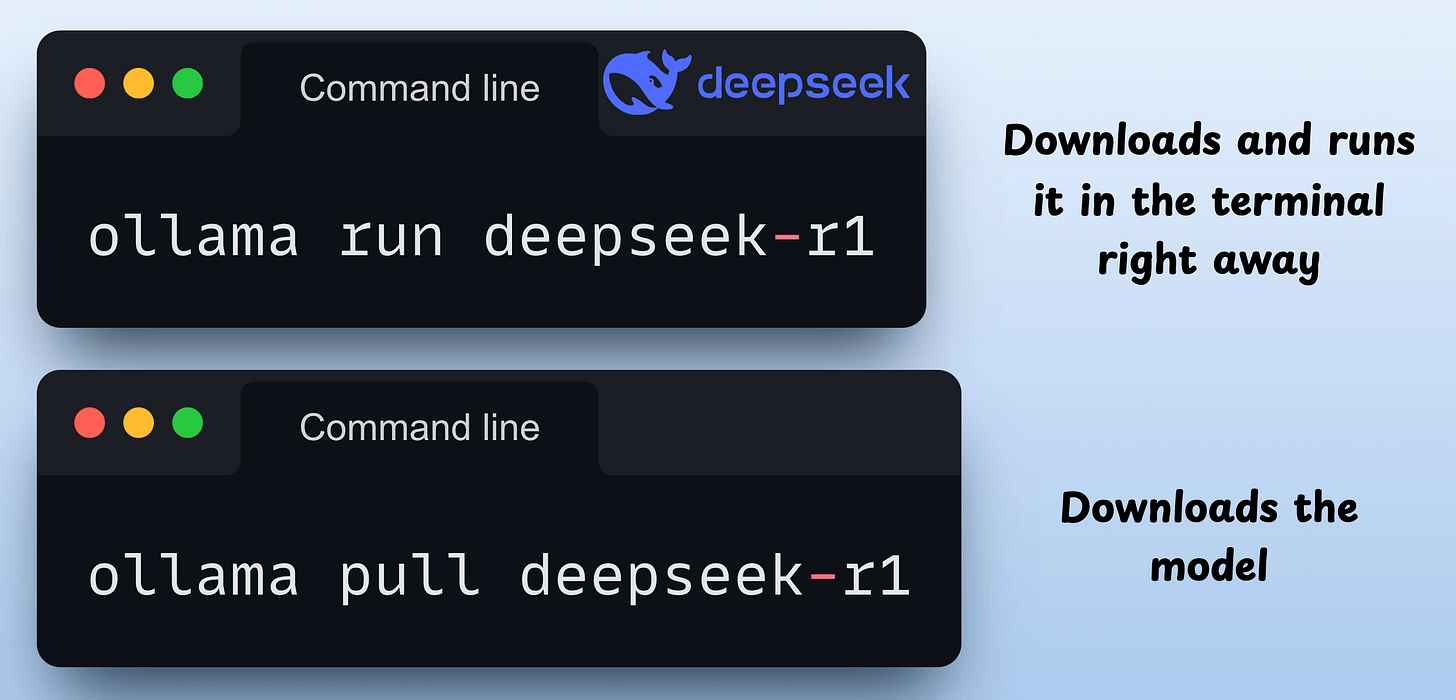

Now, you can download any of the supported models using these commands:

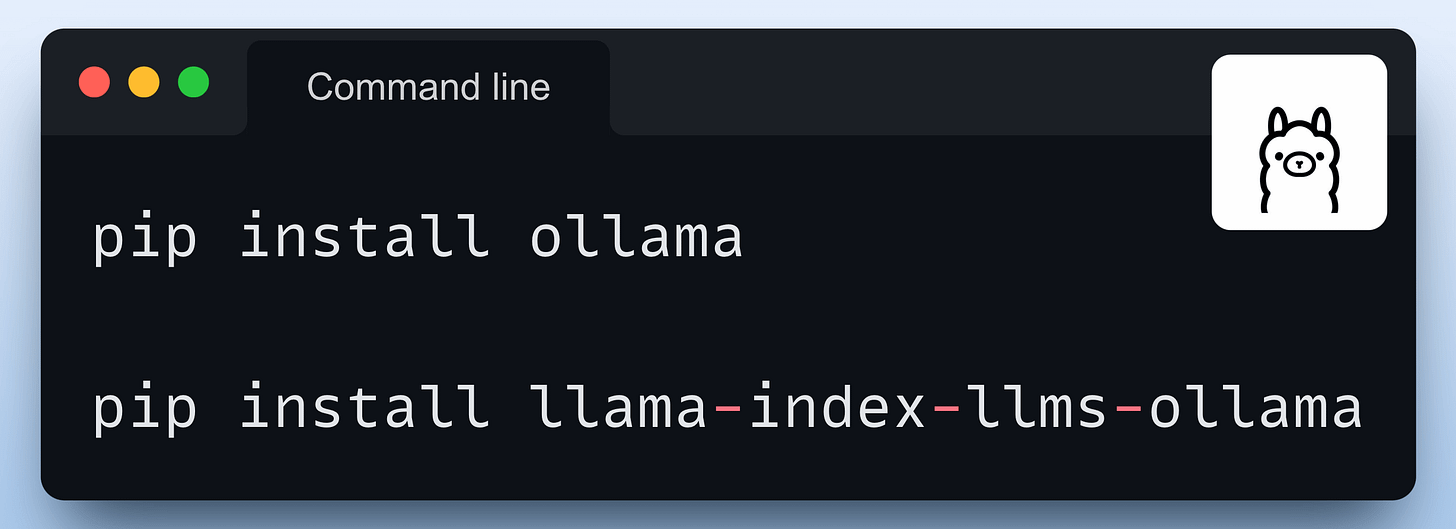

For programmatic usage, you can also install the Python package of Ollama or its integration with orchestration frameworks like Llama Index or CrewAI:

We heavily used Ollama in our RAG crash course if you want to dive deeper.

The video below shows the usage of ollama run deepseek-r1 command:

LMStudio can be installed as an app on your computer.

The app does not collect data or monitor your actions. Your data stays local on your machine. It’s free for personal use.

It offers a ChatGPT-like interface, allowing you to load and eject models as you chat. This video shows its usage:

Just like Ollama, LMStudio supports several LLMs as well.

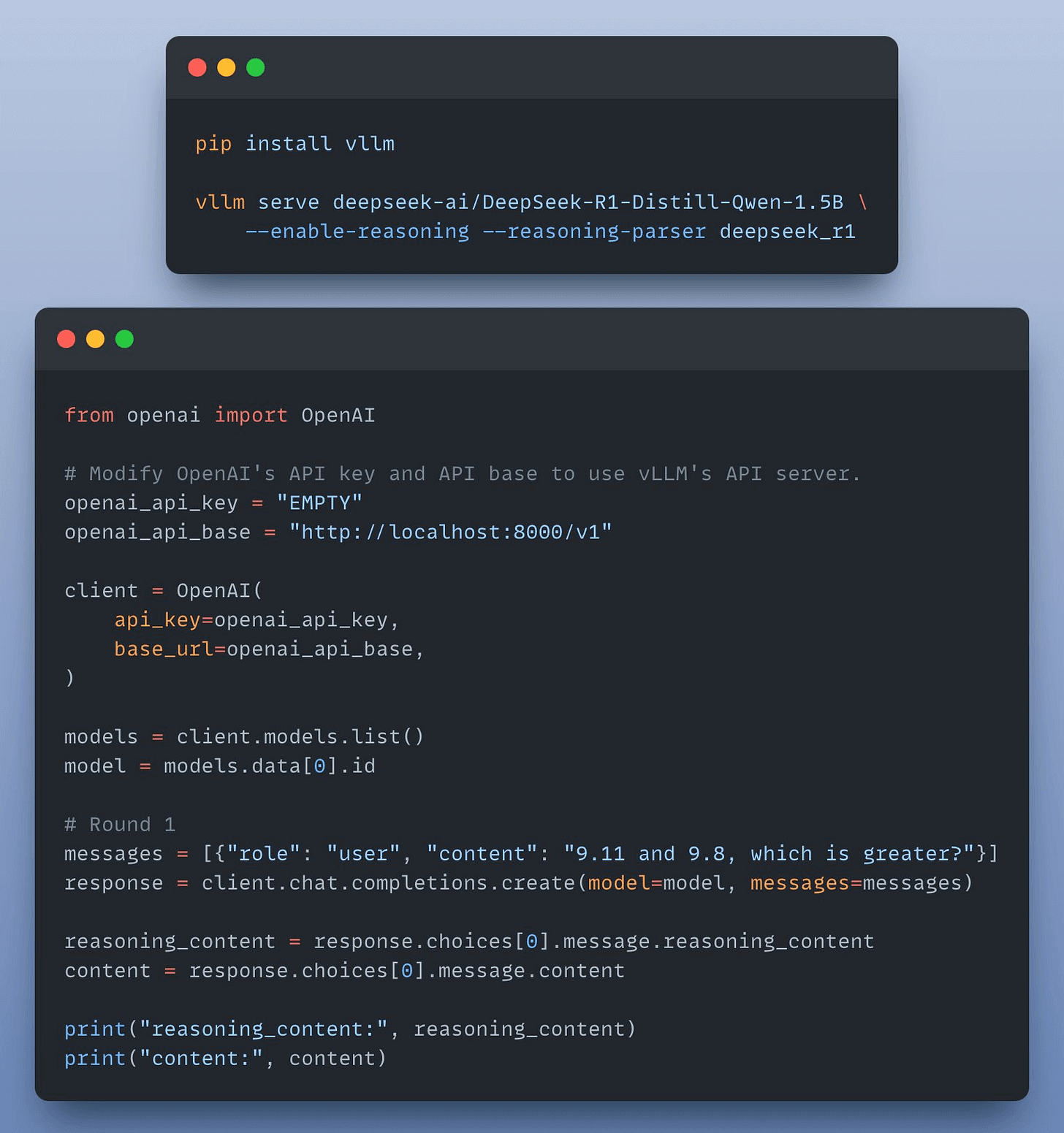

vLLM is a fast and easy-to-use library for LLM inference and serving.

With just a few lines of code, you can locally run LLMs (like DeepSeek) in an OpenAI-compatible format:

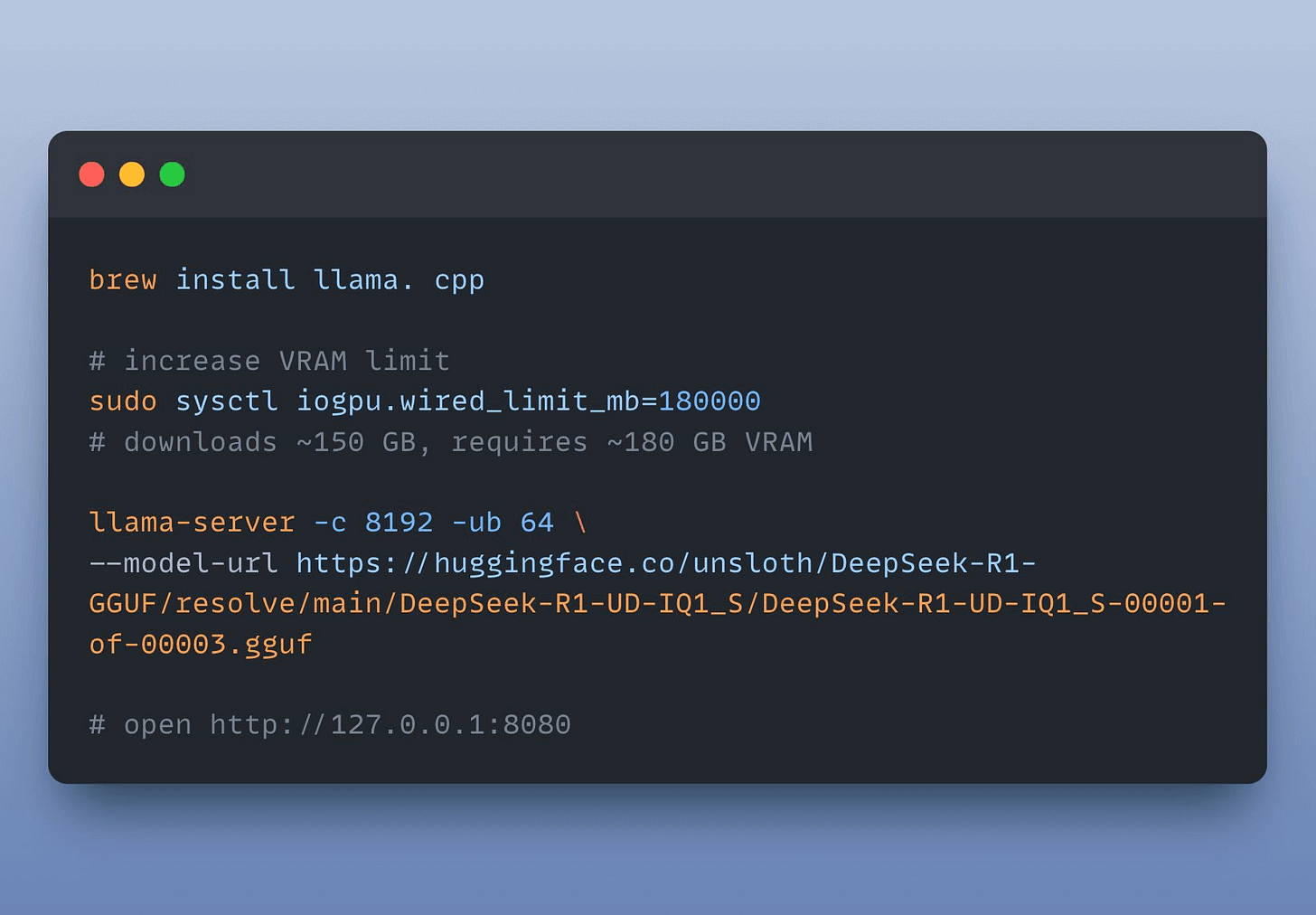

LlamaCPP enables LLM inference with minimal setup and good performance.

Here’s DeepSeek-R1 running on a Mac Studio:

And these were four ways to run LLMs locally on your computer.

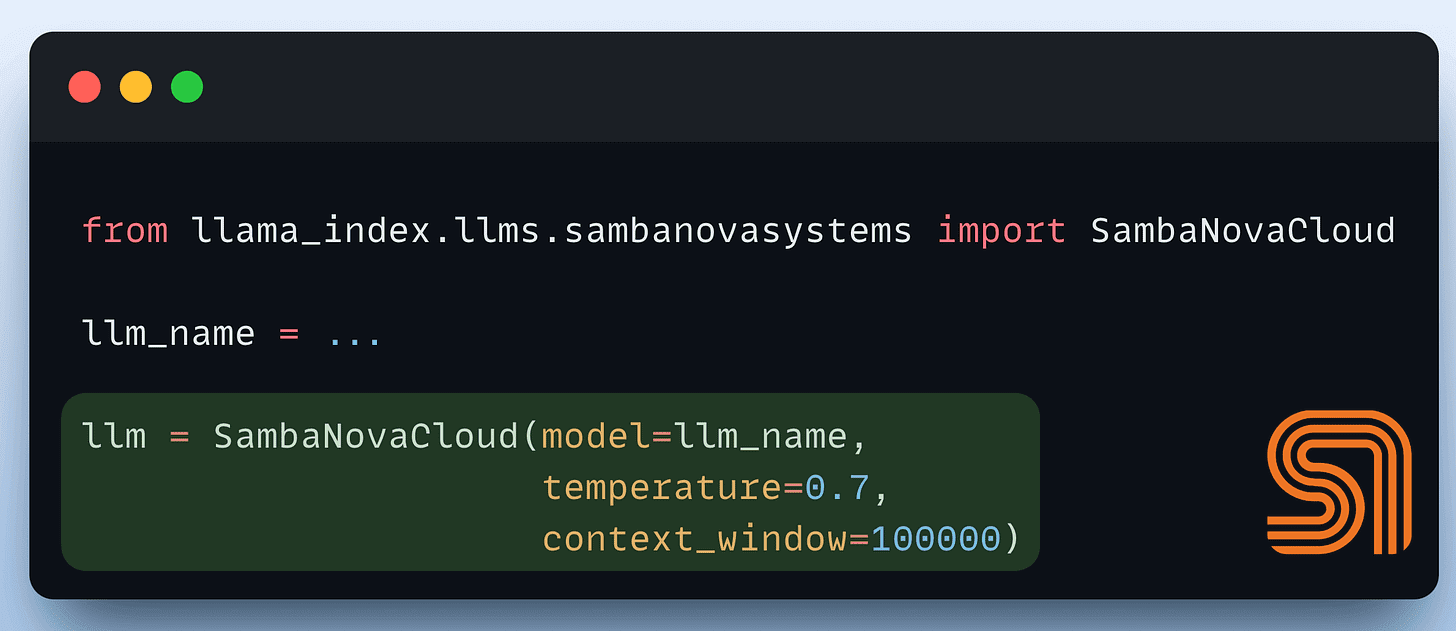

If you don’t want to get into the hassle of local setups, SambaNova’s fastest inference can be integrated into your existing LLM apps in just three lines of code:

Also, if you want to dive into building LLM apps, our full RAG crash course discusses RAG from basics to beyond:

👉 Over to you: Which method do you find the most useful?

Thanks for reading!

Consider the size difference between BERT-large and GPT-3:

I have fine-tuned BERT-large several times on a single GPU using traditional fine-tuning:

But this is impossible with GPT-3, which has 175B parameters. That's 350GB of memory just to store model weights under float16 precision.

This means that if OpenAI used traditional fine-tuning within its fine-tuning API, it would have to maintain one model copy per user:

And the problems don't end there:

LoRA (+ QLoRA and other variants) neatly solved this critical business problem.