TODAY'S ISSUE

TODAY’S DAILY DOSE OF DATA SCIENCE

[Hands-on] Building a Real-Time AI Voice Bot

AssemblyAI has always been my go-to for building speech-driven AI applications.

It’s an AI transcription platform that provides state-of-the-art AI models for any task related to speech & audio understanding.

Today, let’s build the following real-time transcription apps with AssemblyAI.

The workflow is depicted below, and the video above shows the final outcome.

- AssemblyAI for transcribing speech input from the user [speech-to-text].

- OpenAI for generating response [text-to-text].

- ElevenLabs for speech generation [text-to-speech].

The entire code is available here: Voice Bot Demo GitHub.

Let’s build the app!

VOICE BOT APP

Prerequisites and Imports

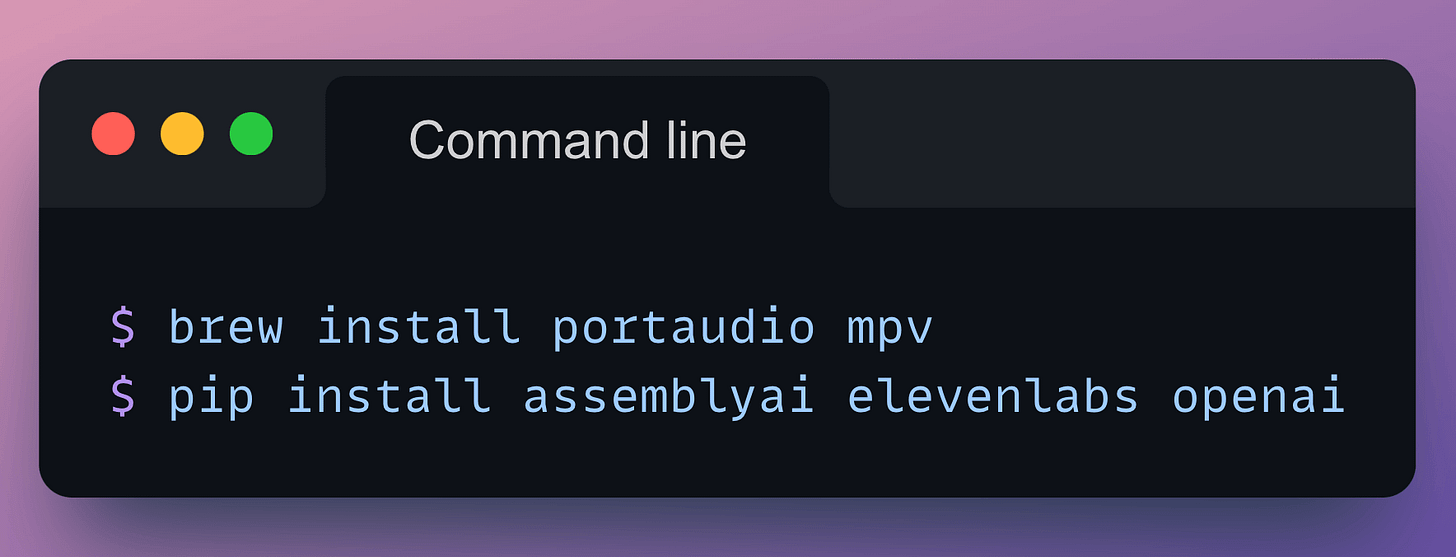

Start by installing the following libraries:

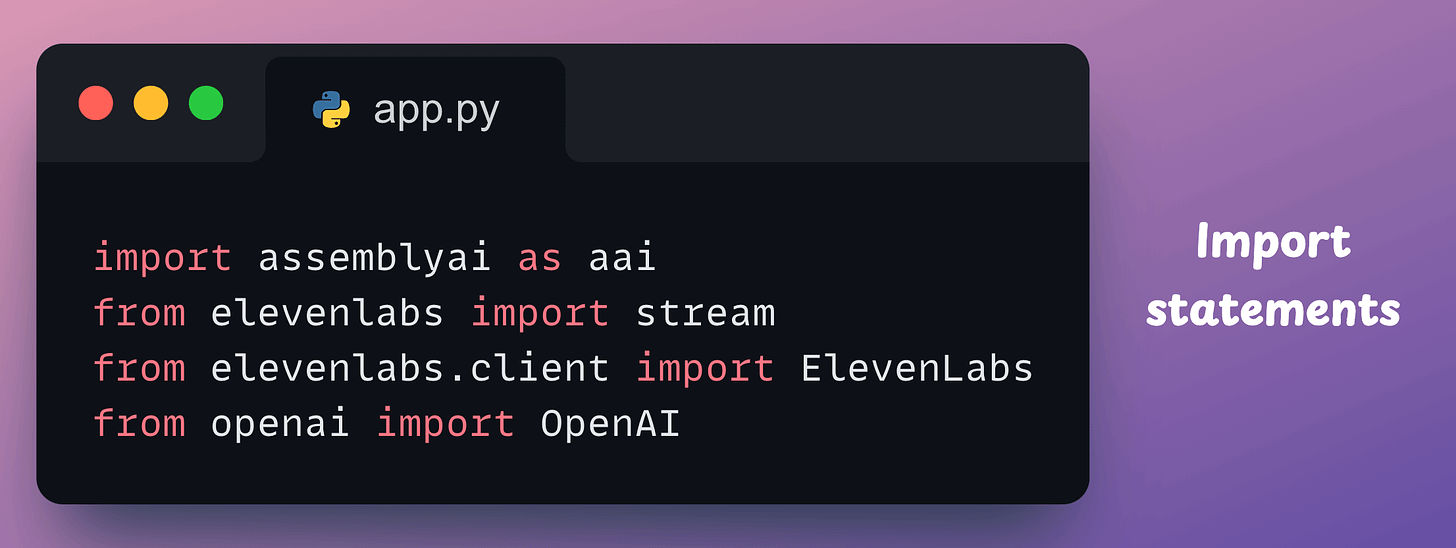

Next, create a file app.py and import the following libraries:

VOICE BOT APP

Implementation

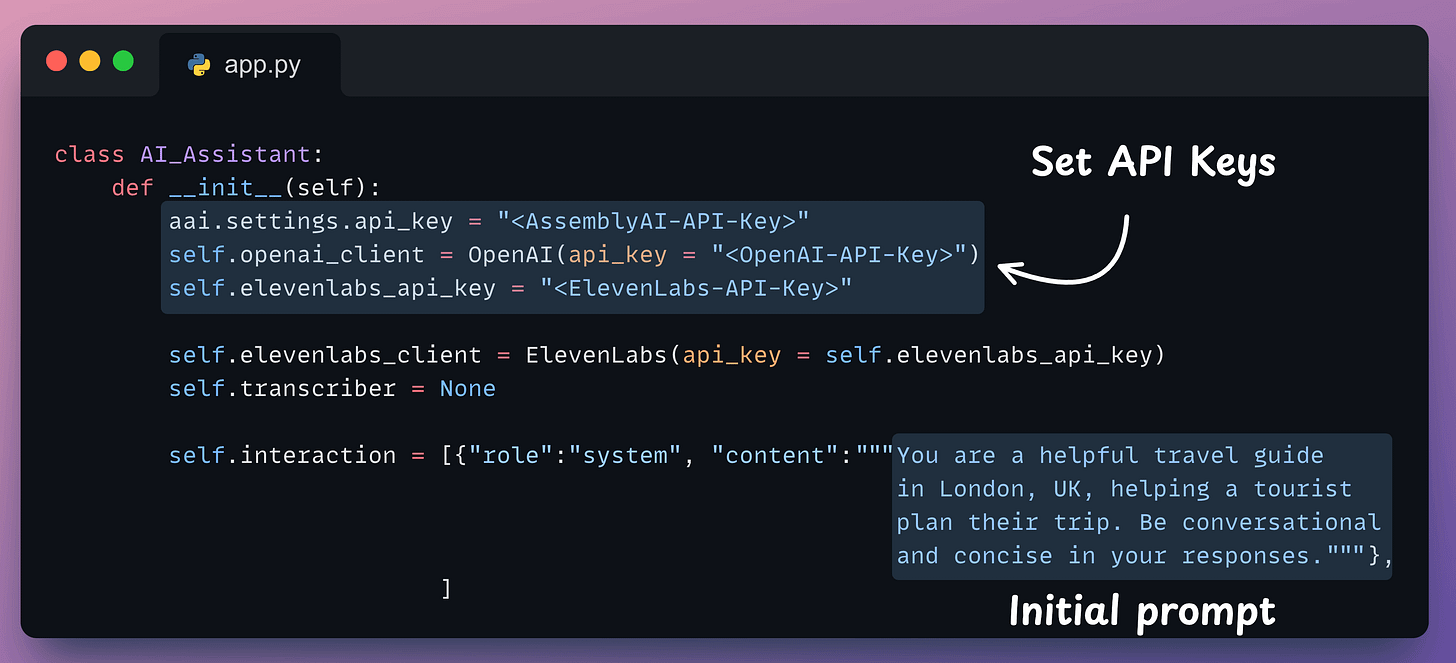

Next, we define a class, initialize the clients involved in our app—AssemblyAI, OpenAI, and ElevenLabs, and the interaction history:

- Get the API key for AssemblyAI here →

- Get the API key for OpenAI here →

- Get the API key for ElevenLabs here →

Now think about the logical steps we would need to implement in this app:

- The voice bot will speak and introduce itself.

- Then, the user will speak, and AssemblyAI will transcribe it in real-time.

- The transcribed input will be sent to OpenAI to generate a text response.

- ElevenLabs will then verbalize the response.

- Back to Step 2, all while maintaining the interaction history in the

self.interactionobject defined in the__init__method so that OpenAI has the entire context while producing a response in Step 3.

Thus, we need at least four more methods in AI_Assistant class:

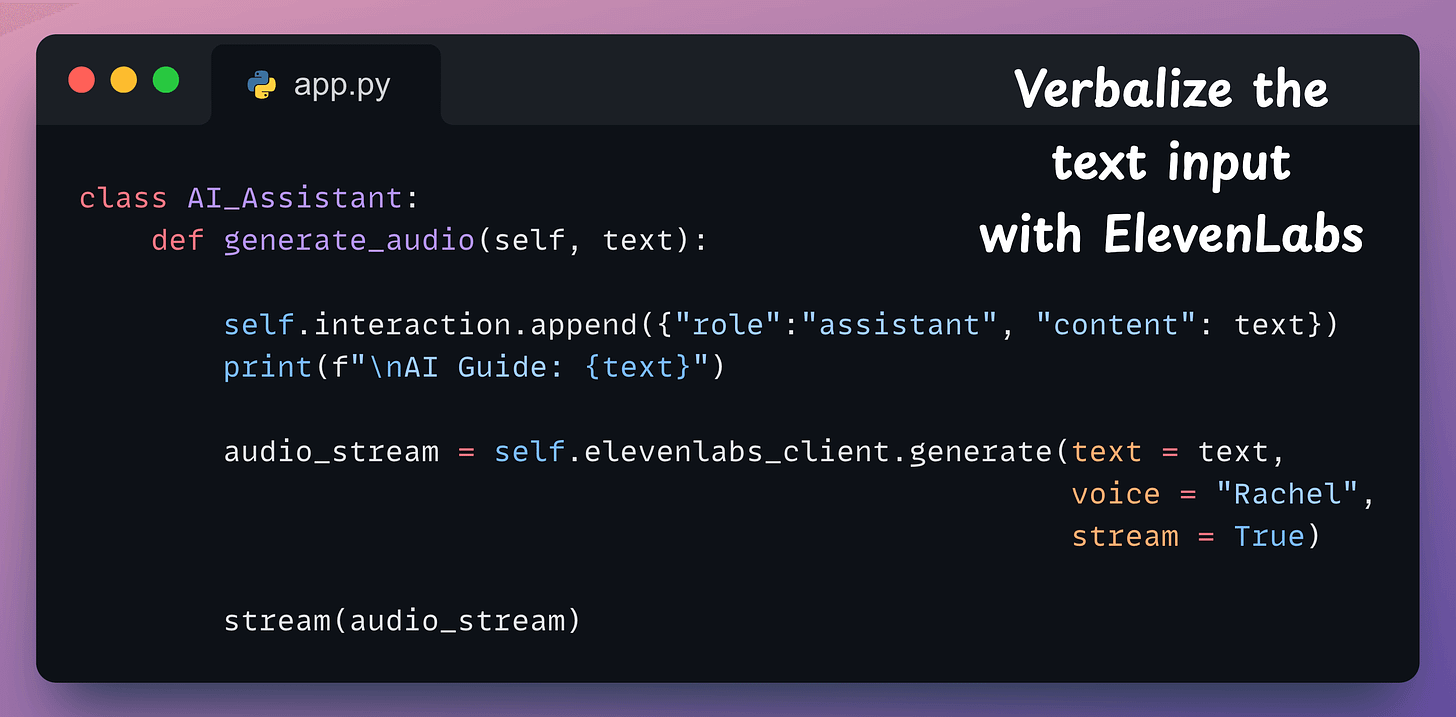

generate_audio→ Accepts some text and uses ElevenLabs to verbalize it:

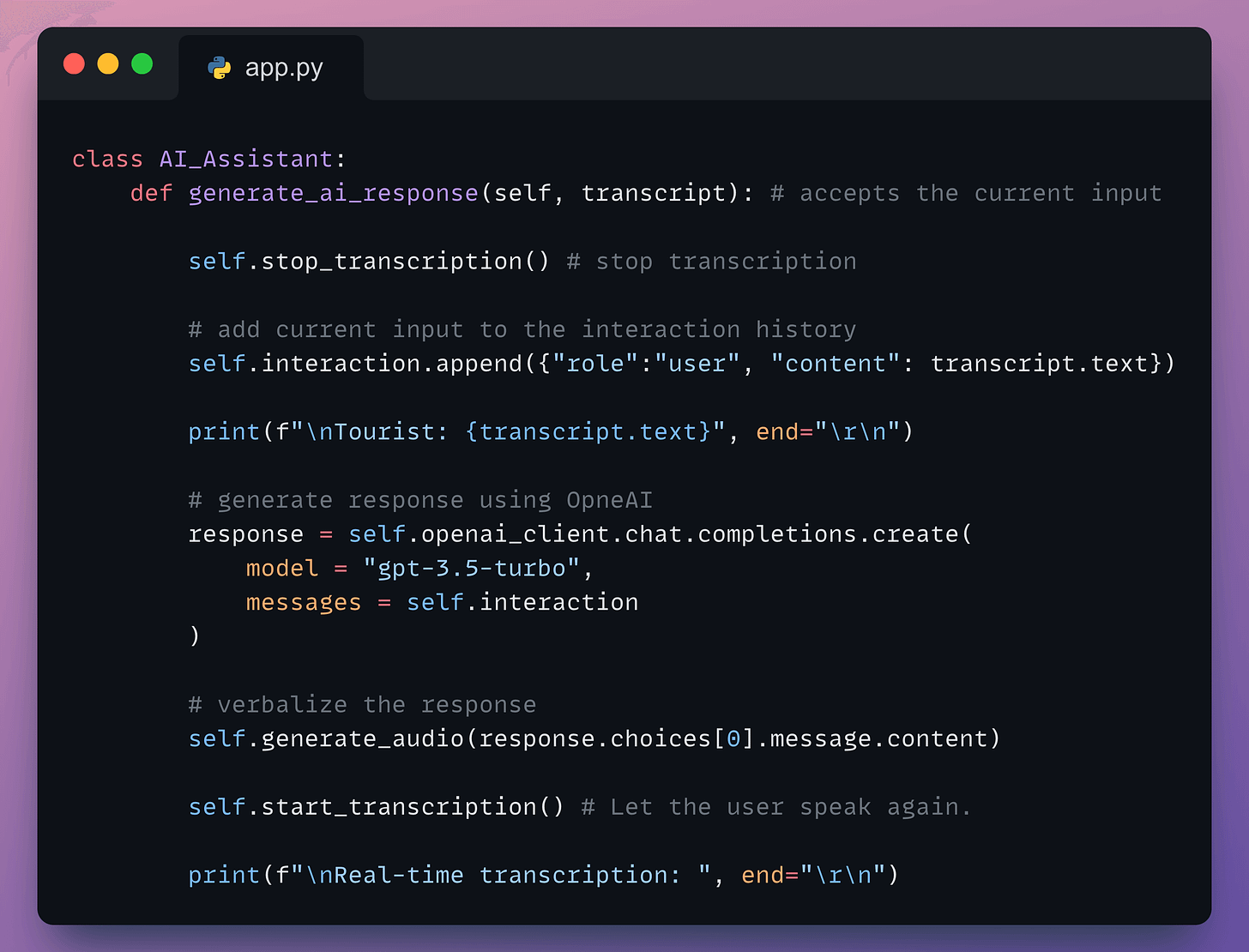

generate_ai_response→ Accepts the transcribed input, adds it to the interaction, and sends it to OpenAI to produce a response. Finally, it should pass this response to thegenerate_audiomethod:

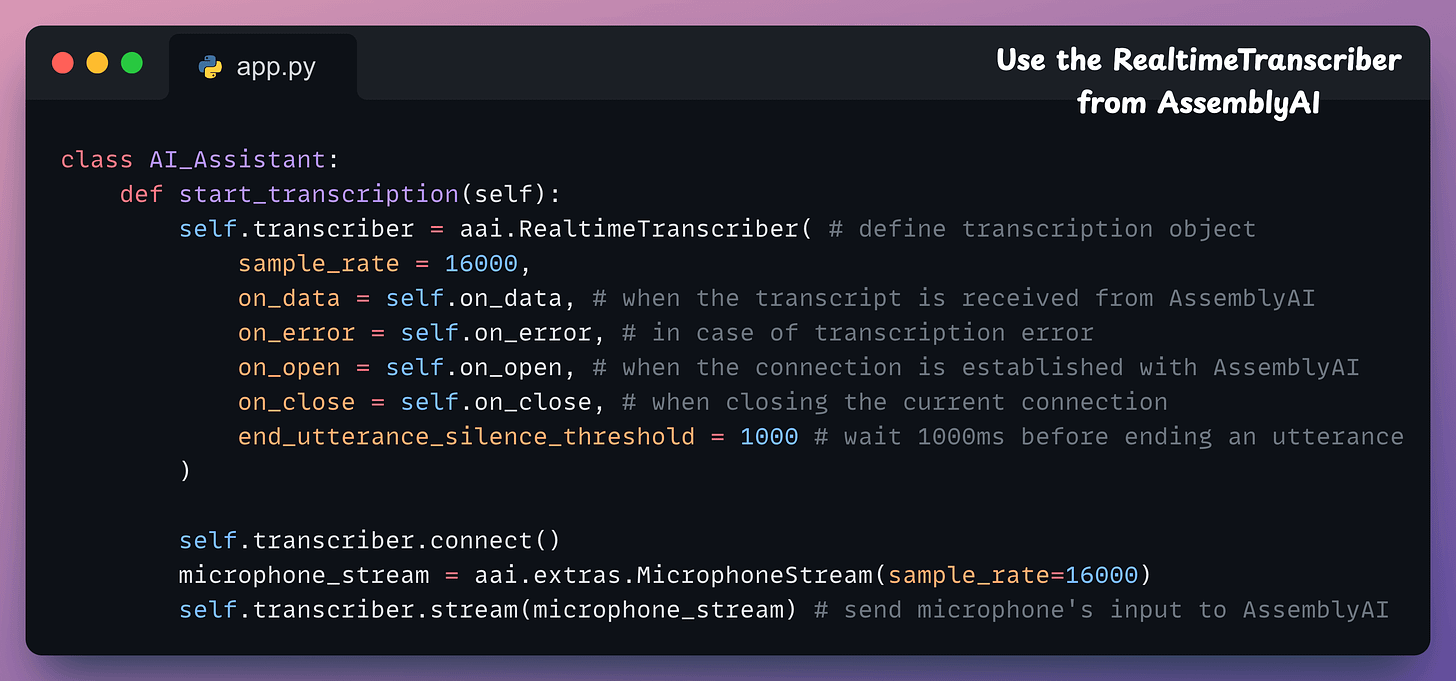

start_transcription→ Starts the microphone to record audio and transcribe it in real-time with AssemblyAI:

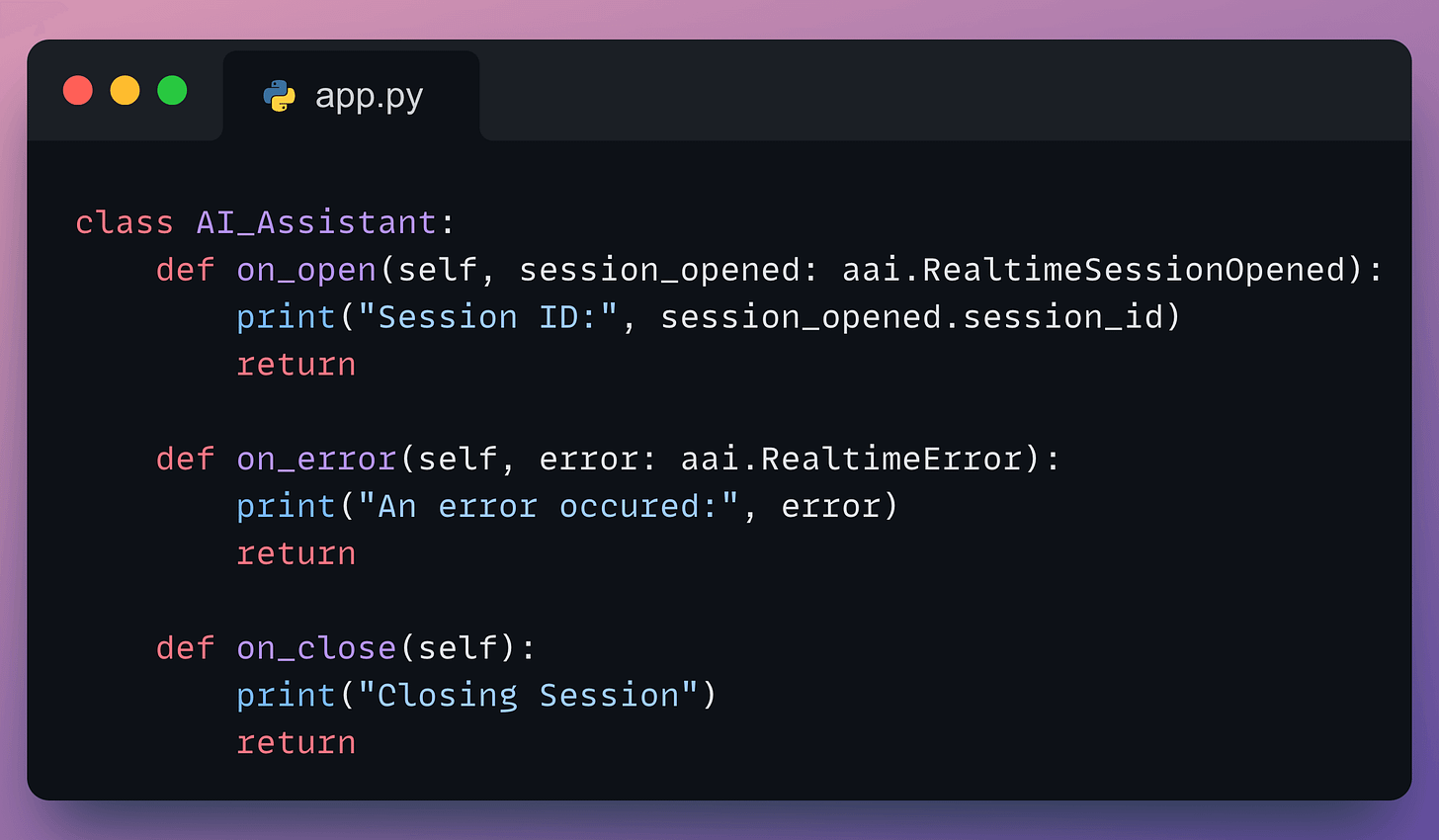

on_data→ the method to invoke upon receiving a transcript from AssemblyAI. Here, we invoke thegenerate_ai_responsemethod:on_error→ the method to invoke in case of an error (you can also reinvoke thestart_transcriptionmethod).on_open→ the method to invoke when a connection has been established with AssemblyAI.on_close→ the method to invoke when closing a connection.- These three methods are implemented below:

- Lastly, we have

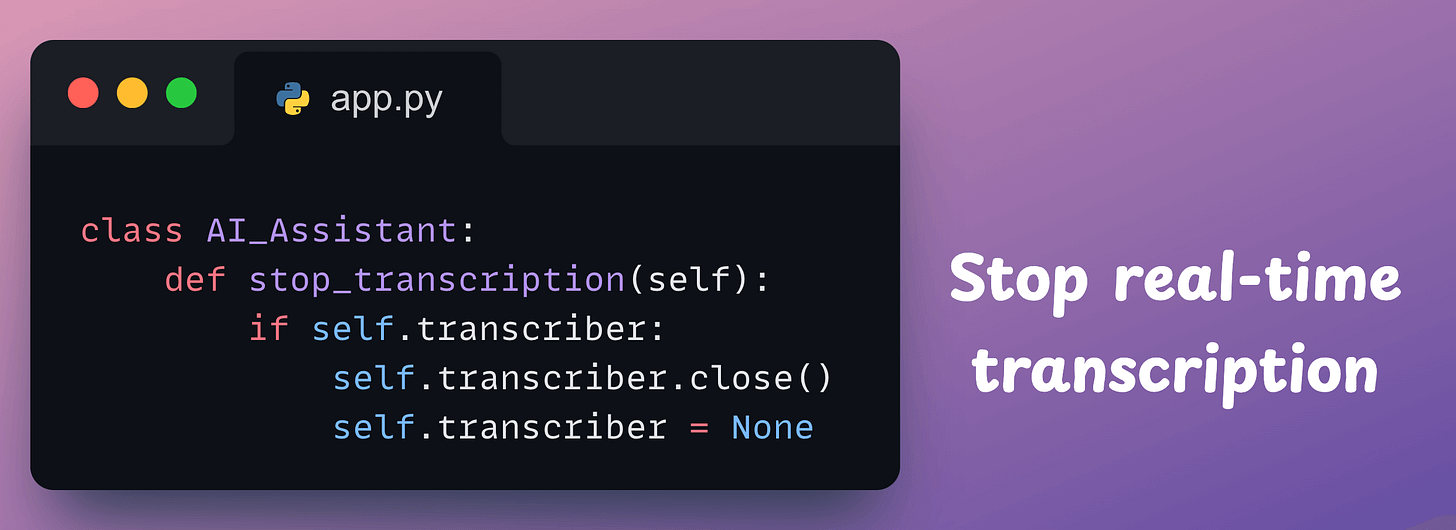

stop_transcription→ Stops the microphone and let the OpenAI generate a response using the method below.

Done!

With that, we have implemented the class.

VOICE BOT APP

Demo

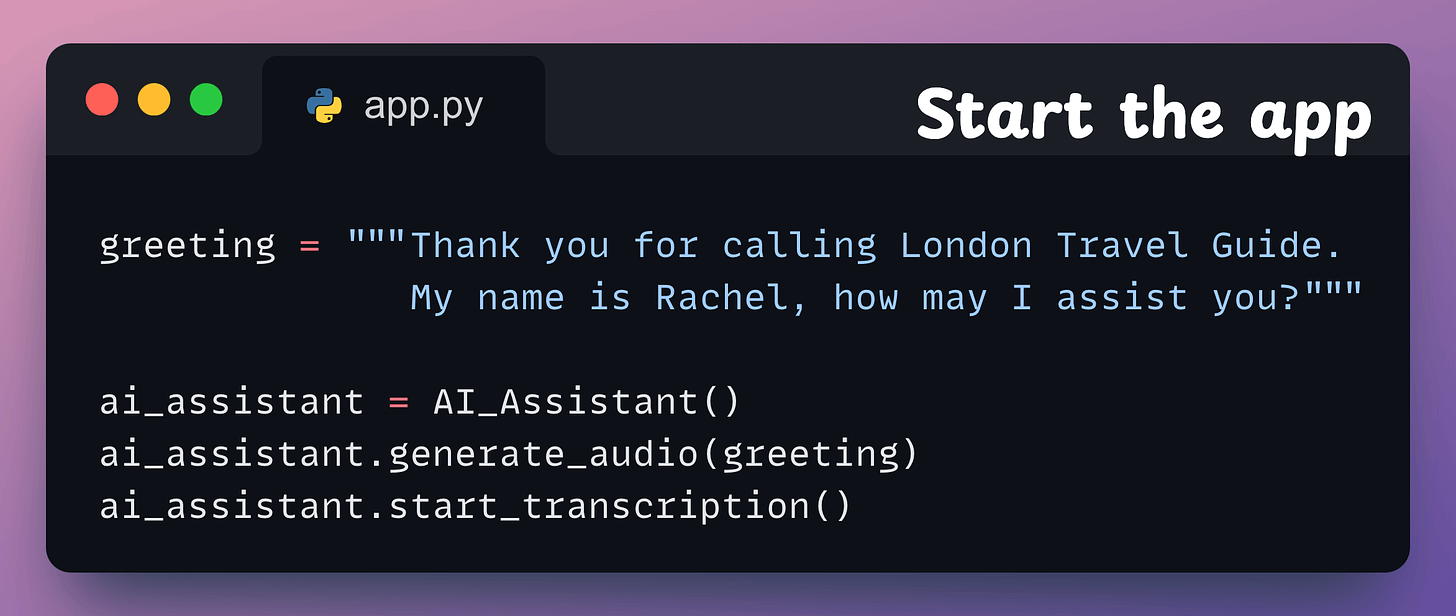

Finally, we instantiate an object of this class and start the app:

Done!

This produces the output shown in the video below:

That was simple, wasn’t it?

You can find all the code and instructions to run in this GitHub repo: Voice Bot Demo GitHub.

VOICE BOT APP

A departing note

I first used AssemblyAI two years ago, and in my experience, it has the most developer-friendly and intuitive SDKs to integrate speech AI into applications.

AssemblyAI first trained Universal-1 on 12.5 million hours of audio, outperforming every other model in the industry (from Google, OpenAI, etc.) across 15+ languages.

Now, they released Universal-2, their most advanced speech-to-text model yet.

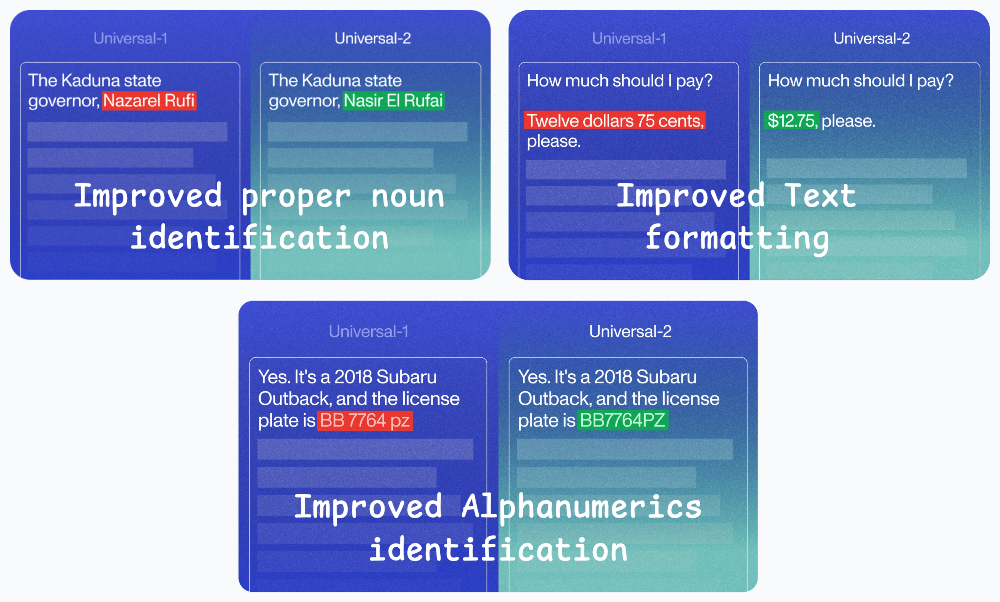

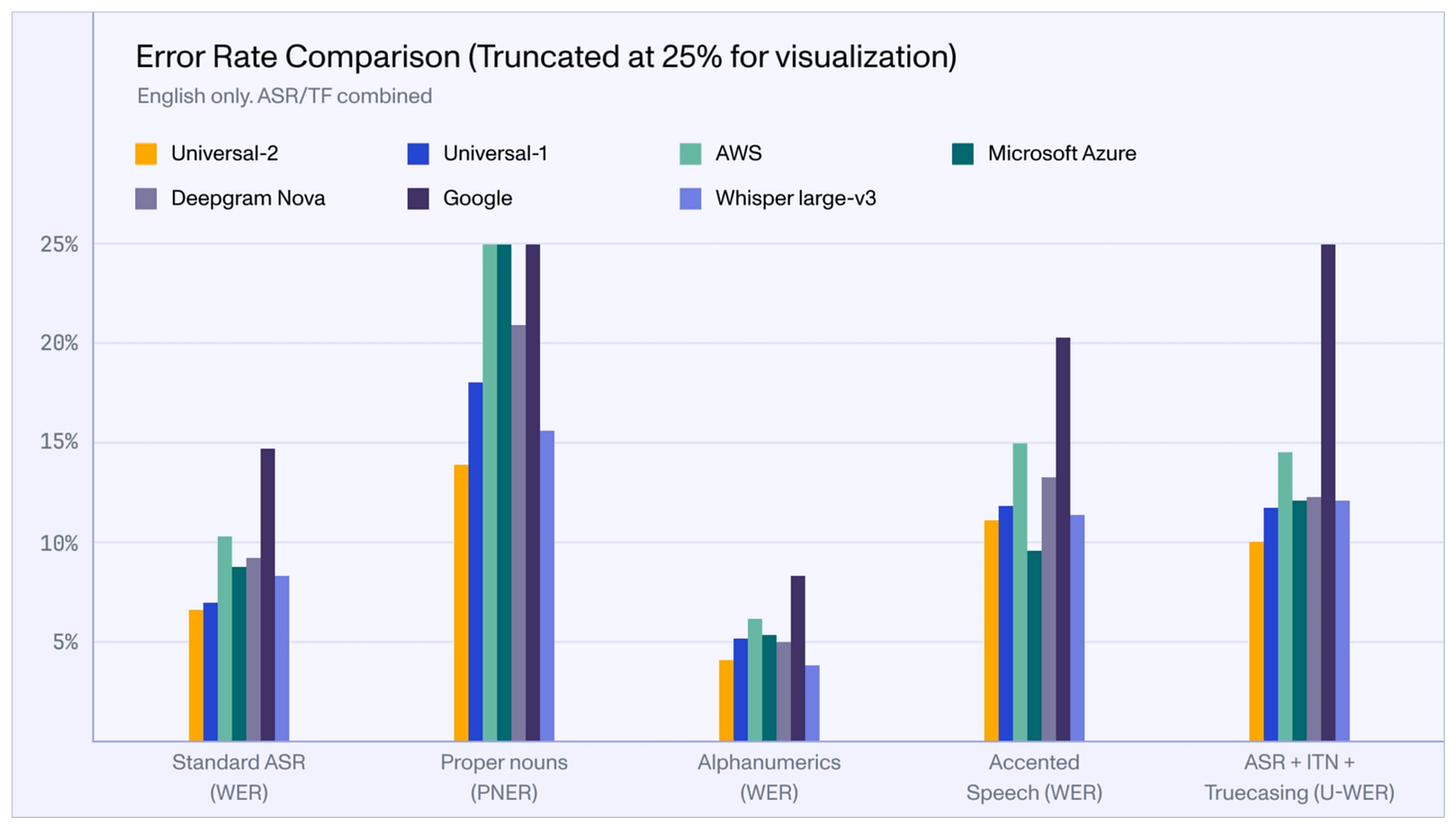

Here’s how Universal-2 compares with Universal-1:

- 24% improvement in proper nouns recognition

- 21% improvement in alphanumeric accuracy

- 15% better text formatting

Its performance compared to other popular models in the industry is shown below:

Isn’t that impressive?

I love AssemblyAI’s mission of supporting developers in building next-gen voice applications in the simplest and most effective way possible.

They have already made a big dent in speech technology, and I’m eager to see how they continue from here.

Get started with:

Their API docs are available here if you want to explore their services: AssemblyAI API docs.

🙌 Also, a big thanks to AssemblyAI, who very kindly partnered with us on this post and let us use their industry-leading AI transcription services.

👉 Over to you: What would you use AssemblyAI for?

THAT'S A WRAP

No-Fluff Industry ML resources to

Succeed in DS/ML roles

At the end of the day, all businesses care about impact. That’s it!

- Can you reduce costs?

- Drive revenue?

- Can you scale ML models?

- Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with such topics.

Here are some of them:

- Learn sophisticated graph architectures and how to train them on graph data in this crash course.

- So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

- Run large models on small devices using Quantization techniques.

- Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust using Conformal Predictions.

- Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

- Learn how to scale and implement ML model training in this practical guide.

- Learn 5 techniques with implementation to reliably test ML models in production.

- Learn how to build and implement privacy-first ML systems using Federated Learning.

- Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.

SPONSOR US

Advertise to 600k+ data professionals

Our newsletter puts your products and services directly in front of an audience that matters — thousands of leaders, senior data scientists, machine learning engineers, data analysts, etc., around the world.