[Hands-on] Make RAG systems 32x memory efficient!

...explained step-by-step with code.


TODAY'S ISSUE

A simple technique, widely used in industry, makes RAG ~32x more memory-efficient!

To learn this, we’ll build a RAG system that queries 36M+ vectors in <30ms.

And the technique that will power it is called Binary Quantization.

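Tech stack: LlamaIndex for ingestion, Milvus as the vector database, Kimi-K2 served on Groq for generation, Streamlit for the UI, and Beam for deployment.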

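Here's the workflow: ingest the documents, generate float32 embeddings and binarize them, index the binary vectors in Milvus, retrieve relevant context for a query, generate an answer with Kimi-K2 on Groq, and deploy the app on Beam.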

Let's build it!

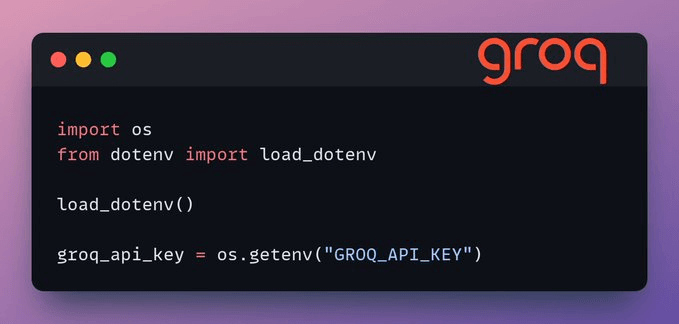

Before we begin, store your Groq API key in a .env file and load it into your environment so the app can use Groq's fast AI inference.

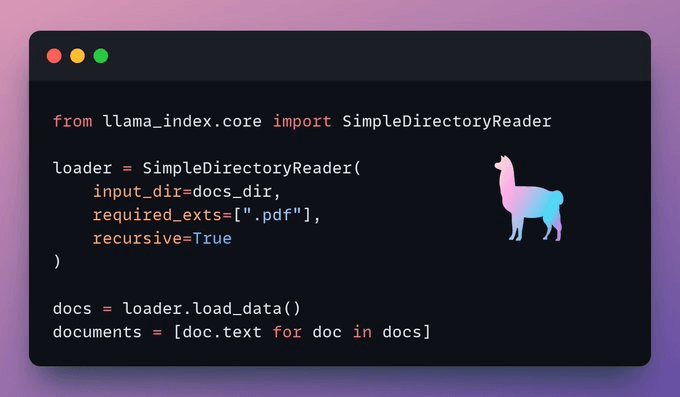
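If you'd rather not add a dependency for this step, it can be sketched with the standard library alone. The `load_env_file` helper below is hypothetical: a minimal stand-in for what `python-dotenv`'s `load_dotenv()` does, assuming a simple `KEY=value` file format. `GROQ_API_KEY` is the conventional variable name for the key.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> None:
    """Minimal .env loader (hypothetical helper, not python-dotenv).

    Reads KEY=value lines, skips blank lines and # comments, strips optional
    surrounding quotes, and never overwrites variables that are already set.
    """
    env_path = Path(path)
    if not env_path.exists():
        return
    for raw_line in env_path.read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split once, so values may contain '='
        key, value = key.strip(), value.strip().strip('"').strip("'")
        os.environ.setdefault(key, value)


load_env_file()
api_key = os.environ.get("GROQ_API_KEY")  # None if no key was found
```

Using `setdefault` means a key exported in the shell takes precedence over the file, which is the usual convention.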

We ingest our documents using LlamaIndex's directory reader tool.

It can read various data formats including Markdown, PDFs, Word documents, PowerPoint decks, images, audio, and video.

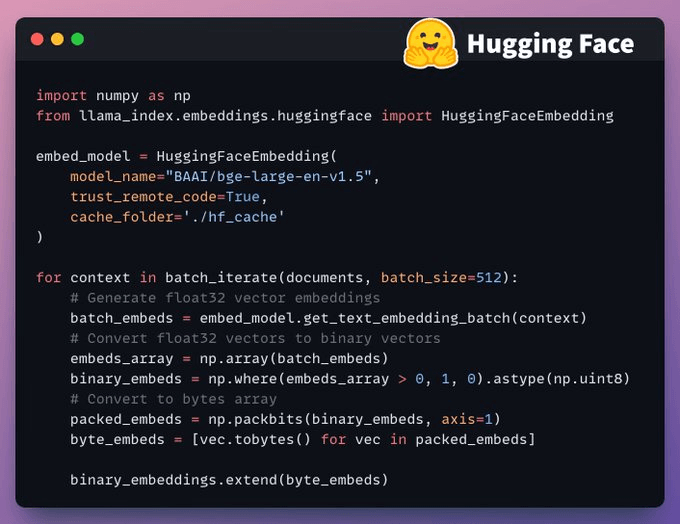

Next, we generate text embeddings (in float32) and convert them to binary vectors. Each 32-bit float is replaced by a single bit, which cuts memory and storage by 32x.

This is called binary quantization.

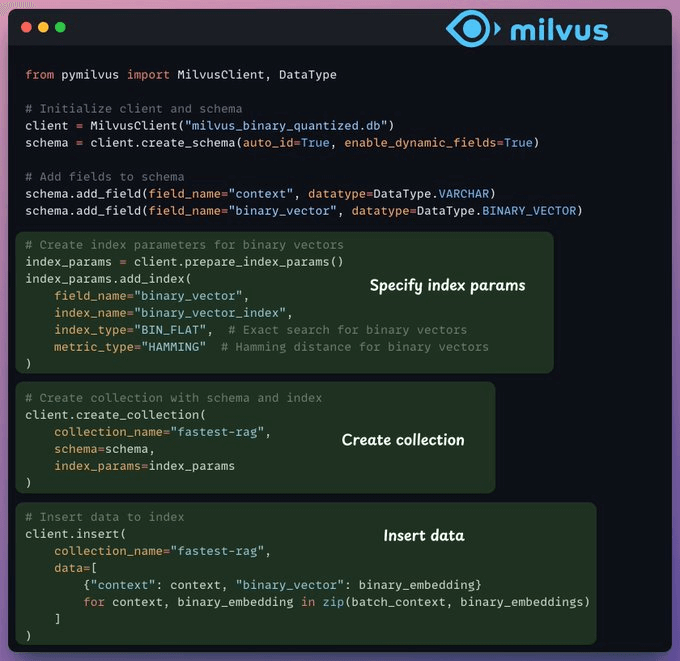
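Here's a minimal sketch of the idea in plain Python, assuming the common sign-threshold rule (a dimension becomes 1 if it is positive, else 0) and a hypothetical 1024-dimensional embedding; the article states neither the embedding size nor the exact threshold. It shows where the 32x comes from: 4 bytes per float32 dimension versus 1 bit.

```python
import random
import struct
from typing import List


def binary_quantize(vector: List[float]) -> bytes:
    """Keep one bit per dimension (1 if > 0, else 0), packed 8 bits per byte."""
    packed = bytearray()
    for start in range(0, len(vector), 8):
        byte = 0
        for offset, value in enumerate(vector[start:start + 8]):
            if value > 0:
                byte |= 1 << (7 - offset)  # first dimension -> most significant bit
        packed.append(byte)
    return bytes(packed)


DIM = 1024  # assumed embedding size; the article does not state one
random.seed(0)
embedding = [random.gauss(0.0, 1.0) for _ in range(DIM)]  # stand-in float embedding

float32_size = len(struct.pack(f"<{DIM}f", *embedding))  # 4 bytes per dimension
binary = binary_quantize(embedding)

print(float32_size, len(binary), float32_size // len(binary))  # → 4096 128 32
```

With NumPy, the same packing is one vectorized call, `np.packbits(embeddings > 0, axis=-1)`, which also places the first dimension in the most significant bit.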

Once the vectors are binarized, we store and index them in a Milvus vector database for efficient retrieval.

Indexes are specialized data structures that help optimize the performance of data retrieval operations.

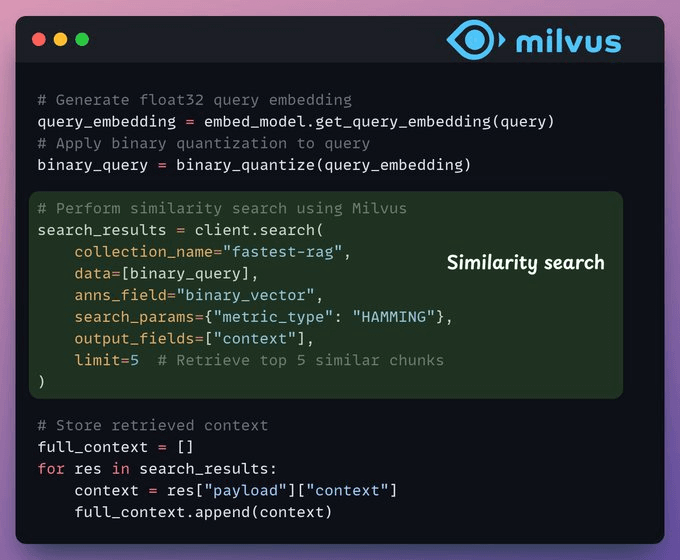
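Binary vectors are typically compared with Hamming distance: XOR the two bit strings and count the 1s, which is far cheaper than float dot products. Milvus supports `HAMMING` as a metric for binary vector fields, with index types such as `BIN_FLAT` and `BIN_IVF_FLAT`; the article does not say which one it uses. The `FlatBinaryIndex` class below is a hypothetical brute-force stand-in that shows what such an index computes, not how Milvus implements it.

```python
import heapq
from typing import List, Tuple


def hamming_distance(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length packed vectors."""
    xor = int.from_bytes(a, "big") ^ int.from_bytes(b, "big")
    return bin(xor).count("1")


class FlatBinaryIndex:
    """Toy exhaustive (flat) index over packed binary vectors."""

    def __init__(self) -> None:
        self.ids: List[int] = []
        self.vectors: List[bytes] = []

    def add(self, doc_id: int, vector: bytes) -> None:
        self.ids.append(doc_id)
        self.vectors.append(vector)

    def search(self, query: bytes, k: int = 3) -> List[Tuple[int, int]]:
        """Return up to k (doc_id, distance) pairs, closest first (ties: lower id)."""
        scored = (
            (hamming_distance(query, vec), doc_id)
            for doc_id, vec in zip(self.ids, self.vectors)
        )
        return [(doc_id, dist) for dist, doc_id in heapq.nsmallest(k, scored)]


index = FlatBinaryIndex()
index.add(0, bytes([0b11110000]))
index.add(1, bytes([0b11111111]))
index.add(2, bytes([0b00001111]))
print(index.search(bytes([0b11110001]), k=2))  # → [(0, 1), (1, 3)]
```

A flat scan touches every vector; index types like `BIN_IVF_FLAT` first cluster the vectors so a query only scans the most promising clusters.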

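In the retrieval stage, we embed and binarize the user query, then search the Milvus index for the most similar binary vectors and retrieve the matching chunks: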

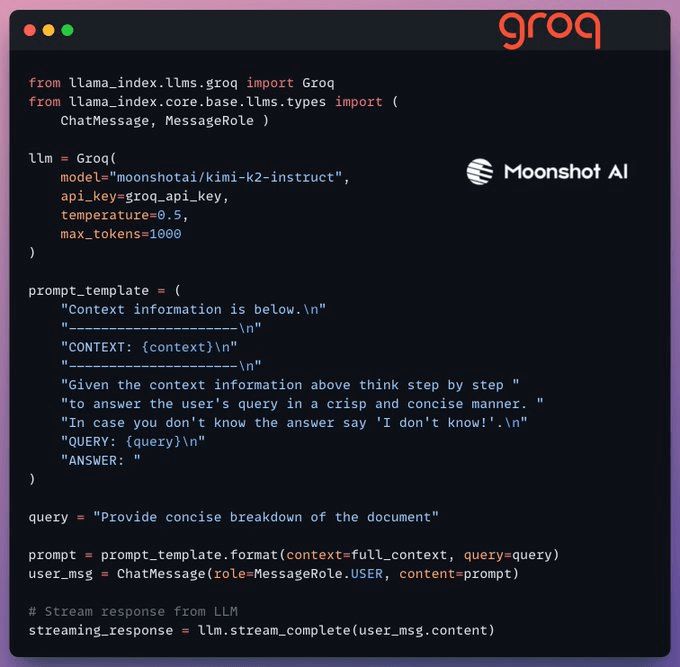
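The retrieval step can be sketched as follows, assuming the query is embedded with the same model as the documents and binarized with the same sign rule (otherwise the Hamming distances are meaningless). The 4-dimensional vectors and chunk texts are made up for illustration, and `to_bits`/`retrieve` are hypothetical helpers; in the article, Milvus performs the search itself.

```python
from typing import List


def to_bits(vector: List[float]) -> int:
    """Binarize with the sign rule: bit i is 1 if dimension i is positive."""
    return sum(1 << i for i, value in enumerate(vector) if value > 0)


def retrieve(query_vec: List[float], doc_bits: List[int],
             chunks: List[str], k: int = 2) -> List[str]:
    """Rank chunks by Hamming distance to the binarized query; return top-k."""
    q = to_bits(query_vec)  # the query must go through the same quantizer
    ranked = sorted(
        range(len(chunks)),
        key=lambda i: (bin(q ^ doc_bits[i]).count("1"), i),  # ties: lower id
    )
    return [chunks[i] for i in ranked[:k]]


# Hypothetical 4-dim embeddings and chunks, for illustration only.
chunks = [
    "Binary quantization stores one bit per dimension.",
    "Milvus can index binary vectors.",
    "Streamlit turns Python scripts into web apps.",
]
doc_bits = [to_bits(v) for v in (
    [0.9, -0.2, 0.4, -0.7],
    [0.3, 0.8, -0.5, -0.1],
    [-0.6, -0.4, 0.2, 0.9],
)]
query_vec = [0.7, -0.1, 0.5, -0.3]

# The retrieved chunks become the context passed to the LLM.
context = "\n\n".join(retrieve(query_vec, doc_bits, chunks, k=2))
print(context)
```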

Next, we build a generation pipeline using the Kimi-K2 Instruct model, served through Groq's fast AI inference.

We specify both the query and the retrieved context in a prompt template and pass it to the LLM.

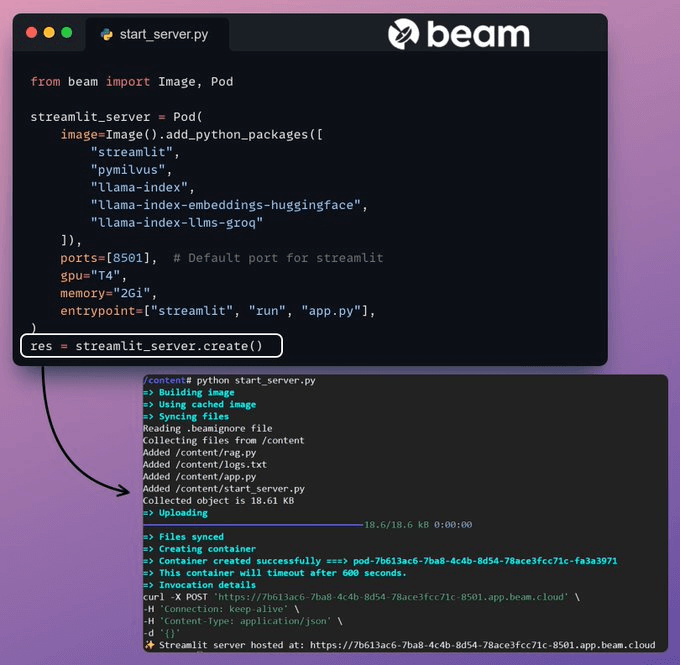
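A minimal sketch of that prompt-building step is shown below. The template wording and the `build_prompt` helper are hypothetical, not the article's exact prompt; the resulting string would then be sent as the user message to Kimi-K2 through Groq's chat completions API.

```python
from typing import List

# Hypothetical RAG prompt template with slots for the context and the query.
PROMPT_TEMPLATE = """Context information is below.
---------------------
{context}
---------------------
Given the context information above, answer the query.
If the context does not contain the answer, say that you don't know.

Query: {query}
Answer:"""


def build_prompt(query: str, retrieved_chunks: List[str]) -> str:
    """Fill the template with the query and the retrieved context chunks."""
    context = "\n\n".join(chunk.strip() for chunk in retrieved_chunks)
    return PROMPT_TEMPLATE.format(context=context, query=query.strip())


prompt = build_prompt(
    "What does binary quantization store per dimension?",
    ["Binary quantization stores one bit per dimension.",
     "Milvus can index binary vectors."],
)
print(prompt)
```

Telling the model to admit when the context lacks the answer is a common way to reduce hallucinations when retrieval misses.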

Beam provides ultra-fast serverless deployment of any AI workflow. It is fully open-source, and you can self-host it on your premises (GitHub repo).

To deploy on Beam, we wrap our app in a Streamlit interface and specify the Python libraries and compute resources the container needs.

Finally, we deploy the app in a few lines of code.

Beam launches the container and deploys our Streamlit app as an HTTPS server that can be accessed from a web browser.

To assess how the setup handles scale and inference speed, we test the deployed app on the PubMed dataset (36M+ vectors).
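For a sense of what 32x means at this scale, here is the raw-storage arithmetic, assuming 1024-dimensional embeddings (the article does not give the dimensionality) and counting only the vectors themselves, not index structures, IDs, or metadata.

```python
NUM_VECTORS = 36_000_000  # "36M+" from the article, rounded down
DIM = 1024                # assumed embedding size (not stated in the article)

float32_total = NUM_VECTORS * DIM * 4   # 4 bytes per float32 dimension
binary_total = NUM_VECTORS * DIM // 8   # 1 bit per dimension

print(f"float32: {float32_total / 1e9:.1f} GB")      # → float32: 147.5 GB
print(f"binary:  {binary_total / 1e9:.1f} GB")       # → binary:  4.6 GB
print(f"ratio:   {float32_total // binary_total}x")  # → ratio:   32x
```

Under these assumptions, the binary vectors fit comfortably in the RAM of a single machine, while the float32 versions would not on most servers.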

Our app:

Done!

We just built a fast RAG stack that uses binary quantization (BQ) for memory-efficient retrieval and deploys the whole AI workflow serverlessly.

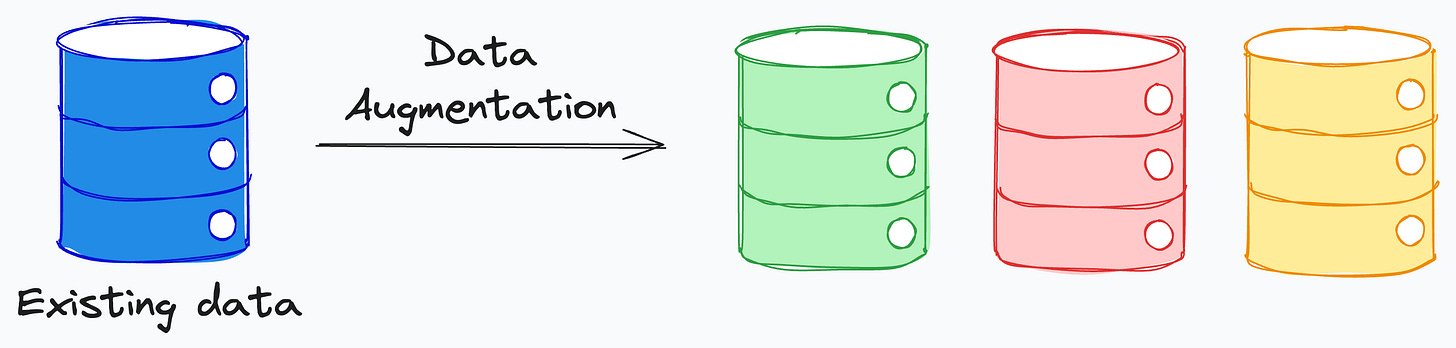

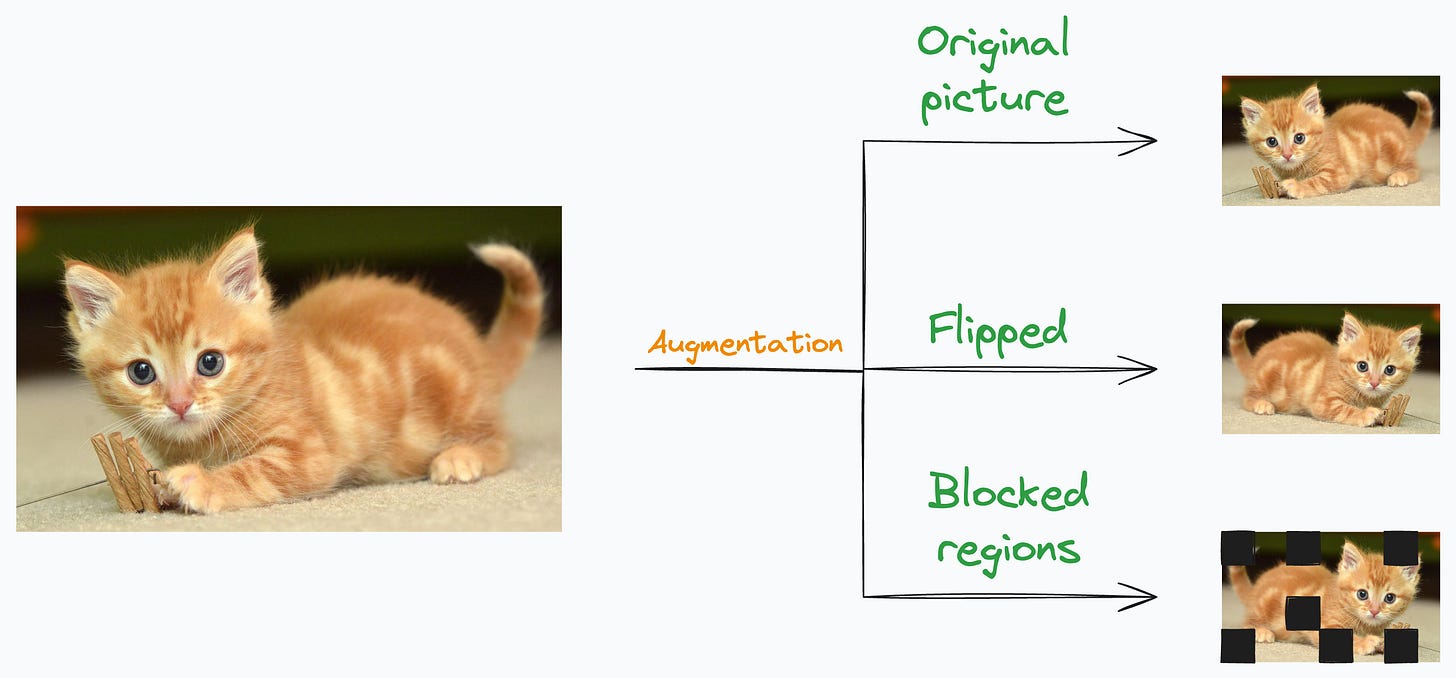

Data augmentation strategies are typically used at training time. The idea is to use clever techniques to create more data from existing data.

One example is an image classifier where rotating/flipping images will most likely not alter the true label.

That is train-time augmentation, but there's also test-time augmentation (TTA), where we apply data augmentation during testing.

More specifically, instead of showing just one test example to the model, we show multiple versions of the test example by applying different operations.

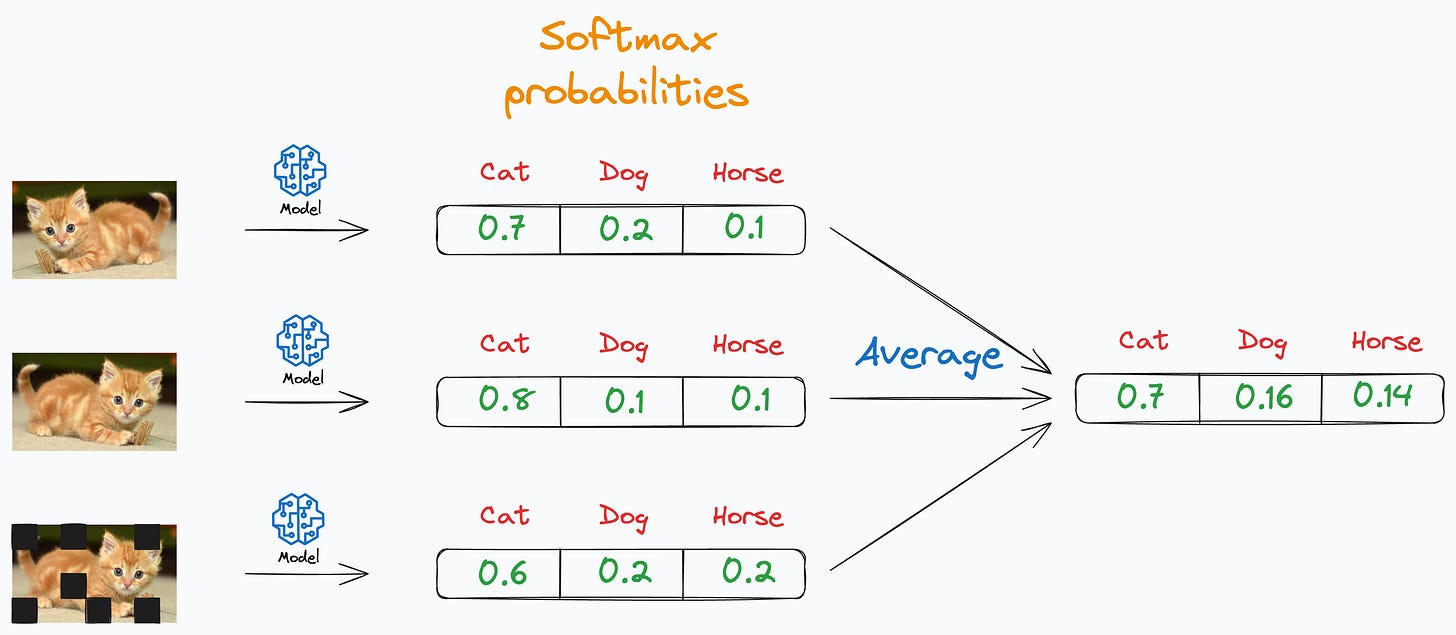

The model makes probability predictions for every version of the test example, and these are averaged (or majority-voted) to produce the final prediction.

This way, it creates an ensemble of predictions by considering multiple augmented versions of the input, which leads to a more robust final prediction.
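Here's a minimal sketch of TTA for a classifier, assuming horizontal and vertical flips as the label-preserving augmentations. The `toy_model` (it only looks at the top-left pixel) and the 2x2 image are hypothetical and exist only to make the effect visible. Both aggregation options are shown: averaging probabilities and majority voting.

```python
from collections import Counter
from typing import Callable, List

Image = List[List[float]]
Model = Callable[[Image], List[float]]  # returns class probabilities


def identity(img: Image) -> Image:
    return img


def hflip(img: Image) -> Image:
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    return img[::-1]


def argmax(probs: List[float]) -> int:
    return max(range(len(probs)), key=probs.__getitem__)


def tta_average(model: Model, img: Image, augs) -> List[float]:
    """Average the model's class probabilities over all augmented copies."""
    all_probs = [model(aug(img)) for aug in augs]
    return [sum(col) / len(all_probs) for col in zip(*all_probs)]


def tta_vote(model: Model, img: Image, augs) -> int:
    """Majority vote over per-copy predicted labels (ties: first seen wins)."""
    labels = [argmax(model(aug(img))) for aug in augs]
    return Counter(labels).most_common(1)[0][0]


def toy_model(img: Image) -> List[float]:
    """Hypothetical classifier: P(class 1) is the top-left pixel's brightness."""
    p1 = img[0][0]
    return [1.0 - p1, p1]


image = [[0.2, 0.9],
         [0.9, 0.9]]
augs = [identity, hflip, vflip]

print(argmax(toy_model(image)))                     # → 0 (original input only)
print(argmax(tta_average(toy_model, image, augs)))  # → 1 (averaged probabilities)
print(tta_vote(toy_model, image, augs))             # → 1 (majority vote)
```

Averaging soft probabilities keeps confidence information that hard voting throws away. Either way, each augmentation costs one extra forward pass, so inference time grows linearly with the number of augmented copies.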

It has also been shown that, for a loss that is convex in the model's predictions, the error of the TTA prediction never exceeds the average error of the original model across the augmented inputs, which is great.
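The standard argument behind this kind of guarantee is Jensen's inequality. As a sketch, assume a model $f$, $m$ augmentations $a_1, \dots, a_m$, and a loss $\ell$ that is convex in the prediction (e.g. squared error, or cross-entropy as a function of the predicted probabilities). Then, for every input $x$ with label $y$:

$$
\ell\!\left(\frac{1}{m}\sum_{i=1}^{m} f\big(a_i(x)\big),\; y\right)
\;\le\;
\frac{1}{m}\sum_{i=1}^{m} \ell\big(f(a_i(x)),\; y\big)
$$

Taking the expectation over $(x, y)$ on both sides gives: expected TTA error $\le$ the original model's error averaged over the augmented copies. Note that the comparison is against the augmented copies on average, not necessarily against the un-augmented input alone.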

The only catch is that it increases the inference time.

So, when a low inference time is important to you, think twice about TTA.

To summarize, if you can compromise a bit on inference time, TTA can be a powerful way to improve predictions from an existing model without having to engineer a better model.
