A Free Mini Crash Course on AI Agents!

..powered with MCP + Tools + Memory + Observability.

TODAY'S ISSUE

We just released a free mini crash course on building AI Agents, which is a good starting point for anyone to learn about Agents and use them in real-world projects.

Here's what it covers:

Everything is done with a 100% open-source tool stack:

It builds Agents based on the following definition:

An AI agent uses an LLM as its brain, has memory to retain context, and can take real-world actions through tools, like browsing the web, running code, etc.

In short, it thinks, remembers, and acts.
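To make the definition concrete, here's a minimal sketch of the think-remember-act loop in plain Python. It is not the course's code. `fake_llm` is a hypothetical stand-in for a real model, `calculator` is a toy tool, and the `TOOL ...`/`FINAL ...` reply format is an assumption made up for this illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-in for an LLM: it either requests a tool call
# ("TOOL <name> <arg>") or gives a final answer ("FINAL <text>").
def fake_llm(messages: list[dict]) -> str:
    last = messages[-1]
    if last["role"] == "user":
        return f"TOOL calculator {last['content']}"
    if last["role"] == "tool":
        return f"FINAL The result is {last['content']}"
    return "FINAL I am not sure."

def calculator(expression: str) -> str:
    # Toy tool: evaluates simple arithmetic with builtins disabled (not a real sandbox).
    return str(eval(expression, {"__builtins__": {}}, {}))

@dataclass
class Agent:
    llm: Callable[[list[dict]], str]                  # the "brain"
    tools: dict[str, Callable[[str], str]]            # real-world actions
    memory: list[dict] = field(default_factory=list)  # retained context
    max_steps: int = 5

    def run(self, user_input: str) -> str:
        self.memory.append({"role": "user", "content": user_input})
        for _ in range(self.max_steps):
            decision = self.llm(self.memory)          # think
            if decision.startswith("FINAL "):
                answer = decision[len("FINAL "):]
                self.memory.append({"role": "assistant", "content": answer})
                return answer
            _, name, arg = decision.split(" ", 2)     # parse "TOOL <name> <arg>"
            result = self.tools[name](arg)            # act
            self.memory.append({"role": "tool", "content": result})  # remember
        return "Stopped: step limit reached."

agent = Agent(llm=fake_llm, tools={"calculator": calculator})
print(agent.run("2 + 3 * 4"))  # → The result is 14
```

Swapping `fake_llm` for a real model call and `calculator` for web search or code execution keeps the same loop shape; only the brain and the tools change.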

Here's an overview of the system we're building!

The video above explains everything in detail.

You can find the entire code in this GitHub repo →

Let's learn more about LLM app evaluation below.

Standard metrics are usually not that helpful since LLMs can produce varying outputs while conveying the same message.

In many cases, it is also difficult to formalize an evaluation metric as deterministic code.
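Here's a quick illustration of the problem, assuming exact match and word overlap (Jaccard similarity) stand in for "standard metrics". Both sentences convey the same message, yet neither metric recognizes it:

```python
def exact_match(reference: str, candidate: str) -> float:
    # 1.0 only if the strings are identical (ignoring case and surrounding spaces).
    return float(reference.strip().lower() == candidate.strip().lower())

def token_jaccard(reference: str, candidate: str) -> float:
    # Share of unique lowercase tokens that the two strings have in common.
    a, b = set(reference.lower().split()), set(candidate.lower().split())
    return len(a & b) / len(a | b)

reference = "The meeting was moved to Friday."
candidate = "They rescheduled the meeting for Friday."

print(exact_match(reference, candidate))              # → 0.0
print(round(token_jaccard(reference, candidate), 2))  # → 0.33
```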

G-Eval is a task-agnostic LLM-as-a-judge metric in Opik that addresses this.

The concept of LLM-as-a-judge involves using LLMs to evaluate and score various tasks and applications.

It lets you specify the criteria for your metric in plain English, after which it uses Chain-of-Thought (CoT) prompting to create evaluation steps and return a score.
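Stripped down, an LLM-as-a-judge metric is a prompt that embeds your criteria plus a parser for the judge's reply. The sketch below is a hypothetical illustration, not Opik's API (the demo uses Opik's `GEval` class for this). `build_judge_prompt`, `parse_judge_reply`, the JSON reply format, and the 0-10 scale are all assumptions.

```python
import json
import re
from dataclasses import dataclass

@dataclass
class JudgeResult:
    score: float  # normalized to [0, 1]
    reason: str

def build_judge_prompt(criteria: str, output: str, max_score: int = 10) -> str:
    # The plain-English criteria go in verbatim; the judge is asked to reason
    # step by step before committing to a score.
    return (
        "You are an impartial evaluator.\n"
        f"Criteria: {criteria}\n"
        f"Output to evaluate: {output}\n"
        "Think step by step about how well the output meets the criteria, then reply "
        f'with JSON: {{"score": <integer 0-{max_score}>, "reason": "<one sentence>"}}'
    )

def parse_judge_reply(reply: str, max_score: int = 10) -> JudgeResult:
    # Use the last {...} object, so any reasoning text before it is ignored.
    matches = re.findall(r"\{.*?\}", reply, flags=re.DOTALL)
    if not matches:
        raise ValueError("judge reply contains no JSON object")
    data = json.loads(matches[-1])
    raw = min(max(int(data["score"]), 0), max_score)  # clamp to the allowed range
    return JudgeResult(score=raw / max_score, reason=str(data["reason"]))

reply = 'The answer restates the context without adding facts.\n{"score": 9, "reason": "Faithful to the context."}'
result = parse_judge_reply(reply)
print(result.score, result.reason)  # → 0.9 Faithful to the context.
```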

Let’s look at a demo below.

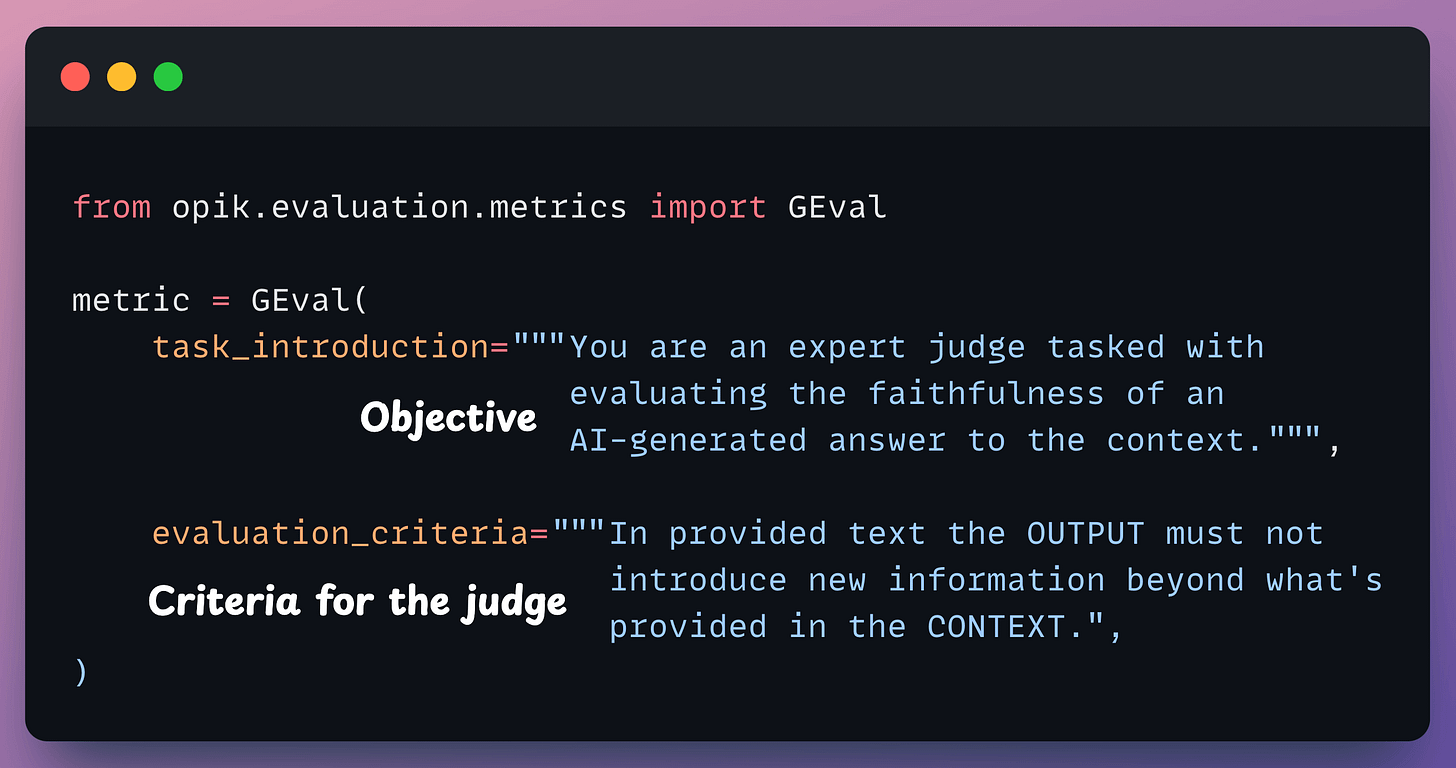

First, import the GEval class and define a metric in natural language:

Done!

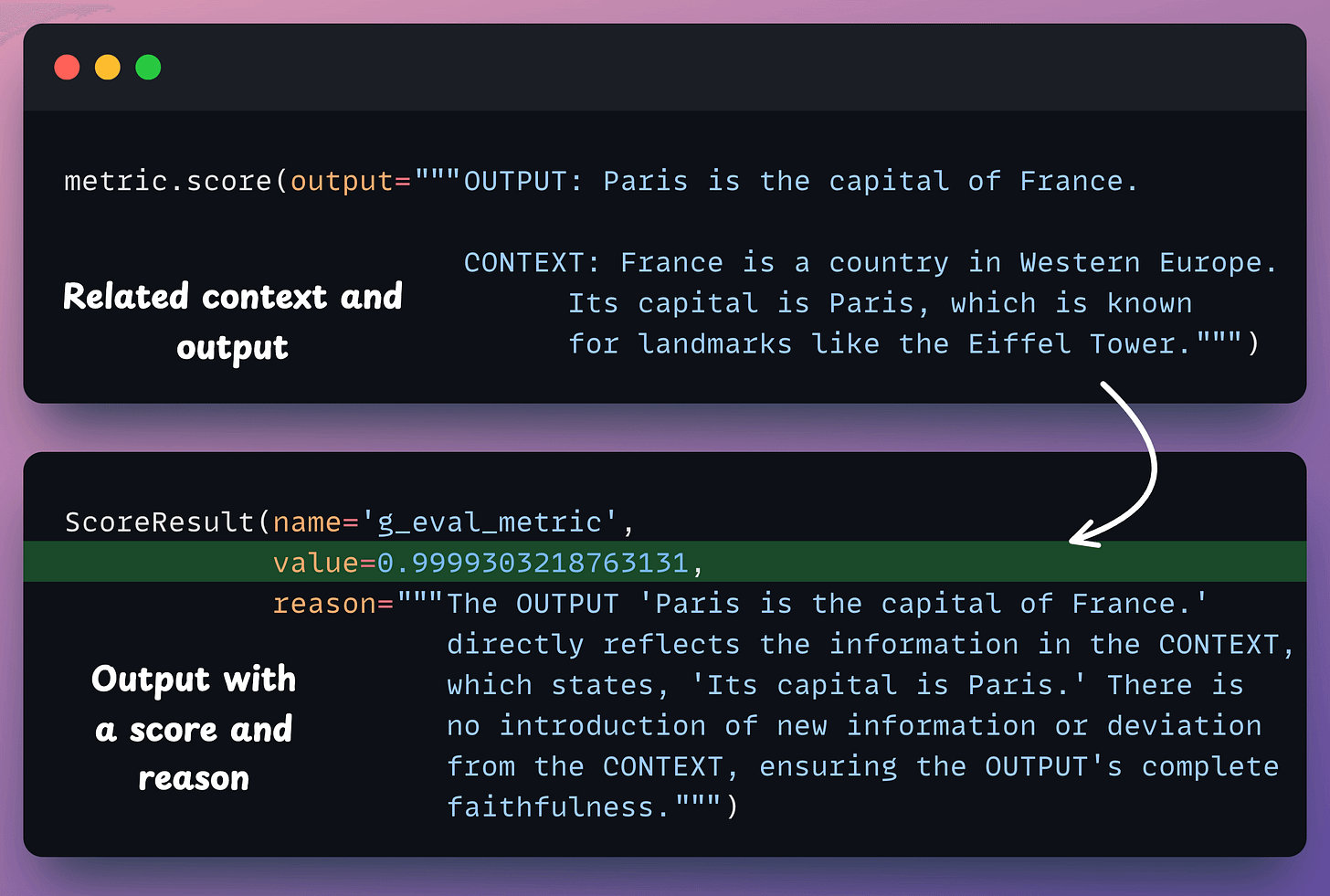

Next, invoke the score method to generate a score and a reason for that score. Below, we have a related context and output, which leads to a high score:

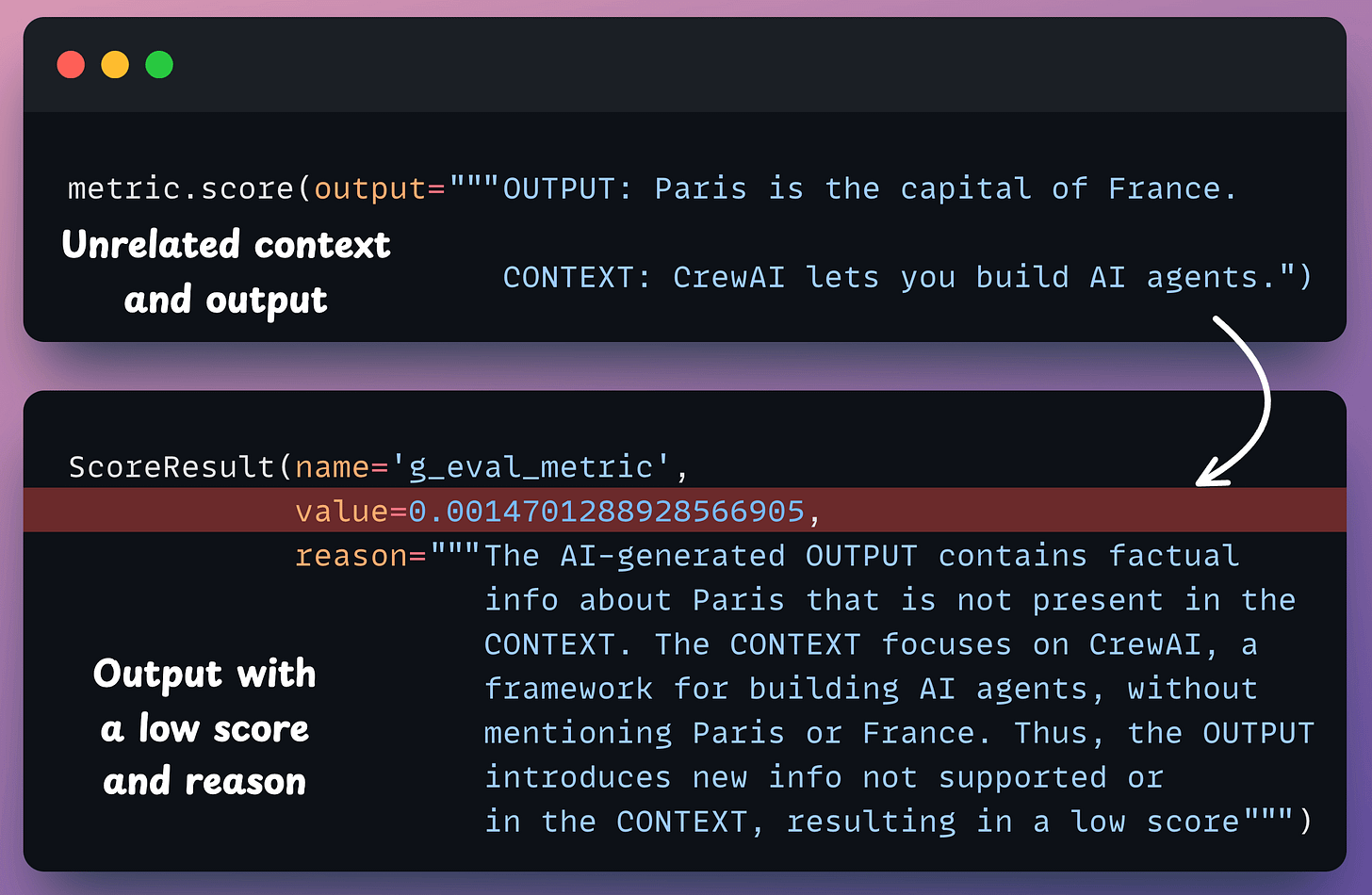

However, with unrelated context and output, we get a low score as expected:

Under the hood, G-Eval first uses the task introduction and evaluation criteria to outline a set of evaluation steps.

Next, these evaluation steps are combined with the task to return a single score.
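The two stages can be sketched as follows. This is a hypothetical outline, not Opik's implementation: `LLM` is any prompt-to-text callable you supply, and the 1-5 scale is an assumption. The `weighted_score` helper follows the original G-Eval paper, which weights each candidate score by the judge's probability for it instead of using a single sampled score; Opik's exact scoring details may differ.

```python
import re
from typing import Callable

# Hypothetical interface: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]

def generate_steps(llm: LLM, task_introduction: str, evaluation_criteria: str) -> list[str]:
    # Stage 1: the judge expands the plain-English criteria into evaluation steps (CoT).
    prompt = (
        f"{task_introduction}\n\n"
        f"Evaluation criteria:\n{evaluation_criteria}\n\n"
        "Write numbered evaluation steps for applying these criteria."
    )
    steps = []
    for line in llm(prompt).splitlines():
        match = re.match(r"\s*\d+[.)]\s*(.+)", line)  # keep lines like "1. ..." or "2) ..."
        if match:
            steps.append(match.group(1).strip())
    return steps

def build_scoring_prompt(task_introduction: str, steps: list[str], output: str) -> str:
    # Stage 2: the generated steps are combined with the task and the output to judge.
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"{task_introduction}\n\n"
        f"Evaluation steps:\n{numbered}\n\n"
        f"Output to evaluate:\n{output}\n\n"
        "Reply with a single integer score from 1 to 5."
    )

def weighted_score(score_probs: dict[int, float]) -> float:
    # G-Eval paper: score = sum_i p(s_i) * s_i over the candidate scores s_i.
    # Renormalizing over the candidates is an extra assumption made here.
    total = sum(score_probs.values())
    return sum(score * prob for score, prob in score_probs.items()) / total

def fake_llm(prompt: str) -> str:
    # Stub judge for the demo; a real setup would call a model here.
    return "1. Read the context.\n2. Check the output for facts not in the context.\n3. Assign a score."

steps = generate_steps(fake_llm, "Judge faithfulness to the context.", "The output must not add new information.")
prompt = build_scoring_prompt("Judge faithfulness to the context.", steps, "Paris is the capital of France.")
print(weighted_score({4: 0.25, 5: 0.75}))  # → 4.75
```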

Also, you can easily self-host Opik, so your data stays where you want.

It integrates with nearly all popular frameworks, including CrewAI, LlamaIndex, LangChain, and Haystack.

If you want to dive further, we also published a practical guide on Opik to help you integrate evaluation and observability into your LLM apps (with implementation).

It is open access for all readers.

Start here: A Practical Guide to Integrate Evaluation and Observability into LLM Apps.

Jupyter MCP Server lets you interact with Jupyter notebooks.

As shown in the video below, it lets you:

The server currently offers 2 tools:

You can use this for complex use cases, such as analyzing a full dataset just by giving Claude Desktop its file path.

Claude then uses the MCP server to control the Jupyter notebook and analyze the dataset.

A big advantage here is that cells are executed immediately during the interaction.

Thus, Claude knows whether a cell ran successfully, and if not, it can adjust the code and try again.
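The execute-check-adjust loop can be sketched locally. This is only an analogy under stated assumptions: `run_cell` uses Python's `exec` on a shared dict to mimic a kernel (the real server drives an actual Jupyter kernel), and `naive_fix` is a hypothetical stand-in for Claude reading the traceback and rewriting the cell.

```python
import traceback
from typing import Callable, Optional

def run_cell(source: str, namespace: dict) -> tuple[bool, str]:
    # Run one "cell"; the shared namespace keeps state between cells, like a kernel does.
    # Note: exec runs arbitrary code in this process; it is not a sandbox.
    try:
        exec(source, namespace)
        return True, ""
    except Exception:
        return False, traceback.format_exc(limit=1)

def run_with_self_correction(
    source: str,
    namespace: dict,
    fix: Callable[[str, str], Optional[str]],
    max_attempts: int = 3,
) -> tuple[bool, str]:
    # Execute immediately, inspect the result, and let `fix` rewrite the cell on failure.
    for _ in range(max_attempts):
        ok, error = run_cell(source, namespace)
        if ok:
            return True, source
        revised = fix(source, error)
        if revised is None:  # the fixer gives up
            break
        source = revised
    return False, source

def naive_fix(source: str, error: str) -> Optional[str]:
    # Hypothetical stand-in for the model reading the traceback and editing the cell.
    if "NameError" in error and "'math'" in error:
        return "import math\n" + source
    return None

namespace: dict = {}
ok, final_source = run_with_self_correction("result = math.sqrt(16)", namespace, naive_fix)
print(ok, namespace["result"])  # → True 4.0
```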