4 Stages of Training LLMs from Scratch

...explained visually.

TODAY'S ISSUE

Today, we are covering the 4 stages of building LLMs from scratch, which together make them applicable to real-world use cases.

We’ll cover pre-training, instruction fine-tuning, preference fine-tuning, and reasoning fine-tuning.

The visual summarizes these techniques.

Let's dive in!

Before any training, the model knows nothing.

You ask it “What is an LLM?” and get gibberish like “try peter hand and hello 448Sn”.

It hasn’t seen any data yet and possesses just random weights.

Pre-training teaches the LLM the basics of language by training it on massive corpora to predict the next token. This way, it absorbs grammar, world facts, etc.

But it’s not good at conversation because when prompted, it just continues the text.
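To make next-token prediction concrete, here is a minimal sketch. It is not the Llama 4 implementation linked in this issue: it assumes a toy character-level bigram model built from counts, and the names `train_bigram`, `cross_entropy`, and `continue_text` are made up for illustration. It shows the two ideas above: the training signal is the likelihood of the next token, and a model trained this way simply continues whatever text it is given.

```python
import math
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn the counts for `prev` into a probability distribution."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}

def cross_entropy(counts, text):
    """Average negative log-likelihood of each next character in `text`."""
    losses = []
    for prev, nxt in zip(text, text[1:]):
        p = next_token_probs(counts, prev).get(nxt, 1e-12)  # tiny floor for unseen pairs
        losses.append(-math.log(p))
    return sum(losses) / len(losses)

def continue_text(counts, prompt, n_tokens):
    """Greedy decoding: repeatedly append the most likely next character."""
    out = prompt
    for _ in range(n_tokens):
        probs = next_token_probs(counts, out[-1])
        if not probs:  # last character never seen during training
            break
        out += max(probs, key=probs.get)
    return out

corpus = "the cat sat on the mat. the cat ate the rat."
model = train_bigram(corpus)
print("loss on training text:", round(cross_entropy(model, corpus), 3))
print("continuation:", continue_text(model, "What is th", 10))
```

Notice that the prompt is not treated as a question. The model only extends it, which is exactly the limitation the next stage addresses.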

We implemented pre-training of Llama 4 from scratch here →

It covers:

- Character-level tokenization
- Multi-head self-attention with rotary positional embeddings (RoPE)
- Sparse routing with multiple expert MLPs
- RMSNorm, residuals, and causal masking
- And finally, training and generation.

To make it conversational, we do Instruction Fine-tuning by training on instruction-response pairs. This helps it learn how to follow prompts and format replies.
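Here is a rough sketch of how an instruction-response pair might be turned into a training example. The prompt template, the character-level "tokens", and the function names are all assumptions for illustration. The loss mask reflects a common practice (computing the loss only on the response part), which this issue does not claim any particular model uses.

```python
def build_sft_example(instruction, response):
    """Format one instruction-response pair and mark which tokens are trained on."""
    # Hypothetical template; real chat templates differ from model to model.
    prompt = f"### Instruction:\n{instruction}\n\n### Response:\n"
    text = prompt + response
    tokens = list(text)  # character-level tokens, purely for illustration
    # 0 = prompt token (ignored by the loss), 1 = response token (trained on)
    loss_mask = [0] * len(prompt) + [1] * len(response)
    return tokens, loss_mask

def masked_average(per_token_losses, loss_mask):
    """Average the per-token losses over response tokens only."""
    kept = [loss for loss, m in zip(per_token_losses, loss_mask) if m]
    return sum(kept) / len(kept)

tokens, mask = build_sft_example("What is an LLM?", "A large language model.")
print("".join(tokens))
print("trained-on tokens:", sum(mask), "of", len(mask))
```

The training objective is still next-token prediction. What changes is the data: the model now sees prompts paired with the kind of reply we want.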

Now it can:

At this point, we have likely:

So what can we do to further improve the model?

We enter the territory of Reinforcement Learning (RL).

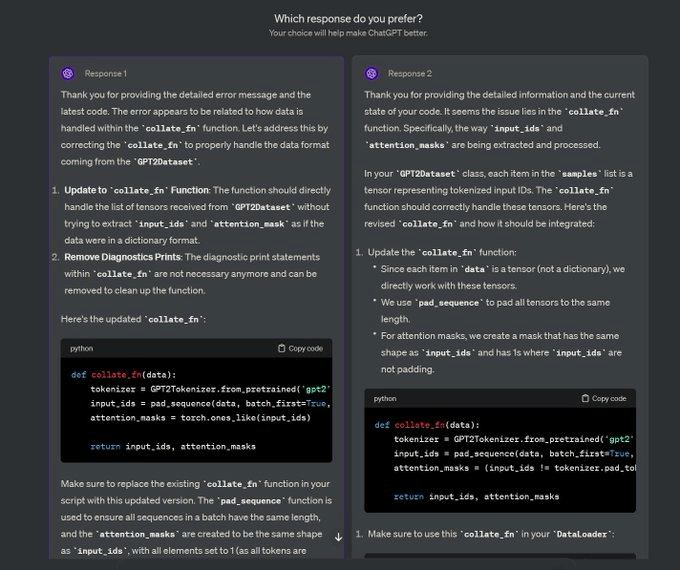

You must have seen this screen on ChatGPT where it asks: Which response do you prefer?

That’s not just for feedback; it’s valuable human preference data.

OpenAI uses this data to fine-tune its models with preference fine-tuning (PFT).

In PFT:

The user chooses between 2 responses to produce human preference data.

A reward model is then trained to predict human preference and the LLM is updated using RL.

The above process is called RLHF (Reinforcement Learning from Human Feedback), and a commonly used algorithm for updating the model weights is PPO (Proximal Policy Optimization).

It teaches the LLM to align with humans even when there’s no "correct" answer.
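The reward model step can be sketched with a pairwise loss. This issue does not spell out the loss, so the snippet below assumes the Bradley-Terry style objective commonly used for reward models: push the score of the chosen response above the score of the rejected one. The function names are illustrative, and the PPO update itself is not shown.

```python
import math

def preference_probability(reward_chosen, reward_rejected):
    """Modelled probability that the chosen response is preferred: sigmoid(r_c - r_r)."""
    return 1.0 / (1.0 + math.exp(-(reward_chosen - reward_rejected)))

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss -log(sigmoid(r_c - r_r)), computed in a numerically stable form."""
    diff = reward_chosen - reward_rejected
    # -log(sigmoid(diff)) == softplus(-diff) == max(-diff, 0) + log(1 + exp(-|diff|))
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))

# The reward model agrees with the human label: low loss.
print(preference_loss(reward_chosen=2.0, reward_rejected=-1.0))
# The reward model disagrees with the human label: high loss.
print(preference_loss(reward_chosen=-1.0, reward_rejected=2.0))
```

Once trained, the reward model scores fresh responses from the LLM, and those scores act as the reward during the RL update.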

But we can improve the LLM even more.

In reasoning tasks (maths, logic, etc.), there's usually just one correct response and a defined series of steps to obtain the answer.

So we don’t need human preferences, and we can use correctness as the signal.

This is called reasoning fine-tuning.

Steps:

This is called Reinforcement Learning with Verifiable Rewards (RLVR). GRPO (Group Relative Policy Optimization) by DeepSeek is a popular technique for this.
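A minimal sketch of the reward signal, under stated assumptions: the reward is a plain exact-match check against a known answer, and the advantage follows the group-relative idea behind GRPO, where each sampled answer is scored against the mean and standard deviation of its own group. The function names are made up, the use of the population standard deviation is a choice made here, and the policy update itself is omitted.

```python
import statistics

def verifiable_reward(model_answer, correct_answer):
    """Reward 1.0 if the final answer matches the known correct answer, else 0.0."""
    return 1.0 if model_answer.strip() == correct_answer.strip() else 0.0

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the other samples drawn for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mean) / (std + eps) for r in rewards]

# Several answers sampled from the model for the same prompt, "What is 2 + 2?"
sampled_answers = ["4", "5", " 4 ", "22"]
rewards = [verifiable_reward(a, "4") for a in sampled_answers]
advantages = group_relative_advantages(rewards)
print(rewards)
print(advantages)
```

Answers that beat their group's average get a positive advantage and are reinforced, while the rest are discouraged. No human labels or learned reward model are needed for this signal.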

Those were the 4 stages of training an LLM.

In a future issue, we shall dive into the specific implementation of these.

In the meantime, read our from-scratch implementation of Llama 4 pre-training here →

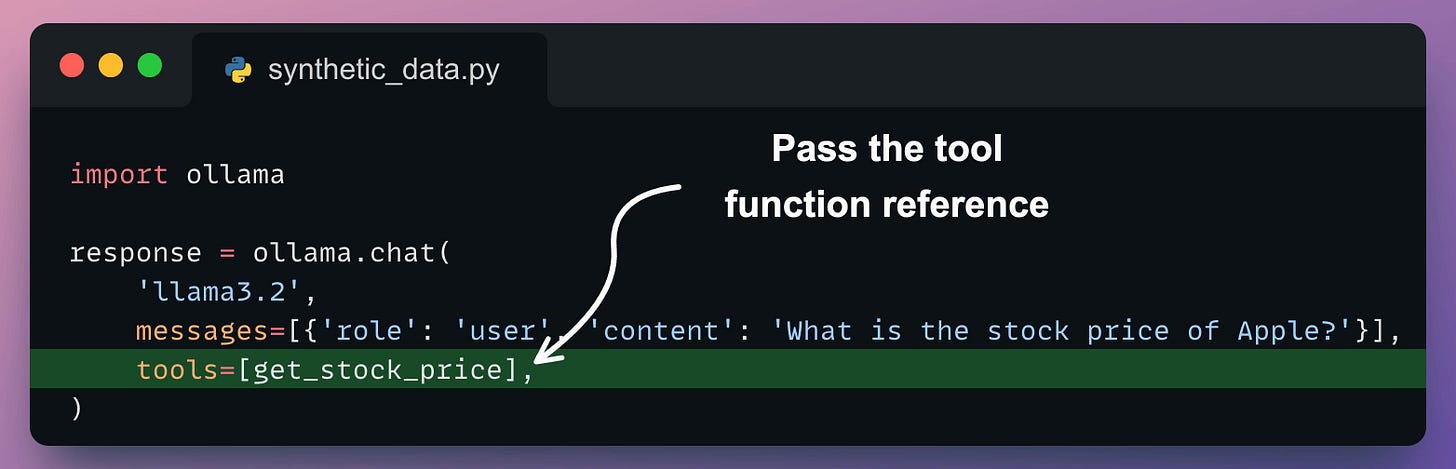

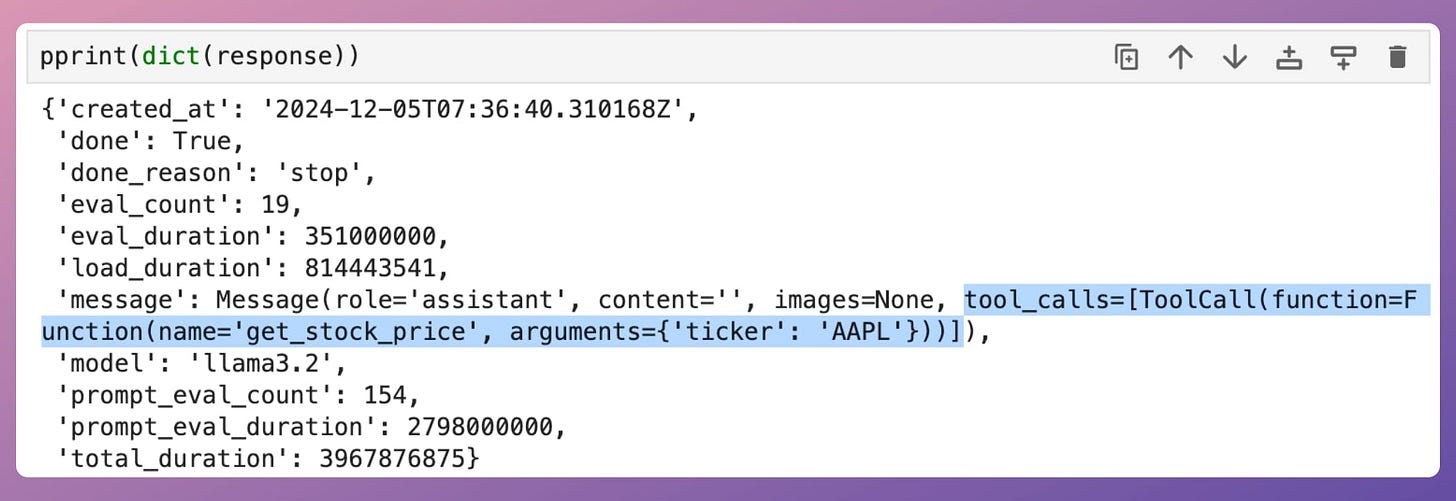

Printing the response, we get:

Notice that the message key in the above response object has tool_calls, which includes relevant details, such as:

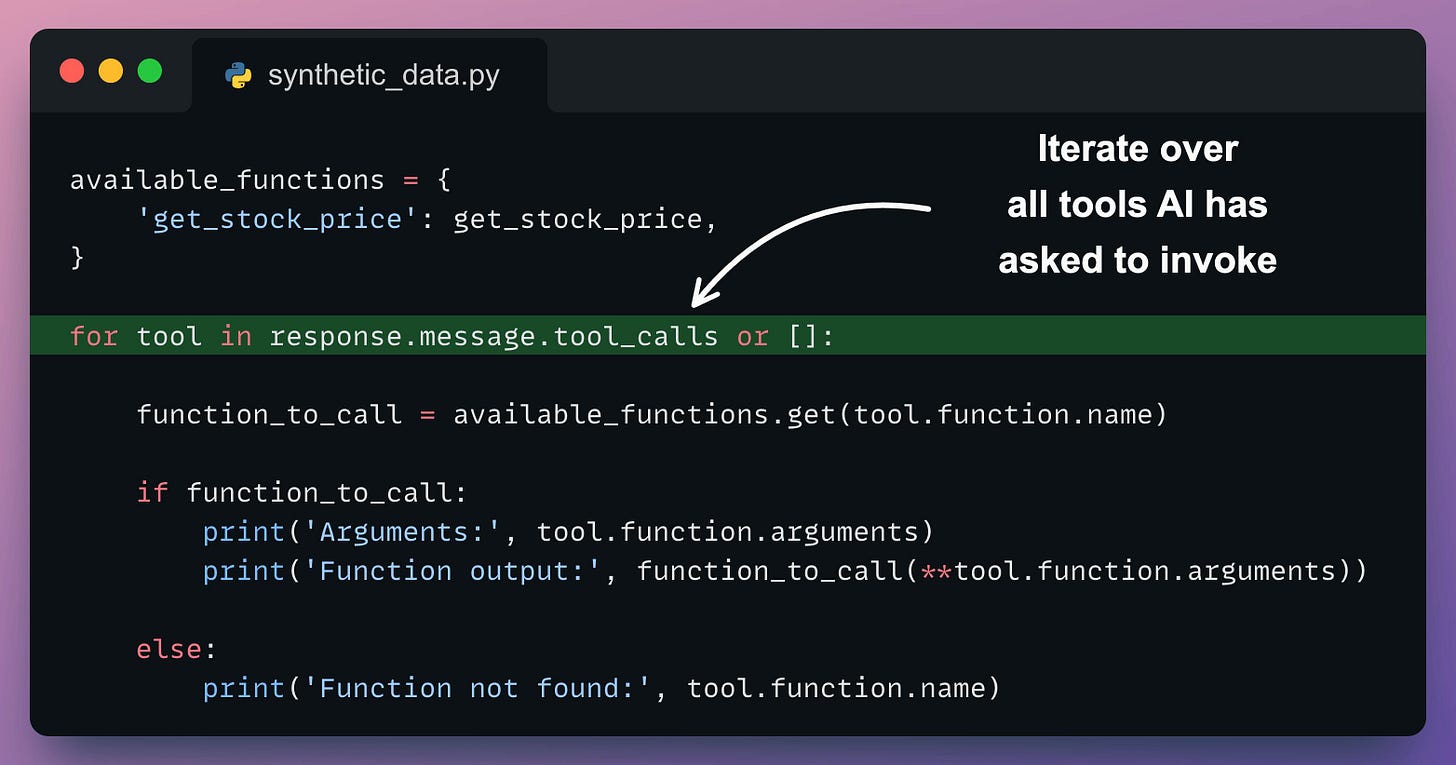

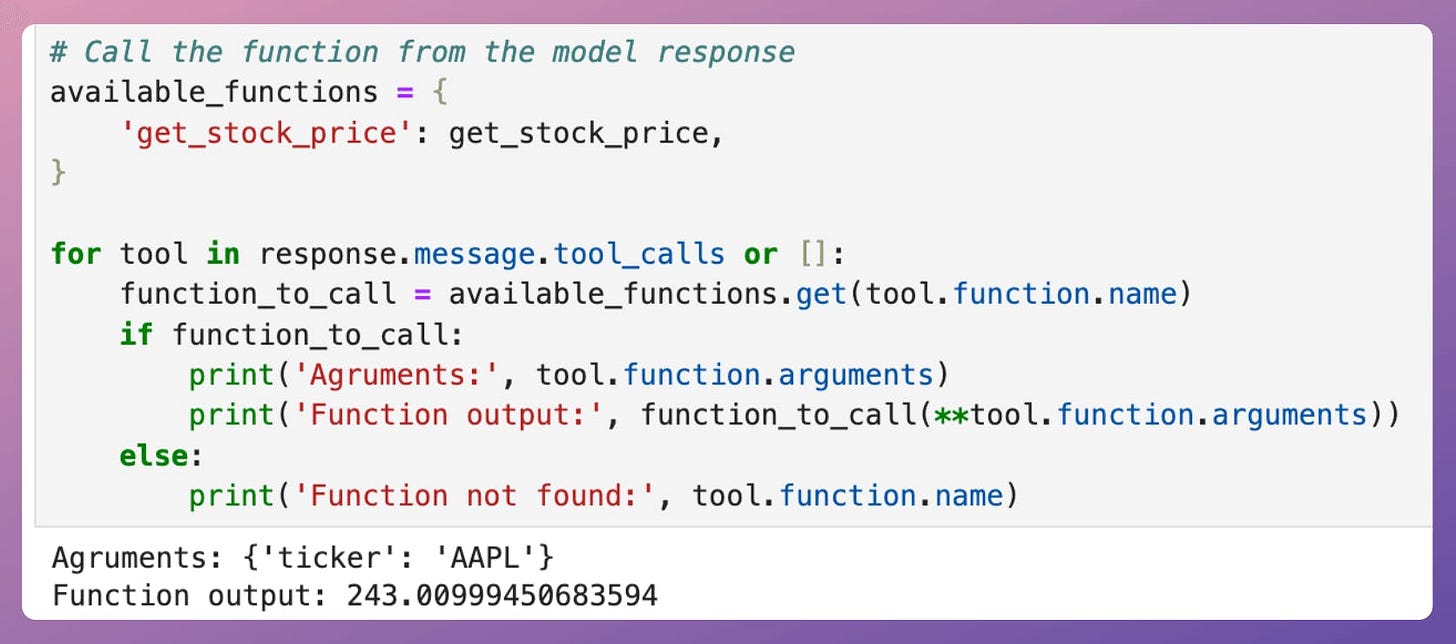

- tool.function.name: The name of the tool to be called.
- tool.function.arguments: The arguments required by the tool.

Thus, we can utilize this info to produce a response as follows:

This produces the following output.

Of course, the above output can also be passed back to the AI to generate a more vivid response, which we haven't shown here.

But this simple demo shows that with tool calling, the assistant can be made more flexible and powerful to handle diverse user needs.

👉 Over to you: What else would you like to learn about in LLMs?

Model accuracy alone (or an equivalent performance metric) rarely determines which model will be deployed.

Much of the engineering effort goes into making the model production-friendly.

Typically, the model that gets shipped is never determined solely by performance, despite a common misconception.

Instead, we also consider several operational and feasibility metrics, such as:

For instance, consider the image below. It compares the accuracy and size of a large neural network I developed to its pruned (or reduced/compressed) version:

Looking at these results, don’t you strongly prefer deploying the model that is 72% smaller, but is still (almost) as accurate as the large model?

Of course, this depends on the task, but in most cases, it makes little sense to deploy the large model when a heavily pruned version performs equally well.
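To give a feel for what pruning means, here is a minimal sketch of one simple variant, magnitude pruning, on a flat list of weights. This is an assumption for illustration: the issue does not say which pruning method produced the results above, and `magnitude_prune` is a made-up helper.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02]
print(magnitude_prune(weights, sparsity=0.5))
```

Zeroing weights only shrinks the stored model if the zeros are then removed or stored sparsely, so in practice pruning is paired with a compact storage format or with removing whole neurons.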

We discussed and implemented 6 model compression techniques in the article here, which ML teams regularly use to save thousands of dollars when running ML models in production.

Learn how to compress models before deployment with implementation →