Course content

- Background, Foundations and Architecture

- Primitives, Communication and Practical Usage

- Building a Custom MCP Client

- Building a Full-Fledged MCP Workflow using Tools, Resources, and Prompts

- Integrating Sampling into MCP Workflows

- Testing and Security in MCP

- Sandboxing in MCP

- Practical MCP Integration with 4 Popular Agentic Frameworks

- Building with MCP and LangGraph

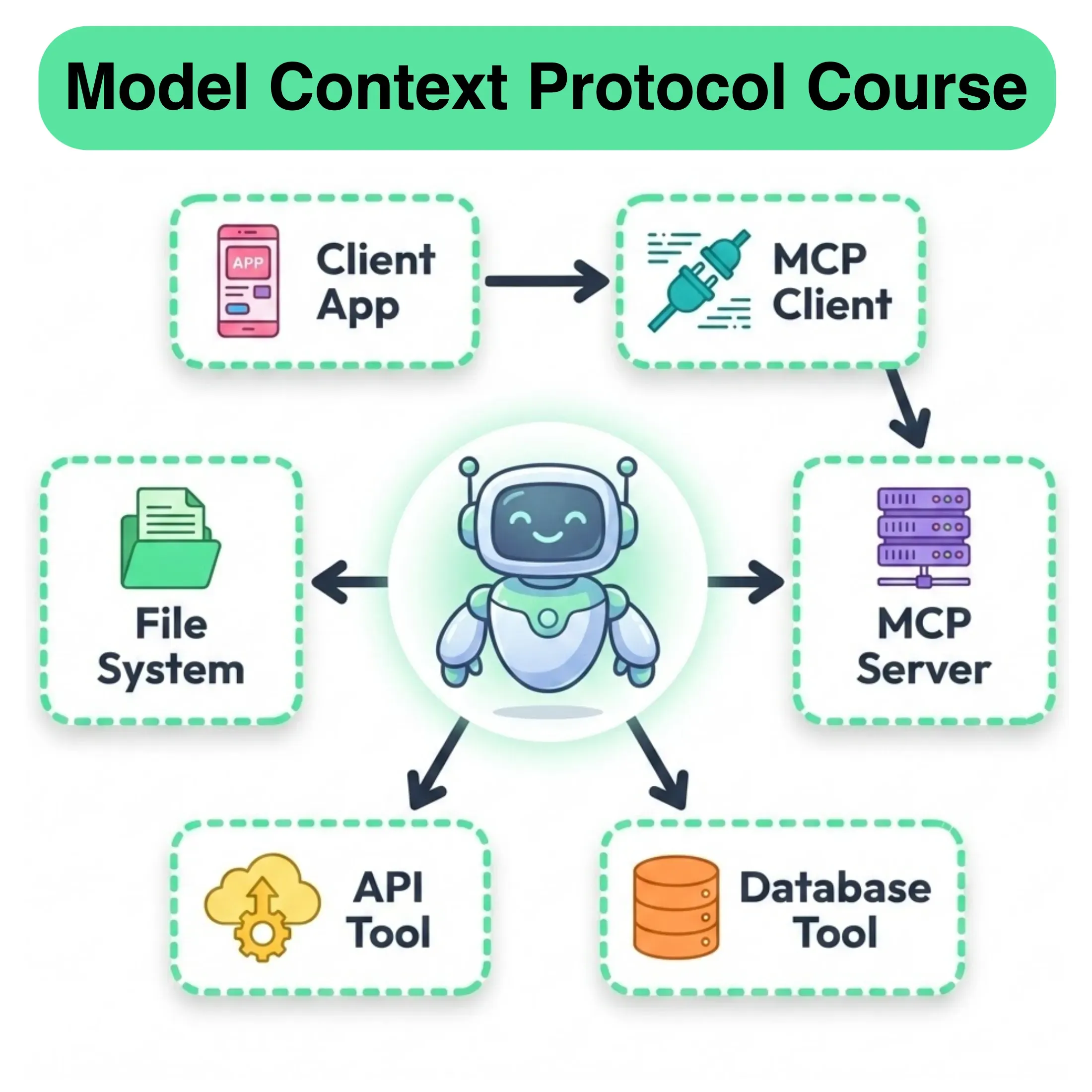

Most LLM workflows today rely on hardcoded tool integrations. These setups don’t scale, don’t adapt, and severely limit what your AI system can actually do.

The Model Context Protocol (MCP) solves this.

Think of it as the missing layer between LLMs and tools: a standard way for any model to interact with any capability, whether that is an API, a data source, a memory store, a custom function, or even another agent.

Without MCP:

- You’re stuck manually wiring every tool to every model.

- Context sharing is messy and brittle.

- Scaling beyond prototypes is painful.

With MCP:

- Models can dynamically discover and invoke tools at runtime.

- You get plug-and-play interoperability across systems like Claude, Cursor, LlamaIndex, CrewAI, and many others.

- You move from prompt engineering to systems engineering, where LLMs become orchestrators in modular, reusable, and extensible pipelines.
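To make “discover and invoke tools at runtime” concrete, here is a minimal sketch of the two JSON-RPC 2.0 methods involved, `tools/list` and `tools/call`. The in-process `handle` dispatcher and the `add` tool are hypothetical stand-ins for an MCP server; a real server exchanges these messages over a transport such as stdio or HTTP, and in practice you would use an official MCP SDK rather than hand-rolling the dispatch.

```python
import json

# Hypothetical in-process tool registry standing in for an MCP server.
# The transport (stdio, HTTP, ...) is deliberately left out of this sketch.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def handle(request: dict) -> dict:
    """Dispatch a JSON-RPC 2.0 request to tools/list or tools/call."""
    method = request["method"]
    if method == "tools/list":
        # Advertise each tool's name, description, and JSON Schema for its inputs.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        result = {"tools": tools}
    elif method == "tools/call":
        # Look up the tool by name and run it with the supplied JSON arguments.
        params = request["params"]
        value = TOOLS[params["name"]]["handler"](params["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# The client discovers the available tools at runtime instead of hardcoding them...
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
names = [t["name"] for t in listing["result"]["tools"]]

# ...then invokes one by name, passing arguments that match its inputSchema.
reply = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                "params": {"name": names[0], "arguments": {"a": 2, "b": 3}}})
print(json.dumps(reply))
```

The point of the design is that the client never needs compile-time knowledge of `add`: it learns the tool’s name and argument schema from `tools/list`, so adding a tool on the server side requires no client changes.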

This protocol is already powering real-world agentic systems.

In this crash course, you’ll learn exactly how to implement and extend it, from first principles to production use.