FireDucks vs. Pandas vs. DuckDB vs. Polars

Performance comparison.


TODAY'S ISSUE

I have been using FireDucks quite extensively lately.

For starters, FireDucks is a heavily optimized alternative to Pandas with exactly the same API as Pandas.

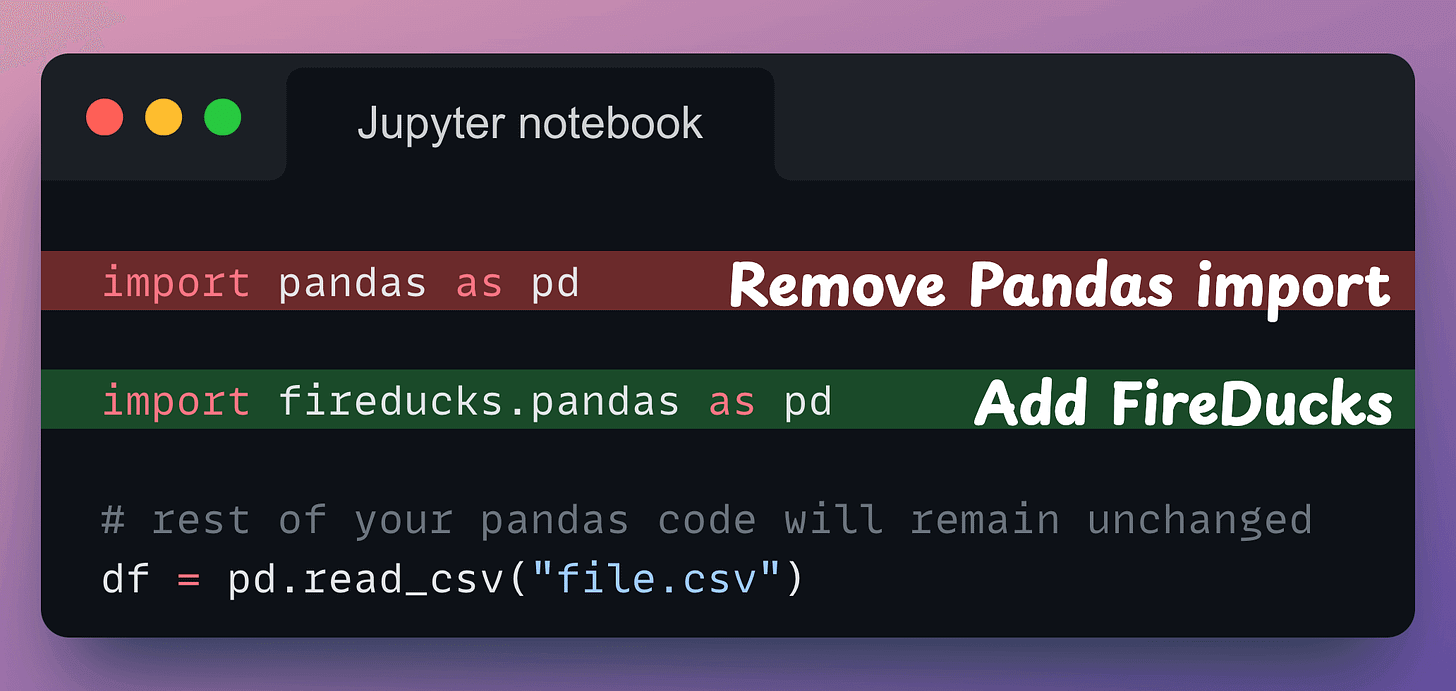

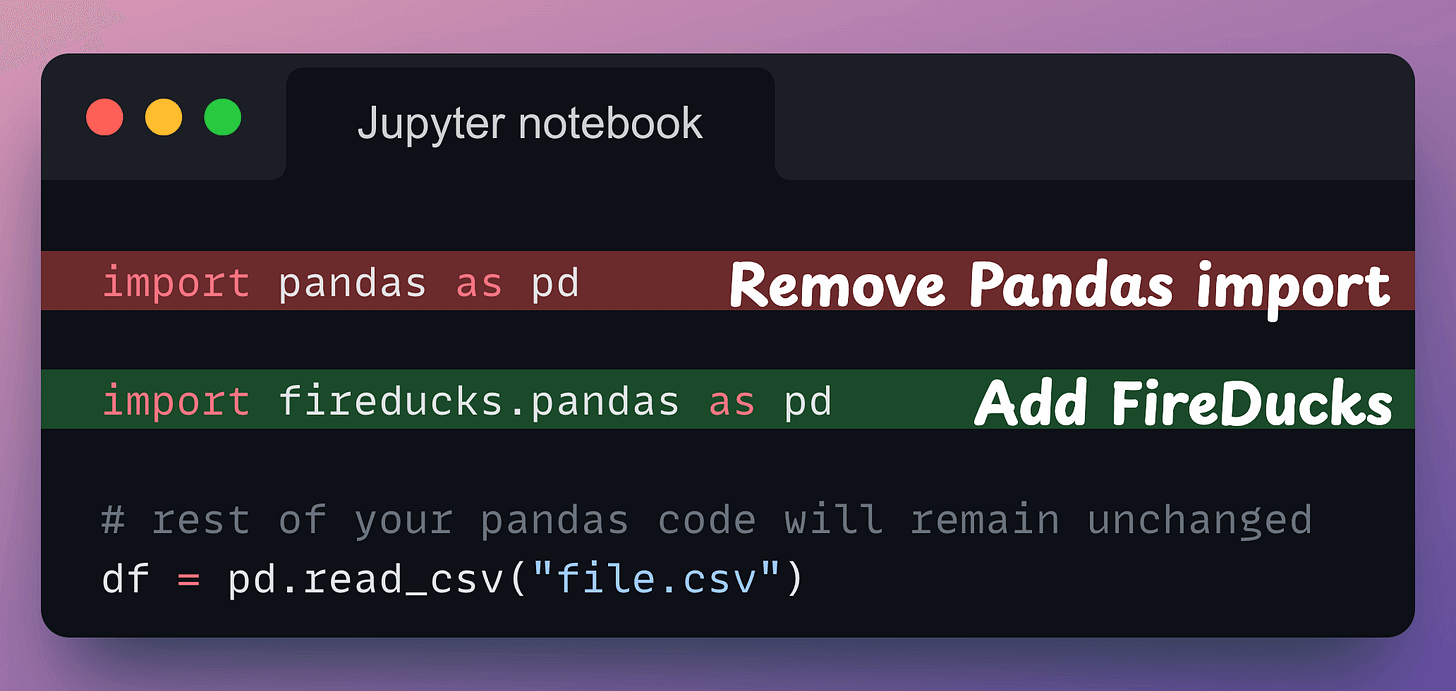

All you need to do is replace the Pandas import with the FireDucks import. That’s it.

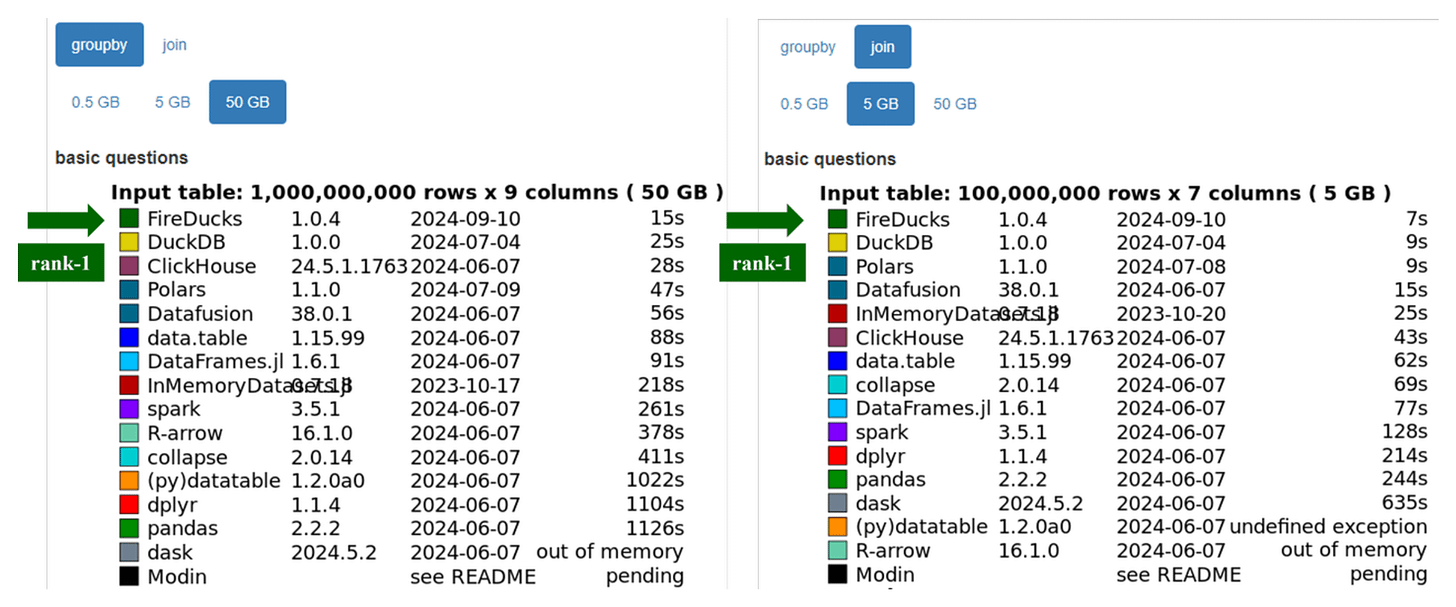

The db-benchmark includes scenarios that execute fundamental data science operations across multiple datasets. FireDucks appears to be the fastest DataFrame library for common big data operations under this benchmark:

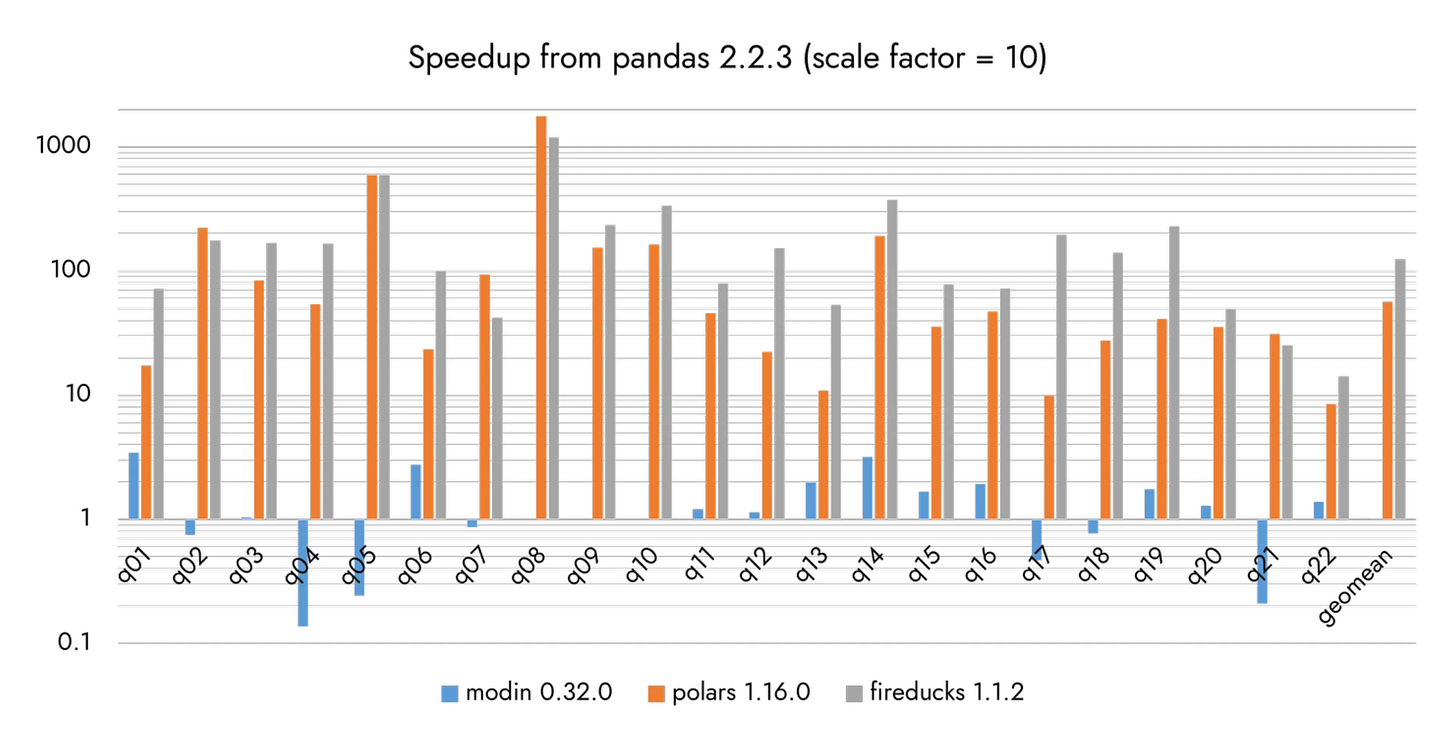

Moreover, as per TPC-H benchmarks across 22 queries:

A demo comparing the speed of FireDucks with DuckDB, Pandas, and Polars is shown in the video above.

At its core, FireDucks relies heavily on lazy execution, unlike Pandas, which executes each operation immediately.

This lets FireDucks build a logical execution plan of the whole pipeline and optimize it before anything actually runs.
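To make the idea concrete, here is a toy lazy DataFrame in plain Python. It is not FireDucks' implementation or API: `LazyFrame`, `explain`, `collect`, and the single rewrite rule used here (fusing consecutive filters into one pass over the data) are hypothetical, chosen only for illustration. Operations are merely recorded; the plan is rewritten and executed only when `collect()` is called.

```python
from dataclasses import dataclass
from typing import Any, Callable

Row = dict[str, Any]
Op = tuple[str, Any]  # ("filter", predicate) or ("select", tuple_of_columns)


def optimize(plan: list[Op]) -> list[Op]:
    """Toy optimizer: fuse consecutive filters so the rows are scanned once for them."""
    optimized: list[Op] = []
    for op, arg in plan:
        if op == "filter" and optimized and optimized[-1][0] == "filter":
            prev = optimized[-1][1]
            # Default args bind the current predicates (avoids late-binding bugs).
            optimized[-1] = ("filter", lambda r, p=prev, q=arg: p(r) and q(r))
        else:
            optimized.append((op, arg))
    return optimized


@dataclass(frozen=True)
class LazyFrame:
    source: list[Row]
    plan: tuple[Op, ...] = ()

    def filter(self, pred: Callable[[Row], bool]) -> "LazyFrame":
        # Only record the operation; nothing is computed here.
        return LazyFrame(self.source, self.plan + (("filter", pred),))

    def select(self, cols: list[str]) -> "LazyFrame":
        return LazyFrame(self.source, self.plan + (("select", tuple(cols)),))

    def explain(self, optimized: bool = True) -> list[str]:
        # Show the operation names of the raw or optimized plan.
        plan = optimize(list(self.plan)) if optimized else list(self.plan)
        return [op for op, _ in plan]

    def collect(self) -> list[Row]:
        # Execution happens only here, on the optimized plan.
        rows = self.source
        for op, arg in optimize(list(self.plan)):
            if op == "filter":
                rows = [r for r in rows if arg(r)]
            elif op == "select":
                rows = [{c: r[c] for c in arg} for r in rows]
        return rows


sales = [
    {"city": "Tokyo", "sales": 120, "year": 2023},
    {"city": "Osaka", "sales": 80, "year": 2023},
    {"city": "Tokyo", "sales": 95, "year": 2022},
]
lf = (
    LazyFrame(sales)
    .filter(lambda r: r["year"] == 2023)
    .filter(lambda r: r["sales"] > 100)
    .select(["city", "sales"])
)
print(lf.explain(optimized=False))  # ['filter', 'filter', 'select']
print(lf.explain())                 # ['filter', 'select']
print(lf.collect())                 # [{'city': 'Tokyo', 'sales': 120}]
```

An eager library would run each of the three steps as soon as it is called. Because the lazy version sees the whole chain first, it can merge the two filters before touching the data. Production engines apply many more rewrites than this single rule, but the principle is the same.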

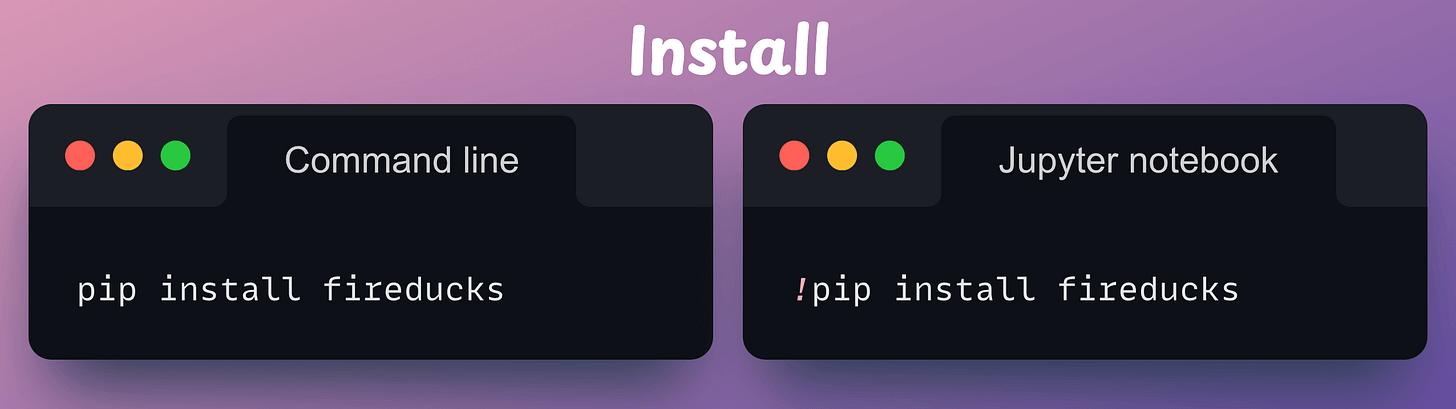

First, install the library:

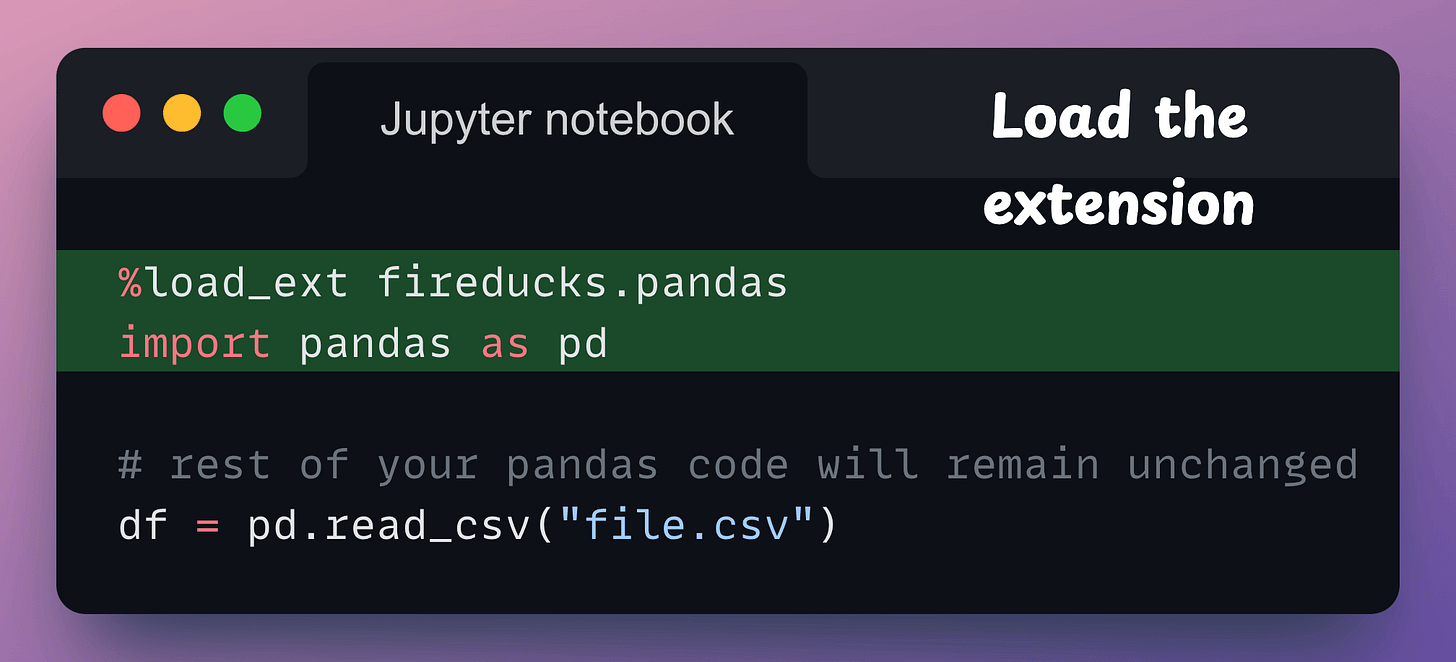

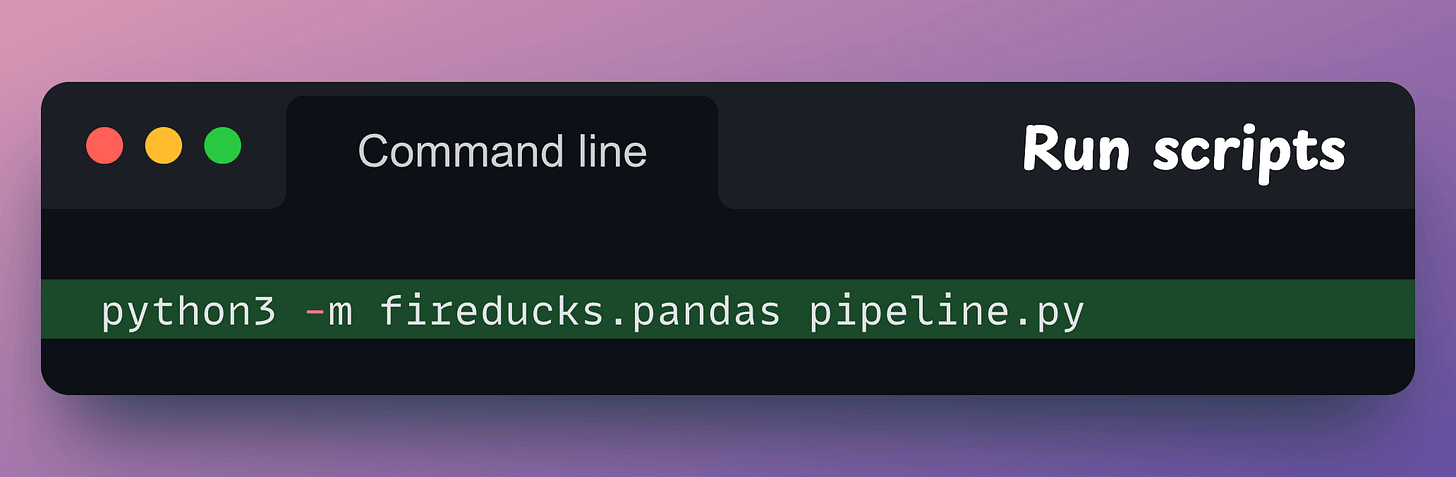

Next, there are three ways to use it:

Use FireDucks' Pandas-compatible module (fireducks.pandas), which can be imported in place of Pandas. To use FireDucks in an existing Pandas pipeline, simply replace the standard import statement with the one from FireDucks:
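A minimal sketch of the swap, assuming FireDucks has been installed. The `except ImportError` fallback to plain Pandas is our addition so the snippet also runs where FireDucks is unavailable. Everything after the import is ordinary Pandas code.

```python
try:
    import fireducks.pandas as pd  # FireDucks' Pandas-compatible module
except ImportError:
    import pandas as pd  # fallback (our addition) if FireDucks is not installed

# The rest of the pipeline stays unchanged Pandas code.
df = pd.DataFrame({"city": ["Tokyo", "Osaka", "Tokyo"], "sales": [120, 80, 95]})
totals = df.groupby("city")["sales"].sum().sort_index()
print(totals)
```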

Done!

It’s that simple to use FireDucks.

The code for the above benchmarks is available in this colab notebook.

👉 Over to you: What are some other ways to accelerate Pandas operations in general?

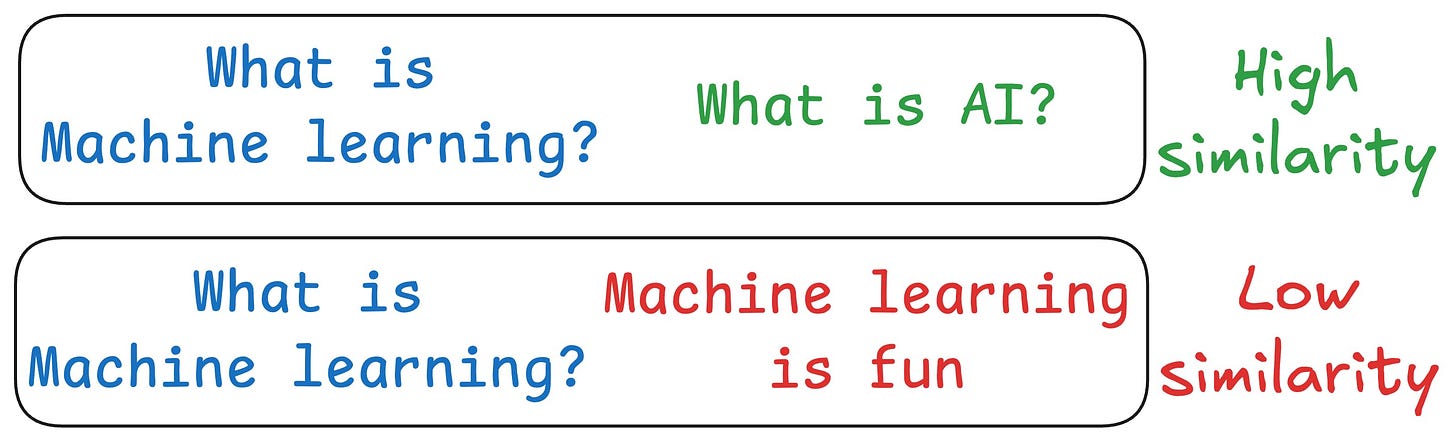

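One critical problem with traditional RAG systems is that questions are often not semantically similar to their answers.

As a result, the retrieval step can return irrelevant contexts simply because they have a higher cosine similarity to the question than the documents that actually contain the answer.

HyDE (Hypothetical Document Embeddings) solves this by retrieving with the embedding of a hypothetical answer instead of the embedding of the question itself.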
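HyDE's core idea is to ask an LLM for a hypothetical answer first, then search with that answer's embedding rather than the question's. The toy sketch below shows the effect. The bag-of-words `embed` and the canned `fake_llm` are hypothetical stand-ins for a real embedding model and LLM, and the two documents are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fake_llm(question: str) -> str:
    """Hypothetical LLM call: returns a plausible answer (it may even be inaccurate)."""
    return "The capital of France is Paris, a large city."


def retrieve(query_vec: Counter, docs: list[str]) -> str:
    # Return the document whose embedding is most similar to the query vector.
    return max(docs, key=lambda d: cosine(query_vec, embed(d)))


def hyde_retrieve(question: str, docs: list[str]) -> str:
    # 1) generate a hypothetical answer, 2) embed it, 3) search with that embedding.
    hypothetical = fake_llm(question)
    return retrieve(embed(hypothetical), docs)


docs = [
    "What is the capital of France? This question is common in geography quizzes.",
    "Paris is the capital and largest city of France.",
]
question = "What is the capital of France?"

plain = retrieve(embed(question), docs)  # picks the question-like document
hyde = hyde_retrieve(question, docs)     # picks the document with the answer
print("Plain:", plain)
print("HyDE: ", hyde)
```

The first document resembles the question, so plain retrieval ranks it highest even though it contains no answer. The hypothetical answer resembles the answer-bearing document instead, which is exactly the gap HyDE is meant to close.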

The following visual depicts how HyDE differs from traditional RAG.

We covered this in detail in a newsletter issue published last week →

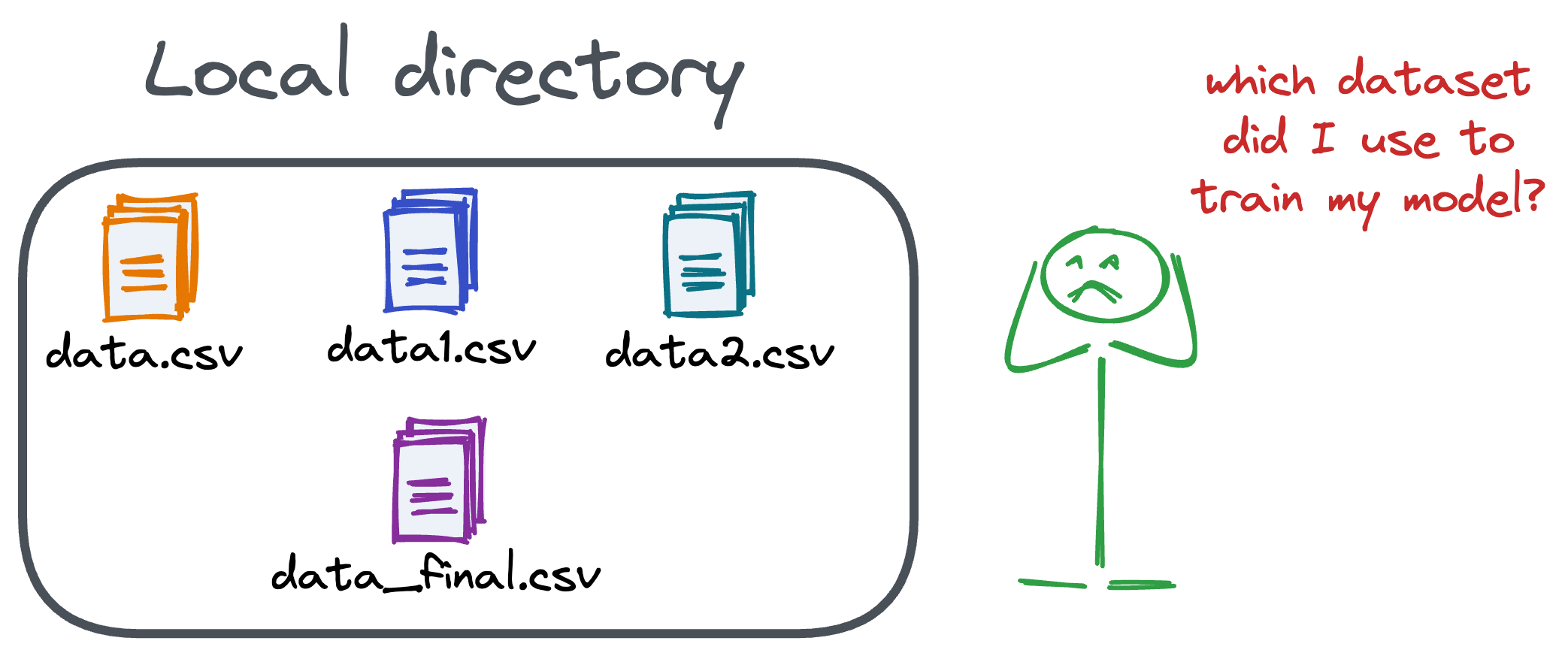

Versioning gigabytes of data is practically impossible with GitHub because it caps the size of files we can push to its remote repositories (files larger than 100 MB are blocked).

That is why Git is best suited for versioning codebases, which are primarily composed of lightweight files.

However, ML projects are not solely driven by code.

They also involve large data files, and these datasets can vary vastly across experiments.

To ensure proper reproducibility and experiment traceability, it is also necessary to version datasets.

Data version control (DVC) solves this problem.

The core idea is to pair Git with a separate version control system designed specifically for large files.
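A minimal sketch of that idea, assuming nothing about DVC's actual file formats or commands: the large file is copied into a content-addressed cache keyed by its MD5 hash, and only a tiny pointer file (the hypothetical `train.csv.ptr`) would be committed to Git. `track` and `checkout` are illustrative names, not DVC commands. Rolling back a dataset then means restoring an old pointer and copying the matching blob out of the cache.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def track(data_file: Path, cache_dir: Path) -> Path:
    """Store the file in a content-addressed cache and write a small pointer file.

    Hypothetical, simplified stand-in for what a tool like DVC does.
    """
    digest = hashlib.md5(data_file.read_bytes()).hexdigest()
    cached = cache_dir / digest[:2] / digest[2:]
    cached.parent.mkdir(parents=True, exist_ok=True)
    if not cached.exists():  # identical content is stored only once
        shutil.copy2(data_file, cached)
    pointer = data_file.with_name(data_file.name + ".ptr")
    pointer.write_text(f"md5: {digest}\npath: {data_file.name}\n")
    return pointer  # this small text file is what Git would version


def checkout(pointer: Path, cache_dir: Path) -> Path:
    """Restore the data file that a pointer refers to from the cache."""
    fields = dict(line.split(": ", 1) for line in pointer.read_text().splitlines())
    digest = fields["md5"]
    target = pointer.with_name(fields["path"])
    shutil.copy2(cache_dir / digest[:2] / digest[2:], target)
    return target


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    cache = root / "cache"
    data = root / "train.csv"

    data.write_text("x,y\n1,2\n")              # dataset version 1
    ptr_v1 = track(data, cache).read_text()    # pointer text Git would commit

    data.write_text("x,y\n1,2\n3,4\n")         # dataset changes in a new experiment
    pointer = track(data, cache)

    pointer.write_text(ptr_v1)                 # "git checkout" the old pointer
    checkout(pointer, cache)                   # restore the matching data
    restored = data.read_text()
    n_cached = sum(1 for p in cache.rglob("*") if p.is_file())

print(repr(restored))
print(n_cached)
```

In practice, DVC also pushes its cache to remote storage (for example, Amazon S3) so collaborators can fetch the exact data versions referenced by the pointers in Git.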

Here's everything you need to know (with implementation) about building 100% reproducible ML projects →