Advanced Techniques to Build Robust Agentic Systems (Part B)

AI Agents Crash Course—Part 6 (with implementation).

Introduction

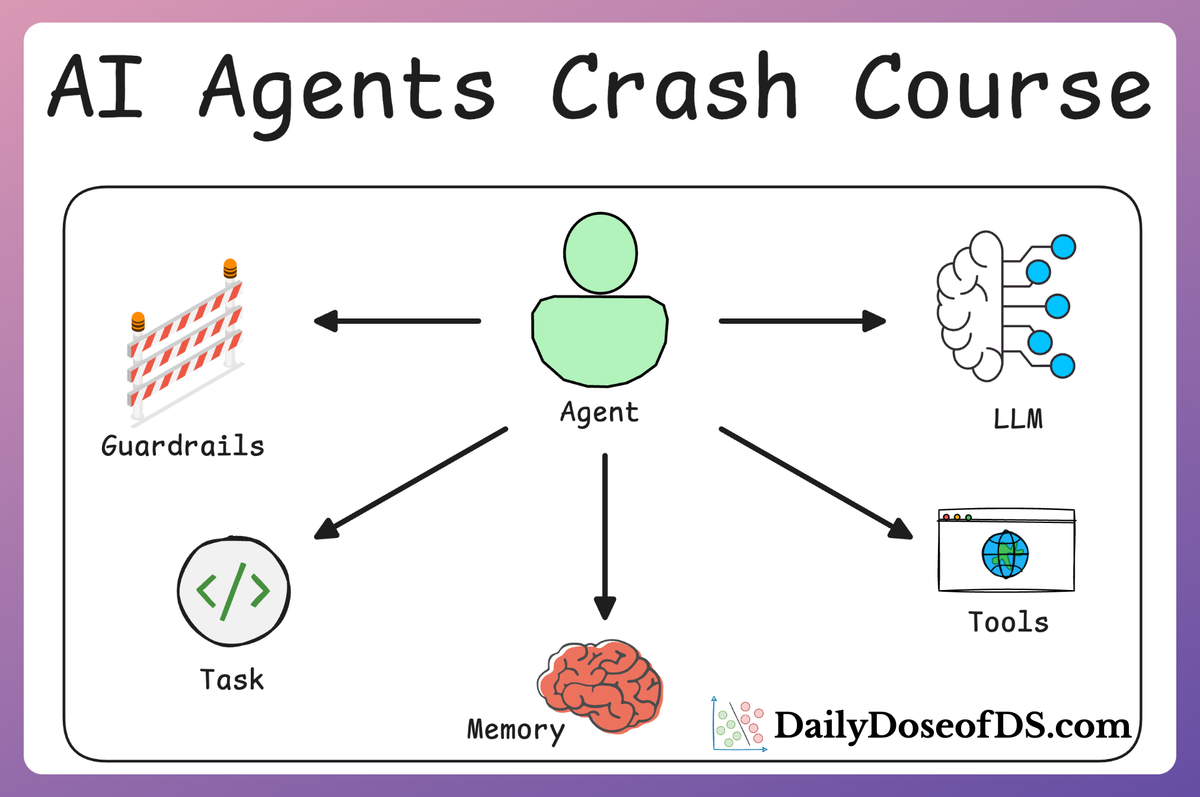

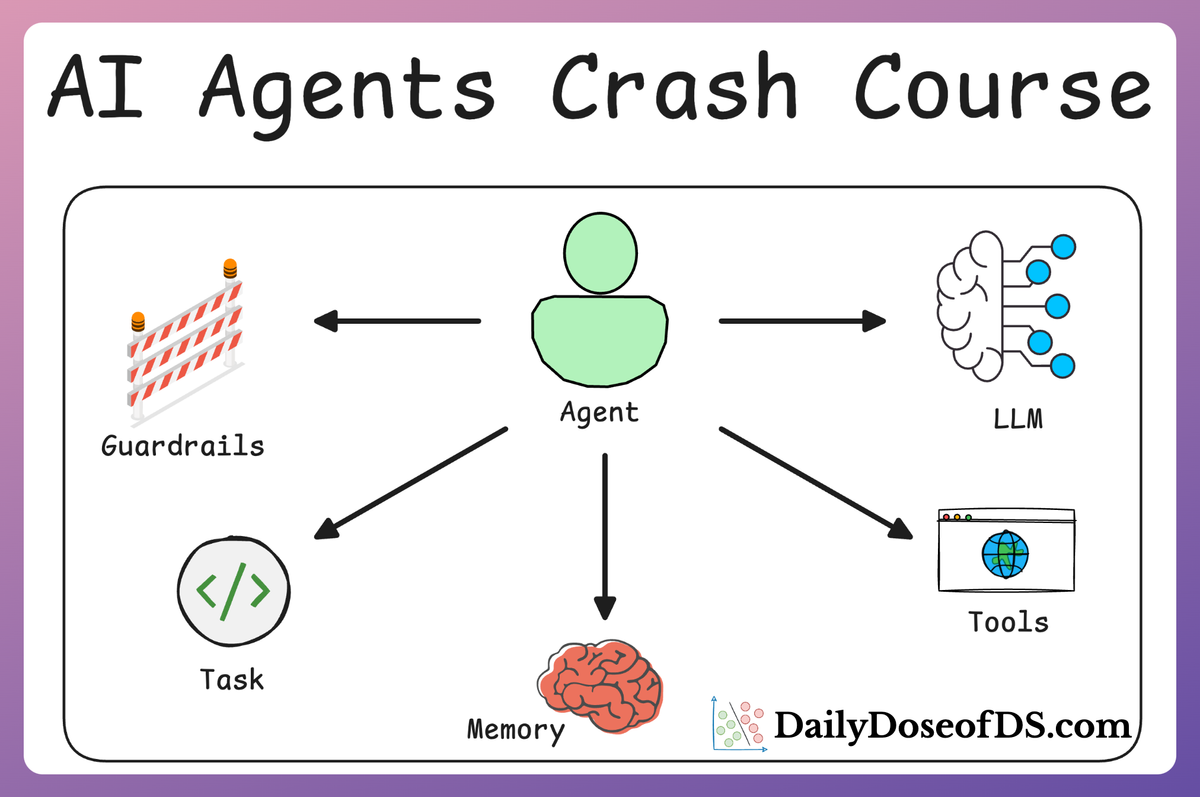

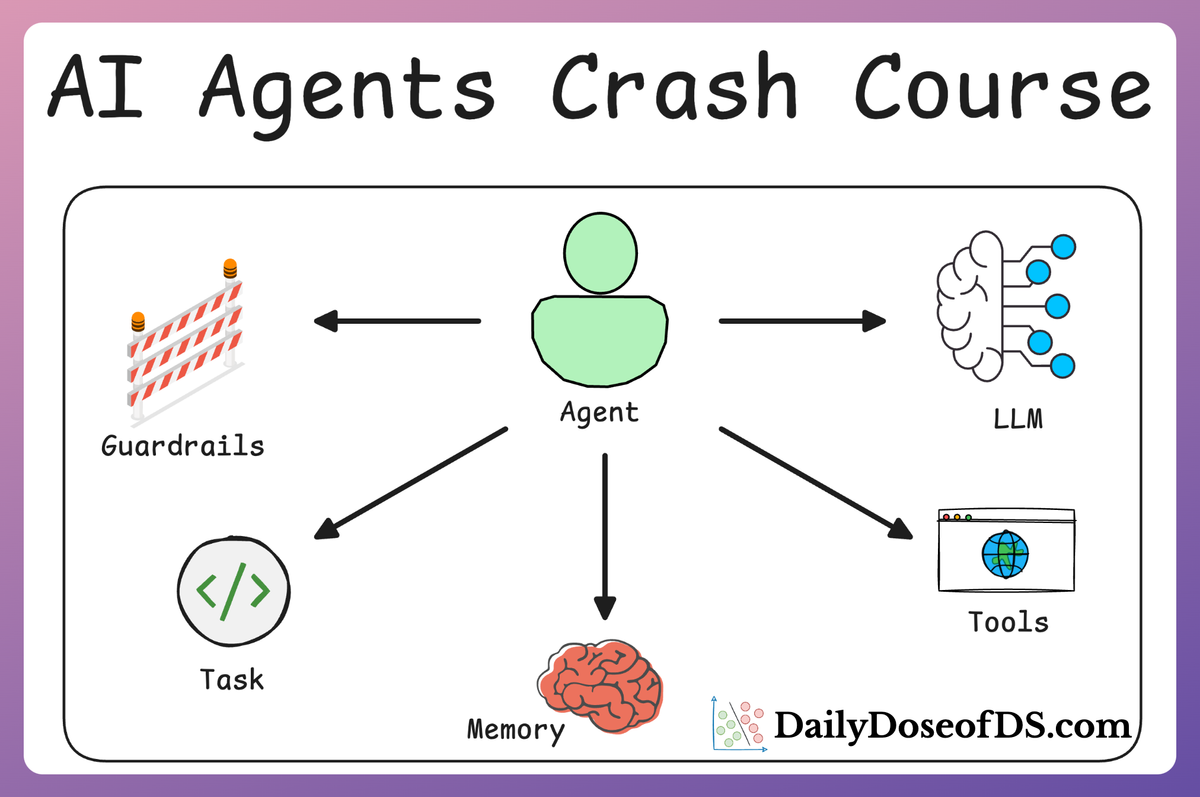

In Part 5, we moved on to advanced techniques that make AI agents more robust, dynamic, and adaptable, including:

- Guardrails → Enforcing constraints to ensure agents produce reliable and expected outputs.

- Referencing other Tasks and their outputs → Allowing agents to dynamically use previous task results.

- Executing tasks async → Running agent tasks concurrently to optimize performance.

- Adding callbacks → Allowing post-processing or monitoring of task completions.

- Human-in-the-loop during execution → Pausing execution so a human can validate outputs and steer the agents.

- Hierarchical Agentic processes → Structuring agents into sub-agents and multi-level execution trees for more complex workflows.

- Multimodal Agents → Extending CrewAI agents to handle text, images, audio, and beyond.

- And more.

We split these topics into two parts so the material stays digestible and you are not overwhelmed with details.

We covered the first half in Part 5. In Part 6 (this article), we cover the rest.

Let's dive in!

On a side note:

If you haven't read Part 1 to Part 5 yet, we highly recommend doing so before moving ahead.

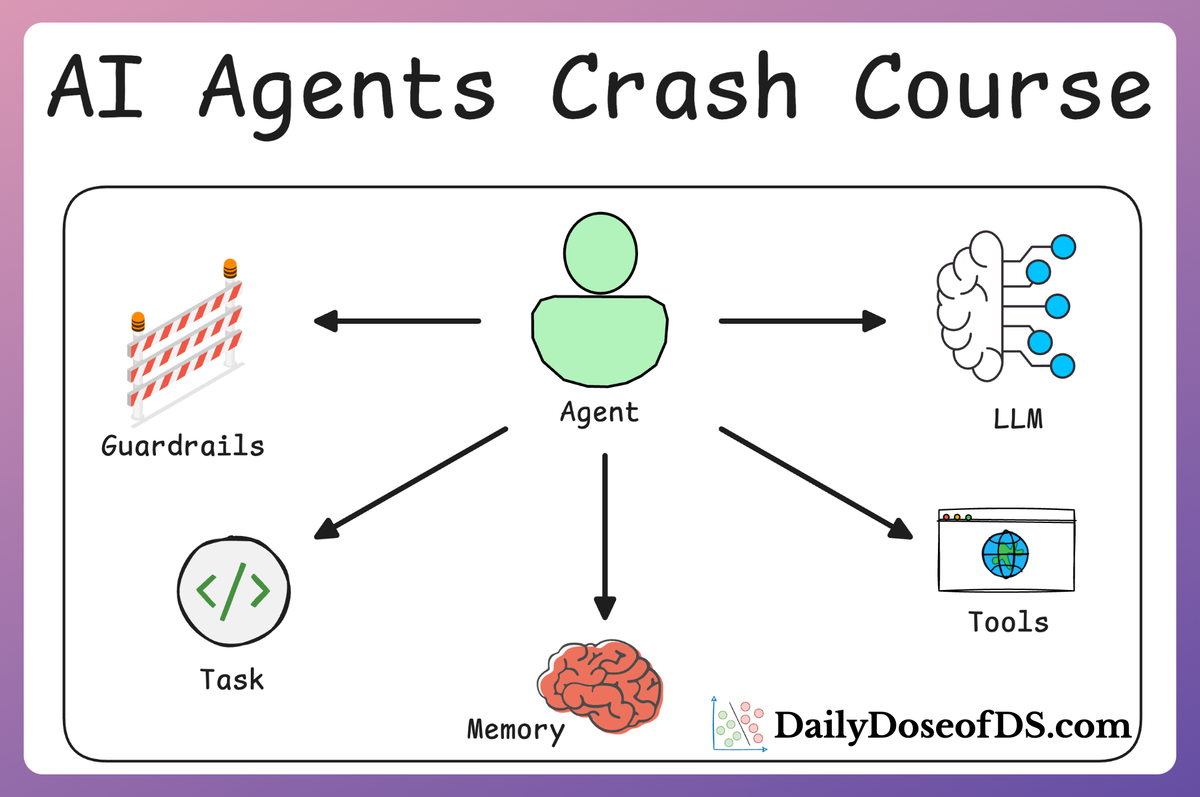

- In Part 1, we covered the fundamentals of Agentic systems, understanding how AI agents can act autonomously to perform structured tasks.

- In Part 2, we explored how to extend Agent capabilities by integrating custom tools, using structured tools, and building modular Crews to compartmentalize responsibilities.

- In Part 3, we focused on Flows, learning about state management, flow control, and integrating a Crew into a Flow. As discussed there, Flows let you create structured, event-driven workflows that connect multiple tasks, manage state, and control the flow of execution in your AI applications.

- In Part 4, we extended these concepts into real-world multi-agent, multi-crew Flow projects, demonstrating how to automate complex workflows such as content planning and book writing.

Now, in Parts 5 and 6, we move on to advanced techniques that make AI agents more robust, dynamic, and adaptable.

Installation and setup

Throughout this crash course, we have been using CrewAI, an open-source framework that makes it easy to orchestrate role-playing agents, define their goals, integrate tools, and plug in any popular LLM to build autonomous AI agents.

Notably, CrewAI is a standalone framework with no dependency on LangChain or other agent frameworks.


To get started, install CrewAI from PyPI, for example with `pip install crewai`.
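
After installing, a quick sanity check is to ask Python whether the `crewai` package is importable and which version is installed. The sketch below uses only the standard library (`importlib.util.find_spec` and `importlib.metadata.version`). The helper name `installed_version` is our own hypothetical choice, and it assumes the distribution name matches the import name, which holds for `crewai`.

```python
import importlib.metadata
import importlib.util
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not available."""
    # find_spec returns None when no top-level module with this name can be found.
    if importlib.util.find_spec(package) is None:
        return None
    try:
        # Assumes the distribution name equals the import name (true for crewai).
        return importlib.metadata.version(package)
    except importlib.metadata.PackageNotFoundError:
        # Importable module without distribution metadata (e.g. a stdlib module).
        return None


if __name__ == "__main__":
    version = installed_version("crewai")
    if version is None:
        print("CrewAI is not installed yet. Try: pip install crewai")
    else:
        print(f"CrewAI {version} is installed")
```

If the check prints a version, the package is ready to import in your scripts and notebooks.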

As in the RAG crash course, we will use Ollama to serve LLMs locally. That said, CrewAI also integrates with many LLM providers, such as:

- OpenAI

- Gemini

- Groq

- Azure

- Fireworks AI

- Cerebras

- SambaNova

- and many more.

To set up OpenAI, create a .env file in the current directory and specify your OpenAI API key as follows:

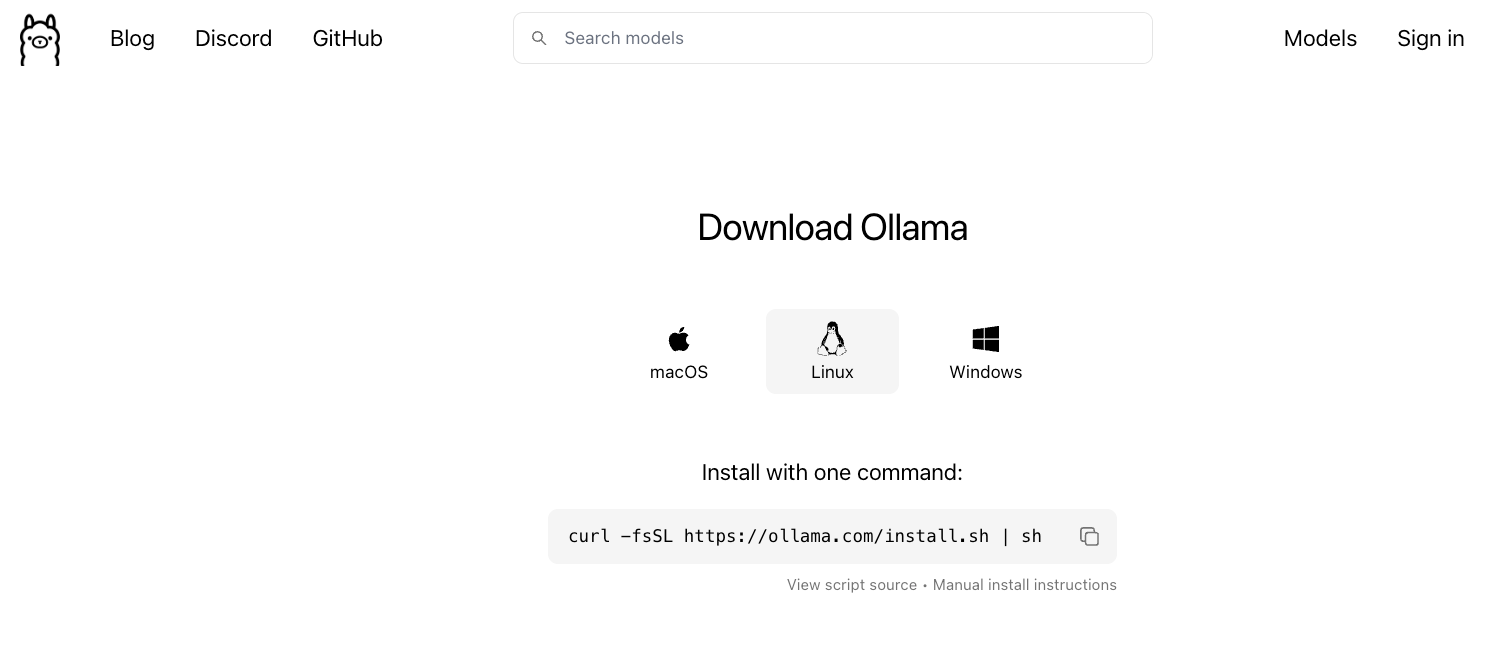
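
A `.env` file is just a list of `KEY=value` lines, such as `OPENAI_API_KEY=...`. Libraries like python-dotenv usually load it for you. Purely to illustrate what that loading amounts to, here is a minimal standard-library sketch. The function `load_env_file` is hypothetical and deliberately ignores richer syntax such as multi-line values or variable expansion.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env", override: bool = False) -> dict:
    """Parse simple KEY=value lines from `path` and export them to os.environ.

    Blank lines and '#' comments are skipped, and one pair of matching quotes
    around a value is stripped. Variables already present in the environment
    are kept unless override=True. Returns everything that was parsed.
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.is_file():
        return loaded  # nothing to load
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip()
        # Remove one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in ("'", '"'):
            value = value[1:-1]
        loaded[key] = value
        if override or key not in os.environ:
            os.environ[key] = value
    return loaded


if __name__ == "__main__":
    load_env_file()
    print("OPENAI_API_KEY set:", "OPENAI_API_KEY" in os.environ)
```

Keeping existing variables by default mirrors a common convention: values exported in your shell win over the file, which is handy when switching keys temporarily.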

To use Ollama instead, follow these steps:

- Go to Ollama.com, select your operating system, and follow the instructions.

- If you are using Linux, you can install it with the official script: `curl -fsSL https://ollama.com/install.sh | sh`

- Ollama supports many models, all of which are listed in its model library at ollama.com/library.

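Once you've found the model you're looking for, run it from your terminal. For Llama 3.2, that is `ollama run llama3.2`, which downloads the model on first use and then starts an interactive session.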

The command downloads the model first, so give it some time to complete. Once it's done, you'll have Llama 3.2 3B running locally. The figure below shows the same workflow with Microsoft's Phi-3 served locally through Ollama:

That said, for our demo, we will run the Llama 3.2 1B model instead (`ollama pull llama3.2:1b`), since it is smaller and needs less memory.
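
Once the model is pulled, Ollama serves it over a local HTTP API, by default at `http://localhost:11434`. As a smoke test independent of CrewAI, the sketch below sends one prompt to Ollama's `/api/generate` endpoint using only the standard library. It assumes the default port and the `llama3.2:1b` tag, so adjust both if your setup differs. The helper names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address (assumption: unchanged)
MODEL = "llama3.2:1b"  # tag assumed for the Llama 3.2 1B model


def build_generate_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = MODEL, timeout: float = 60.0) -> str:
    """Send `prompt` to the local model and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama replies with one JSON object; "response" holds the text.
    return body["response"]


if __name__ == "__main__":
    try:
        print(generate("In one sentence, what is an AI agent?"))
    except OSError as exc:  # URLError, HTTPError, and timeouts all subclass OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

Setting `"stream": False` keeps the example simple. By default Ollama streams the answer as a sequence of JSON lines, which is nicer for interactive use but needs incremental parsing.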

Done!

Everything is set up now and we can move on to building robust agentic workflows.
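
Before moving on, you may want to confirm that the Ollama server is reachable and that the model you pulled is actually installed. Ollama's `/api/tags` endpoint lists locally installed models. The sketch below queries it and checks for the `llama3.2:1b` tag, an assumption matching the model used above. The function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption: unchanged)


def parse_model_names(tags_json: str) -> list:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(tags_json)
    # The body looks like {"models": [{"name": "llama3.2:1b", ...}, ...]}.
    return [model["name"] for model in data.get("models", [])]


def local_models(timeout: float = 5.0) -> list:
    """Return the names of models installed in the local Ollama instance."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        names = local_models()
    except OSError as exc:  # connection refused, timeouts, HTTP errors
        print(f"Ollama does not seem to be running: {exc}")
    else:
        wanted = "llama3.2:1b"
        status = "available" if wanted in names else "missing; run: ollama pull llama3.2:1b"
        print(f"{wanted}: {status}")
        print("Installed models:", ", ".join(names) or "(none)")
```

Separating the parsing from the network call makes the parsing logic easy to test without a running server.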

You can download the code for this article below: