A Practical Deep Dive Into Memory for Agentic Systems (Part A)

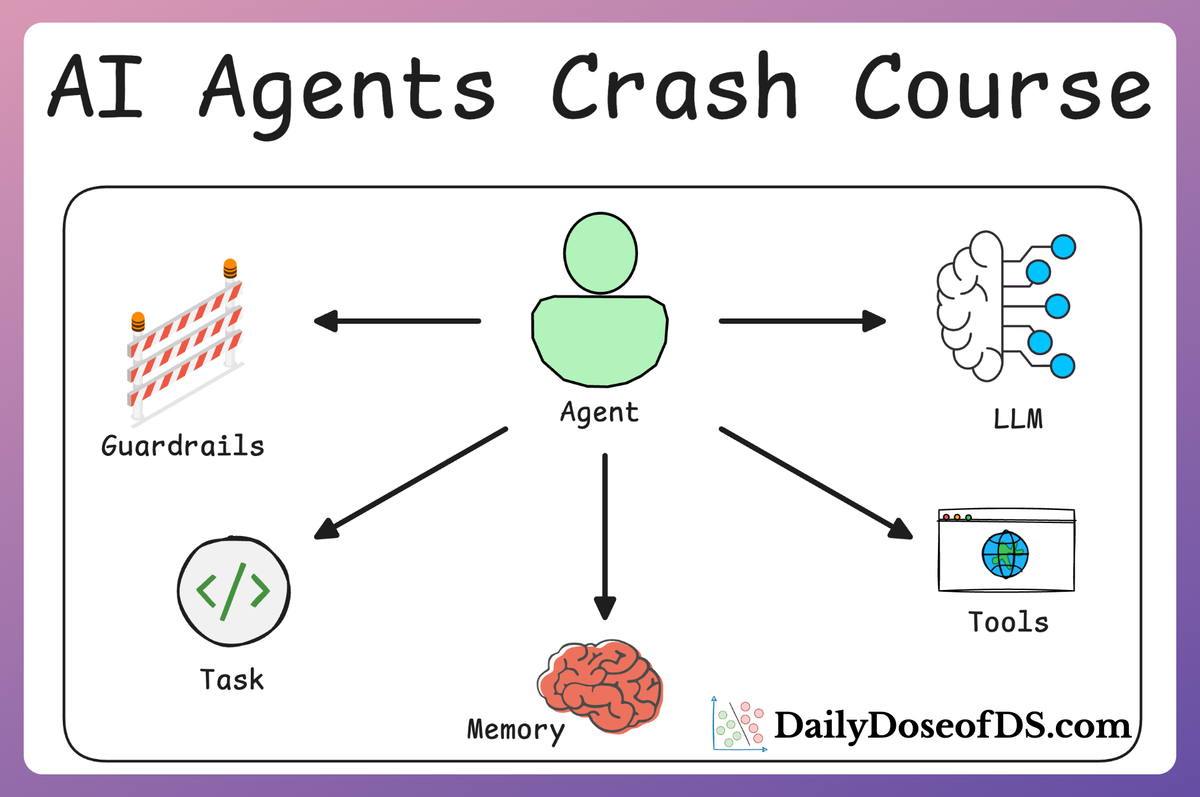

AI Agents Crash Course—Part 8 (with implementation).

Introduction

So far in this AI Agent crash course series, we’ve built systems that can:

- Collaborate across multiple crews and tasks.

- Use guardrails, callbacks, and structured outputs.

- Handle multimodal inputs like images.

- Reference outputs of previous tasks.

- Work asynchronously and under human supervision.

We’ve also explored how to equip agents with Knowledge, allowing them to access internal documentation, structured datasets, or any domain-specific reference material needed to complete their tasks.

However, all of this still leaves one major gap…

So far, our agents have mostly been stateless. They could access tools, reference documents, or perform tasks—but they didn’t remember anything unless we passed it into their context explicitly.

What if you want your agents to:

- Recall something a user told them earlier in the conversation?

- Accumulate learnings across different sessions?

- Personalize answers for individual users based on their past behavior?

- Maintain facts about entities (like customers, projects, or teams) across workflows?

Memory solves this!

In this part, we’ll explore how to:

- Use Short-Term Memory to retain and retrieve recent interaction context.

- Use Long-Term Memory to accumulate experience across sessions.

- Use Entity Memory to track and recall facts about specific people or objects.

- Use User Memory to personalize interactions.

- Reset, share, and manage memory across sessions and crews.

Everything will be demonstrated using real examples, so you will not only understand how memory works in CrewAI but also learn how to use it to make your agents more context-aware and capable.

Let’s dive in.

A quick note

In case you are new here...

Over the past seven parts of this crash course, we have progressively built our understanding of Agentic Systems and multi-agent workflows with CrewAI.

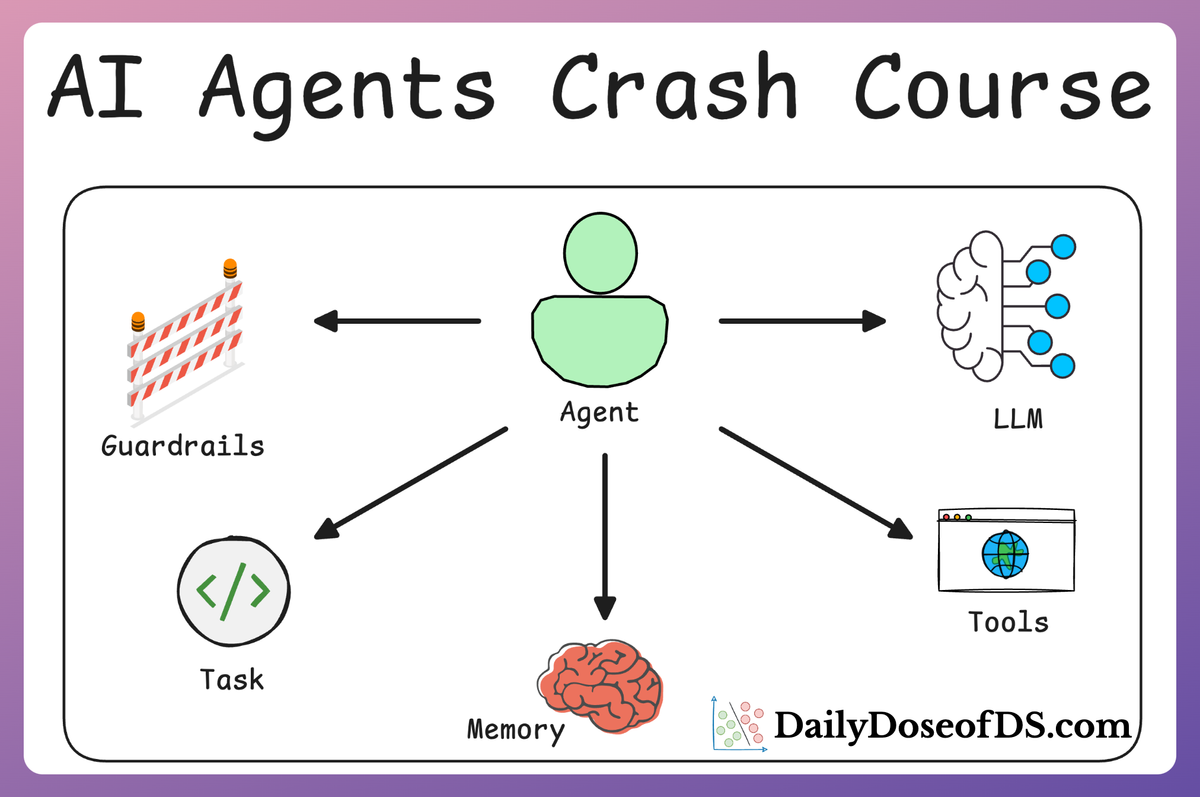

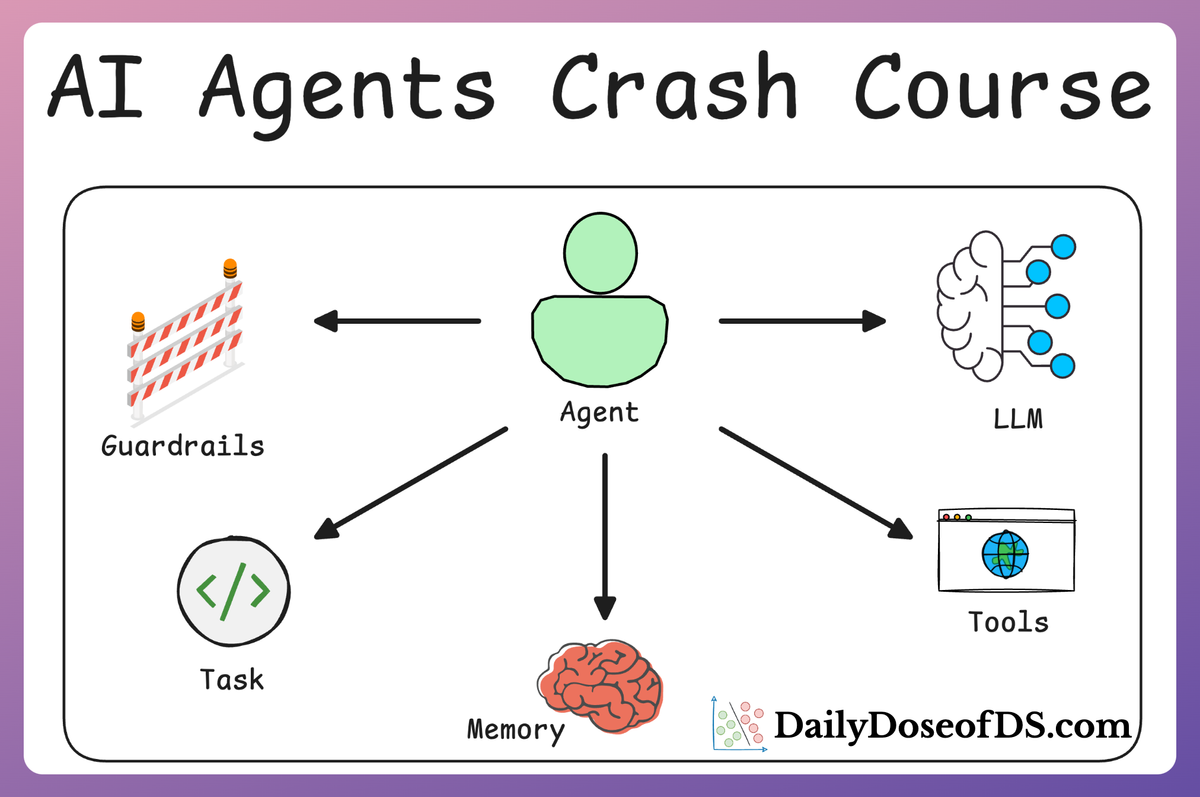

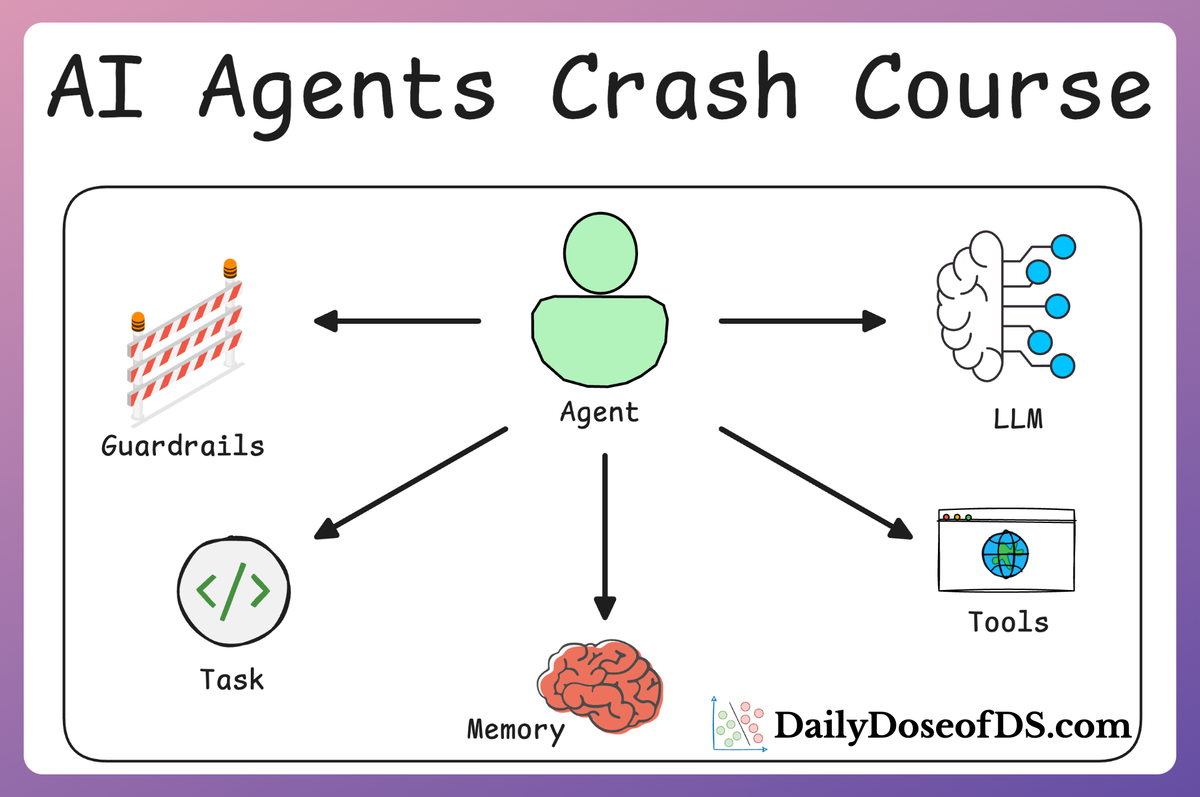

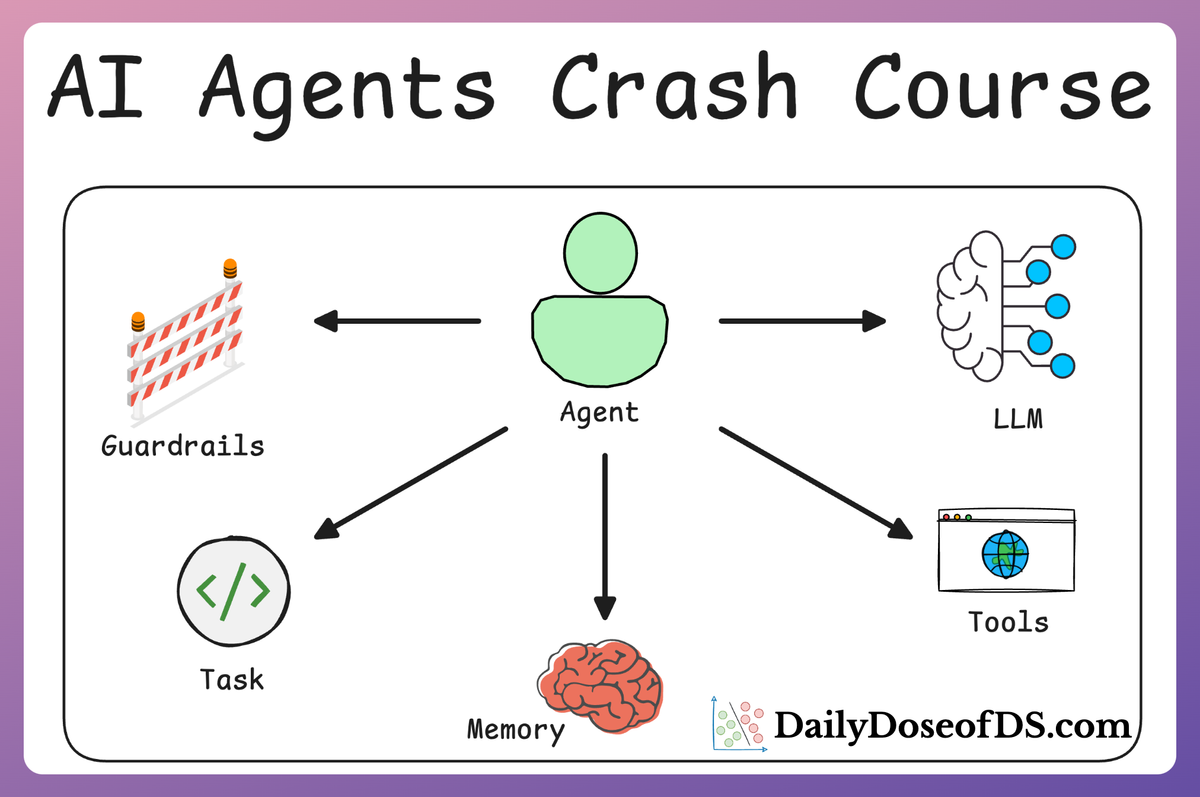

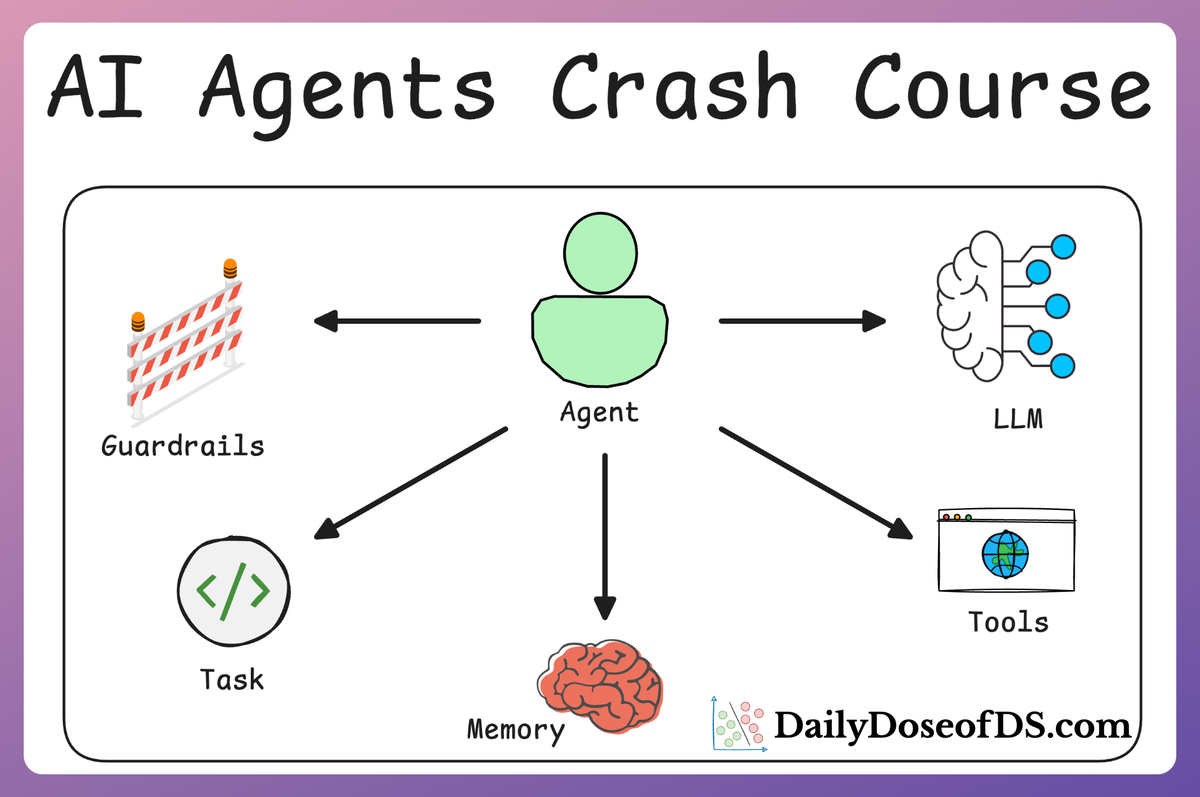

- In Part 1, we covered the fundamentals of Agentic systems, understanding how AI agents can act autonomously to perform structured tasks.

- In Part 2, we explored how to extend Agent capabilities by integrating custom tools, using structured tools, and building modular Crews to compartmentalize responsibilities.

- In Part 3, we focused on Flows, learning about state management, flow control, and integrating a Crew into a Flow. With Flows, you can create structured, event-driven workflows that connect multiple tasks, manage state, and control the flow of execution in your AI applications.

- In Part 4, we extended these concepts into real-world multi-agent, multi-crew Flow projects, demonstrating how to automate complex workflows such as content planning and book writing.

- In Parts 5 and 6, we moved into advanced techniques that make AI agents more robust, dynamic, and adaptable:

  - Guardrails → Enforcing constraints to ensure agents produce reliable and expected outputs.

  - Referencing other tasks and their outputs → Allowing agents to dynamically use previous task results.

  - Executing tasks asynchronously → Running agent tasks concurrently to optimize performance.

  - Adding callbacks → Allowing post-processing or monitoring of task completions.

  - Human-in-the-loop during execution → Adding human validation and control points to a running workflow.

  - Hierarchical agentic processes → Structuring agents into sub-agents and multi-level execution trees for more complex workflows.

  - Multimodal agents → Extending CrewAI agents to handle text, images, audio, and beyond.

  - and more.

- In Part 7, we learned about embedding Knowledge into agentic systems to augment them with external reference material. This was like giving an agent a library of context that it can search and use while performing a task.

What is memory?

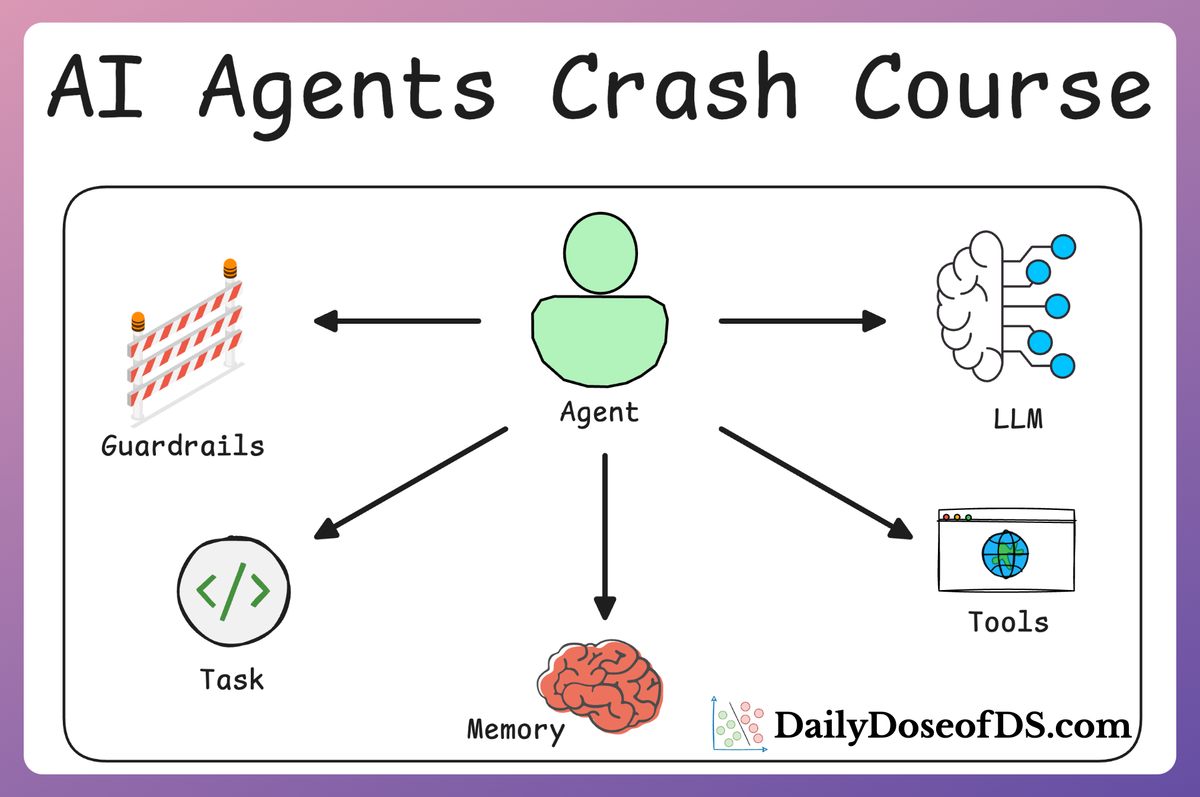

In an agentic system like CrewAI, memory is the mechanism that allows an AI agent to remember information from past interactions, ensuring continuity and learning over time.

This differs from an agent’s knowledge and tools, which we have already discussed in previous parts.

- Knowledge usually refers to general or static information the agent has access to (like a knowledge base or facts from training data), whereas memory is contextual and dynamic—it’s the data an agent stores during its operations (e.g. conversation history, user preferences).

- Tools, on the other hand, let an agent fetch or calculate information on the fly (e.g. web search or calculators) but do not inherently remember those results for future queries. Memory fills that gap by retaining relevant details the agent can draw upon later, beyond what’s in its static knowledge.
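To make the distinction concrete, here is a minimal, framework-free sketch. It is not CrewAI code: `KNOWLEDGE`, `days_between_tool`, `ToyAgent`, and the refund scenario are all hypothetical names and data made up for illustration. Knowledge is fixed before the run, the tool computes on demand and keeps nothing, and memory is written while the agent operates.

```python
from dataclasses import dataclass, field

# Knowledge: static reference material, available before the agent runs.
# (Hypothetical value, for illustration only.)
KNOWLEDGE = {"refund_window_days": 30}


def days_between_tool(start_day: int, end_day: int) -> int:
    """A tool: computes an answer on demand and retains nothing afterwards."""
    return end_day - start_day


@dataclass
class ToyAgent:
    # Memory: dynamic data the agent writes during its own operation.
    memory: list[str] = field(default_factory=list)

    def handle_refund_request(self, purchase_day: int, today: int) -> str:
        elapsed = days_between_tool(purchase_day, today)            # tool call
        allowed = elapsed <= KNOWLEDGE["refund_window_days"]        # knowledge lookup
        decision = "approved" if allowed else "denied"
        self.memory.append(f"refund {decision} after {elapsed} days")  # memory write
        return decision


agent = ToyAgent()
decision = agent.handle_refund_request(purchase_day=1, today=11)
```

After the call, `KNOWLEDGE` is unchanged and the tool holds no state, but `agent.memory` now contains a record of what happened that a later step could draw on.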

But why does memory even matter?

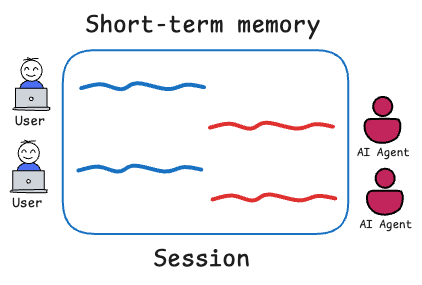

Memory enables agents to maintain continuity across multi-step tasks or conversations, personalize responses based on user-specific details, and adapt by learning from experience.

Think of it this way.

Imagine you have an agentic system deployed in production.

If this system is running without memory, every interaction is a blank slate.

It doesn’t matter if the user told the agent their name five seconds ago—it’s forgotten.

If the agent helped troubleshoot an issue in the last session, it won’t remember any of it now.

This leads to several problems:

- Users have to repeat themselves constantly.

- Agents lose context between steps of a multi-turn task.

- Personalization becomes impossible.

- Agents can't "learn" from their past experiences, even within the same session.

In contrast, with memory, your agent becomes context-aware.

It can remember facts like:

- “You like to eat pizza without capsicum.”

- “This ticket is about login errors in the mobile app.”

- “We’ve already generated a draft for this topic.”

- “The user rejected the last suggestion. Don’t propose it again.”

In other words, an agent with memory can recall what you asked or told it earlier, or remember your name and preferences in a subsequent session.
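The contrast between a blank-slate agent and a context-aware one can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not how CrewAI implements memory: `stateless_reply` and `MemoryAgent` are hypothetical names, and simple string matching stands in for an LLM.

```python
from dataclasses import dataclass, field


def stateless_reply(message: str) -> str:
    """Answers from the current message only; nothing carries over between calls."""
    text = message.lower()
    if "my name is" in text:
        name = message.rsplit(" ", 1)[-1].strip(".!")
        return f"Nice to meet you, {name}."
    if "what is my name" in text:
        return "I don't know your name."
    return "Okay."


@dataclass
class MemoryAgent:
    """Hypothetical toy agent that keeps facts between turns."""
    facts: dict[str, str] = field(default_factory=dict)

    def reply(self, message: str) -> str:
        text = message.lower()
        if "my name is" in text:
            name = message.rsplit(" ", 1)[-1].strip(".!")
            self.facts["name"] = name  # remember the fact for later turns
            return f"Nice to meet you, {name}."
        if "what is my name" in text:
            name = self.facts.get("name")
            return f"Your name is {name}." if name else "I don't know your name."
        return "Okay."


# Stateless: the second call has no access to the first.
stateless_reply("My name is Priya.")
forgotten = stateless_reply("What is my name?")

# With memory: the fact stored in turn one is available in turn two.
agent = MemoryAgent()
agent.reply("My name is Priya.")
remembered = agent.reply("What is my name?")
```

Here `forgotten` is the "I don't know" answer, while `remembered` contains the name, because only `MemoryAgent` keeps state between calls.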

Here are the key benefits of integrating a robust memory system into agents:

- Context retention: The agent can carry on a coherent dialogue or workflow, referring back to earlier parts of the conversation without re-providing all details. This makes multi-turn interactions more natural and consistent.

- Personalization: The agent can store user-specific information (like a user’s name, past queries, or preferences) and use it to tailor future responses for that particular user.

- Continuous learning: By remembering outcomes and facts from previous runs, the agent accumulates experience. Over time, it can improve decision-making or avoid repeating mistakes by referencing what it learned earlier.

- Collaboration: In a multi-agent “crew,” memory allows one agent to leverage information discovered by another agent in a prior step, improving teamwork efficiency.

CrewAI, specifically, provides a structured memory architecture with several built-in memory types (we'll discuss each in detail shortly):

- Short-Term Memory for maintaining immediate context and coherence within a session.

- Long-Term Memory for accumulating knowledge and experience across sessions.

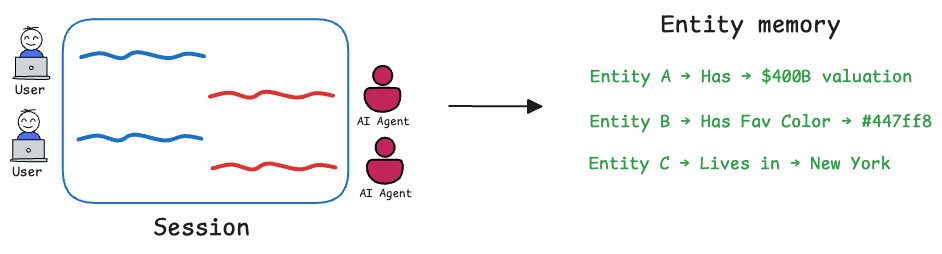

- Entity Memory for tracking information about specific entities and ensuring consistency when those entities are referenced later.

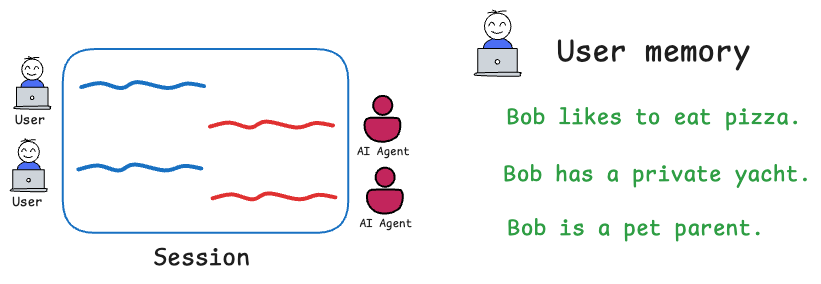

- User Memory for personalization, keeping track of individual users’ details so interactions can be tailored.

Each of these serves a unique purpose in helping agents “remember” and utilize past information.
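As a rough illustration of the difference in scope between session-level and cross-session memory, the sketch below keeps short-term notes in a plain list that disappears when the session ends, and long-term notes in a SQLite file that survives. The names `PersistentNotes` and `run_session` are hypothetical, and this makes no claim about the storage backends CrewAI itself uses.

```python
import os
import sqlite3
import tempfile


class PersistentNotes:
    """Hypothetical long-term store: notes survive across sessions on disk."""

    def __init__(self, path: str) -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute("CREATE TABLE IF NOT EXISTS notes (note TEXT)")

    def save(self, note: str) -> None:
        with self.conn:  # commits the insert on success
            self.conn.execute("INSERT INTO notes (note) VALUES (?)", (note,))

    def all(self) -> list[str]:
        rows = self.conn.execute("SELECT note FROM notes ORDER BY rowid")
        return [row[0] for row in rows]

    def close(self) -> None:
        self.conn.close()


def run_session(db_path: str, new_note: str) -> tuple[list[str], list[str]]:
    """Simulates one session; returns (this session's notes, notes recalled from earlier sessions)."""
    session_notes: list[str] = []         # short-term: gone when the session ends
    long_term = PersistentNotes(db_path)  # long-term: backed by a file
    recalled = long_term.all()            # what earlier sessions left behind
    session_notes.append(new_note)
    long_term.save(new_note)
    long_term.close()
    return session_notes, recalled


db_path = os.path.join(tempfile.mkdtemp(), "long_term.db")
first = run_session(db_path, "Draft for topic A was rejected.")
second = run_session(db_path, "Draft for topic B was accepted.")
```

The first session recalls nothing, while the second session starts with the note saved by the first. Neither session sees the other's short-term list.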

Unlike simply increasing an LLM’s context window, these memory systems store information outside the prompt and retrieve only the relevant pieces when needed, which extends an agent’s effective recall without growing the prompt indefinitely.
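The idea of retrieving only what is relevant, rather than placing the entire history in the prompt, can be sketched as follows. This is a simplified stand-in: `ToyMemoryStore` and `tokenize` are hypothetical names, and word overlap is used here in place of the embedding-based similarity search that production memory systems typically rely on.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ToyMemoryStore:
    """Hypothetical store: save everything, retrieve only what is relevant."""

    def __init__(self) -> None:
        self._items: list[str] = []

    def save(self, text: str) -> None:
        self._items.append(text)

    def search(self, query: str, limit: int = 2) -> list[str]:
        query_words = tokenize(query)
        scored = [(len(query_words & tokenize(item)), item) for item in self._items]
        # Keep only items that share at least one word, best matches first.
        relevant = sorted(
            (pair for pair in scored if pair[0] > 0),
            key=lambda pair: pair[0],
            reverse=True,
        )
        return [item for _, item in relevant[:limit]]


store = ToyMemoryStore()
store.save("The user likes pizza without capsicum.")
store.save("This ticket is about login errors in the mobile app.")
store.save("A draft for the onboarding topic was already generated.")

query = "Which pizza toppings should I avoid for this user?"
context = store.search(query, limit=1)

# Only the retrieved memory goes into the prompt, not the full history.
prompt = "Relevant memory:\n" + "\n".join(context) + "\n\nQuestion: " + query
```

The store can grow without bound, but the prompt only ever carries the top `limit` matches, which is the property that separates a memory system from a larger context window.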

Below, we’ll explore each memory type in CrewAI, learn how to enable and use them in your agents, and walk through code examples with step-by-step explanations.

By the end, you should understand how to structure memory-aware agents for production use, and how to choose the right type of memory for your needs.

Installation and setup

Throughout this crash course, we have been using CrewAI, an open-source framework for building autonomous AI agents. It lets you orchestrate role-playing agents, set goals, integrate tools, and plug in any of the popular LLMs.

Notably, CrewAI is a standalone framework with no dependency on LangChain or other agent frameworks.

Let's dive in!

To get started, install CrewAI as follows:
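A typical installation with pip looks like the following. Including the companion `crewai-tools` package is an assumption made here because earlier parts of the series rely on tools; it is not required for memory itself.

```bash
pip install crewai crewai-tools
```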

As in the RAG crash course, we will use Ollama to serve LLMs locally. That said, CrewAI integrates with several LLM providers, such as:

- OpenAI

- Gemini

- Groq

- Azure

- Fireworks AI

- Cerebras

- SambaNova

- and many more.

To set up OpenAI, create a .env file in the current directory and specify your OpenAI API key as follows:
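A minimal `.env` file, assuming the conventional `OPENAI_API_KEY` variable name that OpenAI clients read from the environment. The value shown is a placeholder, not a real key.

```
OPENAI_API_KEY="your-openai-api-key"
```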

Memory demo

Now that we understand what memory is and why it matters, let's start with a simple demo to build a practical foundation.