Prompting vs. RAG vs. Fine-tuning

Which one is best for you?

Which one is best for you?

TODAY'S ISSUE

Vanilla RAGs work as long as your external docs. look like the image on the left, but real-world documents are like the image on the right:

They have images, text, tables, flowcharts, and whatnot!

No vanilla RAG system can handle this complexity.

EyeLevel's GroundX is solving this.

They are developing systems that can intuitively chunk relevant content and understand what’s inside each chunk, whether it's text, images, or diagrams as shown below:

As depicted above, the system takes an unstructured (text, tables, images, flow charts) input and parses it into a JSON format that LLMs can easily process to build RAGs over.

Try EyeLevel's GroundX to build real-world robust RAG systems:

Thanks to EyeLevel for sponsoring today's issue.

Continuing the discussion on RAGs from EyeLevel...

If you are building real-world LLM-based apps, it is unlikely you can start using the model right away without adjustments. To maintain high utility, you either need:

The following visual will help you decide which one is best for you:

Two important parameters guide this decision:

For instance, an LLM might find it challenging to summarize the transcripts of company meetings because speakers might be using some internal vocabulary in their discussions.

So here's the simple takeaway:

That's it!

If RAG is your solution, check out EyeLevel's GroundX for building robust RAG systems on complex real-world documents.

👉 Over to you: How do you decide between prompting, RAG, and fine-tuning?

Thanks for reading!

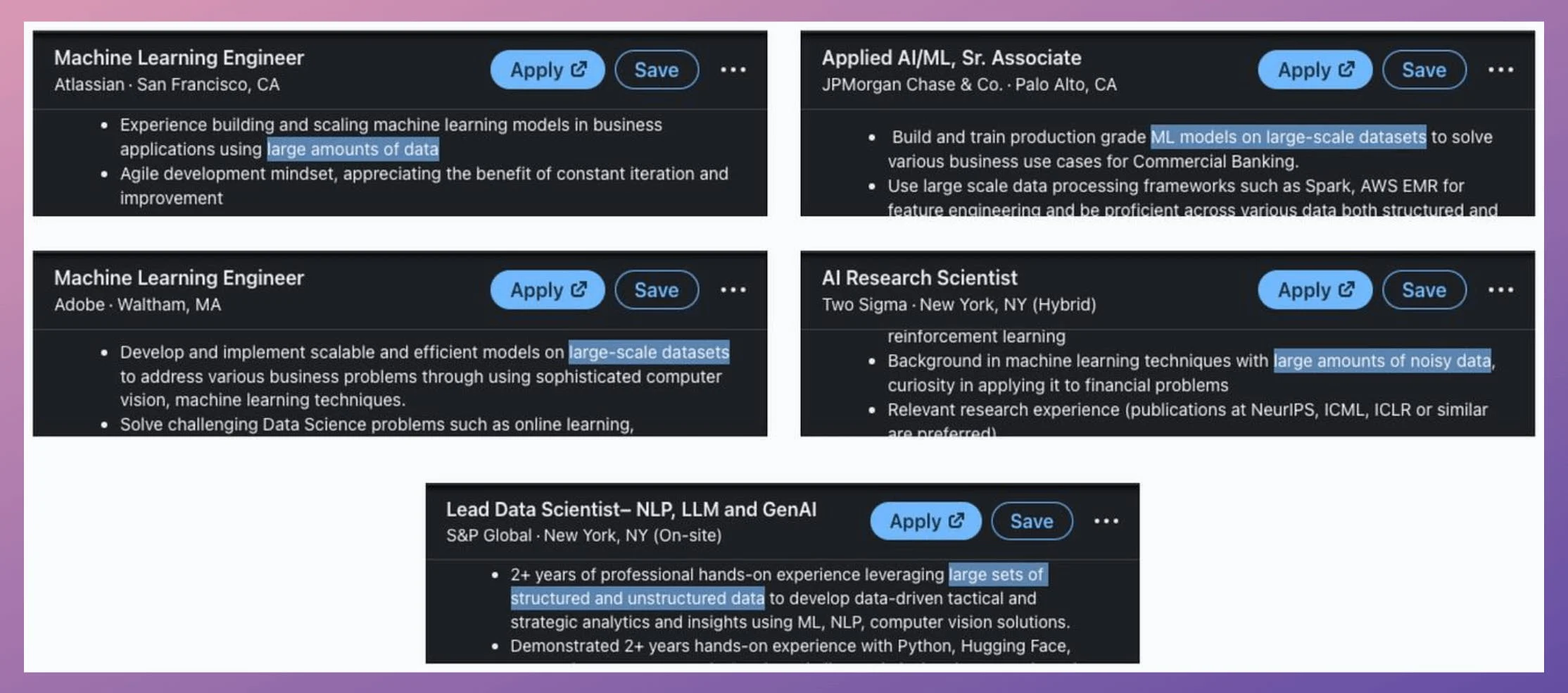

If you look at job descriptions for Applied ML or ML engineer roles on LinkedIn, most of them demand skills like the ability to train models on large datasets:

Of course, this is not something new or emerging.

But the reason they explicitly mention “large datasets” is quite simple to understand.

Businesses have more data than ever before.

Traditional single-node model training just doesn’t work because one cannot wait months to train a model.

Distributed (or multi-GPU) training is one of the most essential ways to address this.

Here, we covered the core technicalities behind multi-GPU training, how it works under the hood, and implementation details.

We also look at the key considerations for multi-GPU (or distributed) training, which, if not addressed appropriately, may lead to suboptimal performance or slow training.

The list could go on since almost every major tech company I know employs graph ML in some capacity.

Becoming proficient in graph ML now seems to be far more critical than traditional deep learning to differentiate your profile and aim for these positions.

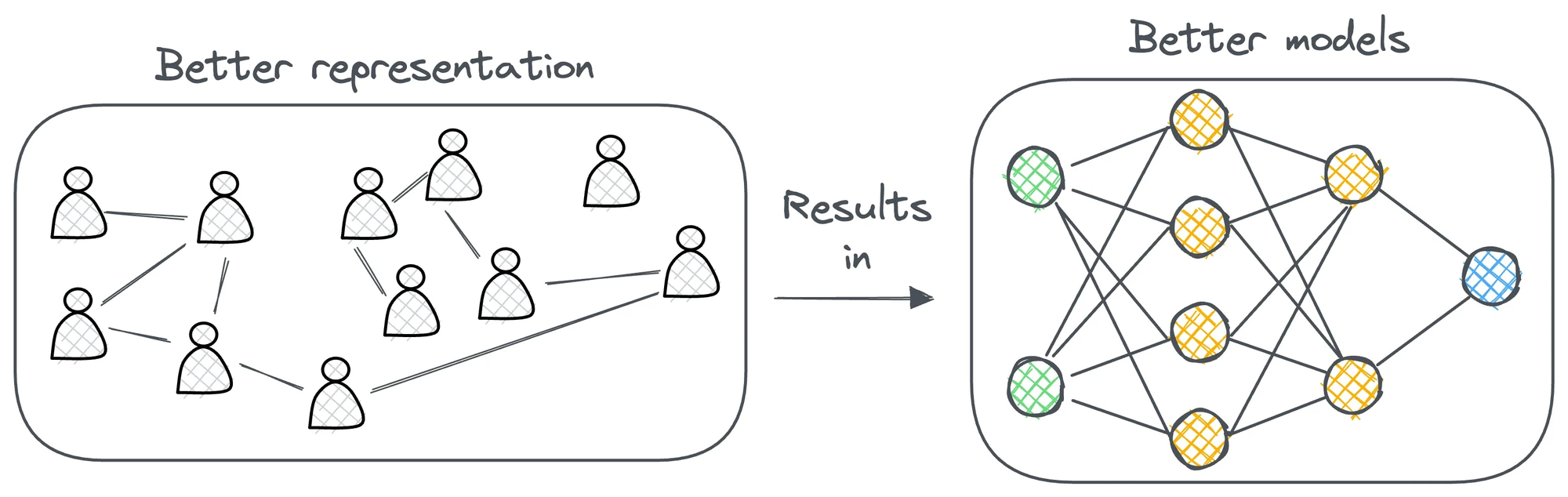

A significant proportion of our real-world data often exists (or can be represented) as graphs:

The field of graph neural networks (GNNs) intends to fill this gap by extending deep learning techniques to graph data.

Learn sophisticated graph architectures and how to train them on graph data in this crash course →