10 Practical Steps to Improve Agentic Systems (Part A)

AI Agents Crash Course—Part 13 (with implementation).

Introduction

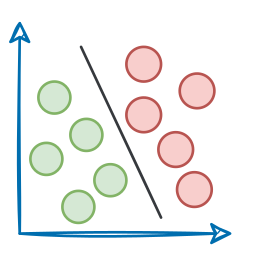

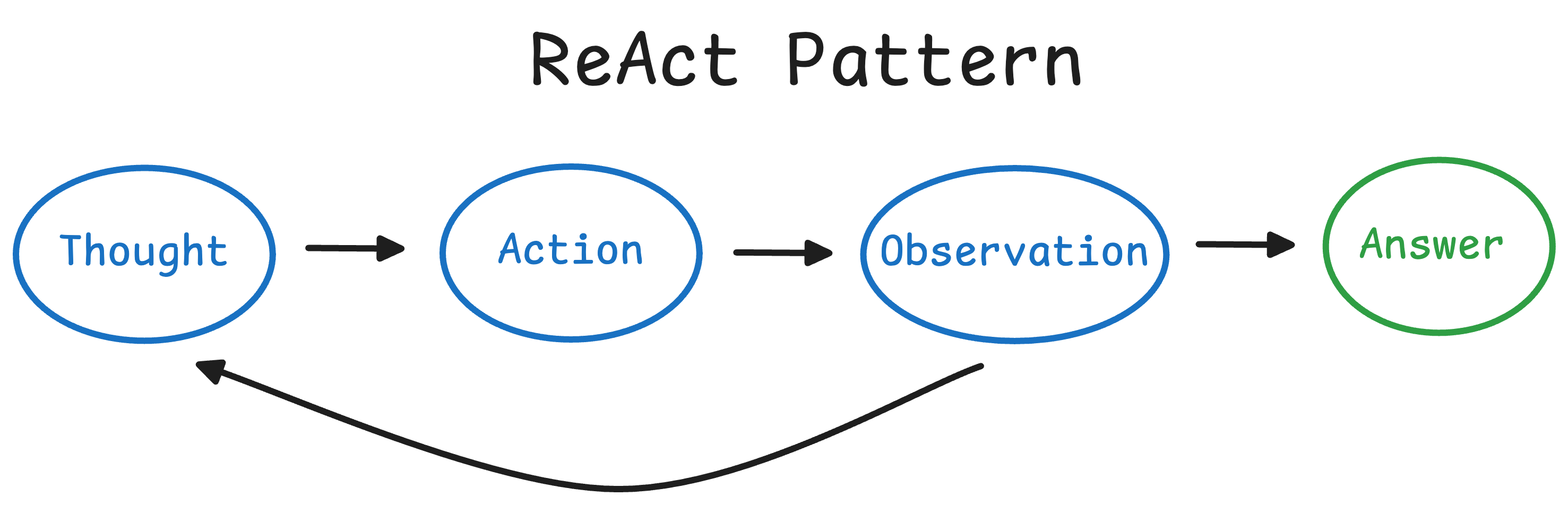

In the previous parts of this crash course, we explored the foundations of agentic systems, learned how to structure custom tools, experimented with reasoning strategies like ReAct and Planning, and even built our own autonomous agents from scratch using nothing but Python and a local or hosted LLM.

We’ve seen how an agent can reason step-by-step (ReAct), how it can pause and plan before execution (Planning), how to introduce memory, integrate knowledge sources, enforce guardrails, inject human oversight, and handle structured outputs using Pydantic.

Now, it’s time to bring everything together.

In the final parts of this AI Agents crash course series, we’re going to incrementally build a complete, end-to-end agentic system for content writing—the kind you’d deploy in a real project.

Instead of jumping directly into a complex system, we’ll build up gradually:

- Starting from a basic single-agent writer...

- Enhancing it with web search capabilities...

- Expanding into multi-agent collaboration...

- And layering on precision, structure, control, and feedback loops—everything you’ve learned so far in this crash course.

And of course, everything will be supported with proper implementations and explanations.

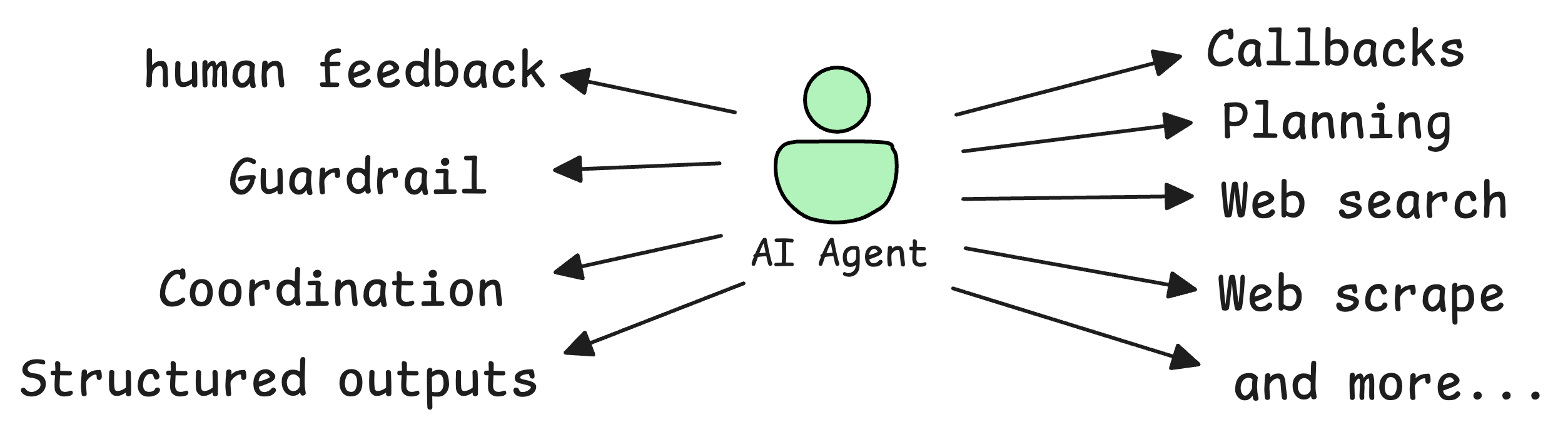

By the end, you’ll have a high-functioning content writing crew of agents that:

- Plans its tasks intelligently.

- Gathers external information in real-time.

- Coordinates between roles like writer, editor, and researcher.

- Structures its outputs with typed models.

- Invokes guardrails to catch errors.

- Requests human feedback when uncertain.

- Can trigger automated actions through callbacks.

- And much more.

This article is about synthesis, where everything you’ve studied over 10+ parts will turn into a real-world implementation and improve with each layer of agentic intelligence.

Let’s begin building our end-to-end agentic content writing system!

For simplicity, we have split the ten steps across two articles. You will find the first five improvement steps here, and the remaining five in the next article.

A quick recap

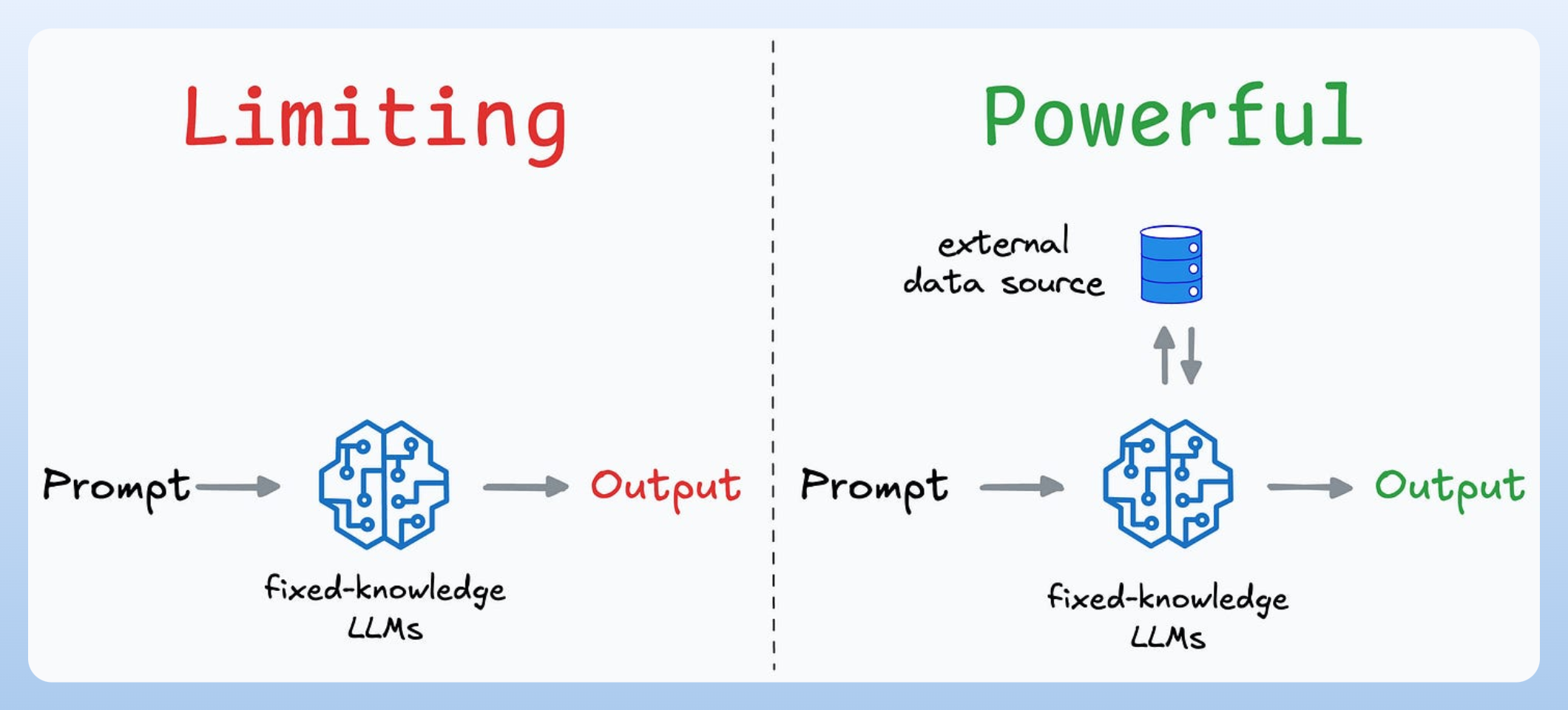

While AI agents are powerful, not every problem requires an agentic approach. Many tasks can be handled effectively with regular prompting or retrieval-augmented generation (RAG) solutions.

However, for problems where autonomy, adaptability, and decision-making are crucial, AI agents provide a structured way to build intelligent, goal-driven systems.

Here are three major motivations for building agentic systems:

1) Beyond RAG

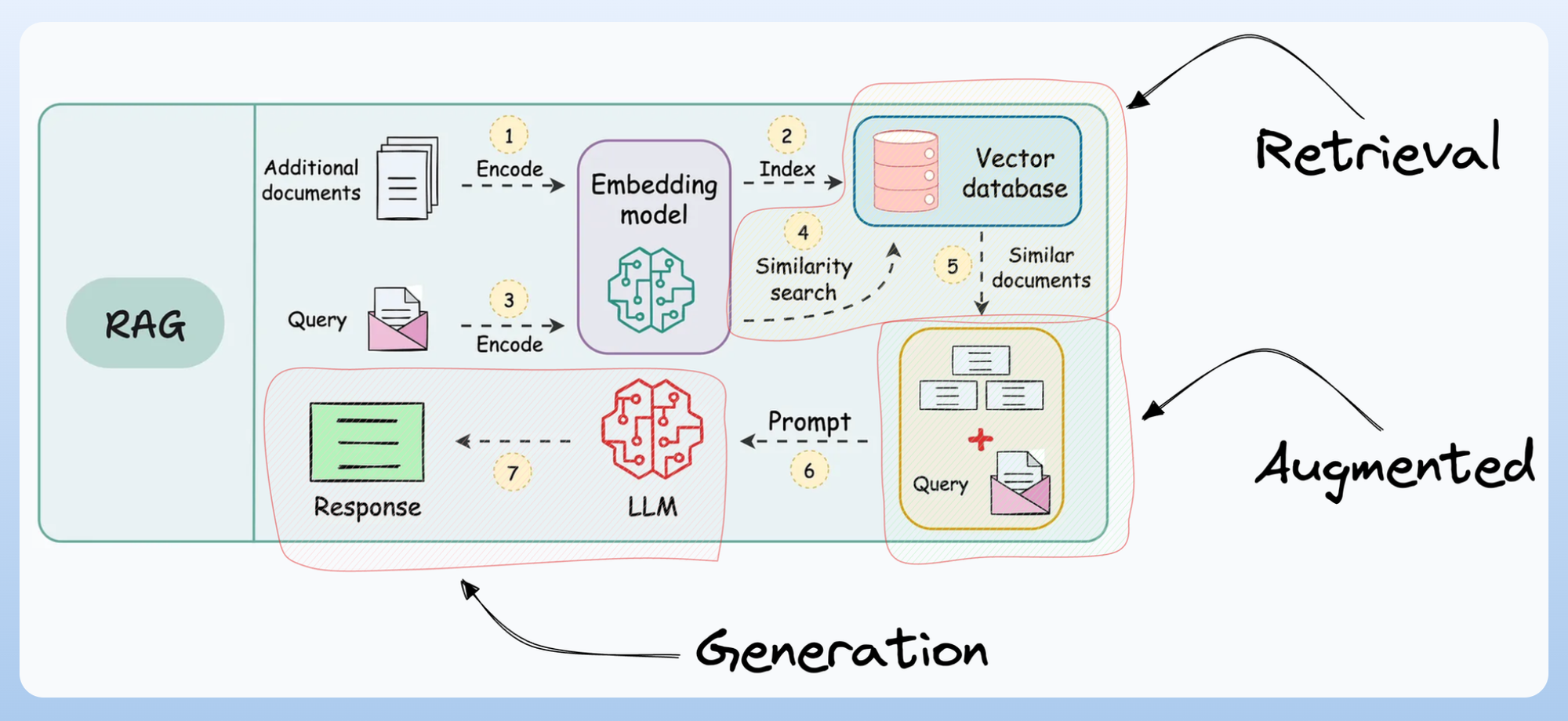

RAG was a major leap forward in how AI systems interact with external knowledge. It allowed models to retrieve relevant context from external sources rather than relying solely on static training data.

However, most RAG implementations follow predefined steps—a programmer defines:

- Where to search (vector databases, documents, APIs)

- How to retrieve data (retrieval logic)

- How to construct the final response

This is not a flaw per se, but it does restrict autonomy: the model doesn’t decide for itself what to retrieve or how to refine its queries.

Agents take RAG a step further by enabling:

- Intelligent data retrieval—Agents decide where to search based on context.

- Context-aware filtering—They refine retrieved results before presenting them.

- Actionable decision-making—They analyze and take action based on the retrieved information.

2) Beyond traditional software development

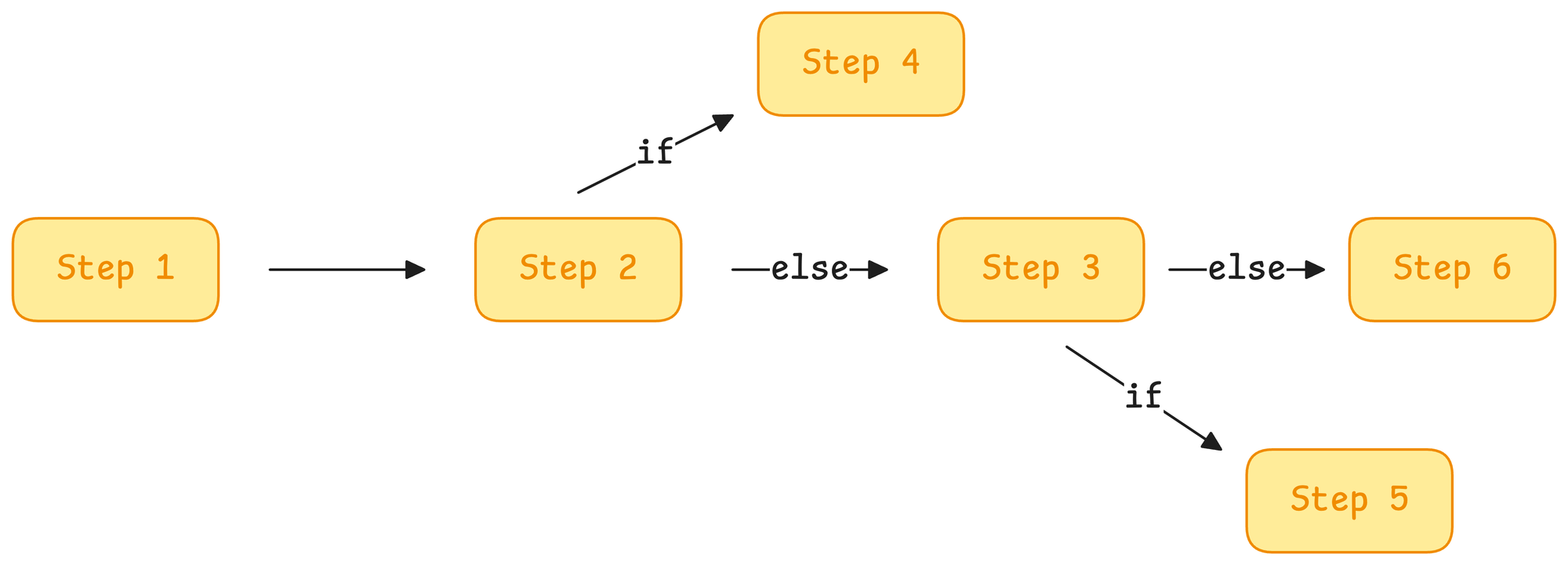

Traditional software is rule-based—developers hardcode every condition:

- IF A happens → Do X

- IF B happens → Do Y

- Else → Do Z

This works for well-defined processes but struggles with ambiguity and new, unseen scenarios.

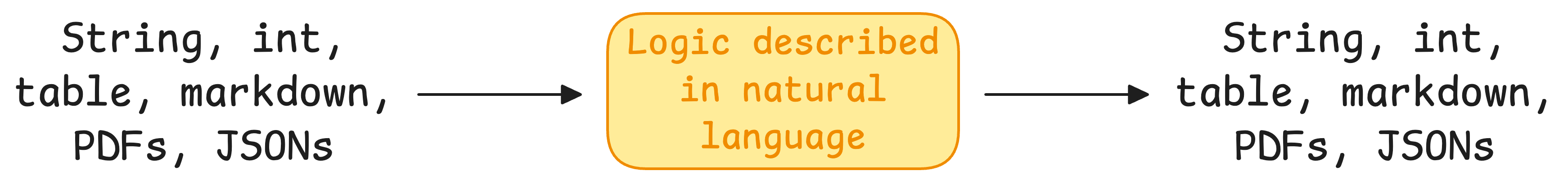

AI agents break this rigidity by:

- Handling uncertain and dynamic environments (e.g., customer support, real-time analysis).

- Learning from interactions rather than following fixed rules.

- Using multiple modalities (text, images, numbers, etc.) in reasoning.

Because LLMs can reason over context, developers no longer need to write hundreds of if-else conditions; agents adapt their workflows at run time.

3) Beyond human interaction

One of the biggest challenges in AI-driven workflows is the need for constant human supervision. Traditional AI models—like ChatGPT—are reactive, meaning:

- You provide a query → Model generates a response.

- You review the response → Realize it needs refining.

- You tweak the query → Run the model again.

- Repeat until the output is satisfactory.

This cycle requires constant human intervention.

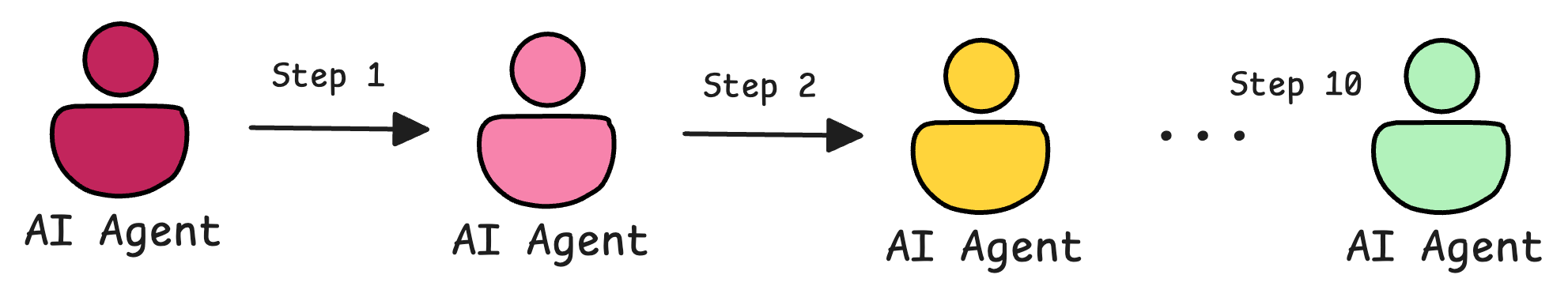

AI agents, however, can autonomously break down complex tasks into sub-tasks and execute them step by step without supervision.

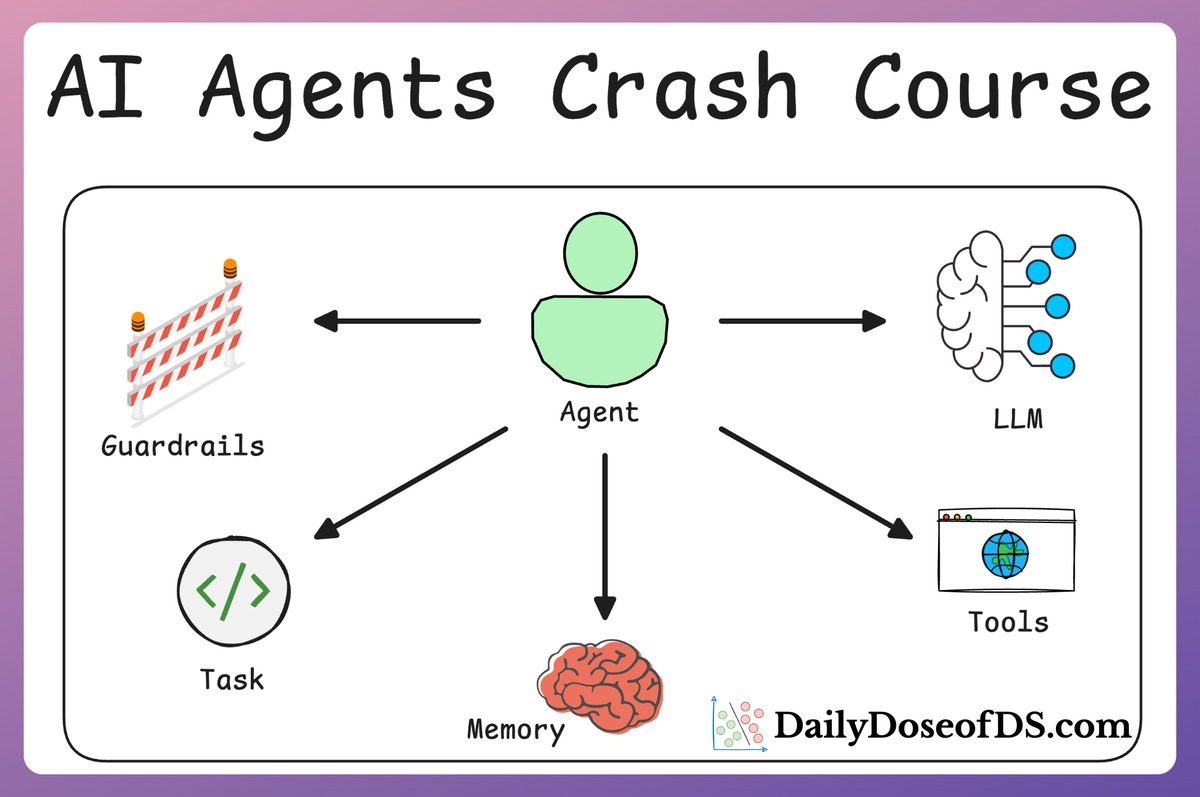

Building blocks of AI Agents

AI agents are designed to reason, plan, and take action autonomously. However, to be effective, they must be built with certain key principles in mind.

There are six essential building blocks that make AI agents more reliable, intelligent, and useful in real-world applications:

- Role-playing

- Focus

- Tools

- Cooperation

- Guardrails

- Memory

We won't cover them in detail again since we already did that in Part 1. If you haven't read it yet, we highly recommend doing so before reading further.

Let's dive into the implementation now.

Setup

Throughout this article, we will use CrewAI, an open-source framework for building autonomous AI agents. It handles role-playing orchestration, goal setting, and tool integration, and works with most popular LLMs.

Notably, CrewAI is a standalone framework with no dependency on LangChain or other agent frameworks.

Let's dive in!


To get started, install CrewAI with pip by running `pip install crewai` in your terminal.

Like the RAG crash course, we shall be using Ollama to serve LLMs locally. That said, CrewAI integrates with several LLM providers like:

- OpenAI

- Gemini

- Groq

- Azure

- Fireworks AI

- Cerebras

- SambaNova

- and many more.

To set up OpenAI, create a .env file in the current directory and specify your OpenAI API key as follows:

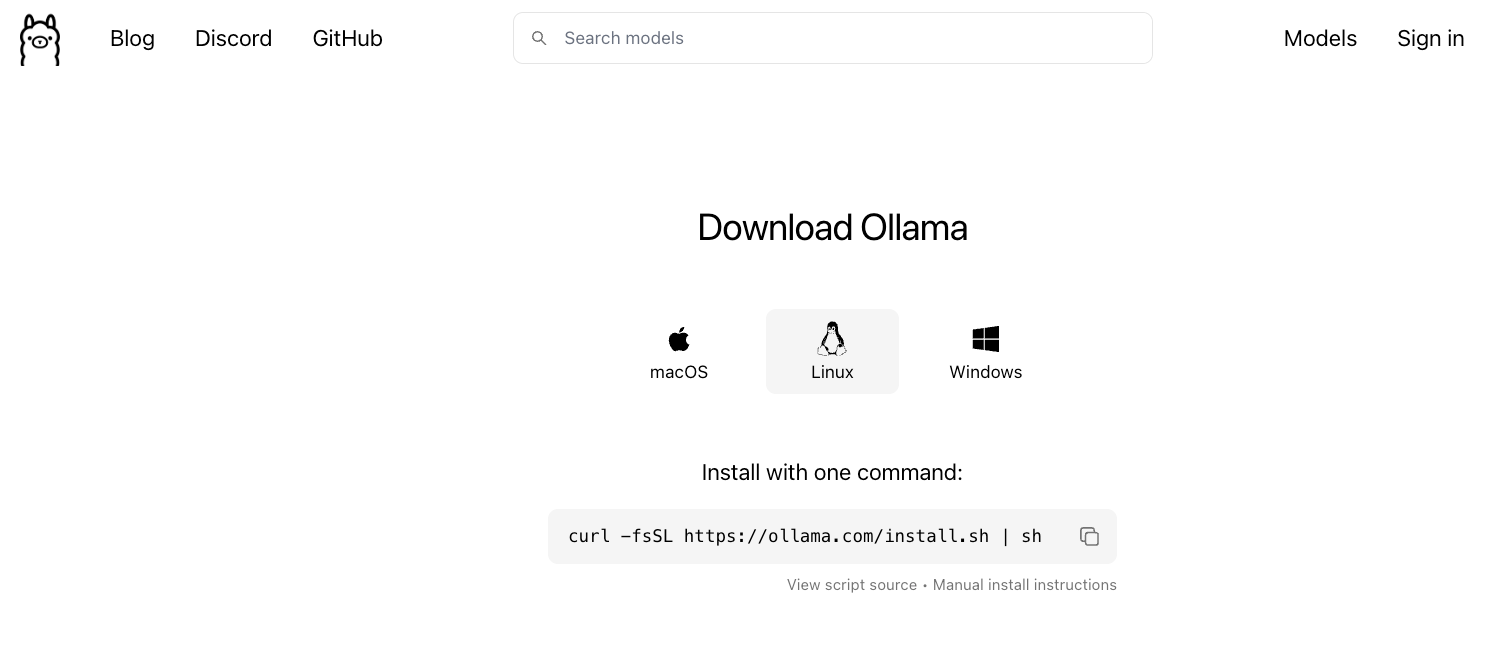

Also, here's a step-by-step guide on using Ollama:

- Go to Ollama.com, select your operating system, and follow the instructions.

- If you are using Linux, you can install it with the official install script: `curl -fsSL https://ollama.com/install.sh | sh`

- Ollama supports many models, all of which are listed in its model library.

Once you've found the model you're looking for, run `ollama run <model-name>` in your terminal, replacing `<model-name>` with the model's name from the library.

The above command will download the model locally, so give it some time to complete.

That said, for our demo, we will run the Llama 3.2 1B model instead (`ollama run llama3.2:1b`), since it is smaller and needs less memory.

Done!

Everything is set up now, and we can move on to building our agents.

Step 1) Single Agent content writer

First, let’s build a single-agent system: one AI “Content Writer” that crafts an article on a given topic.

This simple demo will give us a foundation for more complex setups later.

In CrewAI, we define an agent (who does the work), a task (what to do), and a crew (the team that runs everything). These correspond to the core CrewAI classes: Agent, Task, and Crew.

In your Python notebook, start with these imports:

Now we create our agent:

In CrewAI, an Agent is defined as an autonomous entity that:

- Has a Role: its function and expertise within the system, like "Content Writer".

- Has a Goal: its individual objective within the system, like "Craft a publication-ready article".

- Has a Backstory: context and personality that enrich interactions, like "You're a seasoned researcher...".

Next, we tell the agent what to do by creating a Task. This ties an instruction to the agent:

Here, the description is the prompt/instruction, and expected_output is a hint about the final result. We assign agent=writer so that the system knows that it's the content writer agent that will do this task.

Finally, we assemble a Crew. The Crew organizes agents and tasks and runs them in order. For our simple case with one agent and one task:

In the above code:

- agents: List of all agents (just our writer).

- tasks: List of tasks to complete (just write_task).

- process: We use Process.sequential to run tasks one by one.

- verbose=True: Show detailed execution logs (useful for debugging).

Now we can run the crew by providing a topic:

This replaces the {topic} placeholder we declared during Agent and Task definition with our specific TOPIC and executes the workflow.
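To make the substitution concrete, here is a plain-Python analogy using str.format. It only illustrates the effect of filling a placeholder; CrewAI performs its own interpolation internally, and the function name and example strings here are hypothetical.

```python
def fill_placeholders(template: str, inputs: dict[str, str]) -> str:
    """Replace each {key} in the template with the matching input value."""
    return template.format(**inputs)


# The same template yields a different instruction for each topic.
goal_template = "Craft a publication-ready article on {topic}"
print(fill_placeholders(goal_template, {"topic": "open-source LLMs"}))
```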

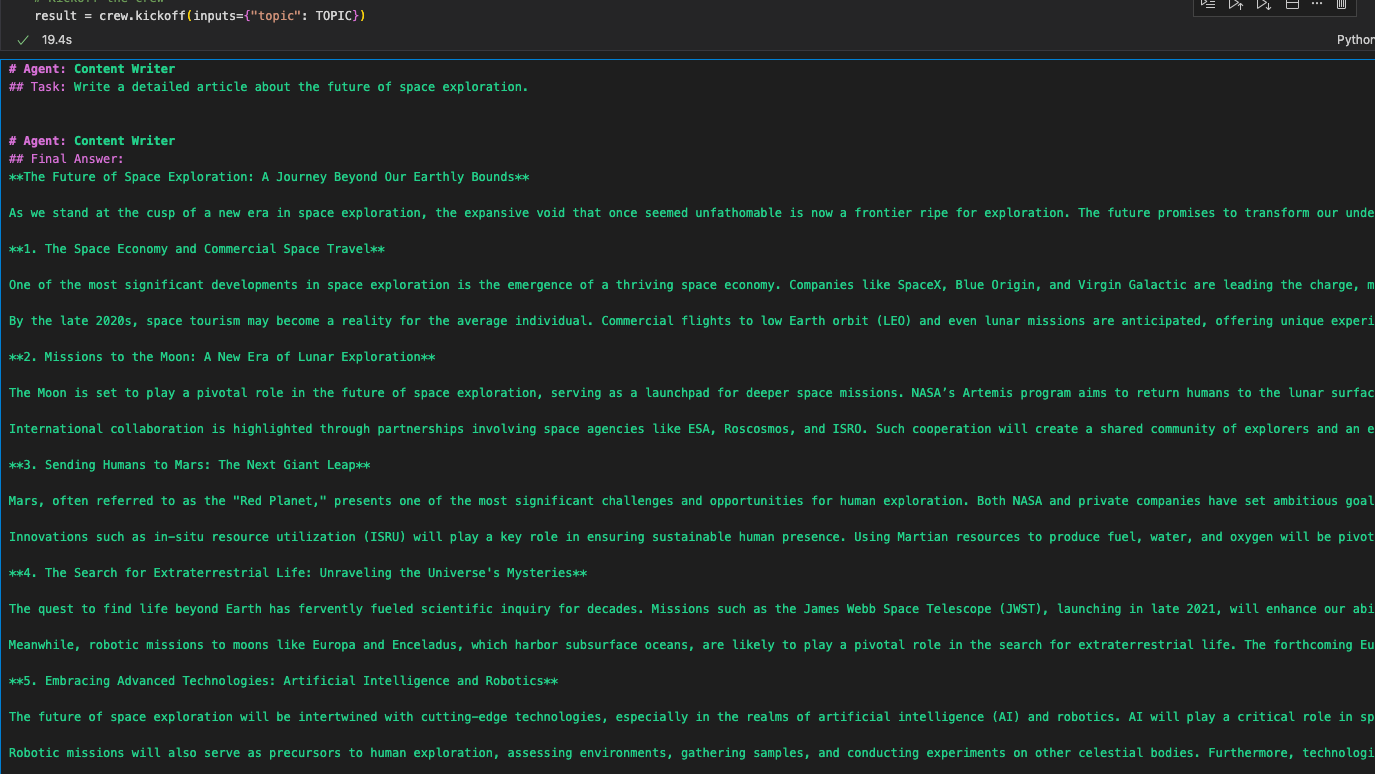

The agent will generate the article text on that topic. While executing the Crew, specifying verbose=True will show status messages as the crew runs, as shown below:

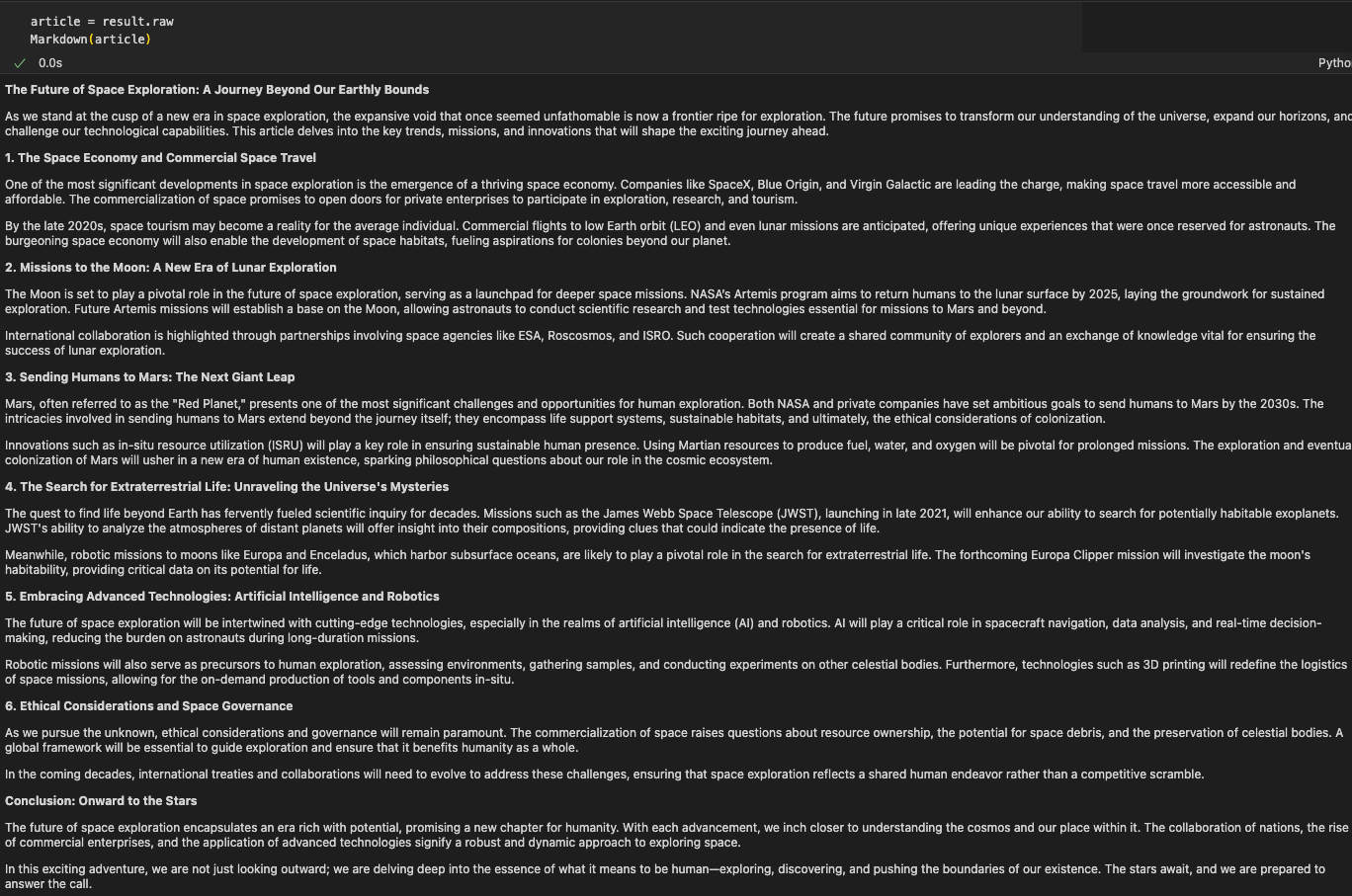

Finally, once the crew finishes, we can print the result object to check the final article.

As shown below, the result is...fine. It reads like a generic summary you’d expect from any decent LLM: grammatically correct, somewhat informative, but lacking depth, specificity, or novelty.

There’s no reference to real-time data. No citations. No clarity on whether the facts are accurate or outdated. There’s no structured formatting for further processing, and most importantly—there’s no critical thinking. It’s just a flat block of text that sounds like it belongs in a middle-school essay rather than a serious content workflow.

Also, a single agent handling all steps can miss details.

This is what happens when we rely on a single agent with no tools, no memory, no collaboration, and no system design.

Over the next few iterations, we’ll fix this layer by layer. For instance, we’ll give our agent tools to fetch information and split the work across multiple agents. This will make our AI writing pipeline much more powerful and reliable.
