Implementing Multi-agent Agentic Pattern From Scratch

AI Agents Crash Course—Part 12 (with implementation).

Introduction

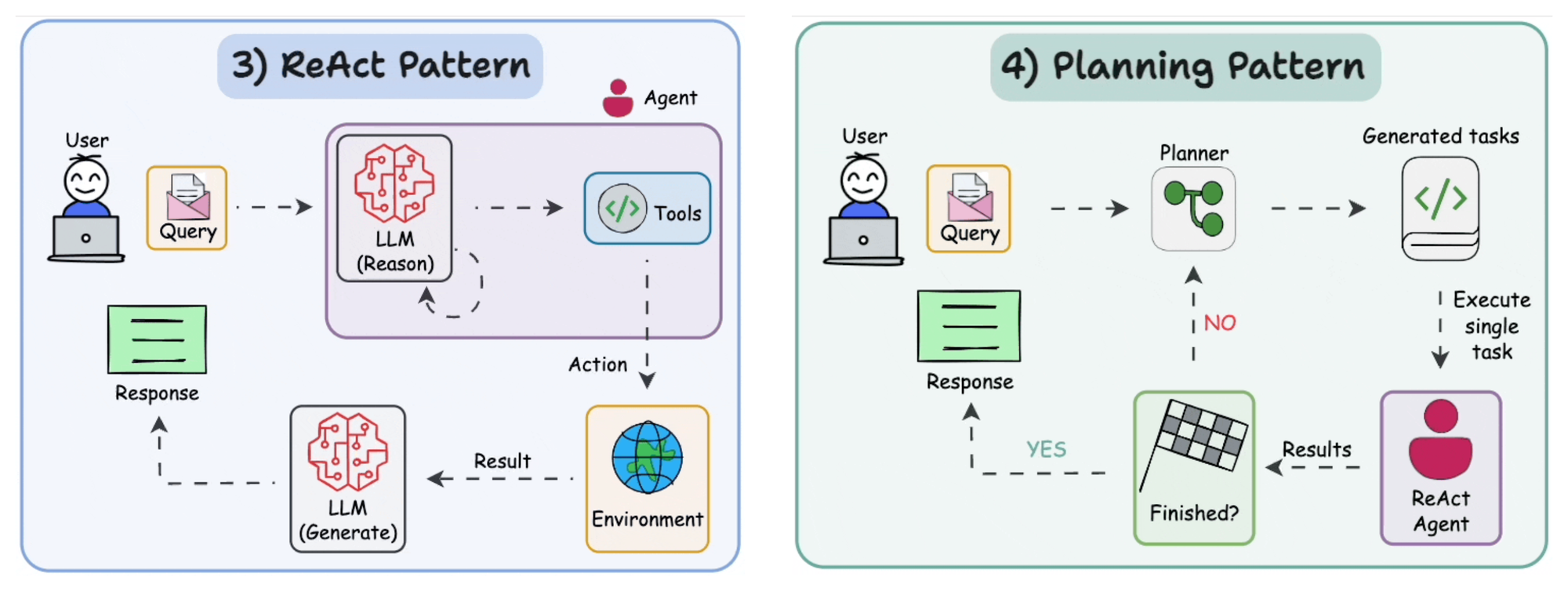

In this crash course series, we’ve already implemented two patterns that power modern AI agents: the ReAct (Reasoning + Acting) loop and the Planning pattern for structured task decomposition.

But so far, we've focused on individual agents, each reasoning and acting in isolation.

What if you want multiple agents working together?

What if each agent had a specific goal, role, and toolset, and they had to collaborate to solve a broader task?

That’s where the multi-agent pattern comes in.

In this article, we’ll build a multi-agent framework from scratch, without relying on high-level orchestration libraries like LangChain or CrewAI.

Instead, we’ll break the entire logic down into three simple and logical building blocks:

- An Agent class that thinks, acts, and maintains its own reasoning loop.

- A Tool class that agents can invoke to interact with the outside world.

- A Crew class that combines multiple agents and coordinates their workflows.

By doing so, we gain full control over the agent’s behavior, making it easier to optimize and troubleshoot.

We’ll use OpenAI as the LLM backend. If you prefer to run everything locally, you can instead use Ollama, an open-source tool for running LLMs on your own machine, with a model like Llama3 to power the agents.

Along the way, we’ll explain the multi-agent pattern, design an agent loop that interleaves collaboration and tool usage, and implement multiple tools that the agent can invoke.

By the end, you’ll walk away with a fully working multi-agent system built using plain Python and an LLM backend—one where agents reason independently, call tools, and pass results to each other in a controlled pipeline.

Let’s get started.

Why Multi-Agent?

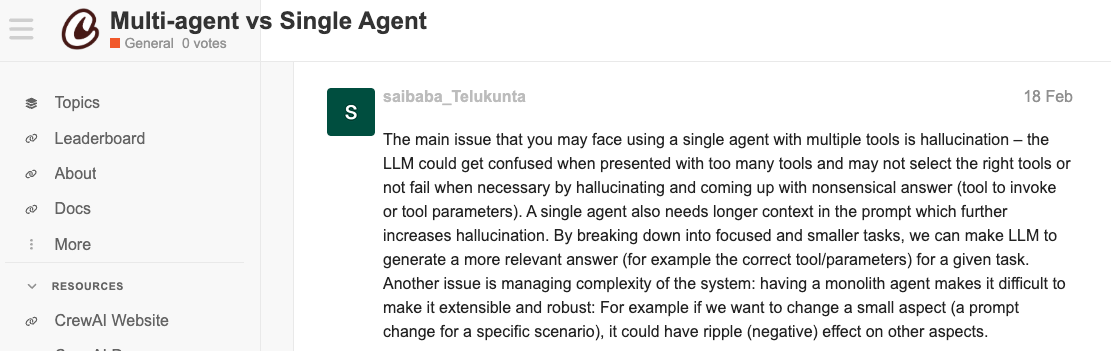

As we have seen before, a single large language model (LLM) agent is very capable, but there’s a limit to what one agent can handle alone, especially for complex, multi-step tasks.

A multi-agent approach (using a team of specialized agents) has emerged as a powerful way to tackle intricate workflows.

By breaking a big problem into parts and assigning each part to a dedicated agent, we gain several benefits over a monolithic agent.

For instance:

- Each agent focuses on a specific subtask or role, making the overall system easier to develop and debug.

- Changes to one agent’s prompt or logic won’t derail the entire system, since each agent is isolated in its own role (although one agent’s output may still be used as another agent’s input).

- This separation of concerns means we can update or replace one agent (say, improve the “research” agent) without unintended side effects on others, much like swapping out one specialist on a team without retraining everyone else.

- It also reduces complexity: a single agent trying to juggle all tools and instructions in one huge prompt can become confused or hallucinate instructions, whereas multiple focused agents stay on track.

- A multi-agent setup lets each agent become a master of one trade.

- For example, in a coding assistant workflow, you might have a “Planner” agent to break down tasks, a “Coder” agent to write code, and a “Reviewer” agent to check the code, each with prompts and tools tuned to their specialty.

- Because each agent is specialized, they can employ domain-specific reasoning or use dedicated tools most effectively.

- This distributed problem-solving means complex jobs (like planning a vacation) can be split up: one agent checks the weather, another finds hotels, another maps routes, and so on.

- Together, the specialists solve the whole problem more efficiently than one generalist agent.

- Having multiple agents inherently creates a clear structure of who does what, which makes the system’s reasoning process more transparent.

- Each agent typically “thinks out loud” (via chain-of-thought prompts) and produces an intermediate result that other agents or the developer can inspect.

- In fact, multi-agent workflows often allow or even encourage agents to cross-verify each other’s outputs, catching mistakes and providing justification for decisions.

- For instance, one agent can generate a plan and another agent can critique or refine it, producing a traceable dialogue.

- This yields an audit trail of reasoning, improving explainability since you can see the rationale from each specialist rather than a single opaque giant model answer.

- Recent AI systems have used this approach to provide detailed rationales; one real-world platform reported its multi-agent setup gave defensible, nuanced recommendations with clear explanations for each decision.

- Moving on, another benefit is that when something goes wrong in a multi-agent pipeline, it’s easier to pinpoint the issue.

- Because tasks are segmented, you can tell which agent (and which step) produced a bad output or got stuck (using the verbose output or other logging procedures).

- This is much harder with one mega-agent doing everything.

- Developers can examine the intermediate outputs (e.g. the search results from the Researcher agent, or the draft summary from the Writer agent) and identify where a flaw occurred.

- Also, you wouldn’t give one person too many tasks at once and expect perfect results. The same applies to agents.

- Dividing the work not only boosts quality, it lets you trace errors to the responsible agent quickly and fix that part of the process without overhauling the rest.

- Lastly, the multi-agent pattern mirrors how human teams operate, making AI workflows more natural to design.

- In organizations, people specialize in roles – you have analysts, planners, builders, reviewers, and they collaborate to accomplish something complex.

- Similarly, in a multi-agent AI system, each agent is like a team member with a clear job, and a coordinator ensures they work in concert.

- This mapping makes it conceptually easier to design AI solutions for complex problems by thinking in terms of “Which agents (roles) would my team need?”

- For example, an AI travel concierge service can be designed as a crew of agents: one for looking up attractions, one for checking logistics (flights, weather), one for optimizing the itinerary, etc., all working together on the user’s request.

- Such a division not only feels intuitive but has been shown to handle the complexity better than a single all-purpose agent.

In summary, using multiple agents introduces modularity, specialization, and clarity into AI reasoning.

It can make AI systems more robust and interpretable by having dedicated mini-brains tackling each part of a problem and then sharing their findings.

Recent research and industry experiments consistently find that multi-agent collaboration can solve more intricate tasks and provide better transparency than a lone AI agent, much like a well-coordinated team outperforms a single overworked individual.

Multi-agent internal details

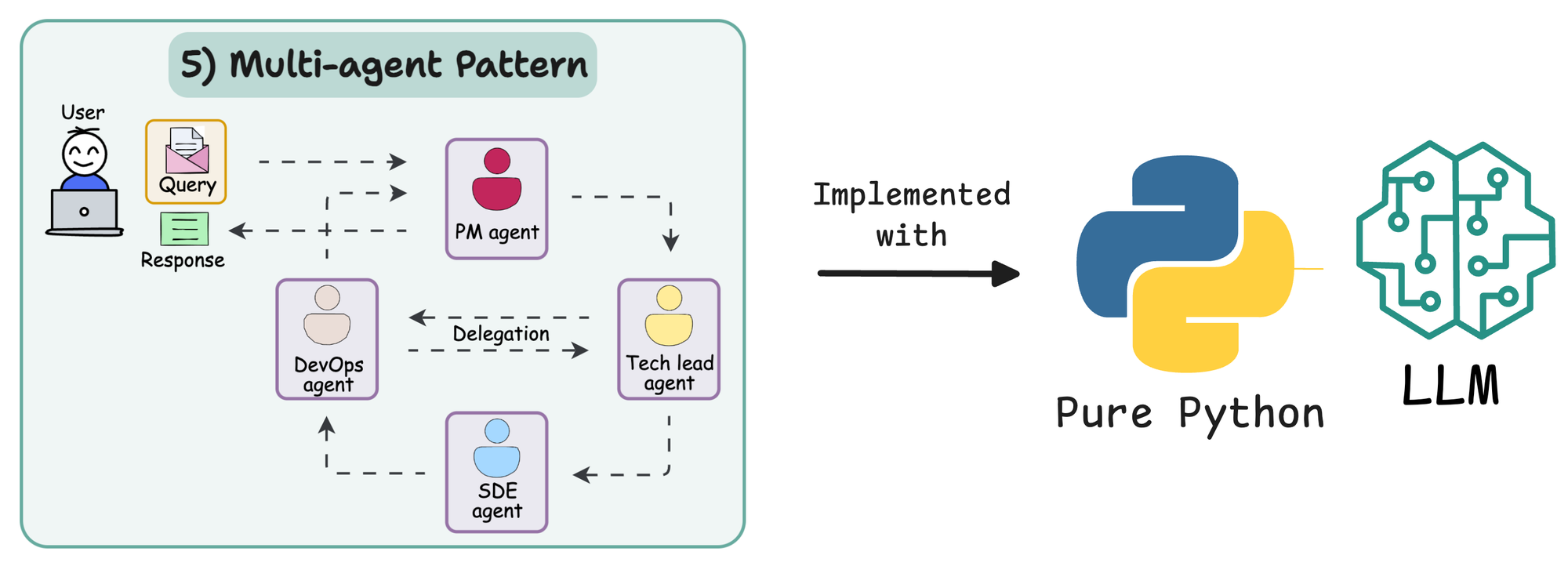

As discussed earlier, a multi-agent pattern structures an AI workflow as a team of agents that work together, each with a well-defined role.

Instead of one agent handling a task from start to finish, you have multiple agents in a pipeline, where each agent takes on one part of the task and then hands off the result to the next agent.

This design is often (not always) coordinated by an orchestrator that makes sure the agents run in the right sequence and share information.

The idea is analogous to an assembly line or a relay team: Agent A does step 1, then Agent B uses A’s output to do step 2, and so on, until the goal is achieved.

Each agent is autonomous in how it thinks/acts for its sub-problem, but they collaborate through the defined workflow to solve the bigger problem together.

Technically speaking, each agent in a multi-agent pattern still follows the reasoning+acting loop internally (often implemented via the ReAct paradigm, which we have already seen in the ReAct pattern issue).

That is, an agent will receive some input (or context), think about what to do (generate a "Thought"), then act by either producing an output or calling a Tool, observing the result, and so on.

The crucial difference is that an agent’s scope is limited to its assigned role.

It has a narrower focus, a smaller set of tools, and a specific goal in the chain. For example, an agent tasked with “database lookup” will primarily reason about how to query the database and act by executing that query, rather than also worrying about how to present the final answer to the user, which would be another agent’s job.

By constraining an agent’s responsibilities, we make its prompts simpler and more targeted, which often leads to more reliable behavior.
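To make the loop concrete, here is a minimal sketch of a role-scoped agent running a Thought → Action → Observation cycle. Everything in it is an illustrative assumption rather than the implementation we build later: `scripted_llm` is a stand-in that returns canned replies instead of calling a real model, `lookup_user` is a hypothetical database tool, and the JSON action format is just one possible convention.

```python
import json
from typing import Callable

def lookup_user(user_id: str) -> str:
    """Hypothetical tool: pretend to query a database by user id."""
    fake_db = {"42": "Ada Lovelace"}
    return fake_db.get(user_id, "not found")

def scripted_llm(history: list[str]) -> str:
    """Stand-in for a real LLM call (assumption): canned reply per turn."""
    if not any(line.startswith("Observation:") for line in history):
        return ('Thought: I need the user record.\n'
                'Action: {"tool": "lookup_user", "input": "42"}')
    last_observation = history[-1].removeprefix("Observation: ")
    return f"Thought: I have what I need.\nFinal Answer: {last_observation}"

def run_agent(task: str,
              tools: dict[str, Callable[[str], str]],
              llm: Callable[[list[str]], str],
              max_steps: int = 5) -> str:
    """Run the think/act/observe loop until the model gives a final answer."""
    history = [f"Task: {task}"]
    for _ in range(max_steps):
        reply = llm(history)
        history.append(reply)
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        # Parse the requested action; the agent can only use its own toolbox.
        action = json.loads(reply.split("Action:", 1)[1].strip())
        tool = tools.get(action["tool"])
        if tool is None:
            observation = f"unknown tool {action['tool']!r}"
        else:
            observation = tool(action["input"])
        history.append(f"Observation: {observation}")
    raise RuntimeError("agent did not finish within max_steps")

answer = run_agent("Find the name of user 42",
                   {"lookup_user": lookup_user}, scripted_llm)
print(answer)  # Ada Lovelace
```

Note that the agent only sees the tools passed to it. Restricting that dictionary is what keeps its scope limited to its role.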

System overview

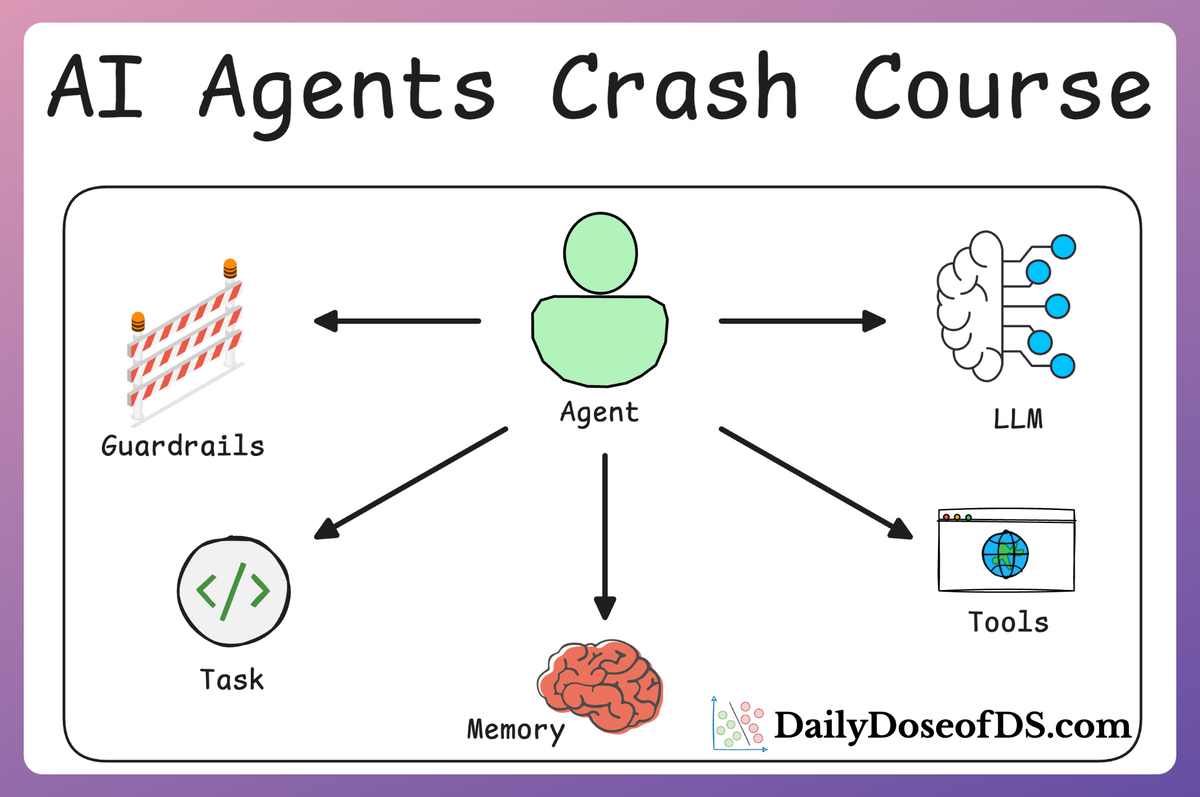

To clarify the pieces of this architecture, let’s break down the main components involved in a multi-agent pipeline:

- Agent:

- In this context, an Agent is an autonomous AI unit (usually an LLM with a prompt) that can perceive inputs, reason (via chain-of-thought), and perform actions to complete a subtask.

- Each agent is typically configured with a specific role and has access to only the tools or information it needs for that role.

- For instance, you might have a ResearchAgent that can use a web search tool, and a separate SummaryAgent that can use a text generation tool.

- The agent will loop through thinking (“Thought…”) and acting (“Action…”) until it produces an outcome for its part of the job.

- Because it’s focused, it can follow a strict prompt format or protocol (often defined by the framework) that keeps its behavior safe and on-task.

- Tool:

- A Tool is any external capability that an agent can invoke to help it act on the world or fetch information.

- Tools could be things like a web search API, a calculator, a database query interface, an email-sending function, etc.

- In the multi-agent pattern, tools are the specific actions available to agents. Each agent usually has a limited toolbox relevant to its role.

- For example, the ResearchAgent might have a “Search the web” tool, while the SummaryAgent might have a “Lookup knowledge base” tool.

- The ReAct loop of the agent involves choosing a tool and providing inputs to it (e.g. a search query), then reading the tool’s output (observation) to inform its next thought.

- By structuring tool use this way, we ensure the agent’s reasoning and actions are transparent and can be logged (we see what query it searched for, what result came back, etc.).

- Notably, frameworks like LangChain or CrewAI define tool interfaces and force agents to adhere to a format (e.g. always output a JSON for tool inputs) to keep this interaction reliable.

- Crew:

- The Crew is essentially the orchestrator, a component (or class, in code) that sets up the multi-agent workflow and manages the execution flow.

- If each agent is a team member, the Crew is the team manager that knows the overall plan.

- In a sequential pipeline, the Crew will pass the initial input to the first agent, take that agent’s output and feed it to the next agent, and so forth, until the final agent produces the answer.

- This Crew defines the order of agents (and sometimes the specific task each agent performs on the data).

- For example, using the CrewAI library, one can instantiate a Crew(agents=[Agent1, Agent2, ...], tasks=[Task1, Task2, ...], process=Process.sequential) and then call crew.kickoff() to run through the agents in sequence.

- The Crew handles coordination details like ensuring each agent gets the right input, and it can collect or consolidate outputs.

- In more complex setups, the coordinator might also implement logic for looping (repeating agents or steps until a condition is met) or branching (choosing which agent to invoke based on some criteria), but the basic idea is that the Crew oversees the agents so they work together smoothly.

- In some architectures, the coordinator is itself an agent (often called a “manager” or “supervisor” agent) that has the job of delegating tasks to other specialist agents.

- Whether it’s an explicit class or a manager agent, this coordinating role is what makes the group of agents function as a unified system rather than a bunch of isolated bots.

In a multi-agent pipeline, information typically flows from one agent to the next.

For instance, consider a simple pipeline where Agent A gathers data, Agent B analyzes it, and Agent C writes a report.

The Crew would give Agent A the initial query; Agent A uses its tools and returns, say, some raw data; that data is passed to Agent B, which produces an analysis; then Agent C gets the analysis and produces the final report.

Each agent only deals with the input relevant to its task and doesn’t worry about the overall mission beyond its part.

This sequential hand-off is what we mean by the multi-agent pattern: it’s a design where multiple narrow AI agents are chained together, each one’s output feeding into the next’s input until the goal is achieved.
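The hand-off described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: each "agent" is reduced to a plain function from string to string (the names `gather`, `analyze`, and `write_report` are hypothetical), and `run_pipeline` plays the part of the orchestrator. A real agent would run its own reasoning loop inside each step.

```python
from typing import Callable

AgentFn = Callable[[str], str]

# Hypothetical stand-ins for Agent A, B, and C.
def gather(query: str) -> str:
    return f"raw data for '{query}'"

def analyze(data: str) -> str:
    return f"analysis of [{data}]"

def write_report(analysis: str) -> str:
    return f"REPORT: {analysis}"

def run_pipeline(agents: list[tuple[str, AgentFn]],
                 initial_input: str) -> tuple[str, list[tuple[str, str]]]:
    """Feed each agent's output into the next and keep a trace of every step."""
    trace: list[tuple[str, str]] = []
    current = initial_input
    for name, agent in agents:
        current = agent(current)
        trace.append((name, current))  # intermediate outputs stay inspectable
    return current, trace

final, trace = run_pipeline(
    [("Agent A", gather), ("Agent B", analyze), ("Agent C", write_report)],
    "Q3 sales",
)
print(final)  # REPORT: analysis of [raw data for 'Q3 sales']
```

Keeping the `trace` list is what lets you see which agent produced which intermediate result when something goes wrong.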

Implementing a multi-agent system from scratch

So far, we’ve seen why multi-agent setups offer better modularity, clarity, and interpretability compared to single-agent systems.

Now, let’s get our hands dirty and implement a multi-agent system from scratch, without relying on any external orchestration libraries like LangChain or CrewAI.

As discussed earlier, our system will be built using three core abstractions:

- Tool class:

- The Tool class wraps around real functions (like search_news, summarize_text, or calculate_stats) and exposes them in a way that agents can discover, validate inputs against, and invoke dynamically. Each tool includes:

- A parsed function signature,

- Automatic input validation and type coercion,

- A standardized calling interface.

- This allows agents to reason about what tools are available and how to use them, without hardcoding function calls.

- Agent class:

- The Agent class represents an individual AI agent that can:

- Think through a task (via the ReAct loop),

- Invoke tools to act on the environment,

- Pass outputs downstream to dependent agents.

- Each agent has a distinct backstory, task, output expectation, and toolset, making it highly modular and role-specific.

- Agents can also define dependencies: e.g., Agent A must run before Agent B. Internally, each agent is powered by a ReactAgent wrapper that handles its reasoning loop using a language model.

- Crew class:

- The Crew class is the orchestrator that glues everything together.

- It manages:

- Agent registration,

- Dependency resolution using topological sorting,

- Ordered execution of agents based on dependencies.
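To illustrate what dependency resolution via topological sorting means for the Crew, here is a small sketch using Python's standard-library `graphlib` module (Python 3.9+). The agent names and the `execution_order` helper are hypothetical, and using `graphlib` is our assumption for brevity. A from-scratch Crew could implement the sort by hand instead.

```python
from graphlib import CycleError, TopologicalSorter

# Map each (hypothetical) agent name to the agents that must run before it.
dependencies = {
    "researcher": set(),
    "analyst": {"researcher"},
    "writer": {"analyst"},
    "reviewer": {"writer", "analyst"},
}

def execution_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every agent runs after its dependencies."""
    return list(TopologicalSorter(deps).static_order())

order = execution_order(dependencies)
print(order)  # ['researcher', 'analyst', 'writer', 'reviewer']

# A circular dependency cannot be ordered, so the sorter raises an error.
try:
    execution_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("cycle detected: agents a and b depend on each other")
```

Detecting cycles up front matters here: if two agents wait on each other's output, the pipeline can never start, so it is better to fail before any LLM call is made.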

In the next sections, we’ll walk through each class in detail, understand how they work, and see how they come together to form a working multi-agent system.

Let’s start with the Tool class.
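Before the full walkthrough, here is a rough sketch of the three ideas listed for the Tool class: a parsed function signature, input validation with type coercion, and a single calling interface. It is a simplified illustration, not the final class. The method names `signature` and `run` are our assumptions, and the body of `calculate_stats` is invented for the example.

```python
import inspect
from typing import Any, Callable

class Tool:
    """Hypothetical minimal tool wrapper, for illustration only."""

    def __init__(self, fn: Callable[..., Any]):
        self.fn = fn
        self.name = fn.__name__
        self.description = inspect.getdoc(fn) or ""
        # Parse the signature once: parameter name -> annotated type.
        self.params = {
            name: p.annotation
            for name, p in inspect.signature(fn).parameters.items()
        }

    def signature(self) -> dict:
        """Describe the tool so it can be listed in an agent's prompt."""
        return {
            "name": self.name,
            "description": self.description,
            "parameters": {n: getattr(t, "__name__", str(t))
                           for n, t in self.params.items()},
        }

    def run(self, **kwargs: Any) -> Any:
        """Validate argument names, coerce simple types, then call the function."""
        unknown = set(kwargs) - set(self.params)
        if unknown:
            raise TypeError(f"{self.name}: unexpected arguments {sorted(unknown)}")
        coerced = {}
        for name, value in kwargs.items():
            target = self.params[name]
            # LLMs often emit numbers as strings, so coerce int/float/str only.
            if target in (int, float, str) and not isinstance(value, target):
                value = target(value)
            coerced[name] = value
        return self.fn(**coerced)

def calculate_stats(total: float, count: int) -> float:
    """Return the mean given a total and a count."""
    return total / count

stats_tool = Tool(calculate_stats)
result = stats_tool.run(total="10", count="4")
print(result)  # 2.5
```

Booleans are deliberately left out of the coercion step in this sketch, because `bool("False")` evaluates to `True` in Python and would silently corrupt the input.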

You can download the code below: