Reproducibility and Versioning in ML Systems: Weights and Biases for Reproducible ML

MLOps Part 4: A practical walkthrough of W&B-powered reproducibility.

Recap

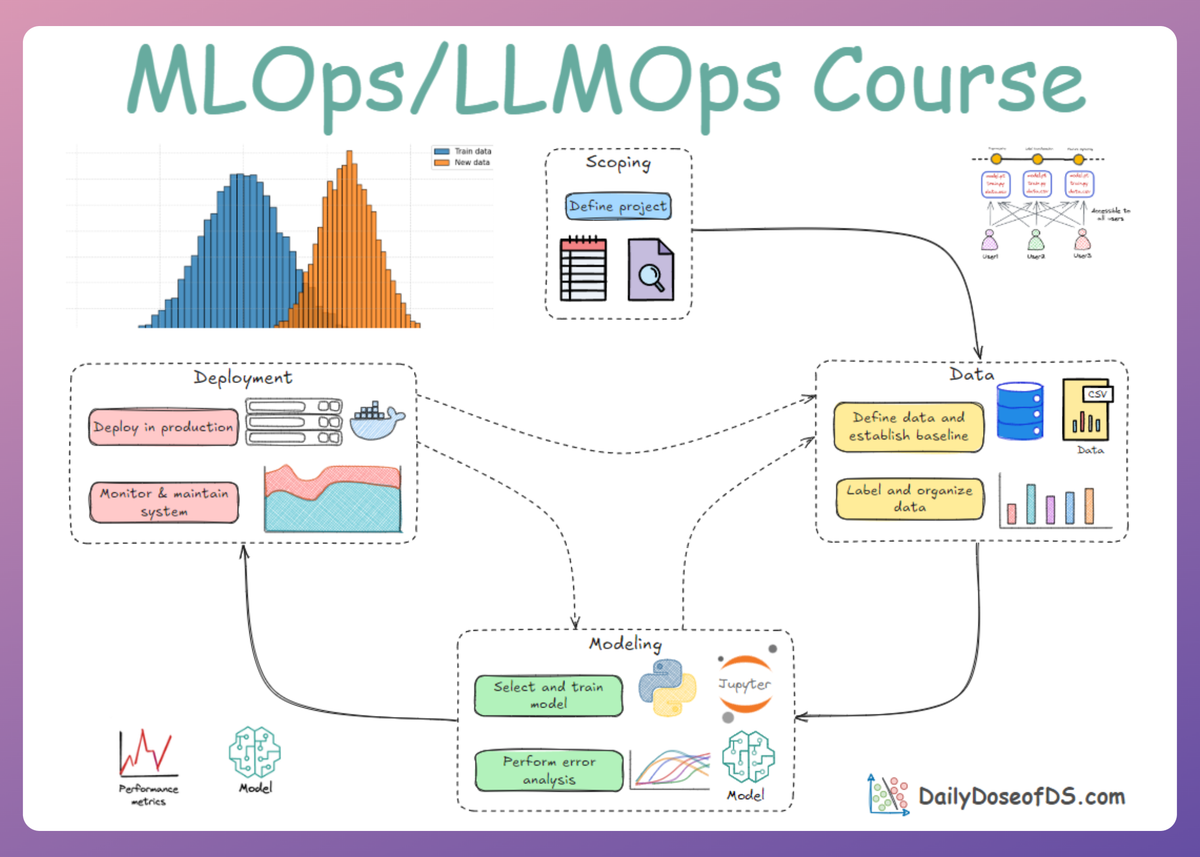

In Part 3 of this MLOps and LLMOps crash course, we deepened our understanding of ML systems by exploring the importance of reproducibility and versioning.

We began by exploring what reproducibility is, how versioning plays a key role in achieving it, and why these concepts matter in the first place.

We examined the importance of reproducibility in areas such as error tracking, collaboration, regulatory compliance, and production environments.

We then discussed some of the major challenges that can hinder reproducibility. We saw how ML being "part code, part data" adds the extra layers of complexity.

After that, we reviewed best practices to ensure reproducibility and versioning in ML projects and systems, including code and data versioning, maintaining process determinism, experiment tracking, and environment management.

Finally, we walked through hands-on simulations covering seed fixation, data versioning with DVC, and experiment tracking with MLflow.

If you haven’t explored Part 3 yet, we strongly recommend going through it first since it lays the conceptual scaffolding and implementational understanding that’ll help you better understand what we’re about to dive into here.

You can read it below:

In this chapter, we’ll continue with reproducibility and versioning in ML systems, diving deeper into practical implementations.

We'll specifically see how to achieve reproducibility and version control in ML projects using Weights & Biases (W&B) as a primary tool and compare W&B’s approach to that of DVC and MLflow.

Keeping W&B central to the implementations, we'll cover:

- Experiment tracking.

- Dataset and model versioning.

- Reproducible pipelines.

- Model registry.

As always, every concept will be backed by concrete examples, walkthroughs, and practical tips to help you master both the idea and the implementation.

Let’s begin!

Introduction

Before we dive into the details of Weights & Biases (W&B), let's quickly refresh the core ideas related to reproducibility and versioning.

As discussed multiple times throughout this crash course, machine , projects don’t end with building a model that performs well on a single training run.

In machine learning systems, as we know, we have not just code but also data, models, hyperparameters, training configurations, and environment dependencies.

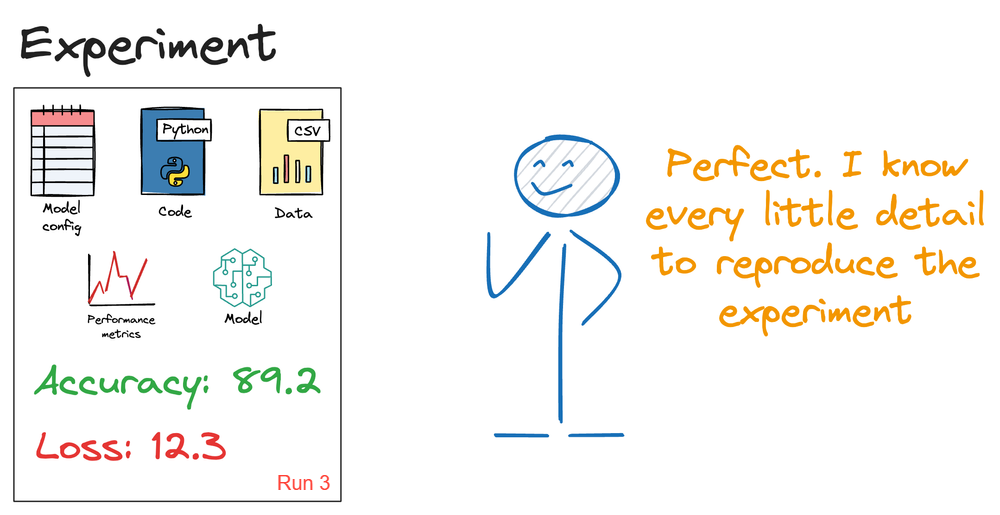

Ensuring reproducibility means that any result you obtain can be consistently reproduced later, given the same inputs (code, data, configuration, etc.).

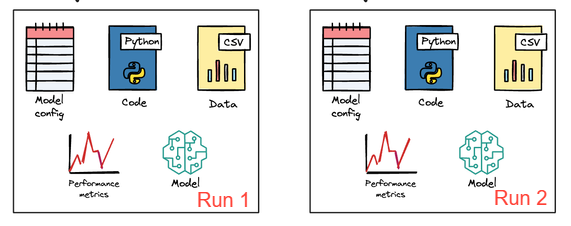

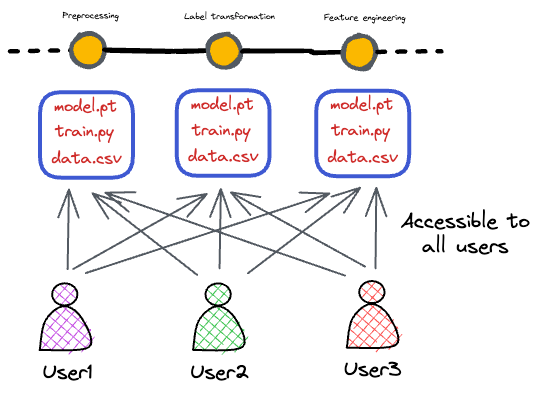

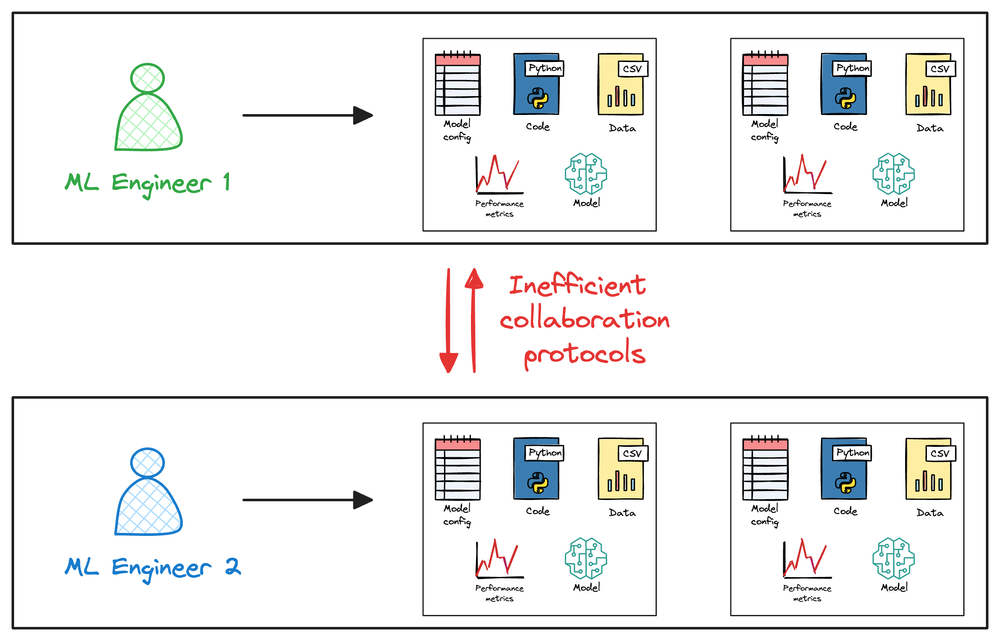

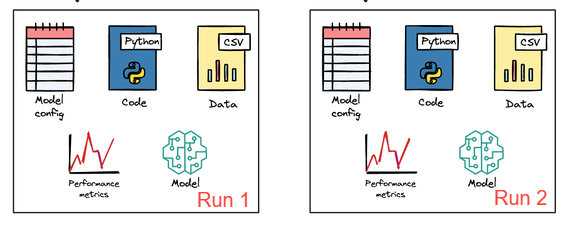

By systematically logging these, you enable what’s called experiment reproducibility and auditing. Teams can validate each other’s results, compare experiments knowing they are on equal footing, and roll back to a previous model or dataset if needed.

Versioning in ML goes hand-in-hand with reproducibility. We need version control not only for code, but for datasets and models:

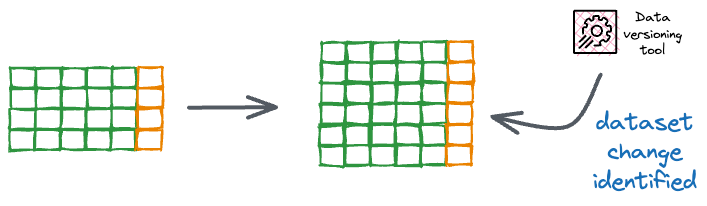

- Dataset versioning: Imagine your dataset gets updated with new samples or improved labels. In the previous chapter, we looked at DVC for this purpose. In this chapter, we will focus on W&B Artifacts, which also allow you to manage datasets as versioned assets, similar to how code is versioned.

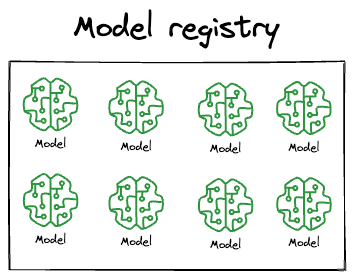

- Model versioning: Likewise, you might retrain a model multiple times. A model registry and versioning mechanism lets you keep track of different model checkpoints (e.g., “Model v1.0 vs v1.1”) along with their evaluation metrics. This ensures you can always roll back to a prior model if a new deployment has issues.

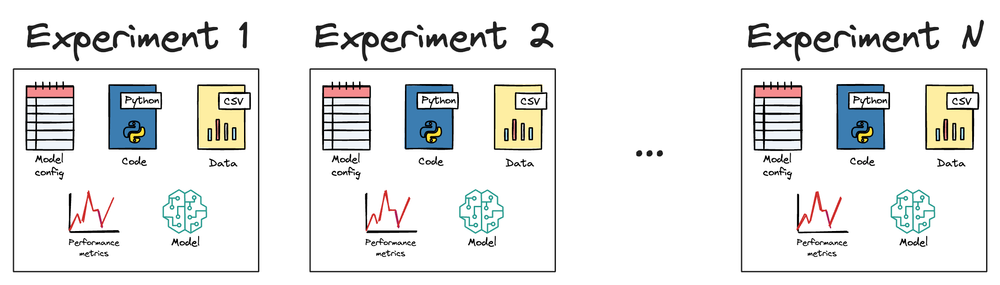

To summarize, reproducibility and versioning in MLOps are about bringing order to the experimentation chaos:

- They ensure that you can repeat any experiment exactly by having recorded everything that went into it.

- They allow you to compare experiments apples-to-apples, because you know the precise differences in code/data/configuration between them.

- They provide traceability: for any model in production, you should be able to trace back to how it was trained, with which data, and by whom. Commonly referred to as data and model lineage.

- They foster collaboration: team members can share results with each other through tracking tools, rather than sending spreadsheet summaries over email.

As highlighted in the previous chapter, without these practices, ML teams risk a lot of pain: models that can’t be reproduced or trusted, lost work because you can’t remember which notebook had that one great result, difficulties in merging contributions from multiple people, and even deployment disasters (deploying the wrong model version, etc.).

Now that we’ve made the case clear, let’s look at W&B services and how they help solve these problems.

Weights and Biases: core philosophy

W&B positions itself as "the developer-first MLOps platform". It is cloud-based and primarily focused on experiment tracking, dataset/model versioning, and collaboration.

The central thesis of W&B is that the highest-leverage activity in machine learning is the cycle of training a model, tracking its performance, comparing it to previous attempts, and deciding what to try next.

W&B is designed to make this loop as fast, insightful, and collaborative as possible.

In our experience, it stands out with its interactive UI, providing dashboards to compare runs, visualize metrics, and even create reports.

While W&B is primarily offered as a hosted service, it also supports self-managed and on-premise deployments. In this chapter, our focus will be solely on using W&B as a hosted service.

W&B integrates with many frameworks (PyTorch, TensorFlow, scikit-learn, etc.) out of the box for easy logging.

With having built a very basic understanding about Weights & Biases and its approach, it also becomes important to understand how it differs from MLflow. Let's quickly take a look at that too.

Comparison: MLflow vs. W&B

| Feature / Aspect | MLflow | Weights & Biases (W&B) |

|---|---|---|

| Nature | Open-source, self-hosted (local or server) | Cloud-first, hosted (free & paid tiers) |

| Experiment Tracking | Logs parameters, metrics, artifacts | Similar but with richer visualizations |

| UI | Basic web UI, simple plots | Advanced dashboard with interactive charts |

| Collaboration | Limited | Strong: team dashboards, reporting |

| Artifacts Storage | Local (default) | Hosted (or external bucket with integration) |

| Ease of Use | Simple Python API, more manual config | User-friendly, lots of integrations (PyTorch, Keras, HuggingFace) |

| Offline Use | Fully possible (local logging + UI) | Offline possible, but main strength is online |

| Best For | Local/enterprise setups, custom infra | Fast setup, collaboration, visualization-heavy workflows |

Hence, to answer, "Why learn W&B if I already know MLflow?"

Both MLflow and W&B are top-notch, but if you or your team don’t want the hassle of setup and maintenance, W&B is the better fit because:

- Managed vs. self-hosted: MLflow usually needs a tracking server; W&B can offer fully managed SaaS.

- Experiment tracking: Both log runs/metrics, but W&B offers richer visualizations, dashboards, and collaboration out of the box.

- Artifacts & registry: W&B integrates artifact storage and model registry seamlessly, while MLflow’s is more basic unless on Databricks.

- Collaboration: W&B is team-oriented with easy sharing and reporting; MLflow is more of a flexible toolkit.

With this gentle introduction and comparison, let’s jump straight into implementations and see exactly how we will use W&B in ML workflows.

Predictive modeling with scikit-learn

Let's build our first complete, reproducible machine learning project using Weights & Biases.

We'll start with the classic, house price prediction problem that's perfect for demonstrating the core W&B workflow without getting lost in model complexity.

This is a foundational regression problem, and a reliable model could power everything from property estimates to investment strategies.

For this walkthrough, we'll use the well-known California housing prices dataset, but the principles apply to any tabular regression task, such as predicting customer lifetime value, forecasting inventory demand, or estimating insurance claim amounts.

Our goal will be to train a RandomForestRegressor model using scikit-learn and, in the process, build a fully versioned and reproducible pipeline with W&B. We will systematically:

- Version our raw dataset using W&B Artifacts.

- Track our training experiment, log hyperparameters and evaluation metrics.

- Leverage W&B's built-in scikit-learn integration.

- Version the final trained model as an artifact.

- Link our best model to the W&B Registry to mark it as a candidate for staging.

Project setup

This project's code is meant to be run using Google Colab. We recommend uploading the .ipynb notebook in Colab, and running it from there.

Download the notebook here: