Model Deployment: Introduction to AWS

MLOps Part 14: Understanding the AWS cloud platform and zooming into EKS.

Recap

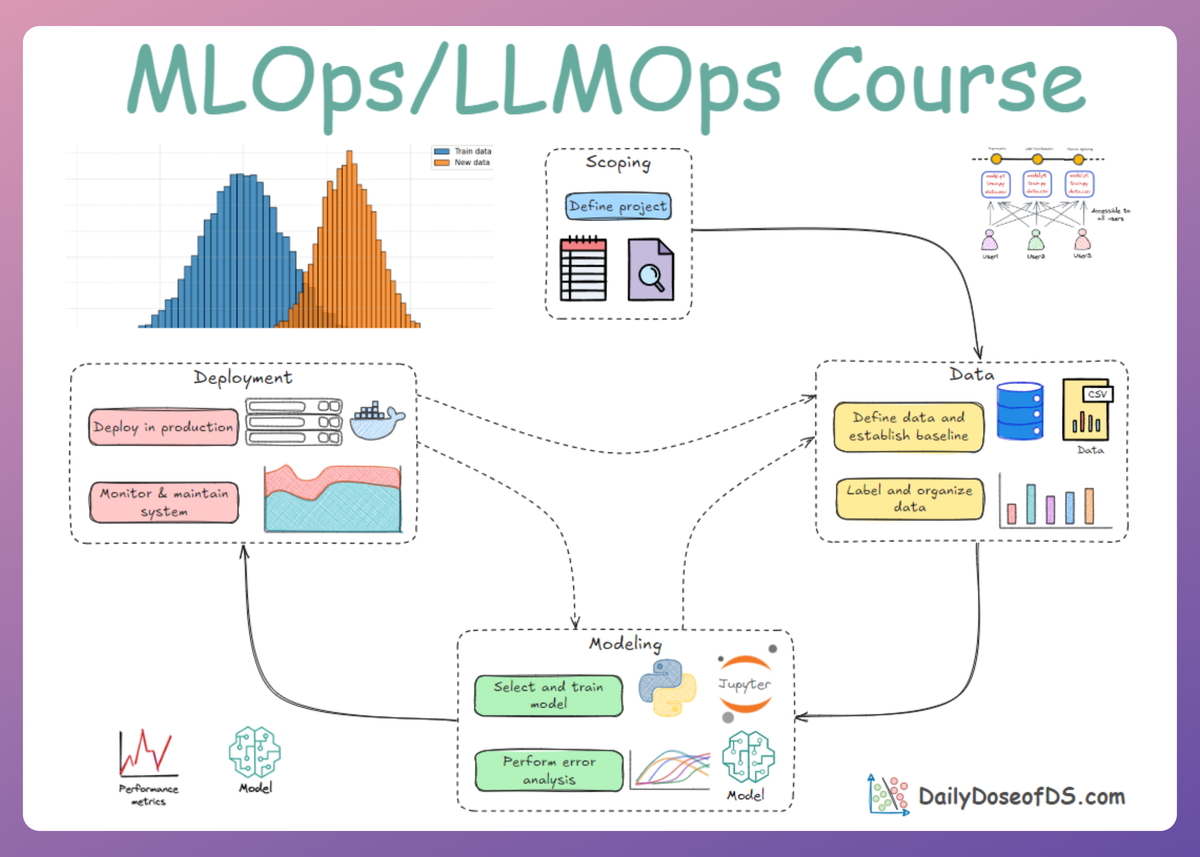

In Part 13 of this MLOps and LLMOps crash course, we explored the fundamentals of cloud computing.

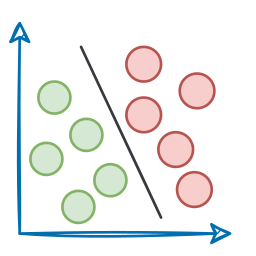

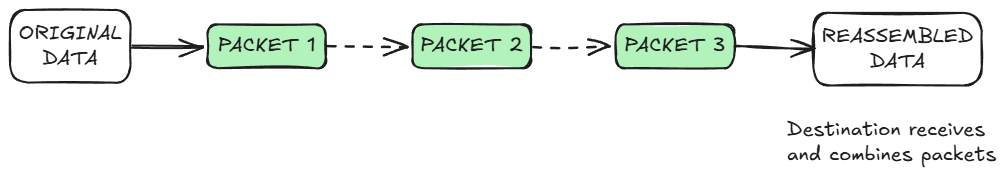

We began with the basics essential for understanding the cloud: IP addresses, domain names, and how data moves across networks as packets.

After that, we introduced cloud computing and its definition, and learned about the essential characteristics of any cloud system as specified by NIST (National Institute of Standards and Technology).

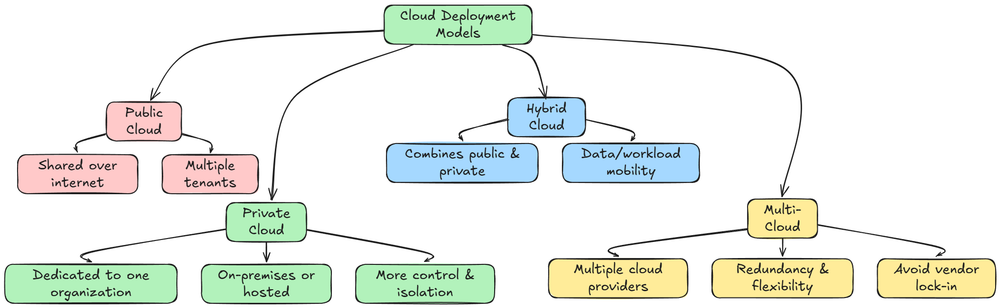

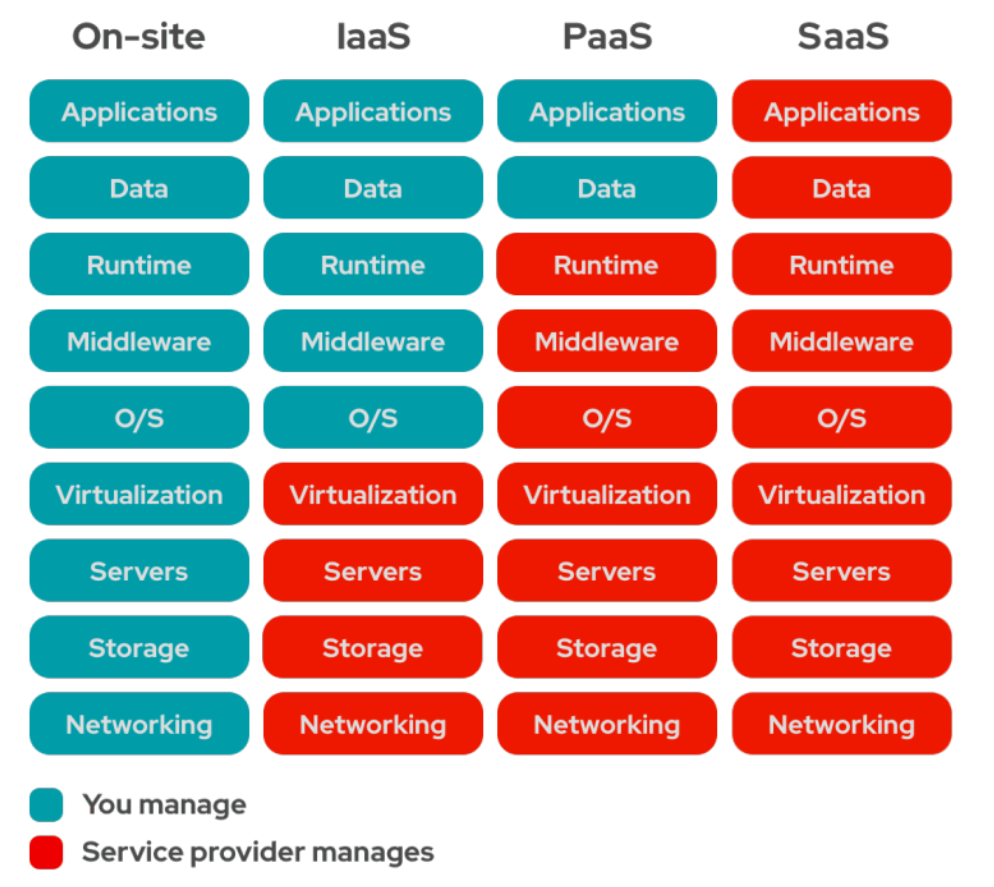

Next, we dove into the different models in cloud computing: deployment models, service models, cost models, and the shared responsibility model.

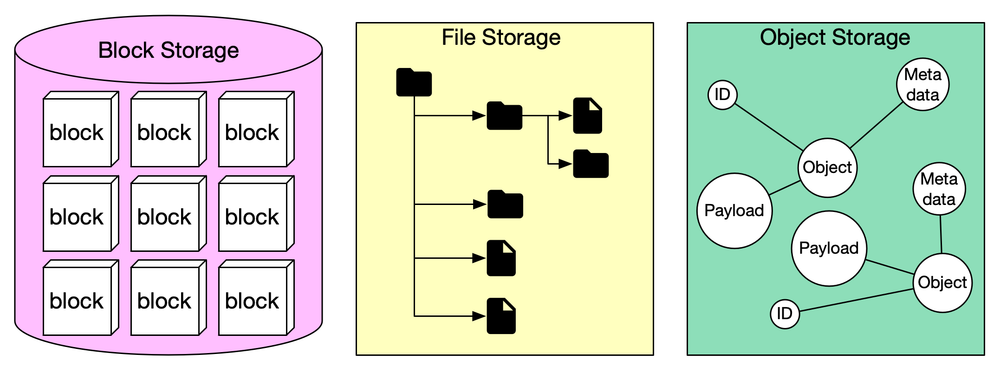

Moving ahead, we explored the cloud infrastructure components: compute, storage, networking, IAM, monitoring, security, and compliance.

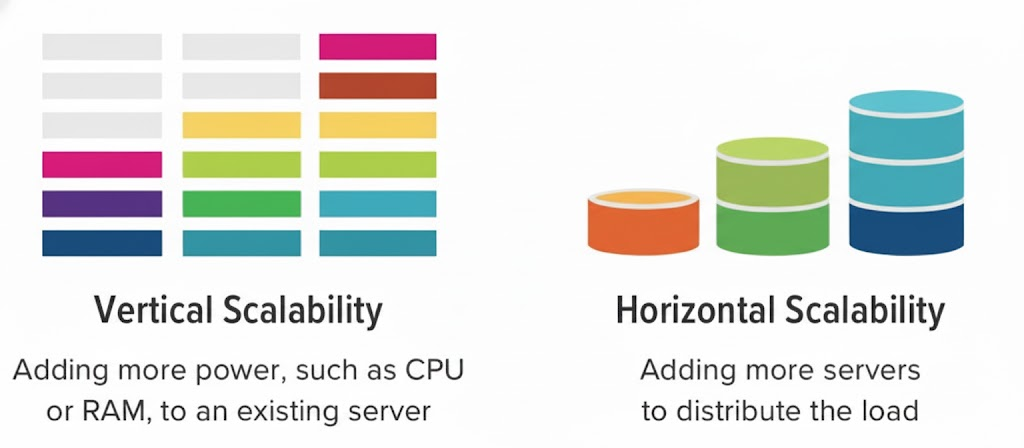

We also explored key reasons for using cloud services for machine learning operations, one of the prime reasons being easy scalability.

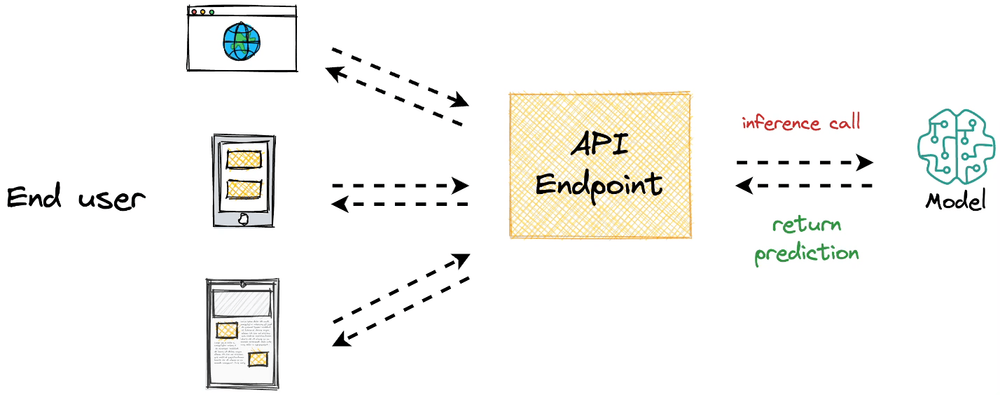

Finally, we learned about patterns for ML systems in the cloud, especially inference-serving mechanisms. With this, we concluded the previous chapter.

If you haven’t explored Part 13 yet, we strongly recommend going through it first, since it lays the groundwork essential for understanding the material we’re about to cover here.


In this chapter, we’ll continue our discussion of the deployment phase, diving deeper into a few more important areas, specifically model deployment on AWS (Amazon Web Services).

We'll cover:

- Understanding AWS and its ecosystem.

- Understanding EKS (Elastic Kubernetes Service).

- Understanding EC2 (Elastic Compute Cloud) and how EC2 integrates with EKS for node provisioning.

- Other related AWS services.

As always, every concept will be explained through clear examples to develop a solid understanding.

Let’s begin!

AWS: Introduction

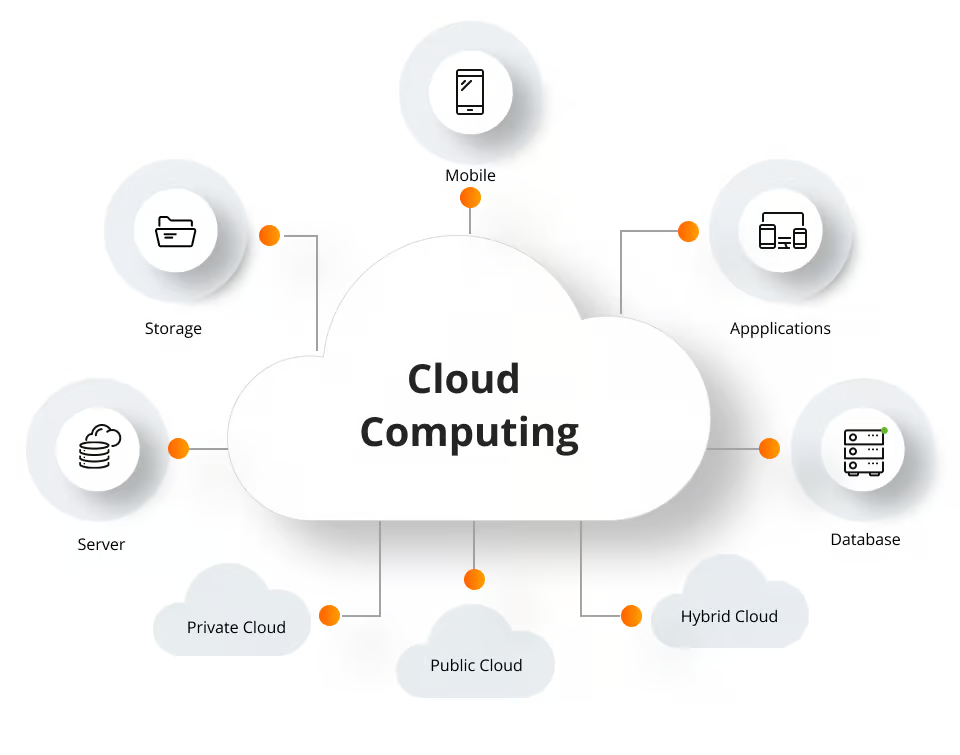

AWS, or Amazon Web Services, is a comprehensive and widely used cloud computing platform that provides IT resources such as computing power, storage, and databases over the internet.

It allows businesses and individuals to run applications without managing physical servers, data centers, or underlying infrastructure.

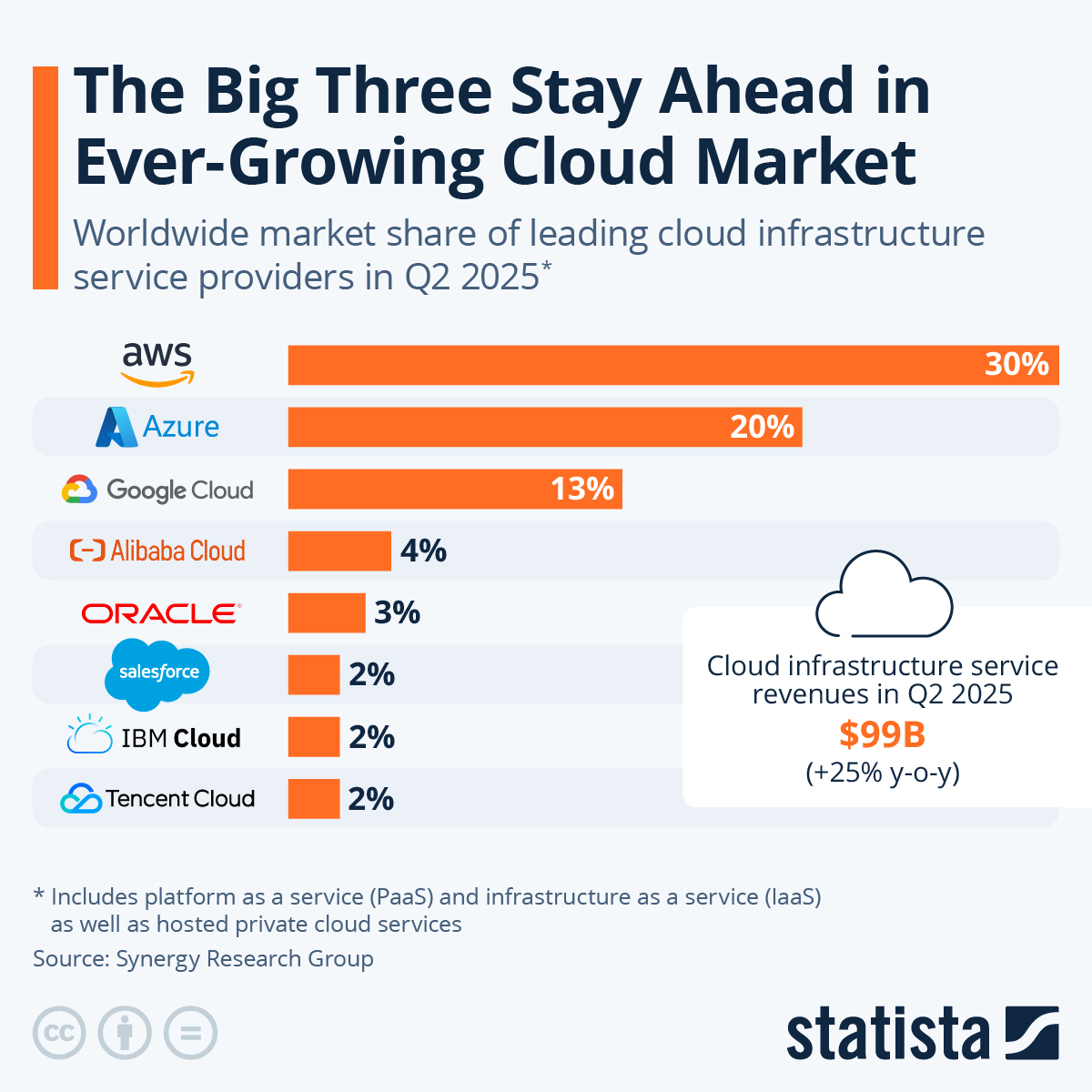

At the time of writing, AWS holds the largest market share in the cloud computing space, around 30–31%, maintaining its leadership despite increasing competition from Microsoft Azure and Google Cloud. It describes itself as “the world’s most comprehensive and broadly adopted cloud, offering over 200 fully-featured services from data centers globally.”

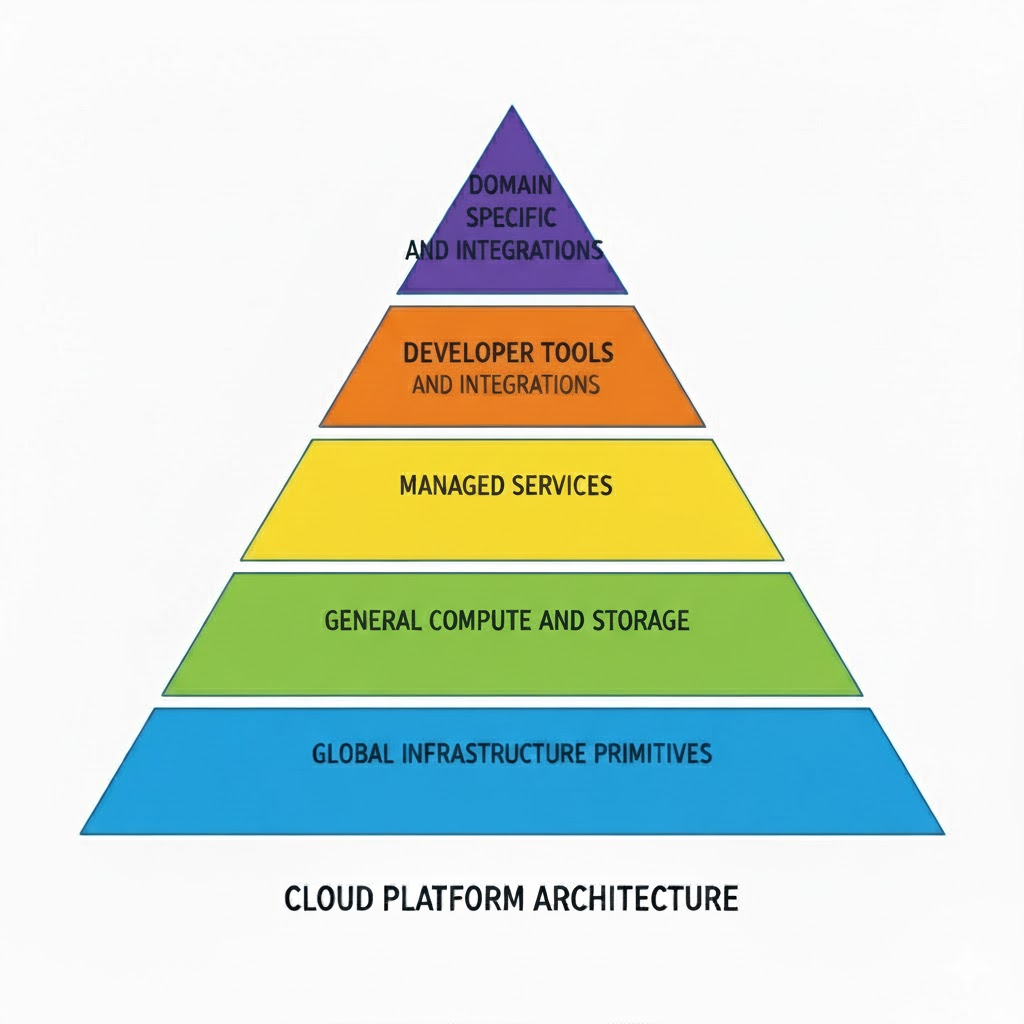

AWS, and other cloud platforms, operate as a layered platform: starting with global infrastructure primitives (regions, availability zones, networks, and identities), moving to general-purpose compute and storage, then to managed services, and finally to domain-specific offerings such as analytics, streaming, observability, machine learning, and security.

This means we can treat AWS either as a bare-metal-like substrate hosting Linux-based workloads or as a sophisticated control plane that enables the composition of managed building blocks with minimal operating system management.

In practice, AWS is far more than a virtual data center. It serves as a versatile platform for building almost any type of digital system, ranging from simple websites and mobile back-ends to complex data pipelines, global IoT deployments, or real-time machine learning systems.

A defining characteristic of AWS, and many other major cloud providers, is its evolution from providing basic infrastructure to delivering managed, integrated, and automated services. This shift from “infrastructure provision” to “platform service” has fundamentally changed how systems are architected, operated, and governed.

The AWS ecosystem: layers and composition

To understand AWS effectively, it helps to view it as a layered platform:

Global infrastructure

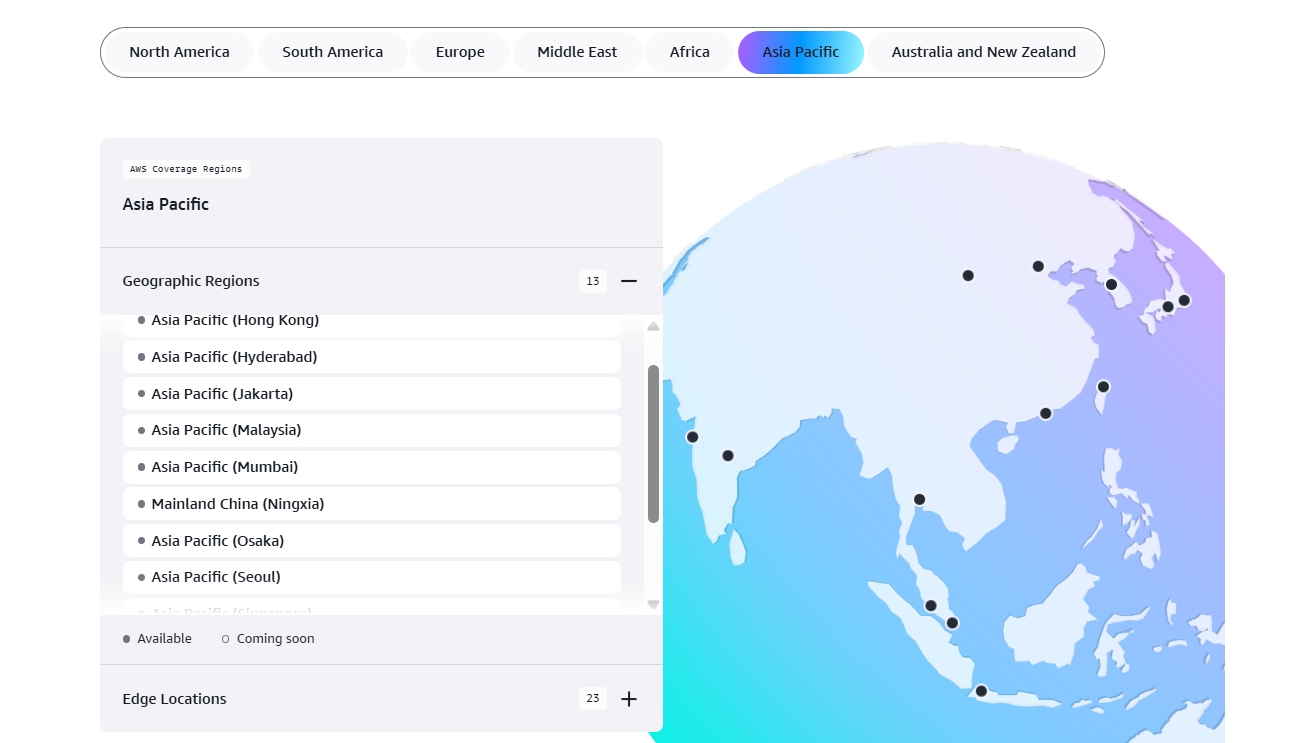

This layer underpins everything. AWS operates globally distributed regions and availability zones built on high-performance networking and storage fabrics.

Core services

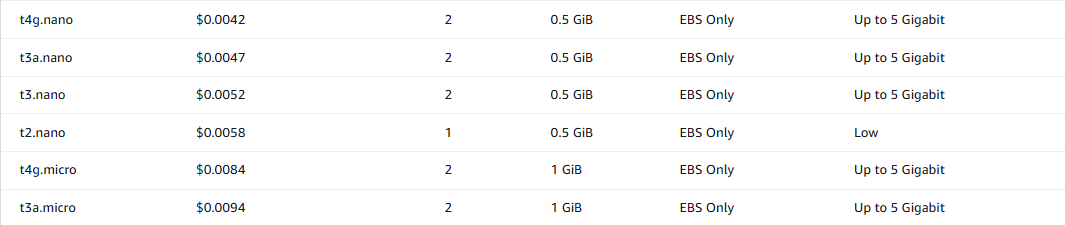

Core services such as EC2 (compute), EBS (block storage), and S3 (object storage) provide the foundational components that mirror the building blocks of a traditional data center.

Managed platform services

On top of this foundation lies a wide range of higher-level managed services, including databases (relational and NoSQL), analytics, serverless computing, containers, managed Kubernetes (EKS), IoT, edge computing, and AI/ML platforms.

Developer tools and automation

AWS includes robust tools for building, deploying, and monitoring applications. These cover CI/CD pipelines, infrastructure management, monitoring, logging, and deployment automation frameworks.

Operational governance, security, and cost management

A strong suite of services supports cloud operations at scale, such as IAM for identity and access control, encryption and auditing tools, cost tracking, and compliance management.

Although it can be viewed as a layer, it effectively spans all the other layers as well.

Partner, marketplace, and community ecosystem

AWS has a large ecosystem of partners, including consulting firms and independent software vendors, as well as a marketplace of third-party integrations and an open-source community around its platform.

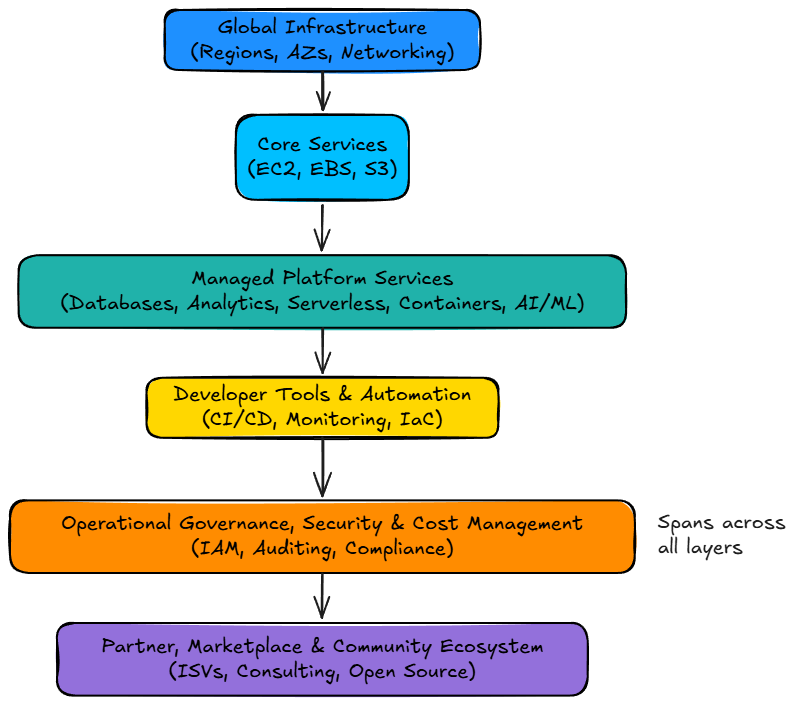

Here's a diagram summarizing the composition of AWS, and many other cloud providers in general:

When designing systems on AWS, understanding these layers and their interactions is critical. Architects must decide whether to use raw compute resources or managed services, containers or serverless functions, and how to balance control with abstraction.

Why this matters

The richness of the AWS ecosystem has fundamentally changed how modern systems are designed. AWS is not merely infrastructure; it shapes the principles and patterns of cloud-native architecture.

By providing modular, composable, and managed services, AWS encourages architects to think in terms of abstractions, automation, and resilience rather than servers and manual configurations.

This transformation enables rapid innovation. Since foundational components such as databases, queues, and streaming systems are available as managed services, teams can focus on building product features rather than managing infrastructure.

AWS and other cloud providers offer global scale and reach. The multi-region architecture allows systems to be deployed worldwide with low latency and high availability.

Applications can serve global users through distributed deployments, load balancing, and data replication, all supported natively by AWS's global backbone network.

Elasticity is another hallmark. The infrastructure is designed to scale automatically based on demand, making scalability a built-in architectural property instead of a later concern.
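To make "scale automatically based on demand" concrete, here is a minimal sketch of proportional, target-tracking scaling. It uses the ratio that the Kubernetes Horizontal Pod Autoscaler documents, desired = ceil(current × currentMetric / targetMetric), which is relevant later when we look at EKS. AWS's own target-tracking policies pursue the same goal, but their internal algorithm is not reproduced here. The function name, the clamping bounds, and the CPU figures are hypothetical.

```python
import math


def desired_capacity(current_capacity: int, current_metric: float, target_metric: float,
                     min_capacity: int = 1, max_capacity: int = 100) -> int:
    """Hypothetical helper: size a fleet so the per-instance metric approaches the target."""
    if current_capacity <= 0 or target_metric <= 0:
        raise ValueError("current_capacity and target_metric must be positive")
    # Scale in proportion to how far the observed metric is from the target,
    # rounding up so the fleet is never deliberately under-provisioned.
    raw = math.ceil(current_capacity * current_metric / target_metric)
    # Clamp to configured bounds, as real autoscalers do with min/max settings.
    return max(min_capacity, min(max_capacity, raw))


# 4 instances averaging 90% CPU against a 60% target -> scale out to 6.
scale_out = desired_capacity(4, 90.0, 60.0)
# 6 instances averaging 20% CPU against the same target -> scale in to 2.
scale_in = desired_capacity(6, 20.0, 60.0)
```

The same rule handles both directions: when load rises, the ratio exceeds 1 and capacity grows; when load falls, it drops below 1 and capacity shrinks, which is what makes scaling a built-in property rather than a manual task.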

Another critical change is the move from servers to abstractions to automation. Traditionally, provisioning and managing servers required manual effort. AWS introduced an API-driven paradigm in which every resource, whether compute, databases, or networking, is programmable. This enables event-driven architectures, auto-scaling, and seamless service integrations without constant human intervention.
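As a small illustration of this programmability, the sketch below queries the global infrastructure itself through EC2's `DescribeRegions` and `DescribeAvailabilityZones` APIs, which boto3 (the AWS SDK for Python) exposes as `describe_regions()` and `describe_availability_zones()`. The helper function names are hypothetical; the client is passed in so the functions work with a real boto3 EC2 client or with any stand-in object that offers the same methods.

```python
from typing import Any, List


def list_region_names(ec2_client: Any) -> List[str]:
    """Return the names of the regions enabled for the account (EC2 DescribeRegions)."""
    response = ec2_client.describe_regions()
    return sorted(region["RegionName"] for region in response["Regions"])


def list_available_zones(ec2_client: Any) -> List[str]:
    """Return the Availability Zones in the client's region whose state is 'available'."""
    response = ec2_client.describe_availability_zones()
    return sorted(
        zone["ZoneName"]
        for zone in response["AvailabilityZones"]
        if zone["State"] == "available"
    )
```

With boto3 installed and AWS credentials configured, a call such as `list_available_zones(boto3.client("ec2", region_name="us-east-1"))` would return that region's zone names (for example `us-east-1a`). The point is that infrastructure becomes data returned by an API call, which is exactly what automation and auto-scaling build on.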

In essence, AWS has transformed architecture into a discipline of composition, automation, and managed abstraction. Its ecosystem doesn’t merely simplify operations; it changes how teams design, collaborate, and scale in the cloud-native era.

Key characteristics and design dimensions

When evaluating AWS from a system-design perspective, several defining characteristics stand out.

First, service breadth is a core strength of AWS. It offers one of the most extensive portfolios in the cloud ecosystem, spanning compute, storage, databases, analytics, AI/ML, IoT, developer tools, and more.

This wide range allows architects to design end-to-end solutions using native services without relying heavily on external tools or third-party integrations.

Next, AWS's global infrastructure provides a robust foundation for distributed, low-latency, and highly available systems. With multiple regions and availability zones across the world, teams can design architectures that are fault-tolerant and support disaster recovery scenarios.

This geographical diversity also enables compliance with data sovereignty requirements and performance optimization for users in different locations.
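Why do multiple availability zones improve fault tolerance? A back-of-the-envelope model helps: if an application is replicated across n zones, any single healthy zone can serve all traffic, and each zone is up with probability a, then the system is down only when every zone is down at once, giving A = 1 - (1 - a)^n. This is a sketch under a strong assumption, namely that zone failures are independent; real outages can be correlated, so treat the result as an upper bound on the benefit. The 99% figure below is hypothetical.

```python
def combined_availability(zone_availability: float, zones: int) -> float:
    """Probability that at least one of `zones` replicas is up, assuming independent failures."""
    if not 0.0 <= zone_availability <= 1.0 or zones < 1:
        raise ValueError("availability must be in [0, 1] and zones must be >= 1")
    # The system is unavailable only if every zone is down at the same time.
    all_down = (1.0 - zone_availability) ** zones
    return 1.0 - all_down


# Hypothetical per-zone availability of 99%.
single_zone = combined_availability(0.99, 1)  # about 0.99
two_zones = combined_availability(0.99, 2)    # about 0.9999
three_zones = combined_availability(0.99, 3)  # about 0.999999
```

Each additional zone multiplies the downtime probability by (1 - a), which is why spreading replicas across zones is such a common resilience pattern.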

Managed operations are another key differentiator. AWS takes on the responsibility of patching, scaling, and backing up managed services, freeing engineering teams from much of the operational overhead. This lets developers focus more on business logic and innovation rather than maintenance and infrastructure management.

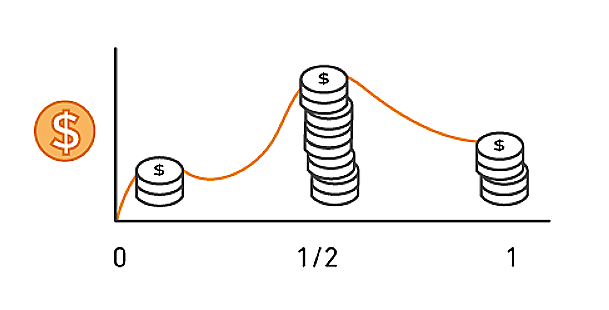

The pay-as-you-go model and elasticity of AWS fundamentally change how systems are designed. Instead of provisioning for peak capacity, teams can build architectures that automatically scale up and down with demand, optimizing both performance and cost.

This flexibility promotes efficiency and supports variable workloads with minimal manual intervention.
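The cost side of this argument is simple arithmetic. The sketch below compares provisioning for the peak hour around the clock against paying only for the instances each hour actually needs. It is idealized and assumes instant scaling, a flat hypothetical price per instance-hour, and no reserved or discounted pricing; the demand series, price, and function names are all hypothetical.

```python
from typing import Sequence


def peak_provisioned_cost(hourly_demand: Sequence[int], price_per_instance_hour: float) -> float:
    """Cost of running enough instances for the busiest hour, in every hour."""
    return max(hourly_demand) * len(hourly_demand) * price_per_instance_hour


def elastic_cost(hourly_demand: Sequence[int], price_per_instance_hour: float) -> float:
    """Cost of running exactly the instances each hour needs (idealized autoscaling)."""
    return sum(hourly_demand) * price_per_instance_hour


# Hypothetical demand: instances needed in each of six consecutive hours.
demand = [2, 2, 3, 10, 4, 2]
price = 0.10  # hypothetical USD per instance-hour

peak_cost = peak_provisioned_cost(demand, price)
scaled_cost = elastic_cost(demand, price)
```

Here peak provisioning pays for 60 instance-hours (10 instances for 6 hours) while elastic scaling pays for 23, because the short spike no longer dictates capacity for the whole period. Real savings are smaller once scale-up lag and minimum capacity are accounted for.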

Additionally, AWS’s ecosystem integration is designed so that services work together smoothly. Data can flow efficiently between compute, storage, and analytics components, simplifying workflows and enabling complex architectures such as data lakes, event-driven systems, and microservices-based applications.

Finally, the continuous evolution of AWS means that architectural flexibility is critical. New services and features are released regularly, allowing architects to refine or re-architect components to leverage improved capabilities. This constant innovation encourages modular, adaptable designs that can evolve alongside AWS’s rapidly changing landscape.

Together, these characteristics shape how architects think about scalability, resilience, cost optimization, and innovation when designing systems on AWS.

Now that we have a clear understanding of how AWS and other cloud providers bring convenience and flexibility to technology development, it’s equally important to consider their broader implications from an organizational perspective. So let’s take a moment to explore this aspect as well.